Schneider Electric leverages Retrieval Augmented LLMs on SageMaker to ensure real-time updates in their ERP systems

This post was co-written with Anthony Medeiros, Manager of Solutions Engineering and Architecture for North America Artificial Intelligence, and Blake Santschi, Business Intelligence Manager, from Schneider Electric. Additional Schneider Electric experts include Jesse Miller, Somik Chowdhury, Shaswat Babhulgaonkar, David Watkins, Mark Carlson and Barbara Sleczkowski.

Enterprise Resource Planning (ERP) systems are used by companies to manage several business functions, such as accounting, sales, or order management, in one system. In particular, they are routinely used to store information related to customer accounts. Different organizations within a company might use different ERP systems, and merging them is a complex technical challenge at scale that requires domain-specific knowledge.

Schneider Electric is a leader in the digital transformation of energy management and industrial automation. To best serve their customers’ needs, Schneider Electric needs to keep track of the links between related customers’ accounts in their ERP systems. As their customer base grows, new customers are added daily, and their account teams have to manually sort through these new customers and link them to the proper parent entity.

The linking decision is based on the most recent information available publicly on the internet or in the media, and might be affected by recent acquisitions, market news, or divisional restructuring. An example of account linking would be to identify the relationship between Amazon and its subsidiary, Whole Foods Market [source].
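Conceptually, the account links form a child-to-parent map. The following minimal sketch (the `resolve_parent` helper and the in-memory dictionary are hypothetical, not Schneider Electric's data model) follows parent links up to the top-level entity and stops if the link data contains a cycle:

```python
from typing import Dict


def resolve_parent(account: str, parent_of: Dict[str, str]) -> str:
    """Follow child -> parent links until reaching an account with no recorded parent."""
    seen = {account}
    current = account
    while current in parent_of:
        current = parent_of[current]
        if current in seen:
            # A cycle means the link data is inconsistent; fail instead of looping forever.
            raise ValueError(f"cycle detected while resolving {account!r}")
        seen.add(current)
    return current


# Hypothetical links; in practice these would come from the ERP records.
links = {
    "Whole Foods Market": "Amazon",
    "One Medical": "Amazon",
}
```

An account with no recorded parent resolves to itself, so `resolve_parent("Amazon", links)` returns `"Amazon"`.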

Schneider Electric is deploying large language models for their capabilities in answering questions across various knowledge-specific domains, but a model's knowledge is limited to the date it was trained. They addressed that challenge by using Retrieval Augmented Generation (RAG) with an open-source large language model available on Amazon SageMaker JumpStart to process large amounts of external knowledge pulled from web search and exhibit corporate or public relationships among ERP records.

In early 2023, when Schneider Electric decided to automate part of its accounts linking process using artificial intelligence (AI), the company partnered with the AWS Machine Learning Solutions Lab (MLSL). With MLSL’s expertise in ML consulting and execution, Schneider Electric was able to develop an AI architecture that would reduce the manual effort in their linking workflows, and deliver faster data access to their downstream analytics teams.

Generative AI

Generative AI and large language models (LLMs) are transforming the way business organizations are able to solve traditionally complex challenges related to natural language processing and understanding. Some of the benefits offered by LLMs include the ability to comprehend large portions of text and answer related questions by producing human-like responses. AWS makes it easy for customers to experiment with and productionize LLM workloads by making many options available via Amazon SageMaker JumpStart, Amazon Bedrock, and Amazon Titan.

External Knowledge Acquisition

LLMs are known for their ability to compress human knowledge and have demonstrated remarkable capabilities in answering questions in various knowledge-specific domains, but their knowledge is limited by the date the model was trained. We address that information cutoff by coupling the LLM with a Google Search API to deliver a powerful Retrieval Augmented LLM (RAG) that addresses Schneider Electric’s challenges. The RAG is able to process large amounts of external knowledge pulled from Google Search and exhibit corporate or public relationships among ERP records.

See the following example:

Question: Who is the parent company of One Medical?

Google query: “One Medical parent company” → information → LLM

Answer: One Medical, a subsidiary of Amazon…

The preceding example (taken from the Schneider Electric customer database) concerns an acquisition that happened in February 2023 and thus would not be caught by the LLM alone due to knowledge cutoffs. Augmenting the LLM with Google search gives it access to the most recent information available online.

Flan-T5 model

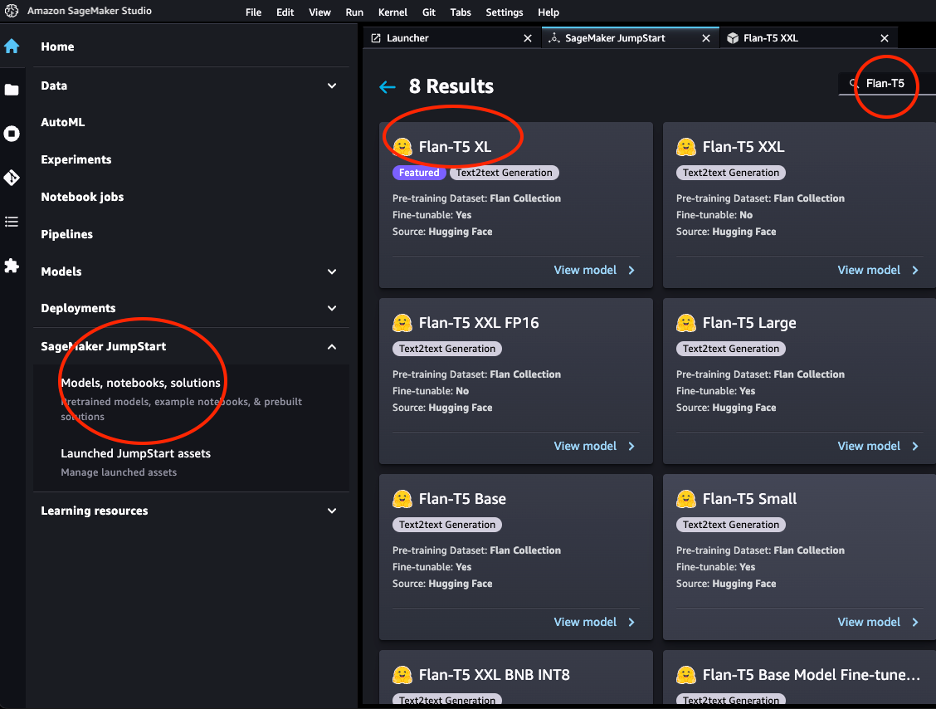

In this project, we used the Flan-T5-XXL model from the Flan-T5 family of models.

The Flan-T5 models are instruction-tuned and therefore capable of performing various zero-shot NLP tasks. Our downstream task did not require accommodating a vast amount of world knowledge; rather, it required strong question answering over a context of texts provided through search results. For this task, the 11B-parameter Flan-T5-XXL model performed well.

JumpStart provides convenient deployment of this model family through Amazon SageMaker Studio and the SageMaker SDK. This includes Flan-T5 Small, Flan-T5 Base, Flan-T5 Large, Flan-T5 XL, and Flan-T5 XXL. Furthermore, JumpStart provides a few versions of Flan-T5 XXL at different levels of quantization. We deployed Flan-T5-XXL to an endpoint for inference using SageMaker JumpStart in Amazon SageMaker Studio.

Retrieval Augmented LLM with LangChain

LangChain is a popular and fast-growing framework for developing applications powered by LLMs. It is based on the concept of chains, which are combinations of different components designed to improve the functionality of LLMs for a given task. For instance, it allows us to customize prompts and integrate LLMs with different tools, such as external search engines or data sources. In our use case, we used the Google Serper component to search the web and deployed the Flan-T5-XXL model available on SageMaker JumpStart in Amazon SageMaker Studio. LangChain performs the overall orchestration and allows the search result pages to be fed into the Flan-T5-XXL instance.

Retrieval Augmented Generation (RAG) consists of two steps:

- Retrieval of relevant text chunks from external sources

- Augmentation of the prompt given to the LLM with the retrieved chunks as context
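To make the two steps concrete, here is a minimal, framework-free sketch. Simple word-overlap scoring stands in for a real retriever (the project retrieved web search results rather than ranking stored chunks), and the prompt wording and helper names are assumptions:

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase word set used for a crude relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, chunks: List[str], k: int = 2) -> List[str]:
    """Step 1 (retrieval): return the k chunks sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:k]


def augment(question: str, context: List[str]) -> str:
    """Step 2 (augmentation): place the retrieved chunks in the prompt as context."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer the question using the context.\n"
        f"Context:\n{joined}\n"
        f"Question: {question}\nAnswer:"
    )
```

The string returned by `augment` is what would be sent to the LLM; a production retriever would typically use a search engine or embedding similarity instead of word overlap.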

For Schneider Electric’s use case, the RAG proceeds as follows:

- The given company name is combined with a question like “Who is the parent company of X?” (where X is the given company) and passed as a Google query using the Serper API.

- The extracted information is combined with the prompt and original question and passed to the LLM for an answer.
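A minimal sketch of these two steps, assuming a Serper-style JSON response with optional `answerBox`, `knowledgeGraph`, and `organic` fields (check the field names against the Serper documentation). The `build_question`, `extract_context`, and `build_prompt` helpers are hypothetical:

```python
from typing import Any, Dict, List

QUESTION_TEMPLATE = "Who is the parent company of {company}?"


def build_question(company: str) -> str:
    """Step 1: combine the company name with the question template."""
    return QUESTION_TEMPLATE.format(company=company)


def extract_context(results: Dict[str, Any], max_snippets: int = 3) -> List[str]:
    """Collect the most useful text fields from an (assumed) Serper-style response."""
    context = []
    answer_box = results.get("answerBox", {})
    if "answer" in answer_box:
        context.append(answer_box["answer"])
    graph = results.get("knowledgeGraph", {})
    if "description" in graph:
        context.append(graph["description"])
    for item in results.get("organic", [])[:max_snippets]:
        if "snippet" in item:
            context.append(item["snippet"])
    return context


def build_prompt(company: str, context: List[str]) -> str:
    """Step 2: combine the extracted text with the original question for the LLM."""
    return "\n".join(context) + "\n\n" + build_question(company)
```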

The following diagram illustrates this process.

Use the following code to create an endpoint:
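A minimal sketch, assuming the SageMaker Python SDK's `JumpStartModel` class, the JumpStart model ID `huggingface-text2text-flan-t5-xxl`, and an `ml.g5.12xlarge` instance (verify both for your account and Region). The request and response schemas (`text_inputs`, `generated_texts`) are also assumptions based on earlier JumpStart Flan-T5 containers; newer containers may expect a different format:

```python
import json

# Assumed JumpStart model ID; confirm it in the JumpStart catalog for your Region.
MODEL_ID = "huggingface-text2text-flan-t5-xxl"
# Assumed instance type; it needs enough GPU memory for an 11B-parameter model.
INSTANCE_TYPE = "ml.g5.12xlarge"


def deploy_endpoint(model_id: str = MODEL_ID, instance_type: str = INSTANCE_TYPE):
    """Deploy the JumpStart model to a real-time endpoint and return its Predictor."""
    # Imported here so the payload helpers below work without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id)
    return model.deploy(initial_instance_count=1, instance_type=instance_type)


def build_payload(prompt: str, max_length: int = 50) -> bytes:
    """Serialize a prompt using the assumed request schema."""
    return json.dumps({"text_inputs": prompt, "max_length": max_length}).encode("utf-8")


def parse_response(body: bytes) -> str:
    """Extract the first generation from the assumed response schema."""
    return json.loads(body)["generated_texts"][0]


def invoke(endpoint_name: str, prompt: str) -> str:
    """Send one prompt to a deployed endpoint through the SageMaker runtime API."""
    import boto3

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=build_payload(prompt),
    )
    return parse_response(response["Body"].read())
```

After `predictor = deploy_endpoint()`, the endpoint name for `invoke` is available as `predictor.endpoint_name`. Remember to delete the endpoint when it is no longer needed, because it is billed while running.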

Instantiate the search tool:
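A minimal sketch that calls Serper directly with the standard library, assuming the endpoint `https://google.serper.dev/search`, an `X-API-KEY` header, a JSON body with a `q` field, and a key stored in the `SERPER_API_KEY` environment variable (check these against the Serper documentation):

```python
import json
import os
import urllib.request

# Assumed Serper search endpoint.
SERPER_URL = "https://google.serper.dev/search"


def make_serper_request(query: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for a Google search via Serper."""
    body = json.dumps({"q": query}).encode("utf-8")
    return urllib.request.Request(
        SERPER_URL,
        data=body,
        headers={"X-API-KEY": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def search(query: str) -> dict:
    """Send the request and return the decoded JSON response."""
    request = make_serper_request(query, os.environ["SERPER_API_KEY"])
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)
```

If you use LangChain instead, its `GoogleSerperAPIWrapper` utility wraps the same service and also reads the key from `SERPER_API_KEY`.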

In the following code, we chain together the retrieval and augmentation components:
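The chain can be sketched without any framework as a composition of two callables. In this hypothetical sketch, `search_fn` stands for the search tool (returning text snippets) and `llm_fn` for a call to the Flan-T5-XXL endpoint; the prompt template is an assumption. LangChain provides the same orchestration through its prompt-template and chain abstractions:

```python
from typing import Callable, List

# Assumed prompt wording; the context slot receives the search snippets.
PROMPT_TEMPLATE = (
    "Answer based on the context.\n"
    "Context: {context}\n"
    "Question: Who is the parent company of {company}?"
)


def make_parent_chain(
    search_fn: Callable[[str], List[str]],
    llm_fn: Callable[[str], str],
) -> Callable[[str], str]:
    """Return a function that runs retrieval, prompt augmentation, and generation."""

    def chain(company: str) -> str:
        snippets = search_fn(f"{company} parent company")  # retrieval
        prompt = PROMPT_TEMPLATE.format(context=" ".join(snippets), company=company)  # augmentation
        return llm_fn(prompt).strip()  # generation

    return chain
```

Passing the search and model calls in as arguments keeps the chain easy to test with stub functions before wiring it to the live endpoint.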

Prompt Engineering

The combination of the context and the question is called the prompt. We noticed that the blanket prompt we used (variations on asking for the parent company) performed well for most public sectors (domains) but didn't generalize well to education or healthcare, since the notion of a parent company is not meaningful there. For education, we used “X” while for healthcare we used “Y”.
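One way to implement domain-specific prompt selection is a lookup table of templates with a default fallback. In this minimal sketch, the education and healthcare wording is hypothetical and illustrative only; it is not the prompts used in the project:

```python
from typing import Dict

# Default question, matching the parent-company wording used for most domains.
DEFAULT_PROMPT = "Who is the parent company of {account}?"

# Hypothetical overrides for domains where "parent company" is not meaningful.
DOMAIN_PROMPTS: Dict[str, str] = {
    "education": "Which organization operates {account}?",
    "healthcare": "Which health system does {account} belong to?",
}


def select_prompt(domain: str, account: str) -> str:
    """Pick the template for the account's domain, falling back to the default."""
    template = DOMAIN_PROMPTS.get(domain.strip().lower(), DEFAULT_PROMPT)
    return template.format(account=account)
```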

To enable this domain-specific prompt selection, we also had to identify the domain a given account belongs to. For this, we also used RAG: as a first step, we asked the multiple-choice question “What is the domain of {account}?”, and as a second step, based on the answer, we asked for the parent of the account using the relevant prompt. See the following code:
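A minimal sketch of the two-step flow. The list of domains, the multiple-choice format, the answer parsing, and the non-default templates are assumptions for illustration; `ask_llm` stands for any function that sends a prompt (with retrieved search context) to the model and returns its text:

```python
from typing import Callable, Dict

# Hypothetical domain options offered to the model.
DOMAINS = ["business", "education", "healthcare", "government"]

# Hypothetical templates; only the default mirrors the question quoted in the text.
PARENT_PROMPTS: Dict[str, str] = {
    "education": "Which organization operates {account}?",
    "healthcare": "Which health system does {account} belong to?",
}
DEFAULT_PROMPT = "Who is the parent company of {account}?"


def domain_question(account: str) -> str:
    """Step 1 prompt: a multiple-choice question about the account's domain."""
    options = "\n".join(f"({chr(ord('A') + i)}) {d}" for i, d in enumerate(DOMAINS))
    return f"What is the domain of {account}?\nOptions:\n{options}"


def parse_domain(answer: str) -> str:
    """Map the model's answer (a domain name or an option letter) to a known domain."""
    text = answer.strip().lower()
    for i, domain in enumerate(DOMAINS):
        letter = f"({chr(ord('a') + i)})"
        if domain in text or text.startswith(letter):
            return domain
    return "business"  # fall back to the default parent-company prompt


def find_parent(account: str, ask_llm: Callable[[str], str]) -> str:
    """Step 2: ask for the parent using the prompt that matches the detected domain."""
    domain = parse_domain(ask_llm(domain_question(account)))
    template = PARENT_PROMPTS.get(domain, DEFAULT_PROMPT)
    return ask_llm(template.format(account=account))
```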

The sector-specific prompts boosted overall accuracy from 55% to 71%. Overall, the time and effort invested in developing effective prompts appear to significantly improve the quality of the LLM's responses.
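Accuracy here is the share of accounts whose predicted parent matches a reference label. The matching criterion used in the project is not stated; this minimal sketch assumes exact matching after case and whitespace normalization, and the `accuracy` helper is hypothetical:

```python
from typing import Dict


def normalize(name: str) -> str:
    """Case-fold and collapse whitespace so formatting differences don't count as errors."""
    return " ".join(name.lower().split())


def accuracy(predictions: Dict[str, str], labels: Dict[str, str]) -> float:
    """Fraction of labeled accounts whose predicted parent matches the label."""
    if not labels:
        raise ValueError("no labeled accounts")
    correct = sum(
        normalize(predictions.get(account, "")) == normalize(parent)
        for account, parent in labels.items()
    )
    return correct / len(labels)
```

Accounts without a prediction count as errors, so the score reflects coverage as well as correctness.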

RAG with tabular data (SEC 10-K)

SEC 10-K filings are another reliable source of information on subsidiaries and subdivisions; they are filed annually by publicly traded companies. These filings are available directly on SEC EDGAR or through the CorpWatch API.

We assume the information is given in tabular format. The following pseudo CSV dataset mimics the original format of the SEC 10-K dataset. Multiple CSV data sources can be merged into a combined pandas DataFrame:

# A pseudo dataset similar by schema to the CorpWatch API dataset

df.head()
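A minimal sketch of combining several CSV sources with pandas and looking up relationships in either direction. The column names (`parent_name`, `subsidiary_name`), the rows, and the helper functions are hypothetical and only loosely modeled on a CorpWatch-style schema; in-memory strings stand in for the CSV files:

```python
import io

import pandas as pd

# Two hypothetical CSV sources sharing one schema; in practice these would be files.
SOURCE_A = """parent_name,subsidiary_name
Amazon,Whole Foods Market
Amazon,One Medical
"""
SOURCE_B = """parent_name,subsidiary_name
Example Holdings,Example Subsidiary
"""

# Read each source and stack the rows into one combined DataFrame.
frames = [pd.read_csv(io.StringIO(text)) for text in (SOURCE_A, SOURCE_B)]
df = pd.concat(frames, ignore_index=True)
print(df.head())


def subsidiaries_of(parent: str, data: pd.DataFrame) -> list:
    """Return the subsidiary names recorded for a parent (case-insensitive)."""
    mask = data["parent_name"].str.casefold() == parent.casefold()
    return data.loc[mask, "subsidiary_name"].tolist()


def parents_of(subsidiary: str, data: pd.DataFrame) -> list:
    """Return the distinct parents recorded for a subsidiary (the account-linking direction)."""
    mask = data["subsidiary_name"].str.casefold() == subsidiary.casefold()
    return data.loc[mask, "parent_name"].unique().tolist()
```

`pd.concat` stacks sources that share a schema; sources with different column names would need to be renamed or joined (for example with `DataFrame.merge`) first.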

About the Authors

Anthony Medeiros is a Manager of Solutions Engineering and Architecture at Schneider Electric. He specializes in delivering high-value AI/ML initiatives to many business functions within North America. With 17 years of experience at Schneider Electric, he brings a wealth of industry knowledge and technical expertise to the team.

Blake Santschi is a Business Intelligence Manager at Schneider Electric, leading an analytics team focused on supporting the Sales organization through data-driven insights.

Joshua Levy is Senior Applied Science Manager in the Amazon Machine Learning Solutions Lab, where he helps customers design and build AI/ML solutions to solve key business problems.

Kosta Belz is a Senior Applied Scientist with AWS MLSL with a focus on Generative AI and document processing. He is passionate about building applications using Knowledge Graphs and NLP. He has around 10 years of experience in building Data & AI solutions to create value for customers and enterprises.

Aude Genevay is an Applied Scientist in the Amazon GenAI Incubator, where she helps customers solve key business problems through ML and AI. She previously was a researcher in theoretical ML and enjoys applying her knowledge to deliver state-of-the-art solutions to customers.

Md Sirajus Salekin is an Applied Scientist at the AWS Machine Learning Solutions Lab. He helps AWS customers accelerate their business by building AI/ML solutions. His research interests are multimodal machine learning, generative AI, and ML applications in healthcare.

Zichen Wang, PhD, is a Senior Applied Scientist in AWS. With several years of research experience in developing ML and statistical methods using biological and medical data, he works with customers across various verticals to solve their ML problems.

Anton Gridin is a Principal Solutions Architect supporting Global Industrial Accounts, based out of New York City. He has more than 15 years of experience building secure applications and leading engineering teams.