Use anomaly detection with AWS Glue to improve data quality (preview)

We are launching a preview of a new AWS Glue Data Quality feature that will help to improve your data quality by using machine learning to detect statistical anomalies and unusual patterns. You get deep insights into data quality issues, data quality scores, and recommendations for rules that you can use to continuously monitor for anomalies, all without having to write any code.

Data quality counts

AWS customers already build data integration pipelines to extract and transform data. They set up data quality rules to ensure that the resulting data is of high quality and can be used to make accurate business decisions. In many cases, these rules assess the data based on criteria that were chosen and locked in at a specific point in time, reflecting the current state of the business. However, as the business environment changes and the properties of the data shift, the rules are not always reviewed and updated.

For example, a rule could be set to verify that daily sales are at least ten thousand dollars for an early-stage business. As the business succeeds and grows, the rule should be checked and updated from time to time, but in practice this rarely happens. As a result, if there’s an unexpected drop in sales, the outdated rule does not activate, and no one is happy.
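To make the problem concrete, here is a minimal sketch in plain Python (not Glue code). The function name and the sales figures are made up for illustration; only the ten-thousand-dollar threshold comes from the example above.

```python
# Hypothetical illustration of a static data quality rule with a fixed threshold.
STATIC_MIN_DAILY_SALES = 10_000  # dollars, chosen when the business was young


def static_rule_passes(daily_sales: float) -> bool:
    """Return True when daily sales meet the fixed minimum."""
    return daily_sales >= STATIC_MIN_DAILY_SALES


# Made-up figures: assume the business now typically sells about $500,000 a day.
typical_day = 500_000
bad_day = 60_000  # an 88% drop, yet still above the old threshold

print(static_rule_passes(typical_day))  # True
print(static_rule_passes(bad_day))      # True: the drop goes unnoticed
```

The rule still does what it was written to do. It has simply stopped describing what "normal" looks like for the business.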

Anomaly detection in action

To detect unusual patterns and to gain deeper insights into data, organizations try to create their own adaptive systems or turn to costly commercial solutions that require specific technical skills and specialized business knowledge.

To address this widespread challenge, Glue Data Quality now makes use of machine learning (ML).

Once activated, this cool new addition to Glue Data Quality gathers statistics as fresh data arrives, using ML and dynamic thresholds to learn from past patterns while looking for outliers and unusual data patterns. This process produces observations and also visualizes trends so that you can quickly gain a better understanding of each anomaly.
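The general idea of learning from past statistics can be sketched in a few lines of Python. This is not the algorithm that Glue Data Quality uses, which is not described here. It is a simple stand-in that flags a value when it sits far from the mean of earlier runs, and the function name, the `z_limit` parameter, and the row counts are all assumptions made for illustration.

```python
from statistics import mean, stdev


def is_outlier(history: list[float], new_value: float, z_limit: float = 3.0) -> bool:
    """Flag new_value when it is more than z_limit standard deviations
    away from the mean of the values seen in earlier runs."""
    if len(history) < 2:
        return False  # not enough past runs to learn from
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return new_value != mu  # every past run was identical
    return abs(new_value - mu) > z_limit * sigma


# Made-up row counts gathered from previous runs.
row_counts = [1000, 1020, 990, 1010, 1005, 995, 1015]

print(is_outlier(row_counts, 1008))  # False: within the usual range
print(is_outlier(row_counts, 400))   # True: far below anything seen before
```

Because the limits are recomputed from the history on every call, they move as the data moves, which is what a fixed threshold cannot do.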

You also get rule recommendations as part of the observations, and you can add them to your data pipelines progressively. In the past, you could only write static rules. Now, you can write Dynamic rules that have auto-adjusting thresholds and AnomalyDetection rules that learn recurring patterns and spot deviations. When you use rules as part of data pipelines, they can enforce an action such as stopping the data flow so that a data engineer can review, fix, and resume.

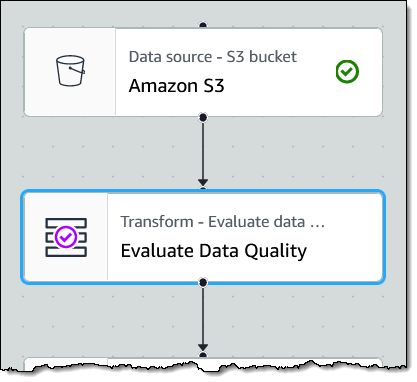

To use anomaly detection, I add an Evaluate Data Quality node to my job:

I select the node and click Add analyzer to choose a statistic and the columns:

Glue Data Quality learns from the data to recognize patterns and then generates observations that will be shown in the Data quality tab:

And a visualization:

After I review the observations I add new rules. The first one sets adaptive thresholds that check that the row count is between the smallest of the last 10 runs and the largest of the last 20 runs. The second one looks for unusual patterns, for example RowCount being abnormally high on weekends:
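The logic of the first rule can be sketched in plain Python. This is an illustration of the threshold arithmetic only, not the rule syntax that Glue Data Quality uses. The function name and the history values are hypothetical, and treating both bounds as inclusive is an assumption.

```python
def row_count_in_adaptive_range(history: list[int], row_count: int) -> bool:
    """Check row_count against the smallest row count of the last 10 runs
    and the largest row count of the last 20 runs."""
    if not history:
        return True  # nothing to compare against yet
    lower = min(history[-10:])  # smallest of the last 10 runs
    upper = max(history[-20:])  # largest of the last 20 runs
    # Assumption: both bounds are treated as inclusive in this sketch.
    return lower <= row_count <= upper


# Made-up history of 25 runs with row counts 100, 101, ..., 124.
history = list(range(100, 125))

print(row_count_in_adaptive_range(history, 120))  # True: between 115 and 124
print(row_count_in_adaptive_range(history, 110))  # False: below the last-10 minimum
print(row_count_in_adaptive_range(history, 130))  # False: above the last-20 maximum
```

As new runs are appended to the history, both bounds shift with them, so the rule does not need to be revisited by hand as volumes grow.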

Join the preview

This new capability is available in preview in the following AWS Regions: US East (Ohio), US East (N. Virginia), US West (Oregon), Asia Pacific (Tokyo), and Europe (Ireland). To learn more, read Data Quality Anomaly Detection.

Stay tuned for a detailed blog post when this feature launches!

Learn more

Data Quality Anomaly Detection

— Jeff;

Author: Jeff Barr