Databricks DBRX is now available in Amazon SageMaker JumpStart

Today, we are excited to announce that the DBRX model, an open, general-purpose large language model (LLM) developed by Databricks, is available for customers through Amazon SageMaker JumpStart to deploy with one click for running inference. The DBRX LLM employs a fine-grained mixture-of-experts (MoE) architecture, was pre-trained on 12 trillion tokens of carefully curated data, and has a maximum context length of 32,000 tokens.

You can try out this model with SageMaker JumpStart, a machine learning (ML) hub that provides access to algorithms and models so you can quickly get started with ML. In this post, we walk through how to discover and deploy the DBRX model.

What is the DBRX model

DBRX is a sophisticated decoder-only LLM built on transformer architecture. It employs a fine-grained MoE architecture, incorporating 132 billion total parameters, with 36 billion of these parameters being active for any given input.

The model was pre-trained on a dataset of 12 trillion tokens of text and code. In contrast to other open MoE models like Mixtral and Grok-1, DBRX takes a fine-grained approach, using a larger number of smaller experts for optimized performance. Mixtral and Grok-1 each have 8 experts and choose 2 per input, whereas DBRX has 16 experts and chooses 4.
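To see why a larger pool of smaller experts matters, you can count how many distinct expert subsets a router can pick. The following is a minimal sketch of that arithmetic only, not of the routing itself. The 16-choose-4 figures come from this post; the 8-choose-2 comparison is the configuration reported for Mixtral and Grok-1.

```python
from math import comb

# DBRX: 16 experts, 4 selected per input (figures from this post).
dbrx_combinations = comb(16, 4)

# Comparison point: 8 experts, 2 selected per input
# (the configuration reported for Mixtral and Grok-1).
coarse_combinations = comb(8, 2)

print(dbrx_combinations)                         # 1820
print(coarse_combinations)                       # 28
print(dbrx_combinations // coarse_combinations)  # 65
```

Under these assumptions, the fine-grained design gives the router 65 times as many possible expert combinations to choose from.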

The model is made available under the Databricks Open Model License. Review the license terms before use, because they set conditions on how the model and its outputs can be used.

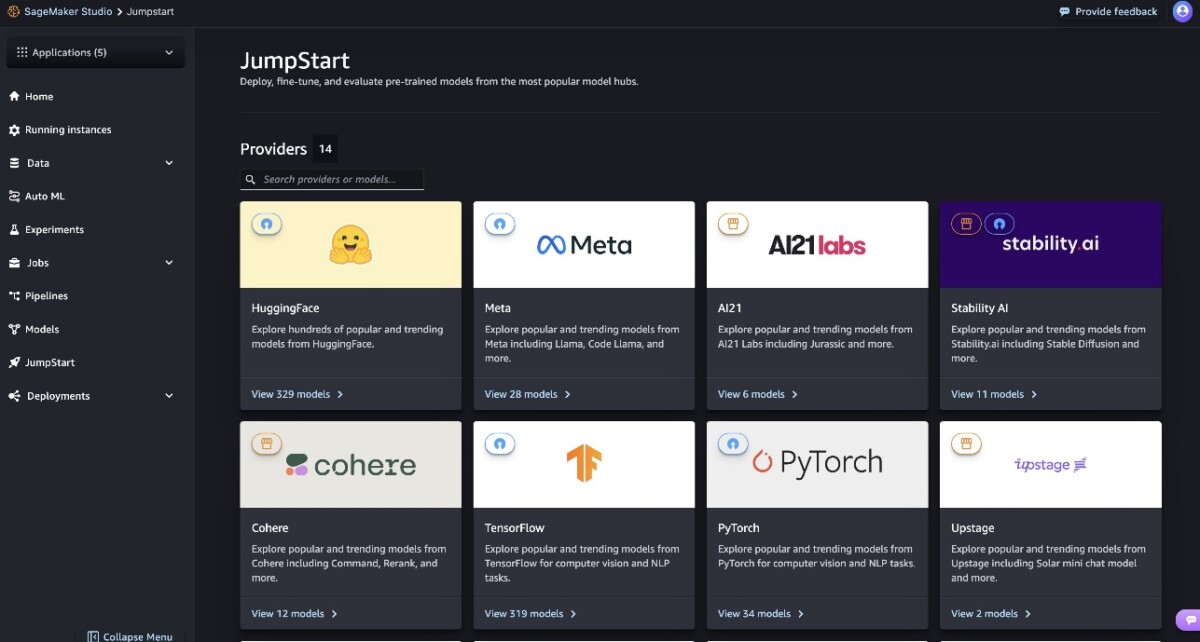

What is SageMaker JumpStart

SageMaker JumpStart is a fully managed platform that offers state-of-the-art foundation models for various use cases such as content writing, code generation, question answering, copywriting, summarization, classification, and information retrieval. It provides a collection of pre-trained models that you can deploy quickly and with ease, accelerating the development and deployment of ML applications. One of the key components of SageMaker JumpStart is the Model Hub, which offers a vast catalog of pre-trained models, such as DBRX, for a variety of tasks.

You can now discover and deploy DBRX models with a few clicks in Amazon SageMaker Studio or programmatically through the SageMaker Python SDK, enabling you to derive model performance and MLOps controls with Amazon SageMaker features such as Amazon SageMaker Pipelines, Amazon SageMaker Debugger, or container logs. The model is deployed in an AWS secure environment and under your VPC controls, helping provide data security.

Discover models in SageMaker JumpStart

You can access the DBRX model through SageMaker JumpStart in the SageMaker Studio UI and the SageMaker Python SDK. In this section, we go over how to discover the models in SageMaker Studio.

SageMaker Studio is an integrated development environment (IDE) that provides a single web-based visual interface where you can access purpose-built tools to perform all ML development steps, from preparing data to building, training, and deploying your ML models. For more details on how to get started and set up SageMaker Studio, refer to Amazon SageMaker Studio.

In SageMaker Studio, you can access SageMaker JumpStart by choosing JumpStart in the navigation pane.

From the SageMaker JumpStart landing page, you can search for “DBRX” in the search box. The search results will list DBRX Instruct and DBRX Base.

You can choose the model card to view details about the model such as license, data used to train, and how to use the model. You will also find the Deploy button to deploy the model and create an endpoint.

Deploy the model in SageMaker JumpStart

Deployment starts when you choose the Deploy button. After deployment finishes, you will see that an endpoint is created. You can test the endpoint by passing a sample inference request payload or by selecting the testing option using the SDK. When you select the option to use the SDK, you will see example code that you can use in the notebook editor of your choice in SageMaker Studio.

DBRX Base

To deploy using the SDK, we start by selecting the DBRX Base model, specified by the model_id with value huggingface-llm-dbrx-base. You can deploy any of the selected models on SageMaker with the following code. Similarly, you can deploy DBRX Instruct using its own model ID.
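A deployment with the SageMaker Python SDK might look like the following minimal sketch. It assumes the `sagemaker` package is installed and that your AWS credentials and execution role are already configured. The helper names `build_model_kwargs` and `deploy_dbrx` are ours, introduced for illustration; `JumpStartModel` and `deploy(accept_eula=...)` are the SDK calls. Deploying creates a billable endpoint, so the deploy call itself is left commented out.

```python
def build_model_kwargs(model_id, instance_type=None):
    """Collect JumpStartModel arguments, omitting unset optional values."""
    kwargs = {"model_id": model_id}
    if instance_type is not None:
        # Overrides the default instance type chosen by JumpStart.
        kwargs["instance_type"] = instance_type
    return kwargs


def deploy_dbrx(model_id="huggingface-llm-dbrx-base", accept_eula=False,
                instance_type=None):
    """Deploy the model and return a predictor (hypothetical helper)."""
    if accept_eula is not True:
        raise ValueError("Set accept_eula=True to accept the model EULA.")
    # Imported lazily so this module loads even without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(**build_model_kwargs(model_id, instance_type))
    return model.deploy(accept_eula=accept_eula)


# Creates a billable endpoint; uncomment to run in your own account.
# predictor = deploy_dbrx(accept_eula=True)
```

Keeping the EULA check ahead of the SDK import means a missing acceptance fails fast, before any AWS call is made.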

This deploys the model on SageMaker with default configurations, including the default instance type and default VPC configurations. You can change these configurations by specifying non-default values in JumpStartModel. The accept_eula value must be explicitly set to True in order to accept the end-user license agreement (EULA). Also make sure your account-level service limit allows one or more ml.p4d.24xlarge or ml.p4de.24xlarge instances for endpoint usage. If it doesn't, request a service quota increase.

After it’s deployed, you can run inference against the deployed endpoint through the SageMaker predictor:
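The following is a minimal sketch of an inference call. It assumes the endpoint accepts the `inputs` and `parameters` request shape commonly used by Hugging Face text generation containers; treat the parameter names as assumptions and confirm them against the model card. The helpers `build_payload` and `run_inference` are hypothetical names, and `predictor` is the object returned by the deployment step.

```python
def build_payload(prompt, max_new_tokens=64, **parameters):
    """Build a text generation request body (assumed request shape)."""
    return {
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens, **parameters},
    }


def run_inference(predictor, prompt, **parameters):
    """Send the prompt to the endpoint through the predictor."""
    return predictor.predict(build_payload(prompt, **parameters))


# With a deployed endpoint (not executed here):
# response = run_inference(predictor, "Hello!", max_new_tokens=10)
# print(response)
```

Separating payload construction from the network call lets you inspect or log the exact request before sending it.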

Example prompts

You can interact with the DBRX Base model like any standard text generation model, where the model processes an input sequence and outputs predicted next words in the sequence. In this section, we provide some example prompts and sample output.

Code generation

Using the preceding example, we can use code generation prompts as follows:
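As an illustration, a code generation request could be sketched as follows. The prompt text is a hypothetical example, and the response handling assumes the endpoint returns either a dictionary or a one-item list containing a `generated_text` field, which you should verify against your endpoint's actual response.

```python
# Hypothetical code generation prompt for the base model.
code_payload = {
    "inputs": "Write a function to read a CSV file in Python using pandas library:",
    "parameters": {"max_new_tokens": 30},
}


def extract_generated_text(response):
    """Return the generated text from a dict or a one-item list response."""
    if isinstance(response, list):
        response = response[0]
    return response["generated_text"].strip()


# With a deployed endpoint (not executed here):
# print(extract_generated_text(predictor.predict(code_payload)))
```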

The following is the output:

Sentiment analysis

You can perform sentiment analysis using a prompt like the following with DBRX:
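Because the base model continues text rather than following instructions, a few-shot layout usually works well for classification. The sketch below builds such a prompt; the example tweets, labels, and the helper name `build_sentiment_prompt` are all hypothetical, and the request shape is the same assumed `inputs` and `parameters` format.

```python
def build_sentiment_prompt(examples, tweet):
    """Build a few-shot prompt that ends where the model should answer."""
    lines = []
    for text, label in examples:
        lines.append(f'Tweet: "{text}"')
        lines.append(f"Sentiment: {label}")
    # Leave the final label blank so the model completes it.
    lines.append(f'Tweet: "{tweet}"')
    lines.append("Sentiment:")
    return "\n".join(lines)


# Hypothetical labeled examples.
examples = [
    ("I am so excited for the weekend!", "Positive"),
    ("Why does traffic have to be so terrible?", "Negative"),
]
sentiment_payload = {
    "inputs": build_sentiment_prompt(examples, "Just saw a great movie."),
    # A short completion is enough for a one-word label.
    "parameters": {"max_new_tokens": 2},
}

# With a deployed endpoint (not executed here):
# print(predictor.predict(sentiment_payload))
```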

The following is the output:

Question answering

You can use a question answering prompt like the following with DBRX:

The following is the output:

DBRX Instruct

The instruction-tuned version of DBRX accepts formatted instructions where conversation roles must start with a prompt from the user and alternate between user instructions and the assistant (DBRX-instruct). The instruction format must be strictly respected, otherwise the model will generate suboptimal outputs. The template to build a prompt for the Instruct model is defined as follows:

<|im_start|> and <|im_end|> are special tokens for beginning of string (BOS) and end of string (EOS). A prompt can contain multiple conversation turns between system, user, and assistant, allowing for the incorporation of few-shot examples to enhance the model’s responses.

The following code shows how you can format the prompt in instruction format:
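A formatter along these lines is one way to build the prompt. This is a minimal sketch that assumes each turn is wrapped as `<|im_start|>{role}`, a newline, the content, and `<|im_end|>`, and that the prompt ends with an open assistant turn for the model to complete. The function name and the validation rules are our own choices, so check the exact whitespace against the model card.

```python
from typing import Dict, List

ALLOWED_ROLES = ("system", "user", "assistant")


def format_instructions(instructions: List[Dict[str, str]]) -> str:
    """Render chat turns with <|im_start|> and <|im_end|> markers."""
    if not instructions or instructions[-1]["role"] != "user":
        raise ValueError("The conversation must end with a user turn.")
    parts = []
    for turn in instructions:
        role = turn["role"]
        if role not in ALLOWED_ROLES:
            raise ValueError(f"Unknown role: {role}")
        content = turn["content"].strip()
        parts.append(f"<|im_start|>{role}\n{content}<|im_end|>\n")
    # Open assistant turn that the model is expected to complete.
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


instructions = [{"role": "user", "content": "What is machine learning?"}]
prompt = format_instructions(instructions)
print(prompt)
```

You can pass the resulting string as the `inputs` value of the request payload.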

Knowledge retrieval

You can use the following prompt for knowledge retrieval:

The following is the output:

Code generation

DBRX models demonstrate benchmarked strengths for coding tasks. For example, see the following code:

The following is the output:

Mathematics and reasoning

The DBRX models also report strengths in mathematical accuracy. For example, see the following code:

DBRX can work through the math logic, as shown in the following output:

Clean up

After you’re done running the notebook, make sure to delete all resources that you created in the process so your billing is stopped. Use the following code:
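A cleanup step might look like the following minimal sketch. It assumes `predictor` is the object returned when you deployed the model; `delete_model` and `delete_endpoint` are methods of the SageMaker predictor, while the wrapper name `clean_up` is ours.

```python
def clean_up(predictor):
    """Delete the model and the endpoint so that billing stops."""
    predictor.delete_model()
    predictor.delete_endpoint()


# With a deployed endpoint (not executed here):
# clean_up(predictor)
```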

Conclusion

In this post, we showed you how to get started with DBRX in SageMaker Studio and deploy the model for inference. Because foundation models are pre-trained, they can help lower training and infrastructure costs and enable customization for your use case. Visit SageMaker JumpStart in SageMaker Studio now to get started.

Resources

- SageMaker JumpStart documentation

- SageMaker JumpStart foundation models documentation

- SageMaker JumpStart product detail page

- SageMaker JumpStart model catalog

About the Authors

Shikhar Kwatra is an AI/ML Specialist Solutions Architect at Amazon Web Services, working with a leading Global System Integrator. He has earned the title of one of the Youngest Indian Master Inventors with over 400 patents in the AI/ML and IoT domains. He has over 8 years of industry experience from startups to large-scale enterprises, from IoT Research Engineer, Data Scientist, to Data & AI Architect. Shikhar aids in architecting, building, and maintaining cost-efficient, scalable cloud environments for organizations and supports GSI partners in building strategic industry

Niithiyn Vijeaswaran is a Solutions Architect at AWS. His area of focus is generative AI and AWS AI Accelerators. He holds a Bachelor’s degree in Computer Science and Bioinformatics. Niithiyn works closely with the Generative AI GTM team to enable AWS customers on multiple fronts and accelerate their adoption of generative AI. He’s an avid fan of the Dallas Mavericks and enjoys collecting sneakers.

Sebastian Bustillo is a Solutions Architect at AWS. He focuses on AI/ML technologies with a profound passion for generative AI and compute accelerators. At AWS, he helps customers unlock business value through generative AI. When he’s not at work, he enjoys brewing a perfect cup of specialty coffee and exploring the world with his wife.

Armando Diaz is a Solutions Architect at AWS. He focuses on generative AI, AI/ML, and data analytics. At AWS, Armando helps customers integrate cutting-edge generative AI capabilities into their systems, fostering innovation and competitive advantage. When he’s not at work, he enjoys spending time with his wife and family, hiking, and traveling the world.

Author: Shikhar Kwatra