How Skyflow creates technical content in days using Amazon Bedrock

This guest post is co-written with Manny Silva, Head of Documentation at Skyflow, Inc.

Startups move quickly, and engineering is often prioritized over documentation. Unfortunately, this prioritization leads to mismatched release cycles, where features ship but documentation lags behind. The result is more support calls and unhappy customers.

Skyflow is a data privacy vault provider that makes it effortless to secure sensitive data and enforce privacy policies. Skyflow experienced this growth and documentation challenge in early 2023 as it expanded globally from 8 to 22 AWS Regions, including China and other areas of the world. The documentation team, consisting of only two people, found itself overwhelmed as the engineering team, with over 60 people, updated the product to support the scale and rapid feature release cycles.

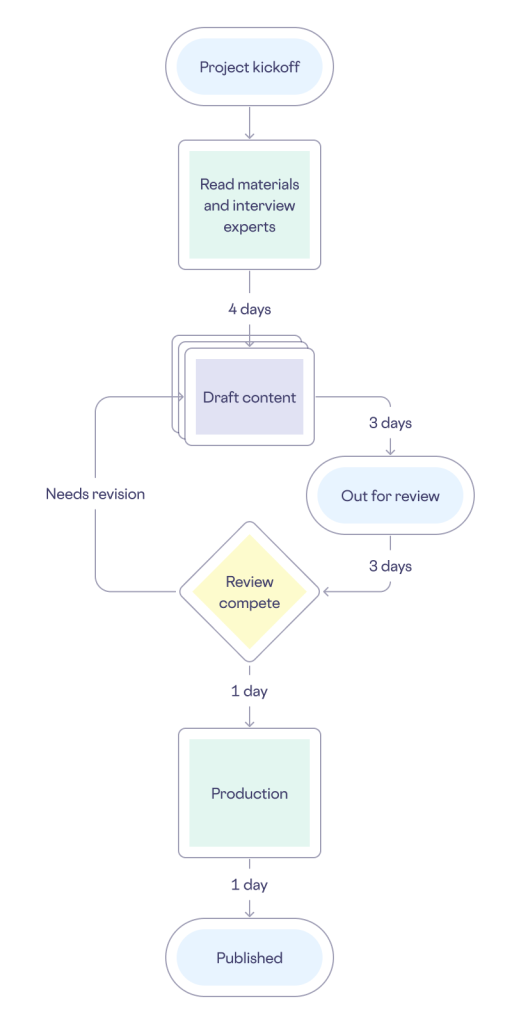

Given the critical nature of Skyflow’s role as a data privacy company, the stakes were particularly high. Customers entrust Skyflow with their data and expect Skyflow to manage it both securely and accurately. The accuracy of Skyflow’s technical content is paramount to earning and keeping customer trust. Although new features were released every other week, documentation for the features took an average of 3 weeks to complete, including drafting, review, and publication. The following diagram illustrates their content creation workflow.

Looking at our documentation workflows, we at Skyflow discovered areas where generative artificial intelligence (AI) could improve our efficiency. Specifically, creating the first draft—often referred to as overcoming the “blank page problem”—is typically the most time-consuming step. The review process could also be long depending on the number of inaccuracies found, leading to additional revisions, additional reviews, and additional delays. Both drafting and reviewing needed to be shorter to make doc target timelines match those of engineering.

To do this, Skyflow built VerbaGPT, a generative AI tool based on Amazon Bedrock. Amazon Bedrock is a fully managed service that makes foundation models (FMs) from leading AI startups and Amazon available through an API, so you can choose from a wide range of FMs to find the model that is best suited for your use case. With the Amazon Bedrock serverless experience, you can get started quickly, privately customize FMs with your own data, and integrate and deploy them into your applications using the AWS tools without having to manage any infrastructure. With Amazon Bedrock, VerbaGPT is able to prompt large language models (LLMs), regardless of model provider, and uses Retrieval Augmented Generation (RAG) to provide accurate first drafts that make for quick reviews.

In this post, we share how Skyflow improved their workflow to create documentation in days instead of weeks using Amazon Bedrock.

Solution overview

VerbaGPT uses Contextual Composition (CC), a technique that incorporates a base instruction, a template, relevant context to inform the execution of the instruction, and a working draft, as shown in the following figure. For the instruction, VerbaGPT tells the LLM to create content based on the specified template, evaluate the context to see if it’s applicable, and revise the draft accordingly. The template includes the structure of the desired output, expectations for what sort of information should exist in a section, and one or more examples of content for each section to guide the LLM on how to process context and draft content appropriately. With the instruction and template in place, VerbaGPT includes as much available context from RAG results as it can, then sends that off for inference. The LLM returns the revised working draft, which VerbaGPT then passes back into a new prompt that includes the same instruction, the same template, and as much context as it can fit, starting from where the previous iteration left off. This repeats until all context is considered and the LLM outputs a draft matching the included template.
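The iteration described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not VerbaGPT’s implementation: `build_prompt`, `compose`, and the character-based `budget_chars` limit are hypothetical names, and `llm` is any callable that takes a prompt string and returns the revised draft.

```python
from typing import Callable, List


def build_prompt(instruction: str, template: str, context: List[str], draft: str) -> str:
    """Join the four Contextual Composition parts into one prompt string."""
    return "\n\n".join([
        instruction,
        "Template:\n" + template,
        "Context:\n" + "\n---\n".join(context),
        "Working draft:\n" + draft,
    ])


def compose(instruction: str, template: str, chunks: List[str],
            llm: Callable[[str], str], budget_chars: int = 4000) -> str:
    """Feed context to the LLM in batches, carrying the draft forward."""
    draft = ""
    i = 0
    while i < len(chunks):
        # Space already used by the instruction, template, and current draft.
        used = len(build_prompt(instruction, template, [], draft))
        batch: List[str] = []
        # Always take at least one chunk so the loop makes progress.
        while i < len(chunks) and (not batch or used + len(chunks[i]) <= budget_chars):
            batch.append(chunks[i])
            used += len(chunks[i])
            i += 1
        # The model returns the revised draft, which seeds the next prompt.
        draft = llm(build_prompt(instruction, template, batch, draft))
    return draft
```

A real system would likely budget by tokens rather than characters; characters are used here only to keep the sketch free of dependencies.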

The following figure illustrates how Skyflow deployed VerbaGPT on AWS. The application is used by the documentation team and internal users. The solution involves deploying containers on Amazon Elastic Kubernetes Service (Amazon EKS) that host a Streamlit user interface and a backend LLM gateway that is able to invoke Amazon Bedrock or local LLMs, as needed. Users upload documents and prompt VerbaGPT to generate new content. In the LLM gateway, prompts are processed in Python using LangChain and Amazon Bedrock.

When building this solution on AWS, Skyflow followed these steps:

- Choose an inference toolkit and LLMs.

- Build the RAG pipeline.

- Create a reusable, extensible prompt template.

- Create content templates for each content type.

- Build an LLM gateway abstraction layer.

- Build a frontend.

Let’s dive into each step, including the goals and requirements and how they were addressed.

Choose an inference toolkit and LLMs

The inference toolkit you choose, if any, dictates your interface with your LLMs and what other tooling is available to you. VerbaGPT uses LangChain instead of directly invoking LLMs. LangChain has broad adoption in the LLM community, which let Skyflow take advantage of the latest advancements and community support, both at the time and, most likely, in the future.

When building a generative AI application, there are many factors to consider. For instance, Skyflow wanted the flexibility to interact with different LLMs depending on the use case. We also needed to keep context and prompt inputs private and secure, which meant not using LLM providers who would log that information or fine-tune their models on our data. We needed to have a variety of models with unique strengths at our disposal (such as long context windows or text labeling) and to have inference redundancy and fallback options in case of outages.

Skyflow chose Amazon Bedrock for its robust support of multiple FMs and its focus on privacy and security. With Amazon Bedrock, all traffic remains inside AWS. VerbaGPT’s primary foundation model is Anthropic Claude 3 Sonnet on Amazon Bedrock, chosen for its substantial context length, though it also uses Anthropic Claude Instant on Amazon Bedrock for chat-based interactions.

Build the RAG pipeline

To deliver accurate and grounded responses from LLMs without the need for fine-tuning, VerbaGPT uses RAG to fetch data related to the user’s prompt. By using RAG, VerbaGPT became familiar with the nuances of Skyflow’s features and procedures, enabling it to generate informed and complementary content.

To build your own content creation solution, you collect your corpus into a knowledge base, vectorize it, and store it in a vector database. VerbaGPT includes all of Skyflow’s documentation, blog posts, and whitepapers in a vector database that it can query during inference. Skyflow uses a pipeline to embed content and store the embedding in a vector database. This embedding pipeline is a multi-step process, and everyone’s pipeline is going to look a little different. Skyflow’s pipeline starts by moving artifacts to a common data store, where they are de-identified. If your documents have personally identifiable information (PII), payment card information (PCI), personal health information (PHI), or other sensitive data, you might use a solution like Skyflow LLM Privacy Vault to make de-identifying your documentation straightforward. Next, the pipeline chunks the documents into pieces, then finally calculates vectors for the text chunks and stores them in FAISS, an open source vector store. VerbaGPT uses FAISS because it is fast and straightforward to use from Python and LangChain. AWS also has numerous vector stores to choose from for a more enterprise-level content creation solution, including Amazon Neptune, Amazon Relational Database Service (Amazon RDS) for PostgreSQL, Amazon Aurora PostgreSQL-Compatible Edition, Amazon Kendra, Amazon OpenSearch Service, and Amazon DocumentDB (with MongoDB compatibility). The following diagram illustrates the embedding generation pipeline.
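The stages described above (de-identify, chunk, embed, store) can be sketched roughly as follows. Everything here is an illustrative assumption: the email regex is a toy stand-in for a real de-identification service, paragraph splitting stands in for a real chunker, `embed` is whatever embedding function you supply, and a plain list stands in for a vector store such as FAISS.

```python
import re
from typing import Callable, List, Sequence, Tuple

# Toy pattern: a real pipeline would delegate to a de-identification service.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def deidentify(text: str) -> str:
    """Replace email addresses with a placeholder token."""
    return EMAIL.sub("[EMAIL]", text)


def chunk(text: str) -> List[str]:
    """Split on blank lines and drop empty pieces."""
    return [p.strip() for p in text.split("\n\n") if p.strip()]


def run_pipeline(documents: List[str],
                 embed: Callable[[str], Sequence[float]]) -> List[Tuple[str, Sequence[float]]]:
    """De-identify, chunk, and embed each document; return (text, vector) pairs."""
    store: List[Tuple[str, Sequence[float]]] = []
    for doc in documents:
        for piece in chunk(deidentify(doc)):
            store.append((piece, embed(piece)))
    return store
```

De-identifying before chunking and embedding means sensitive values never reach the embedding model or the vector store.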

When chunking your documents, keep in mind that LangChain’s default splitting strategy can be aggressive. It can produce chunks so small that they lack meaningful context, which forces the LLM to make (largely inaccurate) assumptions about that context and leads to hallucinations. This issue was particularly noticeable in Markdown files, where procedures were fragmented, code blocks were divided, and chunks were often only single sentences. Skyflow created its own Markdown splitter to produce more coherent chunks for VerbaGPT’s RAG context.
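As a rough idea of what a structure-aware splitter can look like, the following sketch splits Markdown at headings, never splits inside a fenced code block, and merges small neighboring sections up to a size limit. It is not Skyflow’s splitter; `split_markdown` and `max_chars` are hypothetical names chosen for illustration.

```python
from typing import List

FENCE = "`" * 3  # Markdown code fence marker


def split_markdown(text: str, max_chars: int = 2000) -> List[str]:
    """Split at headings outside code fences, then merge small sections."""
    sections: List[str] = []
    current: List[str] = []
    in_fence = False
    for line in text.splitlines():
        if line.lstrip().startswith(FENCE):
            in_fence = not in_fence
        # A heading outside a fence starts a new section.
        if not in_fence and line.startswith("#") and current:
            sections.append("\n".join(current).strip())
            current = []
        current.append(line)
    if current:
        sections.append("\n".join(current).strip())

    chunks: List[str] = []
    for section in sections:
        if not section:
            continue
        # Merge with the previous chunk while the result fits the limit.
        if chunks and len(chunks[-1]) + len(section) + 2 <= max_chars:
            chunks[-1] = chunks[-1] + "\n\n" + section
        else:
            # Oversized sections are kept whole rather than cut mid-procedure.
            chunks.append(section)
    return chunks
```

Keeping an oversized section whole trades a larger chunk for an intact procedure or code block; a production splitter would need a policy for sections that exceed the model’s context budget.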

Create a reusable, extensible prompt template

After you deploy your embedding pipeline and vector database, you can start intelligently prompting your LLM with a prompt template. VerbaGPT uses a system prompt that instructs the LLM how to behave and includes a directive to use content in the Context section to inform the LLM’s response.

The inference process queries the vector database with the user’s prompt, fetches the results above a certain similarity threshold, and includes the results in the system prompt. The solution then sends the system prompt and the user’s prompt to the LLM for inference.
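The retrieval step can be illustrated with a small, dependency-free sketch. It assumes an in-memory list of `(text, vector)` pairs and cosine similarity; the function names, the `threshold` of 0.75, and `top_k` are hypothetical, and a vector store such as FAISS would replace the linear scan in practice.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: Sequence[float],
             index: List[Tuple[str, Sequence[float]]],
             threshold: float = 0.75, top_k: int = 5) -> List[str]:
    """Return the texts scoring at or above the threshold, best first."""
    scored = [(cosine(query_vec, vec), text) for text, vec in index]
    hits = sorted((s for s in scored if s[0] >= threshold), reverse=True)
    return [text for _, text in hits[:top_k]]


def build_system_prompt(base: str, context_chunks: List[str]) -> str:
    """Append retrieved chunks to the base system prompt under a Context label."""
    context = "\n\n".join(context_chunks) if context_chunks else "(no relevant context found)"
    return base + "\n\nContext:\n" + context
```

Filtering by a threshold, instead of always taking the top results, keeps weakly related chunks out of the prompt when the corpus has little to say about the request.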

A prompt for drafting with Contextual Composition includes all the necessary components: a system prompt, a template, context, a working draft, and additional instructions.
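As a minimal, hypothetical sketch of how those components might be laid out, the following uses Python’s `string.Template`. The wording of the system prompt and the section labels are illustrative assumptions, not Skyflow’s actual prompt.

```python
from string import Template

# Hypothetical prompt layout; every label and sentence here is illustrative.
PROMPT_TEMPLATE = Template("""\
System: You are a technical writer. Use only the content in the Context
section to inform your response. If the context does not apply, return the
working draft unchanged.

Template:
$template

Context:
$context

Working draft:
$draft

Additional instructions:
$instructions
""")


def render_prompt(template: str, context: str, draft: str = "",
                  instructions: str = "None.") -> str:
    """Fill the prompt layout; an empty draft is marked explicitly."""
    return PROMPT_TEMPLATE.substitute(
        template=template,
        context=context,
        draft=draft or "(empty)",
        instructions=instructions,
    )
```

Keeping the layout in one reusable template means new content types only need a new content template, not a new prompt.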

Create content templates

To round out the prompt template, you need to define content templates that match your desired output, such as a blog post, how-to guide, or press release. You can jumpstart this step by sourcing high-quality templates. Skyflow sourced documentation templates from The Good Docs Project. Then, we adapted the how-to and concept templates to align with internal styles and specific needs. We also adapted the templates for use in prompt templates by providing instructions and examples per section. By clearly and consistently defining the expected structure and intended content of each section, the LLM was able to output content in the formats needed, while being both informative and stylistically consistent with Skyflow’s brand.

Build an LLM gateway abstraction layer

Amazon Bedrock provides a single API to invoke a variety of FMs. Skyflow also wanted inference redundancy and fallback options in case VerbaGPT ran into Amazon Bedrock service limit errors. To that end, VerbaGPT invokes models through an LLM gateway, an abstraction layer that can call Amazon Bedrock or local LLMs as needed.
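One way to picture the fallback behavior is a loop that tries each configured model in order. This is a sketch under assumptions: `ServiceLimitExceeded` is a hypothetical stand-in for whatever throttling or quota error your client raises, and each model is represented as a plain callable.

```python
from typing import Callable, Optional, Sequence


class ServiceLimitExceeded(Exception):
    """Hypothetical stand-in for a provider throttling or quota error."""


def invoke_with_fallback(prompt: str, models: Sequence[Callable[[str], str]]) -> str:
    """Try each model in order; move on only when a service limit is hit."""
    last_error: Optional[Exception] = None
    for model in models:
        try:
            return model(prompt)
        except ServiceLimitExceeded as err:
            last_error = err  # remember the failure and try the next model
    raise RuntimeError("all configured models failed") from last_error
```

Only the limit error triggers a fallback here, so other failures, such as a malformed request, still surface immediately.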

The main component of the gateway is the model catalog, which can return a LangChain llm model object for the specified model, updated to include any parameters. You can create this with a simple if/else statement that branches on the requested model name.
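A minimal sketch of such a catalog follows. To stay runnable without extra dependencies, it returns placeholder dataclasses where a real gateway would construct LangChain LLM objects. The function name `get_llm`, the short model names, the default parameters, and the local model path are all hypothetical; the Bedrock model IDs are shown for illustration and should be checked against the current Amazon Bedrock model list.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class BedrockModel:
    """Placeholder for a LangChain LLM object backed by Amazon Bedrock."""
    model_id: str
    params: Dict[str, Any] = field(default_factory=dict)


@dataclass
class LocalModel:
    """Placeholder for a LangChain LLM object backed by a local model."""
    path: str
    params: Dict[str, Any] = field(default_factory=dict)


def get_llm(name: str, params: Optional[Dict[str, Any]] = None):
    """Return a model object for a short name, applying caller overrides."""
    overrides = dict(params or {})
    if name == "claude-3-sonnet":
        return BedrockModel("anthropic.claude-3-sonnet-20240229-v1:0",
                            {"max_tokens": 4096, **overrides})
    elif name == "claude-instant":
        return BedrockModel("anthropic.claude-instant-v1",
                            {"max_tokens": 1024, **overrides})
    elif name == "local":
        return LocalModel("/models/local-llm", overrides)  # hypothetical path
    else:
        raise ValueError(f"unknown model: {name}")
```

Callers ask for a model by short name, for example `get_llm("claude-instant", {"temperature": 0.2})`, and never deal with provider-specific construction.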

By mapping standard input formats into the function and handling all custom LLM object construction within the function, the rest of the code stays clean by using LangChain’s llm object.

Build a frontend

The final step was to add a UI on top of the application to hide the inner workings of LLM calls and context. A simple UI is key for generative AI applications, so users can efficiently prompt the LLMs without worrying about the details unnecessary to their workflow. As shown in the solution architecture, VerbaGPT uses Streamlit to quickly build useful, interactive UIs that allow users to upload documents for additional context and draft new documents rapidly using Contextual Composition. Streamlit is Python based, which makes it straightforward for data scientists to be efficient at building UIs.

Results

By using the power of Amazon Bedrock for inferencing and Skyflow for data privacy and sensitive data de-identification, your organization can significantly speed up the production of accurate, secure technical documents, just like the solution shown in this post. Skyflow was able to use existing technical content and best-in-class templates to reliably produce drafts of different content types in minutes instead of days. For example, given a product requirements document (PRD) and an engineering design document, VerbaGPT can produce drafts for a how-to guide, conceptual overview, summary, release notes line item, press release, and blog post within 10 minutes. Normally, this would take multiple individuals from different departments multiple days each to produce.

The new content flow shown in the following figure moves generative AI to the front of all technical content Skyflow creates. During the “Create AI draft” step, VerbaGPT generates content in the approved style and format in just 5 minutes. Not only does this solve the blank page problem, but first drafts also require less interviewing of engineers and fewer requests for them to draft content, freeing them to add value through feature development instead.

The security measures Amazon Bedrock provides around prompts and inference aligned with Skyflow’s commitment to data privacy, and allowed Skyflow to use additional kinds of context, such as system logs, without the concern of compromising sensitive information in third-party systems.

As more people at Skyflow used the tool, they wanted additional content types available: VerbaGPT now has templates for internal reports from system logs, email templates from common conversation types, and more. Additionally, although Skyflow’s RAG context is clean, VerbaGPT is integrated with Skyflow LLM Privacy Vault to de-identify sensitive data in user inference inputs, maintaining Skyflow’s stringent standards of data privacy and security even while using the power of AI for content creation.

Skyflow’s journey in building VerbaGPT has drastically shifted content creation, and the toolkit wouldn’t be as robust, accurate, or flexible without Amazon Bedrock. The significant reduction in content creation time—from an average of around 3 weeks to as little as 5 days, and sometimes even a remarkable 3.5 days—marks a substantial leap in efficiency and productivity, and highlights the power of AI in enhancing technical content creation.

Conclusion

Don’t let your documentation lag behind your product development. Start creating your technical content in days instead of weeks, while maintaining the highest standards of data privacy and security. Learn more about Amazon Bedrock and discover how Skyflow can transform your approach to data privacy.

If you’re scaling globally and have privacy or data residency needs for your PII, PCI, PHI, or other sensitive data, reach out to your AWS representative to see if Skyflow is available in your region.

About the authors

Manny Silva is Head of Documentation at Skyflow and the creator of Doc Detective. Technical writer by day and engineer by night, he’s passionate about intuitive and scalable developer experiences and likes diving into the deep end as the 0th developer.

Jason Westra is a Senior Solutions Architect for AWS AI/ML startups. He provides guidance and technical assistance that enables customers to build scalable, highly available, secure AI and ML workloads in AWS Cloud.

Author: Manny Silva