How Vidmob is using generative AI to transform its creative data landscape

This post was co-written with Mickey Alon from Vidmob.

Generative artificial intelligence (AI) can be vital for marketing because it enables the creation of personalized content and optimizes ad targeting with predictive analytics. Specifically, such data analysis can help predict trends and public sentiment while also personalizing customer journeys, ultimately leading to more effective marketing and better business results. For example, insights from creative data (advertising analytics) based on campaign performance can not only uncover which creative works best but also help you understand the reasons behind its success.

In this post, we illustrate how Vidmob, a creative data company, worked with the AWS Generative AI Innovation Center (GenAIIC) team to uncover meaningful insights at scale within creative data using Amazon Bedrock. The collaboration involved the following steps:

- Use natural language to analyze and generate insights on performance data through different channels (such as TikTok, Meta, and Pinterest)

- Generate research information for context such as the value proposition, competitive differentiators, and brand identity of a specific client

Vidmob background

Vidmob is the Creative Data company that uses creative analytics and scoring software to inform creative and media decisions for marketers and agencies as they strive to drive business results through improved creative effectiveness. Vidmob’s influence lies in its partnerships and native integrations across the digital ad landscape, its dozens of proprietary models, and its operation of a reinforcement learning with human feedback (RLHF) model for creativity.

Vidmob’s AI journey

Vidmob uses AI to not only enhance its creative data capabilities, but also pioneer advancements in the field of RLHF for creativity. By seamlessly integrating AI models such as Amazon Rekognition into its innovative stack, Vidmob has continually evolved to stay at the forefront of the creative data landscape.

This journey extends beyond the mere adoption of AI; Vidmob has consistently recognized the importance of curating a differentiated dataset to maximize the potential of its AI-driven solutions. Understanding the intrinsic value of data network effects, Vidmob constructed a product and operational system architecture designed to be the industry’s most comprehensive RLHF solution for marketing creatives.

Use case overview

Vidmob aims to revolutionize its analytics landscape with generative AI. The central goal is to empower customers to directly query and analyze their creative performance data through a chat interface. Over the past 8 years, Vidmob has amassed a wealth of data that provides deep insights into the value of creatives in ad campaigns and strategies for enhancing performance. Vidmob envisions making it effortless for customers to utilize this data to generate insights and make informed decisions about their creative strategies.

Currently, Vidmob and its customers rely on creative strategists to address these questions at the brand level, complemented by machine-generated normative insights at the industry or environment level. This process can take creative strategists many hours. To enhance the customer experience, Vidmob decided to partner with AWS GenAIIC to deliver these insights more quickly and automatically.

Vidmob partnered with AWS GenAIIC to analyze ad data and help Vidmob creative strategists understand the performance of customer ads. Vidmob’s ad data consists of tags generated by Amazon Rekognition and other internal models. The chatbot built by AWS GenAIIC takes in this tag data and returns insights.

The following were key success criteria for the collaboration:

- Analyze and generate insights in a natural language based on performance data and other metadata

- Generate client company information to be used as initial research for a creative

- Create a scalable solution using Amazon Bedrock that can be integrated with Vidmob’s performance data

However, there were a few challenges in achieving these goals:

- Large language models (LLMs) are limited in the volume of data they can analyze to generate insights without hallucination. They are designed to predict and summarize text-based information and are less optimized for computing creative data at a terabyte scale.

- LLMs don’t have straightforward automatic evaluation techniques. Therefore, human evaluation was required for insights generated by the LLM.

- Creative strategists normally analyze 50–100 creative questions, which means an asynchronous mechanism was needed to queue up these prompts, aggregate the responses, and surface the most meaningful insights.
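
The last challenge, queueing many prompts and aggregating the results, can be sketched as follows. This is only an illustration under stated assumptions, not the mechanism Vidmob and AWS built: `ask_llm` is a hypothetical stand-in for a model call, and its `score` field is a placeholder for whatever ranking signal the real system uses.

```python
import asyncio


async def ask_llm(question: str) -> dict:
    """Hypothetical stand-in for a model call; returns an insight with a score."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    # Placeholder score: a real system would rank by a meaningful signal.
    return {"question": question, "insight": f"insight for: {question}", "score": len(question)}


async def run_batch(questions, max_concurrency=5, top_k=3, ask=ask_llm):
    """Run all questions with bounded concurrency and keep the top_k results."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def worker(question):
        async with semaphore:  # at most max_concurrency calls in flight
            return await ask(question)

    results = await asyncio.gather(*(worker(q) for q in questions))
    # Aggregate: sort by score, highest first, and keep the top_k insights.
    return sorted(results, key=lambda r: r["score"], reverse=True)[:top_k]


if __name__ == "__main__":
    questions = [f"Creative question {i}" for i in range(50)]
    for result in asyncio.run(run_batch(questions)):
        print(result["insight"])
```

Bounding concurrency with a semaphore keeps a batch of 50–100 prompts from hitting the model endpoint all at once, while `asyncio.gather` preserves a simple collect-then-rank flow.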

Solution overview

The AWS team worked with Vidmob to build a serverless architecture for handling incoming questions from customers. They used the following services in the solution:

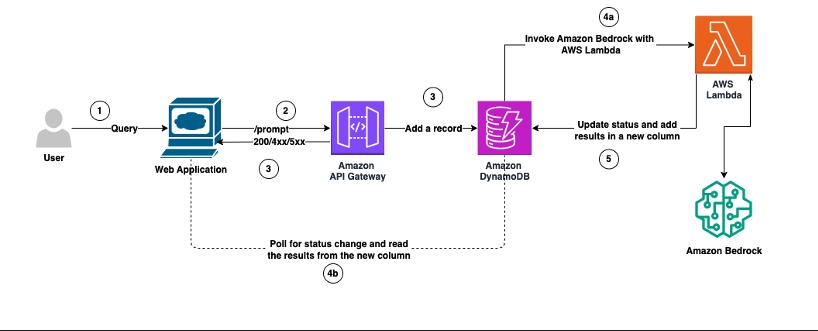

The following diagram illustrates the high-level workflow of the current solution:

The workflow consists of the following steps:

- The user navigates to Vidmob and asks a creative-related query.

- Amazon DynamoDB stores the query and the session ID, which are then passed to an AWS Lambda function as a DynamoDB event notification.

- The Lambda function calls Amazon Bedrock, obtains a response to the user query, and sends it back to the Streamlit application for the user to view.

- The Lambda function updates the status after it receives the completed output from Amazon Bedrock.

In the following sections, we explore the details of the workflow, the dataset, and the results Vidmob achieved.
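
The Lambda portion of the workflow steps above might look roughly like the following sketch. It is not the code used in the engagement. The table name, the `session_id`, `query`, `answer`, and `status` attribute names, and the `COMPLETED` status value are all assumptions made for illustration, as is the choice to write the answer back to the table for the Streamlit application to read. The request body follows the Claude v2 text-completions format on Amazon Bedrock.

```python
import json

MODEL_ID = "anthropic.claude-v2"  # Claude v2 on Amazon Bedrock, as named in this post


def extract_queries(event):
    """Return (session_id, query) pairs from DynamoDB Streams INSERT records."""
    pairs = []
    for record in event.get("Records", []):
        if record.get("eventName") != "INSERT":
            continue  # skip MODIFY events, such as our own status updates
        image = record["dynamodb"]["NewImage"]
        # "session_id" and "query" are assumed attribute names.
        pairs.append((image["session_id"]["S"], image["query"]["S"]))
    return pairs


def claude_request_body(prompt, max_tokens=1000):
    """Build a request body in the Claude v2 text-completions format."""
    return json.dumps({
        "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
    })


def invoke_bedrock(prompt):
    """Call Amazon Bedrock. boto3 is imported lazily so the rest runs offline."""
    import boto3
    client = boto3.client("bedrock-runtime")
    response = client.invoke_model(modelId=MODEL_ID, body=claude_request_body(prompt))
    return json.loads(response["body"].read())["completion"]


def save_result(session_id, answer, table_name="chat-sessions"):
    """Store the answer and mark the session complete (table name is hypothetical)."""
    import boto3
    table = boto3.resource("dynamodb").Table(table_name)
    table.update_item(
        Key={"session_id": session_id},
        UpdateExpression="SET #s = :s, #a = :a",
        ExpressionAttributeNames={"#s": "status", "#a": "answer"},
        ExpressionAttributeValues={":s": "COMPLETED", ":a": answer},
    )


def handler(event, context, invoke=invoke_bedrock, save=save_result):
    """Lambda entry point: answer each new query and update its status."""
    processed = []
    for session_id, query in extract_queries(event):
        answer = invoke(query)
        save(session_id, answer)
        processed.append(session_id)
    return {"processed": processed}
```

Passing `invoke` and `save` as parameters keeps the handler testable without AWS credentials; in Lambda, the defaults are used.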

Workflow details

After the user inputs a query, a prompt is automatically created and fed into a QA chatbot, which returns a response. The main aspects of the LLM prompt include:

- Client description – Background information about the client. This includes the value proposition, brand identity, and competitive differentiators, which are generated by Anthropic’s Claude v2 on Amazon Bedrock.

- Aperture – Important aspects to take into account for a user question. For example, for a branding question such as “What is the best way to incorporate branding for my Meta creative,” the aperture might identify elements such as a logo, tagline, and sincere tone.

- Context – The filtered dataset of ad performance referenced by the QA bot.

- Question – The user query.
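
As a rough illustration of how these four parts could be combined, the following sketch assembles them into a single prompt string. The section labels, the `build_prompt` function, and the `element` and `percent_lift` row keys are assumptions for this example; the actual prompt template used in the engagement is not shown in this post.

```python
def build_prompt(client_description, aperture, context_rows, question):
    """Assemble the four prompt parts into one text prompt."""
    # Render each context row as a short line, for example "- logo: +12.5% lift".
    context_lines = [
        f"- {row['element']}: {row['percent_lift']:+.1f}% lift" for row in context_rows
    ]
    sections = [
        ("Client description", client_description),
        ("Aperture", ", ".join(aperture)),
        ("Context", "\n".join(context_lines)),
        ("Question", question),
    ]
    return "\n\n".join(f"{title}:\n{body}" for title, body in sections)


if __name__ == "__main__":
    print(build_prompt(
        client_description="Example client: value proposition, brand identity, differentiators.",
        aperture=["logo", "tagline", "sincere tone"],
        context_rows=[{"element": "logo", "percent_lift": 12.5}],
        question="What is the best way to incorporate branding in my Meta creative?",
    ))
```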

The following screenshot shows the UI where the user can input the client and their ad-related question.

On the backend, a router is used to determine the context (ad-related dataset) as a reference to answer the question. This depends on the question and the client, which is done in the following steps:

- Determine whether the question should reference the objective dataset (general for an entire channel like TikTok, Meta, Pinterest) or placement dataset (specific sub-channels like Facebook Reels). For example, “What is the best way to incorporate branding in my Meta creative” is objective-based, whereas “What is the best way to incorporate branding for Facebook News Feed” is placement-based because it references a specific part of the Meta creative.

- Obtain the corresponding objective dataset for the client if the query is objective-based. If it’s placement-based, first filter the placement dataset to only columns that are relevant to the query and then pass in the resulting dataset.

- Pass the completed prompt to Anthropic’s Claude v2 model on Amazon Bedrock and display the outputs.
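
The routing steps above can be sketched as follows. The post does not say how the router decides between the two datasets, so simple name matching is used here purely as an assumption. The `PLACEMENTS` tuple, the `route` function, and the idea that each placement is a column in the placement dataset are likewise hypothetical.

```python
# Example sub-channel names; a real list would come from the placement dataset.
PLACEMENTS = ("Facebook News Feed", "Facebook Reels", "Facebook Explore")


def route(question, objective_rows, placement_rows):
    """Pick the dataset to use as context for a question.

    Returns ("objective", rows) when no placement is named in the question,
    otherwise ("placement", rows filtered to the relevant columns).
    """
    lowered = question.lower()
    matched = [name for name in PLACEMENTS if name.lower() in lowered]
    if not matched:
        return "objective", objective_rows
    # Keep the element name plus only the columns for the placements mentioned.
    keep = {"element", *matched}
    filtered = [{k: v for k, v in row.items() if k in keep} for row in placement_rows]
    return "placement", filtered


if __name__ == "__main__":
    objective = [{"element": "logo", "percent_lift": 8.0}]
    placement = [{"element": "logo", "Facebook News Feed": 5.0, "Facebook Reels": 11.0}]
    print(route("What is the best way to incorporate branding in my Meta creative", objective, placement))
    print(route("What is the best way to incorporate branding for Facebook News Feed", objective, placement))
```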

The outputs are displayed as shown in the following screenshot.

Specifically, the outputs include the elements that best answer the question, why this element may be important, and its corresponding percent lift for the creative.

Dataset

The dataset includes a set of ad-related data corresponding to a specific client. Specifically, Vidmob analyzes the client ad campaigns and extracts information related to the ads using various machine learning (ML) models and AWS services. The information about each campaign is collated into a single dataset (creative data). It notes how each element of a given creative performs under a certain metric; for example, how the call to action (CTA) affects the view-through rate of the ad. The following two datasets were used:

- Creative strategist-filtered performance data for each question – This dataset was filtered by Vidmob creative strategists for their analysis. The filtered datasets include an element (such as logo or bright colors for a creative) as well as its corresponding average, percent lift (of a particular metric such as view-through rate), creative count, and impressions for each sub-channel (Facebook Explore, Reels, and so on).

- Unfiltered raw datasets – This dataset includes objective-based and placement-based data for each client.

As we discussed earlier, there are two types of datasets for a particular client: objective-based and placement-based data. Objective data is used for answering generic user queries about ads for channels such as TikTok, Meta, or Pinterest, whereas placement data is used for answering specific questions about ads for sub-channels within Meta such as Facebook Reels, Instream, and News Feed. Questions such as “What are creative insights in my Meta creative” are more general and therefore reference the objective data, whereas questions such as “What are insights for Facebook News Feed” reference the News Feed statistics in the placement data.

The objective dataset includes elements and their corresponding average, percent lift, creative count, p-values, and other statistics for an entire channel, whereas placement data includes these same statistics for each sub-channel.
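
To make the shape of this data concrete, the following sketch models one row of an objective dataset and selects the strongest elements to pass to the model as context. The `ElementStat` class, its field names, and the significance and sample-size thresholds are illustrative assumptions, not Vidmob’s schema or filtering rules.

```python
from dataclasses import dataclass


@dataclass
class ElementStat:
    """One row of creative data: how an element performs on a metric."""
    element: str
    average: float        # average metric value, such as view-through rate
    percent_lift: float   # lift of the metric relative to a baseline, in percent
    creative_count: int   # number of creatives containing the element
    p_value: float


def top_elements(stats, alpha=0.05, min_creatives=10, k=3):
    """Keep statistically supported rows and return the k with the highest lift."""
    supported = [
        s for s in stats
        if s.p_value <= alpha and s.creative_count >= min_creatives
    ]
    return sorted(supported, key=lambda s: s.percent_lift, reverse=True)[:k]


if __name__ == "__main__":
    rows = [
        ElementStat("logo", 0.31, 12.0, 140, 0.01),
        ElementStat("bright colors", 0.28, 20.0, 6, 0.02),   # too few creatives
        ElementStat("tagline", 0.27, 4.5, 90, 0.30),         # not significant
        ElementStat("CTA", 0.33, 15.5, 75, 0.03),
    ]
    for row in top_elements(rows):
        print(row.element, row.percent_lift)
```

Filtering before prompting keeps the context small, which matters given the limits on how much data an LLM can analyze reliably.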

Results

A set of questions was evaluated by Vidmob’s strategists, primarily on the following metrics:

- Accuracy – How closely the overall answer matches what the strategist expects

- Relevancy – How relevant the LLM-generated output is to the question (or, in this case, to the background information for the client)

- Clarity – How clear and understandable the outputs from the performance data and their insights are, and whether the LLM is fabricating information

The client background information for the prompt and a set of questions for the filtered and unfiltered data were evaluated.

Overall, the client background generated by Anthropic’s Claude covered the value proposition, brand identity, and competitive differentiators for a given client. Accuracy and clarity were perfect, whereas relevancy was perfect for most samples. A score is considered perfect when subject matter experts rate it 9/10 or 10/10 on the given metric.

When answering a set of questions, the responses generally had high clarity. By adding extra tag information to filter the data, AWS GenAIIC incrementally improved the QA chatbot’s accuracy and relevancy by 10% and 5%, respectively. Overall, Vidmob expects the time needed to generate insights for creative campaigns to drop from hours to minutes.

Conclusion

In this post, we shared how the AWS GenAIIC team used Anthropic’s Claude on Amazon Bedrock to extract and summarize insights from Vidmob’s performance data using zero-shot prompt engineering. With these services, creative strategists were able to understand client information through the inherent knowledge of the LLM and to answer user queries using added client background information and tag types such as messaging and branding. Such insights can be retrieved at scale and used to make ad campaigns more effective.

The success of this engagement gave Vidmob an opportunity to use generative AI to create more valuable insights for customers in less time, resulting in a more scalable solution.

This is just one of the ways AWS enables builders to deliver generative AI-based solutions. You can get started with Amazon Bedrock and see how it can be integrated in example code bases today. If you’re interested in working with the AWS Generative AI Innovation Center, reach out to AWS GenAIIC.

About the Authors

Mickey Alon is a serial entrepreneur and co-author of ‘Mastering Product-Led Growth.’ He co-founded Gainsight PX (Vista) and Insightera (Adobe), a real-time personalization engine. He previously led the global product development team at Marketo (Adobe) and currently serves as the CPTO at Vidmob, a leading creative intelligence platform powered by GenAI.

Suren Gunturu is a Data Scientist working in the Generative AI Innovation Center, where he works with various AWS customers to solve high-value business problems. He specializes in building ML pipelines using Large Language Models, primarily through Amazon Bedrock and other AWS Cloud services.

Gaurav Rele is a Senior Data Scientist at the Generative AI Innovation Center, where he works with AWS customers across different verticals to accelerate their use of generative AI and AWS Cloud services to solve their business challenges.

Vidya Sagar Ravipati is a Science Manager at the Generative AI Innovation Center, where he leverages his vast experience in large-scale distributed systems and his passion for machine learning to help AWS customers across different industry verticals accelerate their AI and cloud adoption.

Author: Mickey Alon