How Good Job Games Cut Build Times by 43% with an AWS Build Pipeline

This post was co-authored by Kaan Turkmen, DevOps Engineer at Good Job Games.

Introduction

In this blog, we will explore how Good Job Games crafted a scalable and secure build pipeline on AWS, utilizing services like Amazon EC2, Amazon EFS, and Amazon S3 to optimize its game development processes.

Good Job Games was established in 2017 with a vision to create distinctive and engaging mobile gaming experiences accessible worldwide. Initially focusing on the development of hyper-casual games, the company’s portfolio quickly captured a significant audience, impacting over 2.5 billion users globally. With a core commitment to innovation and quality, the company has recently shifted its focus towards developing casual puzzle games, to continue providing excellent gaming experiences across diverse demographics.

Business Challenge: Outgrowing an On-Premise Setup

Initially, Good Job Games managed its build processes with an on-premise Mac farm without the aid of external tools like Jenkins or identity providers such as Okta, relying instead on an internal system that handled tasks such as build queuing. While this setup served the company well in the early stages, it soon became apparent that it lacked the scalability, reliability, and performance required to keep pace with the growing user base and development needs. Power outages and network interruptions disrupted the build processes, impacting productivity and potentially delaying critical updates and game releases.

Business Goal: Building a Future-Ready Infrastructure

Recognizing the limitations of the existing setup, Good Job Games embarked on a mission to create a reliable, efficient, and highly scalable build pipeline on AWS. The primary goals were:

- Establish an infrastructure to manage all game builds seamlessly, with the only limitation being the number of seats available on the Unity license.

- Optimize data transfers, both externally (from GitHub to VPC) and internally (between Availability Zones, from Linux to Mac instances).

- Develop a comprehensive disaster recovery plan for the Jenkins controllers and agents to handle potential outages.

- Implement a Dockerized Unity Accelerator to reduce build times and achieve isolation.

- Periodically update the golden AMI with cached repositories to decrease transfer costs.

- Integrate AWS Client VPN with Okta for seamless authentication, enabling remote builds outside the office’s internal network.

- Ensure consistency and isolation between builds with a containerized Unity Editor to increase reproducibility.

Solution Overview: Crafting a Scalable and Secure Build Pipeline

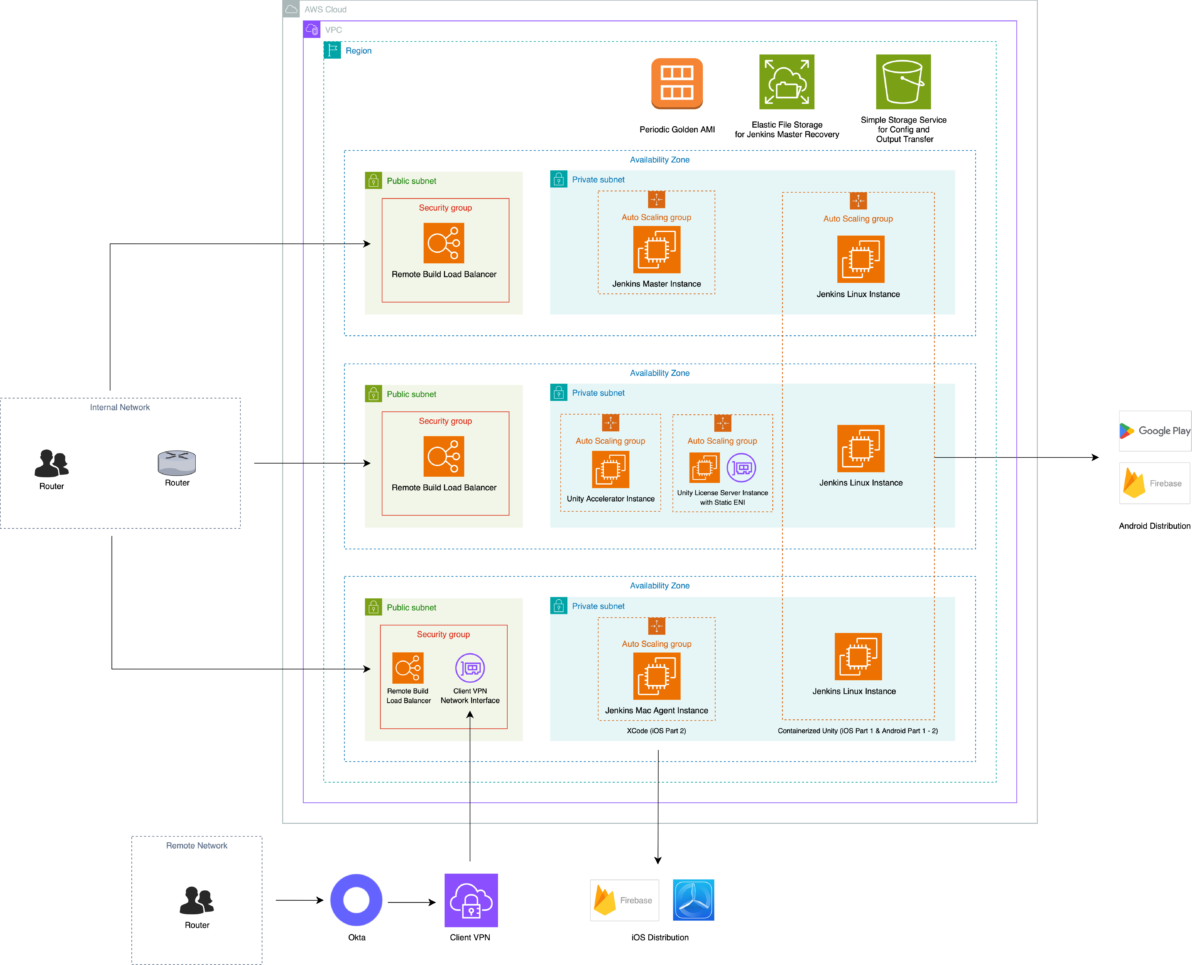

After carefully evaluating the requirements, Good Job Games chose to leverage the power and flexibility of AWS to create a robust and scalable build pipeline. At the core of the solution lies Amazon Elastic Compute Cloud (Amazon EC2), which provides the scalable compute resources to host the Jenkins controller and agents and forms the backbone of the company’s continuous integration and delivery processes. Specifically, the company uses Amazon EC2 Mac Instances for jobs requiring macOS environments and leverages Amazon Linux 2023 running on varied instance types, including C6i, C5, and M6i. These choices ensure optimal performance and compatibility for its diverse build and testing needs.

To optimize data transfers and ensure high availability, Good Job Games implemented a multi-layered approach. First, the company utilized Amazon Elastic File System (Amazon EFS) for persistent storage of the Jenkins controller data, ensuring durability and high availability. Simultaneously, it leveraged Amazon Simple Storage Service (Amazon S3) to efficiently serve asset bundles to players and facilitate the transfer of builds between machines.

To enhance security and enable remote access for the development team, Good Job Games integrated AWS Client VPN with Okta, further fortified by AWS Transit Gateway and strict authorization rules. This secure framework allows the company’s game developers to initiate builds seamlessly, even when working remotely outside the company’s internal network.

Some of the key challenges the team faced were managing large data transfers and ensuring secure authentication processes. To address these, the company implemented a multi-pronged strategy:

- Caching Repositories in Custom AMIs: By caching the large gaming repositories in custom-made Amazon Machine Images (AMIs) created using Packer, Good Job Games ensured that upon initializing a new EC2 instance, the repositories were immediately available. This approach significantly reduced transfer times and costs.

- Mirrored Repositories: To further optimize data transfer processes, the company incorporated mirrored repositories in the same Availability Zones where builds or accesses occurred. This approach not only reduced the load on the main repository but also improved build times by effectively distributing the access load and minimizing network latency.

- Periodic AMI Updates: To address potential synchronization issues between cached repositories and the latest codebase, the company implemented a script that runs periodically and updates the Packer script, so that the AMIs remain up to date with the latest changes from the main repository.
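The caching, mirroring, and refresh steps above can be sketched roughly as follows. This is an illustrative sketch only, not Good Job Games' actual tooling: the seven-day threshold, the template name `golden-ami.pkr.hcl`, the Packer variable `repo_commit`, and all function names are assumptions made for the example. The only real interface relied on is the `packer build -var key=value <template>` command form.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical values for illustration; none of them come from the original post.
MAX_AMI_AGE = timedelta(days=7)
PACKER_TEMPLATE = "golden-ami.pkr.hcl"


def ami_is_stale(ami_created_at, ami_commit, head_commit, now=None):
    """Return True when the repository cached inside the AMI should be refreshed."""
    now = now or datetime.now(timezone.utc)
    too_old = now - ami_created_at > MAX_AMI_AGE
    behind_head = ami_commit != head_commit
    return too_old or behind_head


def packer_build_command(head_commit, template=PACKER_TEMPLATE):
    """Build the argument list for `packer build`, passing the commit to cache."""
    return ["packer", "build", "-var", f"repo_commit={head_commit}", template]


def pick_mirror(instance_az, mirrors, fallback):
    """Prefer a repository mirror in the instance's own Availability Zone."""
    return mirrors.get(instance_az, fallback)
```

A scheduled job could call `ami_is_stale` and, when it returns `True`, hand the result of `packer_build_command` to a process runner, while build agents could use `pick_mirror` to choose where to fetch the remaining changes from.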

These changes not only created a portable and compact architecture but also resulted in a 43% reduction in build times. The AWS cloud infrastructure, offering flexibility and performance that are difficult to replicate with on-premise machines, was the primary driver behind this improvement.

In a traditional on-premise setup, achieving the plug-and-play efficiency provided by AMIs is challenging, often requiring extensive manual configuration and significant engineering resources to implement and maintain a reliable setup. Any imperfections in this setup could lead to slower build times. Additionally, during critical periods like release weeks, on-premise systems often require additional machine power to handle the increased build load. However, this extra capacity often remains underutilized during non-release periods, leading to inefficiencies and wasted resources. As a result, the relationship between hardware utilization and build times is closely tied to cost-performance efficiency.

With AWS, Good Job Games combined custom AMIs with Auto Scaling groups, enabling the company to cache its large gaming repositories in these custom-built AMIs. This allowed required instances to be up and running in minutes with all necessary data pre-loaded. The ability to instantly launch high-performance instances during peak times, combined with AWS’s top-notch hardware, ensured that the builds ran without delay, regardless of demand. This scalability and resource efficiency allowed the company to significantly reduce build times during crucial periods.
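As a rough illustration of demand-based scaling, the sketch below computes how many build agents to request from the number of queued and running builds, capped by the Unity license seats mentioned in the goals. It assumes one build and one license seat per agent; the function and parameter names are hypothetical and do not come from the company's setup.

```python
def desired_agents(queued_builds, running_builds, license_seats, min_agents=0):
    """Size the agent fleet to current demand, never exceeding the license seats.

    Assumes one build per agent and one Unity seat per agent (illustrative only).
    """
    demand = queued_builds + running_builds
    # Keep at least `min_agents` warm, but never go above the seat limit.
    return min(max(demand, min_agents), license_seats)
```

The returned number could then be applied to an Auto Scaling group, for example through the Auto Scaling `SetDesiredCapacity` API, so that capacity rises during release weeks and falls back afterwards.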

Conclusion

The transition to AWS has not only bolstered the scalability and reliability of the build pipeline but has also fostered a culture of innovation within Good Job Games. The flexibility and agility of AWS infrastructure have enabled the company to experiment and iterate on its game development processes more quickly and efficiently, which is crucial for staying competitive in the fast-paced gaming industry.

By leveraging AWS Auto Scaling groups, Good Job Games has optimized its resource utilization, tailoring compute resources to current demands and eliminating unnecessary energy consumption. This dynamic scaling approach has not only reduced operational costs but also minimized the company’s environmental footprint, aligning with its sustainability objectives.

Quantifying Success

With this architectural model on AWS, Good Job Games has achieved significant improvements in operational efficiency:

- Build times have decreased by 43%, enhancing staff productivity, speeding up iteration, and accelerating development cycles.

- The incidence of infrastructure-related issues has fallen from 33% to less than 1%, markedly increasing the reliability of processes.
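To make the 43% figure concrete, the short sketch below works through the arithmetic. The 30-minute baseline is a made-up example, not a number from Good Job Games; only the 43% reduction comes from the results above.

```python
def build_time_after(baseline_minutes, reduction=0.43):
    """Build duration after applying a fractional reduction in build time."""
    return baseline_minutes * (1 - reduction)


def throughput_gain(reduction=0.43):
    """How many times more builds fit into the same machine time."""
    return 1 / (1 - reduction)
```

Under this assumption, a 30-minute build would take about 17.1 minutes, and the same machine time would fit roughly 1.75 times as many builds. A 43% reduction in build time is therefore a larger gain in throughput than the percentage alone suggests.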

As the company expands globally, AWS helps it scale and deliver content quickly worldwide for a seamless gaming experience.

Looking Ahead: Embracing the Future of Gaming on AWS

The journey with AWS has been a testament to the power of cloud computing in the gaming industry. The extensive range of services offered by AWS has allowed Good Job Games to build a flexible and scalable infrastructure that supports its evolving business needs, positioning the company for continued success in the dynamic world of gaming.

“As we forge ahead, we remain committed to harnessing the capabilities of AWS to push the boundaries of innovation, delivering exceptional gaming experiences that captivate and delight players across the globe.” – Kaan Turkmen, DevOps Engineer at Good Job Games.

Author: Didem Koskos Gurel