Threat modeling your generative AI workload to evaluate security risk

In this blog post, we’ll revisit the key steps for conducting effective threat modeling on generative AI workloads, along with additional best practices and examples, including some typical deliverables and outcomes you should look for across each stage… Threat Composer is an open source AWS to…

As generative AI models become increasingly integrated into business applications, it’s crucial to evaluate the potential security risks they introduce. At AWS re:Invent 2023, we presented on this topic, helping hundreds of customers maintain high-velocity decision-making for adopting new technologies securely. Customers who attended this session were able to better understand our recommended approach for qualifying security risk and maintaining a high security bar for the applications they build. In this blog post, we’ll revisit the key steps for conducting effective threat modeling on generative AI workloads, along with additional best practices and examples, including some typical deliverables and outcomes you should look for across each stage. Throughout this post we will link to specific examples that we created with the AWS Threat Composer tool. Threat Composer is an open source AWS tool you can use to document your threat model, available at no additional cost.

This post covers a practical approach for threat modeling a generative AI workload and assumes you know the basics of threat modeling. If you want to get an overview on threat modeling, we recommend that you check out this blog post. In addition, this post is part of a larger series on the security and compliance considerations of generative AI.

Why use threat modeling for generative AI?

Each new technology comes with its own learning curve when it comes to identifying and mitigating the unique security risks it presents. The adoption of generative AI into workloads is no different. These workloads, specifically the use of large language models (LLMs), introduce new security challenges because they can generate highly customized and non-deterministic outputs based on user prompts, which introduces the possibility for potential misuse or abuse. In addition, relies on access to large and customized data sets, often internal data sources which might contain sensitive information.

Although working with LLMs is a relatively new practice and has some unique and nuanced security risks and impacts, it’s crucial to remember that LLMs are only one portion of a larger workload. It’s important to apply the threat modeling approach to parts of the system, taking into account well-known threats such as injections or the compromise of credentials. Part 1 of the Securing generative AI AWS blog series, An introduction to the Generative AI Security Scoping Matrix, provides a great overview of what those nuances are, and how the risks differ depending on how you make use of LLMs in your organization.

The four stages of threat modeling for generative AI

As a quick refresher, threat modeling is a structured approach to identifying, understanding, addressing, and communicating the security risks in a given system or application. It is a fundamental element of the design phase that allows you to identify and implement appropriate mitigations and make fundamental security decisions as early as possible.

At AWS, threat modeling is a required input to initiating our Application Security (AppSec) process for the builder teams at AWS, and our builder teams get support from a Security Guardian to build threat models for their features or services.

A useful way of structuring the approach to threat modeling, created by expert Adam Shostack, involves answering four key questions. We’ll look into each one and how to apply them to your generative AI workload.

- What are we working on?

- What can go wrong?

- What are we going to do about it?

- Did we do a good enough job?

What are we working on?

This question aims to get a detailed understanding of your business context and application architecture. The detail that you’re looking for should already be captured as part of the comprehensive system documentation created by the builders of your generative AI solution. By starting from this documentation, you can streamline the threat modeling process and focus on identifying potential threats and vulnerabilities, rather than on re-creating foundational system knowledge.

Example outcomes or deliverables

At a minimum, builders should capture the key components of the solution, including data flows, assumptions, and design decisions. This lays the groundwork for identifying potential threats. Key elements to document are the following:

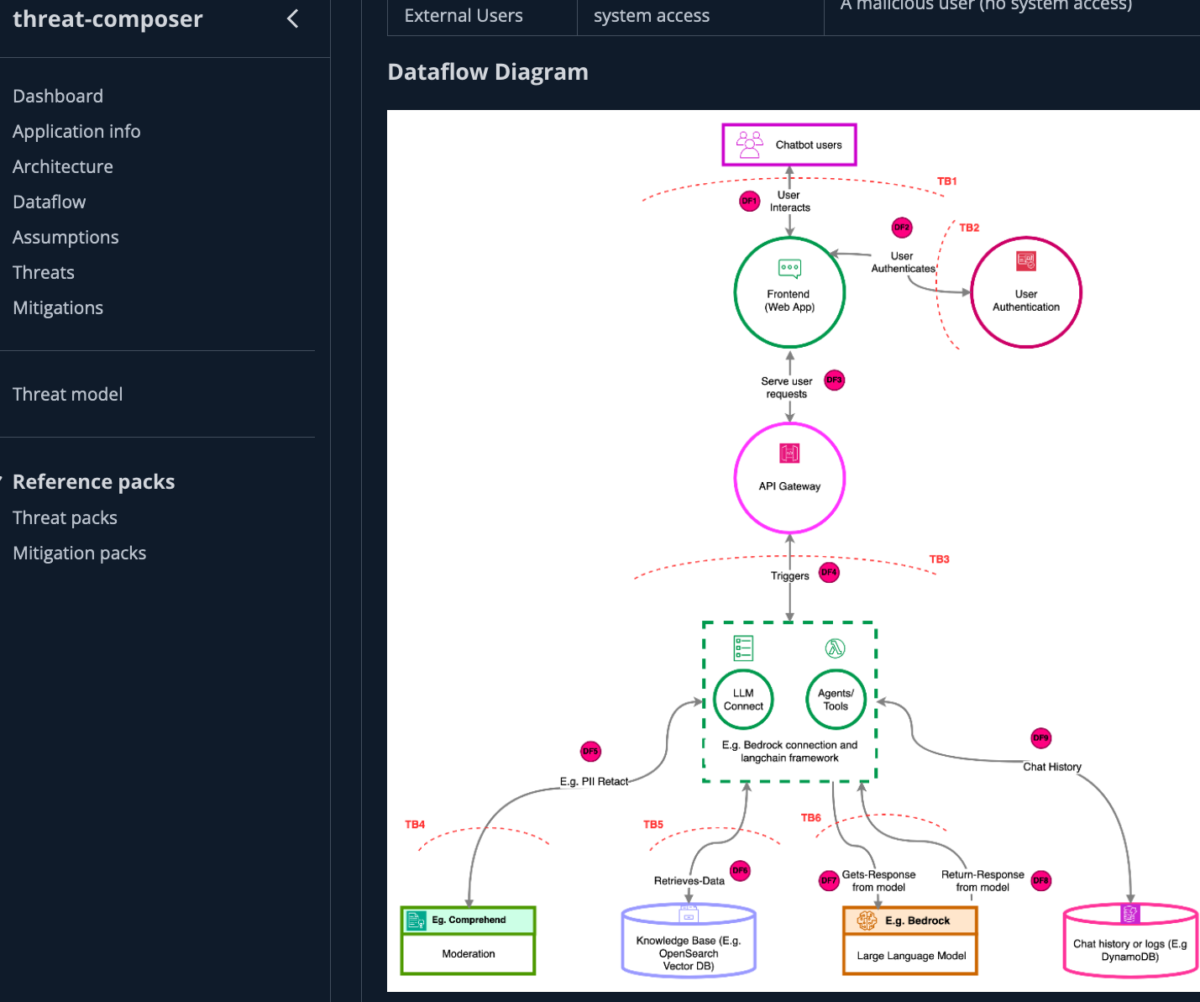

- Data flow diagrams (DFDs) that clearly illustrate the critical data flows of the application, from request to response, detailing what happens at each component or “hop”

- Well-articulated assumptions about how users are expected to interact with and ask questions of the system, or how the model will interact with other parts of the system. For example, in a RAG scenario where the model needs to retrieve data that is stored in other systems, how it will authenticate and translate that data into an appropriate response for the user

- Documentation of key design decisions made by the business, including the rationale behind these decisions

- Detailed business context about the application, such as whether it is considered a critical system, what types of data it handles (for example, confidential, high-integrity, high-availability), and the primary business concerns for the application (for example, data confidentiality, data integrity, system availability)

Figure 1 shows how Threat Composer allows you to input information about the application in the Application Information, Architecture, Dataflow, and Assumptions sections.

Figure 1: Threat composer dataflow diagram view for a generative AI chatbot example

What can go wrong?

For this question, you identify possible threats to your application using the context and information you gathered for the previous question. To help you identify possible threats, make use of existing repositories of knowledge, especially those related to the new technologies you are adopting. These often have tangible examples that you can apply to your application. Useful resources are the OWASP top 10 for LLMs, MITRE ATLAS framework, and the AI Risk Repository. You can also use a structured framework such as STRIDE to aid you in your thinking. Use the information you received from the “What are we building?” question and apply the most relevant STRIDE categories to your thinking. For example, if your application hosts critical data that the business has no risk appetite for losing, then you might think about the various Information Disclosure threats first.

You can write and document these possible threats to your application in the form of threat statements. Threat statements are a way to maintain consistency and conciseness when you document your threat. At AWS, we adhere to a threat grammar which follows the syntax:

A [threat source] with [prerequisites] can [threat action] which leads to [threat impact], negatively impacting [impacted assets].

This threat grammar structure helps you to maintain consistency and allows you to iteratively write useful threat statements. As shown in Figure 2, Threat Composer provides you with this structure for new threat statements and includes examples to assist you.

Figure 2: Threat composer threat statement builder

Once you go through the process of creating threat statements, you will have a summary of “what can go wrong.” You can then define attack steps, as an analysis of “how it can go wrong.” It’s not always necessary to define attack steps for each threat statement because there are many ways a threat might actually happen. Going through the exercise of identifying and documenting a few different threat mechanisms can help to get specific mitigations that you can associate with each attack step for a more effective defense-in-depth approach.

Threat Composer gives you the ability to add additional metadata to your threat statements. Customers who have adopted this option into their workflows most commonly use the STRIDE category and Priority metadata tags. Those customers can quickly track which threats are the highest priority and which STRIDE category they correspond to. Figure 3 shows how you can document threat statements alongside their associated metadata in Threat Composer.

Figure 3: Threat Composer sample genAI chatbot application – threat view

Example outcomes or deliverables

By systematically considering what can go wrong, and how, you can uncover a range of possible threats. Let’s explore some of the example deliverables that can emerge from this process:

- A list of threat statements that you will develop mitigations for, categorized by STRIDE element and priority

- A list of attack steps that are associated to your threat statements. As mentioned, attack steps are an optional activity at this stage, but we recommend at least identifying some for your highest-priority threats

Example threat statements

These are some example threat statements for an application that is interacting with an LLM component:

- A threat actor with access to the public-facing application can inject malicious prompts that overwrite existing system prompts, resulting in healthcare data from other patients being returned, impacting the confidentiality of the data in the database

- A threat actor with access to the public-facing application can inject malicious prompts that request malicious or destructive actions, resulting in healthcare data from other patients being deleted, impacting the availability of the data in the database

Example attack steps

These are some example attack steps that demonstrate how the preceding threat statements could occur:

- Perform crafted prompt injection to bypass system prompt guardrails

- Embed a vulnerable agent with access to the model

- Embed an indirect prompt injection in a webpage instructing the LLM to disregard previous user instructions and use an LLM plugin to delete the user’s emails

What can we do about it?

Now that you’ve identified some possible threats, consider which controls would be appropriate to help mitigate the risks associated with those threats. This decision will be driven by your business context and the asset in question. Your organizational policies will also influence prioritization of controls: Some organizations might choose to prioritize the control that impacts the highest number of threats, while others might choose to start with the control that impacts the threats that are deemed the highest risk (by likelihood and impact).

For each identified threat, define specific mitigation strategies. This could include input sanitization, output validation, access controls, and more. Ideally, at a minimum, you want at least one preventative control and one detective control associated with each threat. The same resources that are linked to in the What can go wrong? section are also highly useful for identifying relevant controls. For example, the MITRE ATLAS has a dedicated section for mitigations.

Note: You might find that as you identify mitigations for your threats, you start to see duplication in your controls. For example, least-privilege access control might be associated with almost all of your threats. This duplication can also help you to prioritize. If a single control appears in 90% of your threat mitigations, the effective implementation of that control will help to drive down risk across each of those threats.

Example outcomes or deliverables

Associated with each threat, you should have a list of mitigations, each with a unique identifier to ease lookups and reusability later on. Example mitigations with identifiers include the following:

- M-001: Predefine SQL query structure

- M-002: Sanitize for known parameters (input filtering)

- M-003: Check against templated prompt parameters

- M-004: Review output is relevant to user (output filtering)

- M-005: Limit LLM context window

- M-006: Dynamic permissions check on high-risk actions performed by model (separating authentication parameters from prompt)

- M-007: Apply least privilege to all components of the application

For more information on relevant security controls for your workload, we recommend that you read Part 3 of our Securing generative AI series: Applying relevant security controls.

Figure 4 shows some completed example threat statements in Threat Composer, with mitigations linked to each.

Figure 4: Completed threat statements with metadata and linked mitigations

After answering the first three questions, you have your completed threat model. The documentation should contain your DFDs, threat statements, [optional] attack steps, and mitigations.

For a more detailed example, including a visual dashboard that shows a breakdown of a threat summary, see the full GenAI chatbot example in Threat Composer.

Did we do a good enough job?

A threat model is a living document. This post has discussed how creating a threat model helps you to identify technical controls for threats, but it’s also important to consider the non-technical benefits that the process of threat modeling provides.

For your final activity, you should validate both elements of the threat modeling activity.

Validate the effectiveness of the identified mitigation: Some of the mitigations you identify might be new, and some you might already have had in place. Regardless, it’s important to continuously test and verify that your security measures are working as intended. This could involve penetration testing or automated security scans. At AWS, threat models serve as inputs to automated test cases to be embedded in the pipeline. The threats defined are also used to define the scope of the penetration testing, to confirm whether those threats have been mitigated sufficiently.

Validate the effectiveness of the process: Threat modeling is fundamentally a human activity. It requires interaction across your business, builder teams, and security functions. Those closest to the creation and operations of the application should own the threat model document and revisit it often, with support from their security team (or Security Guardian equivalent). How often this is done will depend on your organizational policies and the criticality of the workload, though it is important to define triggers that will initiate a review of the threat model. Example triggers can include threat intelligence updates, new features that significantly change data flows, or new features that impact security-related aspects of the system (such as authentication or authorization, or logging). Validating your process periodically is especially important when you adopt new technologies because the threat landscape for these evolves faster than usual.

Performing a retrospective on the threat modeling process is also a good way to work through and discuss what worked well, what didn’t work well, and what changes you will commit to the next time the threat model is revisited.

Example outputs

These are some example outputs for this step of the process:

- Automated test case definitions based on mitigations

- A defined scope for penetration testing, and test cases based on threats

- A living document for the threat model that is stored alongside application documentation (including a data flow diagram)

- A retrospective overview, including lessons learned and feedback from the threat modeling participants, and what will be done next time to improve

Conclusion

In this blog post, we explored a practical and proactive approach to threat modeling for generative AI workloads. The key steps we covered provide a structured framework for conducting effective threat modeling, from understanding the business context and application architecture to identifying potential threats, defining mitigation strategies, and validating the overall effectiveness of the process.

By following this approach, organizations can better equip themselves to maintain a high security bar as they adopt generative AI technologies. The threat modeling process not only helps to mitigate known risks, but also fosters a culture of security-mindedness that is crucial for organizations to adopt. This can help your organization to unlock the full potential of these powerful technologies while maintaining the security and privacy of your systems and data.

Want to look deeper into additional areas of generative AI security? Check out the other posts in the Securing Generative AI series:

- Part 1 – Securing generative AI: An introduction to the Generative AI Security Scoping Matrix

- Part 2 – Designing generative AI workloads for resilience

- Part 3 – Securing Generative AI: Applying relevant security controls

- Part 4 – Security Generative AI: Data, compliance, and privacy considerations

Author: Danny Cortegaca