Automate the machine learning model approval process with Amazon SageMaker Model Registry and Amazon SageMaker Pipelines

Innovations in artificial intelligence (AI) and machine learning (ML) are causing organizations to take a fresh look at the possibilities these technologies can offer. As you aim to bring your proofs of concept to production at an enterprise scale, you may experience challenges aligning with the strict security compliance requirements of your organization. In the face of these challenges, MLOps offers an important path to shorten your time to production while increasing confidence in the quality of deployed workloads by automating governance processes.

ML models in production are not static artifacts. They reflect the environment where they are deployed and, therefore, require comprehensive monitoring mechanisms for model quality, bias, and feature importance. Organizations often want to introduce additional compliance checks that validate that the model aligns with their organizational standards before it is deployed. These frequent manual checks can create long lead times to deliver value to customers. Automating these checks allows them to be repeated regularly and consistently, rather than organizations having to rely on infrequent manual point-in-time checks.

This post illustrates how to use common architecture principles to transition from a manual monitoring process to one that is automated. You can use these principles and existing AWS services such as Amazon SageMaker Model Registry and Amazon SageMaker Pipelines to deliver innovative solutions to your customers while maintaining compliance for your ML workloads.

Challenge

As AI becomes ubiquitous, it’s increasingly used to process information and interact with customers in sensitive contexts. Suppose a tax agency is interacting with its users through a chatbot. It’s important that this new system aligns with organizational guidelines, so that developers can have a high degree of confidence that it responds accurately and without bias. At maturity, an organization may have tens or even hundreds of models in production. How can you make sure every model is properly vetted before its first deployment and on every subsequent deployment?

Traditionally, organizations have created manual review processes that must approve updated code before it becomes available to the public, through mechanisms such as an Enterprise Review Committee (ERC), Enterprise Review Board (ERB), or Change Advisory Board (CAB).

Just as mechanisms have evolved with the rise of continuous integration and continuous delivery (CI/CD), MLOps can reduce the need for manual processes while increasing the frequency and thoroughness of quality checks. Through automation, you can scale in-demand skillsets, such as model and data analysis, introducing and enforcing in-depth analysis of your models at scale across diverse product teams.

In this post, we use SageMaker Pipelines to define the required compliance checks as code. This allows you to introduce analysis of arbitrary complexity while not being limited by the busy schedules of highly technical individuals. Because the automation takes care of repetitive analytics tasks, technical resources can focus on relentlessly improving the quality and thoroughness of the MLOps pipeline to improve compliance posture, and make sure checks are performing as expected.

Deployment of an ML model to production generally requires at least two artifacts to be approved: the model and the endpoint. In our example, the organization is willing to approve a model for deployment if it passes the organization’s checks for model quality, bias, and feature importance. The endpoint, in turn, can be approved for production if it performs as expected when deployed into a production-like environment. In a subsequent post, we walk you through how to deploy a model and implement sample compliance checks. In this post, we also discuss how you can extend this process to large language models (LLMs), which produce a varied set of outputs and introduce complexities for automated quality assurance checks.

Aligning with AWS multi-account best practices

The solution outlined in this post spans several accounts in a given AWS organization. For a deeper look at the various components required for an AWS organization multi-account enterprise ML environment, see MLOps foundation roadmap for enterprises with Amazon SageMaker. In this post, we refer to the advanced analytics governance account as the AI/ML governance account. We focus on the development of the enforcement mechanism for the centralized automated model approval within this account.

This account houses centralized components such as a model registry on SageMaker Model Registry, ML project templates on SageMaker Projects, model cards on Amazon SageMaker Model Cards, and container images on Amazon Elastic Container Registry (Amazon ECR).

We use an isolated environment (in this case, a separate AWS environment) to deploy and promote across various environments. You can modify the strategies discussed in this post along the spectrum of centralized vs. decentralized depending on the posture of your organization. For this example, we provide a centralized model. You can also extend this model to align with strict compliance requirements. For example, the AI/ML governance team trusts that the development teams are sending the correct bias and explainability reports for a given model. Additional checks could be included to “trust but verify” and further bolster the posture of this organization. Additional complexities such as this are not addressed in this post. To dive further into the topic of MLOps secure implementations, refer to Amazon SageMaker MLOps: from idea to production in six steps.

Solution overview

The following diagram illustrates the solution architecture using SageMaker Pipelines to automate model approval.

The workflow comprises a comprehensive process for model building, training, evaluation, and approval within an organization containing different AWS accounts, integrating various AWS services. The detailed steps are as follows:

- Data scientists from the product team use Amazon SageMaker Studio to create Jupyter notebooks used to facilitate data preprocessing and model pre-building. The code is committed to AWS CodeCommit, a managed source control service. Optionally, you can commit to third-party version control systems such as GitHub, GitLab, or Enterprise Git.

- The commit to CodeCommit invokes the SageMaker pipeline, which runs several steps, including model building and training, and running processing jobs using Amazon SageMaker Clarify to generate bias and explainability reports.

- SageMaker Clarify processes and stores its outputs, including model artifacts and reports in JSON format, in an Amazon Simple Storage Service (Amazon S3) bucket.

- A model is registered in the SageMaker model registry with a model version.

- The Amazon S3 PUT action invokes an AWS Lambda function.

- This Lambda function copies all the artifacts from the S3 bucket in the development account to another S3 bucket in the AI/ML governance account, providing restricted access and data integrity. This post assumes your accounts and S3 buckets are in the same AWS Region. For cross-Region copying, see Copy data from an S3 bucket to another account and Region by using the AWS CLI.

- Registering the model invokes a default Amazon CloudWatch event associated with SageMaker model registry actions.

- The CloudWatch event is consumed by Amazon EventBridge, which invokes another Lambda function.

- This Lambda function is tasked with starting the SageMaker approval pipeline.

- The SageMaker approval pipeline evaluates the artifacts against predefined benchmarks to determine if they meet the approval criteria.

- Based on the evaluation, the pipeline updates the model status to approved or rejected accordingly.
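The Lambda function that copies artifacts from the development account to the AI/ML governance account can be sketched as follows. This is a minimal sketch, not the authors’ implementation: it assumes the function is subscribed to S3 PUT notifications on the development bucket, reads a hypothetical GOVERNANCE_BUCKET environment variable, and assumes the destination bucket policy grants the function’s role s3:PutObject. The copy_object API handles objects up to 5 GB, which comfortably covers JSON reports.

```python
import os
from urllib.parse import unquote_plus


def copy_records(s3_client, event, destination_bucket):
    """Copy every object referenced in an S3 event to the governance bucket.

    Keys are kept unchanged so report locations stay predictable.
    Returns the list of copied keys.
    """
    copied = []
    for record in event.get("Records", []):
        source_bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event notifications are URL-encoded.
        key = unquote_plus(record["s3"]["object"]["key"])
        s3_client.copy_object(
            Bucket=destination_bucket,
            Key=key,
            CopySource={"Bucket": source_bucket, "Key": key},
        )
        copied.append(key)
    return copied


def lambda_handler(event, context, s3_client=None):
    # GOVERNANCE_BUCKET is a hypothetical environment variable naming the
    # bucket in the AI/ML governance account.
    destination_bucket = os.environ["GOVERNANCE_BUCKET"]
    if s3_client is None:
        import boto3  # bundled with the Lambda Python runtime

        s3_client = boto3.client("s3")
    return {"copied": copy_records(s3_client, event, destination_bucket)}
```

The optional s3_client argument is there only so the handler can be exercised locally with a stub; Lambda calls it with just the event and context.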

This workflow provides a robust, automated process for model approval using AWS’s secure, scalable infrastructure and services. Each step is designed to make sure that only models meeting the set criteria are approved, maintaining high standards for model performance and fairness.

Prerequisites

To implement this solution, you need to first create and register an ML model in the SageMaker model registry with the necessary SageMaker Clarify artifacts. You can create and run the pipeline by following the example provided in the following GitHub repository.

The following sections assume that a model package version has been registered with status Pending Manual Approval. This status allows you to build an approval workflow. You can either have a manual approver or set up an automated approval workflow based on metrics checks in the aforementioned reports.

Build your pipeline

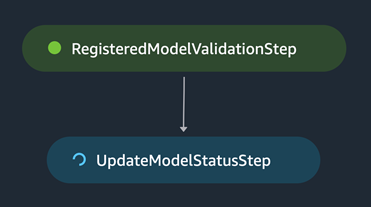

SageMaker Pipelines allows you to define a series of interconnected steps defined as code using the Pipelines SDK. You can extend the pipeline to help meet your organizational needs with both automated and manual approval steps. In this example, we build the pipeline to include two major steps. The first step evaluates artifacts uploaded to the AI/ML governance account by the model build pipeline against threshold values set by model registry administrators for model quality, bias, and feature importance. The second step receives the evaluation and updates the model’s status and metadata based on the values received. The pipeline is represented in SageMaker Pipelines by the following DAG.

Next, we dive into the code required for the pipeline and its steps. First, we define a pipeline session to help manage AWS service integration as we define our pipeline. This can be done as follows:
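A minimal sketch of that session setup, assuming the SageMaker Python SDK v2 and default AWS credentials; the helper name make_pipeline_session is hypothetical, not taken from the original code:

```python
def make_pipeline_session(region_name=None):
    """Return a PipelineSession for defining (not immediately running) steps.

    With a PipelineSession, calls such as processor.run() return step
    arguments that a pipeline step can consume instead of starting a job.
    """
    # Imported lazily so this module can be loaded without the SDK installed.
    import boto3
    from sagemaker.workflow.pipeline_context import PipelineSession

    return PipelineSession(boto_session=boto3.Session(region_name=region_name))
```

For example, pipeline_session = make_pipeline_session() followed by pipeline_session.default_bucket() gives you the session’s default S3 bucket.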

Each step runs as a SageMaker Processor for which we specify a small instance type due to the minimal compute requirements of our pipeline. The processor can be defined as follows:
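The processor might look like the following sketch. The choice of SKLearnProcessor, framework version 1.2-1, ml.t3.medium, and the helper name are assumptions for illustration; any processor whose container includes boto3 could run these scripts.

```python
def make_step_processor(role_arn, pipeline_session, instance_type="ml.t3.medium"):
    """Build one small processor shared by both approval steps."""
    from sagemaker.sklearn.processing import SKLearnProcessor

    return SKLearnProcessor(
        framework_version="1.2-1",  # assumed version; any supported one works
        role=role_arn,
        # Small instance: the scripts only call AWS APIs and parse JSON.
        instance_type=instance_type,
        instance_count=1,
        base_job_name="model-approval",
        sagemaker_session=pipeline_session,
    )
```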

We then define each pipeline step by passing the step arguments returned by step_processor.run(…) as the step’s input parameter, so that our custom script runs inside the defined environment.

Validate model package artifacts

The first step takes two arguments: default_bucket and model_package_group_name. It outputs the results of the checks in JSON format stored in Amazon S3. The step is defined as follows:
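The following sketch shows one way to define this step, assuming a processor created with a PipelineSession. The script name validate_artifacts.py, the output name checks, and the helper make_validate_step are hypothetical.

```python
def make_validate_step(step_processor, default_bucket, model_package_group_name):
    """Define the ProcessingStep that validates model package artifacts.

    default_bucket and model_package_group_name may be plain strings or
    pipeline ParameterString objects; both work as job arguments.
    """
    from sagemaker.processing import ProcessingOutput
    from sagemaker.workflow.steps import ProcessingStep

    step_args = step_processor.run(
        code="validate_artifacts.py",  # hypothetical script name
        arguments=[
            "--default-bucket", default_bucket,
            "--model-package-group-name", model_package_group_name,
        ],
        outputs=[
            # Files written here (checks.json) are uploaded to Amazon S3.
            ProcessingOutput(output_name="checks", source="/opt/ml/processing/output"),
        ],
    )
    return ProcessingStep(name="ValidateModelPackageArtifacts", step_args=step_args)
```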

This step runs the custom script passed to the code parameter. We now explore this script in more detail.

Values passed to arguments can be parsed using standard methods like argparse and will be used throughout the script. We use these values to retrieve the model package. We then parse the model package’s metadata to find the location of the model quality, bias, and explainability reports. See the following code:
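A sketch of this part of the script follows, assuming boto3 and the SageMaker ListModelPackages and DescribeModelPackage APIs. The helper names are hypothetical, and the ModelMetrics keys that hold each report (for example, Bias.Report vs. Bias.PostTrainingReport) depend on how your build pipeline registered the model, so adjust them to match your packages.

```python
import argparse


def parse_args(argv=None):
    parser = argparse.ArgumentParser(description="Validate model package artifacts")
    parser.add_argument("--default-bucket", required=True)
    parser.add_argument("--model-package-group-name", required=True)
    return parser.parse_args(argv)


def latest_pending_package_arn(sm_client, group_name):
    """Return the ARN of the newest package awaiting approval in the group."""
    response = sm_client.list_model_packages(
        ModelPackageGroupName=group_name,
        ModelApprovalStatus="PendingManualApproval",
        SortBy="CreationTime",
        SortOrder="Descending",
        MaxResults=1,
    )
    summaries = response["ModelPackageSummaryList"]
    if not summaries:
        raise ValueError(f"No pending model package in group {group_name!r}")
    return summaries[0]["ModelPackageArn"]


def report_locations(sm_client, package_arn):
    """Map each report type to the S3 URI recorded in the package's ModelMetrics."""
    package = sm_client.describe_model_package(ModelPackageName=package_arn)
    metrics = package.get("ModelMetrics", {})

    def s3_uri(section, entry):
        return metrics.get(section, {}).get(entry, {}).get("S3Uri")

    return {
        "model_quality": s3_uri("ModelQuality", "Statistics"),
        # Fall back to the post-training report if no combined report exists.
        "bias": s3_uri("Bias", "Report") or s3_uri("Bias", "PostTrainingReport"),
        "explainability": s3_uri("Explainability", "Report"),
    }
```

Inside the processing script, you would call parse_args(), create a client with boto3.client("sagemaker"), and pass it to these helpers; downloading each report from its S3 URI is left out for brevity.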

The reports retrieved are simple JSON files that we can then parse. In the following example, we retrieve the treatment equity metric and compare it to our threshold to return a True or False result. Treatment equity is defined as the difference in the ratio of false negatives to false positives between the advantaged and disadvantaged groups. We set the threshold to 0.8; this value is arbitrary.
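A sketch of this check follows. It assumes the post-training section of a SageMaker Clarify bias report is laid out as post_training_bias_metrics → facets → entries that each carry a metrics list of name/value pairs, with TE as the treatment equity name; verify this against your own report. It also assumes a model passes when |TE| is at most the threshold, which is one reasonable reading of the check rather than the authors’ exact rule. The helper treatment_equity shows the definition used in the Clarify documentation, TE = FN_d/FP_d - FN_a/FP_a, where d and a denote the disadvantaged and advantaged facets.

```python
def treatment_equity(fn_d, fp_d, fn_a, fp_a):
    """TE = FN_d / FP_d - FN_a / FP_a (d: disadvantaged facet, a: advantaged facet)."""
    return fn_d / fp_d - fn_a / fp_a


def metric_values(report, metric_name):
    """Collect every value of a post-training bias metric across facets."""
    facets = report.get("post_training_bias_metrics", {}).get("facets", {})
    values = []
    for entries in facets.values():
        for entry in entries:
            for metric in entry.get("metrics", []):
                if metric.get("name") == metric_name and metric.get("value") is not None:
                    values.append(metric["value"])
    return values


def check_treatment_equity(report, threshold=0.8):
    """True if TE is present and within +/- threshold for every facet value."""
    values = metric_values(report, "TE")
    return bool(values) and all(abs(v) <= threshold for v in values)
```

Treating a missing metric as a failure is a deliberate choice in this sketch: a report that silently omits TE should not approve a model.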

After running through the measures of interest, we write the true/false check results to a JSON file that is copied to Amazon S3 through the output of the ProcessingStep.
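A sketch of that final write, assuming the step’s ProcessingOutput uses /opt/ml/processing/output as its source directory. Recording the model package ARN alongside the checks is a hypothetical addition so that a later step knows which package to update.

```python
import json
import os


def write_checks(model_package_arn, checks, output_dir="/opt/ml/processing/output"):
    """Write check results to checks.json in the ProcessingOutput source directory."""
    os.makedirs(output_dir, exist_ok=True)
    path = os.path.join(output_dir, "checks.json")
    payload = {
        "model_package_arn": model_package_arn,
        # Coerce every result to a strict boolean for the next step.
        "checks": {name: bool(passed) for name, passed in checks.items()},
    }
    with open(path, "w") as f:
        json.dump(payload, f, indent=2)
    return path
```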

Update the model package status in the model registry

When the initial step is complete, we use the JSON file created in Amazon S3 as input to update the model package’s status and metadata. See the following code:
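One way to wire the two steps together is sketched below. It assumes the first step exposes a ProcessingOutput named checks; reading that output’s S3Uri from the step properties both passes checks.json in as input and makes this step run after validation. The script and helper names are hypothetical.

```python
def make_update_step(step_processor, validate_step):
    """Define the ProcessingStep that approves or rejects the model package."""
    from sagemaker.processing import ProcessingInput
    from sagemaker.workflow.steps import ProcessingStep

    # Property reference resolved at run time; it also creates the step dependency.
    checks_uri = validate_step.properties.ProcessingOutputConfig.Outputs["checks"].S3Output.S3Uri
    step_args = step_processor.run(
        code="update_model_package.py",  # hypothetical script name
        inputs=[ProcessingInput(source=checks_uri, destination="/opt/ml/processing/input")],
    )
    return ProcessingStep(name="UpdateModelPackageStatus", step_args=step_args)
```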

This step runs the custom script passed to the code parameter. We now explore this script in more detail. First, parse the values in checks.json to evaluate if the model passed all checks or review the reasons for failure:
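A sketch of the parsing logic, assuming checks.json holds a checks object that maps each check name to true or false (this layout is an assumption) and that the file is mounted at /opt/ml/processing/input:

```python
import json
import os


def load_checks(input_dir="/opt/ml/processing/input"):
    with open(os.path.join(input_dir, "checks.json")) as f:
        return json.load(f)


def evaluate_checks(payload):
    """Return (approved, failed_check_names) for a checks.json payload."""
    failed = sorted(name for name, passed in payload["checks"].items() if not passed)
    return not failed, failed
```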

After we know if the model should be approved or rejected, we update the model status and metadata as follows:
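The status update can be sketched with the boto3 UpdateModelPackage API. The metadata keys automated-approval and failed-checks are hypothetical; CustomerMetadataProperties accepts string keys and values of your choosing.

```python
def update_model_status(sm_client, model_package_arn, approved, failed_checks):
    """Set the package to Approved or Rejected and record the reason."""
    status = "Approved" if approved else "Rejected"
    if approved:
        description = "All automated compliance checks passed."
    else:
        description = "Failed automated checks: " + ", ".join(failed_checks)
    sm_client.update_model_package(
        ModelPackageArn=model_package_arn,
        ModelApprovalStatus=status,
        ApprovalDescription=description,
        # Metadata values must be strings; these keys are illustrative.
        CustomerMetadataProperties={
            "automated-approval": status,
            "failed-checks": ",".join(failed_checks) or "none",
        },
    )
    return status
```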

This step produces a model with a status of Approved or Rejected based on the set of checks specified in the first step.

Orchestrate the steps as a SageMaker pipeline

We orchestrate the previous steps as a SageMaker pipeline with two parameter inputs passed as arguments to the various steps:
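A sketch of the orchestration follows, assuming the SageMaker Python SDK. The parameter names DefaultBucket and ModelPackageGroupName and the pipeline name are assumptions; in practice you create the parameters first, pass them into the step definitions, and then build the pipeline from those steps.

```python
def define_parameters():
    """The two pipeline parameters passed as arguments to the steps."""
    from sagemaker.workflow.parameters import ParameterString

    return [
        ParameterString(name="DefaultBucket"),
        ParameterString(name="ModelPackageGroupName"),
    ]


def make_approval_pipeline(pipeline_session, parameters, steps, name="model-approval-pipeline"):
    """Assemble the steps into a pipeline; append to `steps` to extend it."""
    from sagemaker.workflow.pipeline import Pipeline

    return Pipeline(
        name=name,
        parameters=parameters,
        steps=steps,
        sagemaker_session=pipeline_session,
    )
```

Calling pipeline.upsert(role_arn=...) on the result creates or updates the pipeline definition so that it can be started by name.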

It’s straightforward to extend this pipeline by adding elements into the list passed to the steps parameter. In the next section, we explore how to run this pipeline as new model packages are registered to our model registry.

Run the event-driven pipeline

In this section, we outline how to invoke the pipeline using an EventBridge rule and Lambda function.

Create a Lambda function and select the Python 3.9 runtime. The following function retrieves the model package ARN, the model package group name, and the S3 bucket where the artifacts are stored based on the event. It then starts running the pipeline using these values:
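The function can be sketched as follows. This is not the authors’ code: the environment variables PIPELINE_NAME and ARTIFACT_BUCKET and the parameter names DefaultBucket and ModelPackageGroupName are assumptions, and the bucket is read from configuration here rather than derived from the event. The model package ARN and group name come from the detail field of the SageMaker model package state change event.

```python
import os


def pipeline_parameters(event, default_bucket):
    """Build StartPipelineExecution parameters from a model package event."""
    detail = event["detail"]
    return [
        {"Name": "DefaultBucket", "Value": default_bucket},
        {"Name": "ModelPackageGroupName", "Value": detail["ModelPackageGroupName"]},
    ]


def lambda_handler(event, context, sm_client=None):
    # PIPELINE_NAME and ARTIFACT_BUCKET are hypothetical environment variables.
    pipeline_name = os.environ["PIPELINE_NAME"]
    default_bucket = os.environ["ARTIFACT_BUCKET"]
    package_arn = event["detail"]["ModelPackageArn"]
    if sm_client is None:
        import boto3  # bundled with the Lambda Python runtime

        sm_client = boto3.client("sagemaker")
    response = sm_client.start_pipeline_execution(
        PipelineName=pipeline_name,
        PipelineParameters=pipeline_parameters(event, default_bucket),
        # Record which package triggered this run, for auditability.
        PipelineExecutionDescription=f"Automated approval for {package_arn}",
    )
    return {"PipelineExecutionArn": response["PipelineExecutionArn"]}
```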

After defining the Lambda function, we create the EventBridge rule to automatically invoke the function when a new model package is registered with PendingManualApproval into the model registry. You can use AWS CloudFormation and the following template to create the rule:
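The CloudFormation template itself isn’t reproduced here. As an illustration in Python instead, the following sketch creates an equivalent rule with boto3 (the CloudFormation counterparts are the AWS::Events::Rule and AWS::Lambda::Permission resources): it matches SageMaker Model Package State Change events whose status is PendingManualApproval, targets the Lambda function, and grants EventBridge permission to invoke it. The rule name, statement ID, and helper name are hypothetical.

```python
import json

# Matches model packages whose approval status is PendingManualApproval.
EVENT_PATTERN = {
    "source": ["aws.sagemaker"],
    "detail-type": ["SageMaker Model Package State Change"],
    "detail": {"ModelApprovalStatus": ["PendingManualApproval"]},
}


def create_approval_rule(events_client, lambda_client, rule_name, function_arn):
    """Create the rule, target the Lambda function, and allow EventBridge to invoke it."""
    rule_arn = events_client.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(EVENT_PATTERN),
        State="ENABLED",
    )["RuleArn"]
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "StartApprovalPipeline", "Arn": function_arn}],
    )
    # Without this resource-based permission, EventBridge cannot call the function.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )
    return rule_arn
```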

We now have a two-step SageMaker pipeline that is invoked whenever a new model is registered; it evaluates model quality, bias, and feature importance metrics and updates the model status accordingly.

Applying this approach to generative AI models

In this section, we explore how the complexities introduced by LLMs change the automated monitoring workflow.

Traditional ML models typically produce concise outputs with obvious ground truths in their training dataset. In contrast, LLMs can generate long, nuanced sequences that may have little to no ground truth due to the autoregressive way this class of model is trained. This strongly influences various components of the governance pipeline we’ve described.

For instance, in traditional ML models, bias is detected by looking at the distributions of labels over different population subsets (for example, male vs. female). The labels (often a single number or a few numbers) are a clear and simple signal used to measure bias. In contrast, generative models produce lengthy and complex answers, which don’t provide an obvious signal to be used for monitoring. HELM (a holistic framework for evaluating foundation models) allows you to simplify monitoring by untangling the evaluation process into metrics of concern. This includes accuracy, calibration and uncertainty, robustness, fairness, bias and stereotypes, toxicity, and efficiency. We then apply downstream processes to measure each of these metrics independently. This is generally done using standardized datasets composed of examples and a variety of accepted responses.

We concretely evaluate four metrics of interest to any governance pipeline for LLMs, as described in HELM: memorization and copyright, disinformation, bias, and toxicity. This is done by collecting inference results from the model pushed to the model registry. The benchmarks include:

- Memorization and copyright with books from BooksCorpus, popular books from a bestseller list, and source code of the Linux kernel. This can be quickly extended to include a number of copyrighted works.

- Disinformation with headlines from the MisinfoReactionFrames dataset, which has false headlines across a number of topics.

- Bias with Bias Benchmark for Question Answering (BBQ). This QA dataset works to highlight biases affecting various social groups.

- Toxicity with Bias in Open-ended Language Generation Dataset (BOLD), which benchmarks across profession, gender, race, religion, and political ideology.
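To make the memorization and copyright benchmark above concrete, the following sketch prompts a model with the opening of a passage and measures how much of the true continuation it reproduces verbatim, reducing the behavior to a single number. It is a simplified stand-in inspired by HELM’s copyright scenario, not HELM’s implementation: it uses whitespace tokens and a longest-common-prefix ratio, and generate is a hypothetical callable wrapping your model endpoint.

```python
def common_prefix_tokens(completion, reference):
    """Count whitespace tokens shared verbatim at the start of both strings."""
    count = 0
    for generated, expected in zip(completion.split(), reference.split()):
        if generated != expected:
            break
        count += 1
    return count


def memorization_score(pairs, generate):
    """Average fraction of each reference continuation reproduced verbatim.

    pairs: iterable of (prompt_prefix, true_continuation) taken from a work.
    generate: hypothetical callable mapping a prompt to the model's completion.
    Returns a value in [0, 1]; higher means more verbatim regurgitation.
    """
    scores = []
    for prefix, reference in pairs:
        reference_length = len(reference.split())
        if reference_length == 0:
            continue  # nothing to compare against
        completion = generate(prefix)
        scores.append(common_prefix_tokens(completion, reference) / reference_length)
    return sum(scores) / len(scores) if scores else 0.0
```

A governance pipeline could then compare this score to a threshold, in the same way the treatment equity check works for traditional models.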

Each of these datasets is publicly available. They each allow complex aspects of a generative model’s behavior to be isolated and distilled down to a single number. This flow is described in the following architecture.

For a detailed view of this topic along with important mechanisms to scale in production, refer to Operationalize LLM Evaluation at Scale using Amazon SageMaker Clarify and MLOps services.

Conclusion

In this post, we discussed a sample solution to begin automating your compliance checks for models going into production. As AI/ML becomes increasingly common, organizations require new tools to codify the expertise of their highly skilled employees in the AI/ML space. By embedding your expertise as code and running these automated checks against models using event-driven architectures, you can increase both the speed and quality of models, because you can run these checks as needed rather than relying on the availability of individuals for manual compliance or quality assurance reviews. By applying well-known CI/CD techniques from the application development lifecycle to the ML modeling lifecycle, organizations can scale in the era of generative AI.

If you have any thoughts or questions, please leave them in the comments section.

About the Authors

Jayson Sizer McIntosh is a Senior Solutions Architect at Amazon Web Services (AWS) in the World Wide Public Sector (WWPS) based in Ottawa (Canada) where he primarily works with public sector customers as an IT generalist with a focus on Dev(Sec)Ops/CICD. Bringing his experience implementing cloud solutions in high compliance environments, he is passionate about helping customers successfully deliver modern cloud-based services to their users.

Nicolas Bernier is an AI/ML Solutions Architect, part of the Canadian Public Sector team at AWS. He is currently conducting research in Federated Learning and holds five AWS certifications, including the ML Specialty Certification. Nicolas is passionate about helping customers deepen their knowledge of AWS by working with them to translate their business challenges into technical solutions.

Pooja Ayre is a seasoned IT professional with over 9 years of experience in product development, having worn multiple hats throughout her career. For the past two years, she has been with AWS as a Solutions Architect, specializing in AI/ML. Pooja is passionate about technology and dedicated to finding innovative solutions that help customers overcome their roadblocks and achieve their business goals through the strategic use of technology. Her deep expertise and commitment to excellence make her a trusted advisor in the IT industry.

Author: Jason Sizer McIntosh