Automating multi-AZ high availability for WebLogic administration server

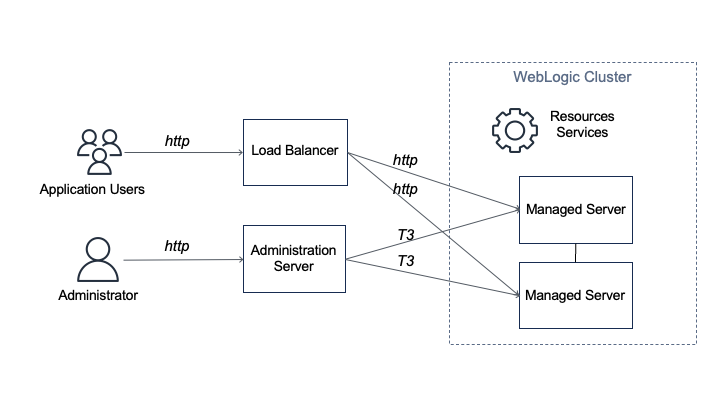


Oracle WebLogic Server is used by enterprises to power production workloads, including Oracle E-Business Suite (EBS) and Oracle Fusion Middleware applications.

Customer applications are deployed to WebLogic Server instances (managed servers) and managed using an administration server (admin server) within a logical organization unit, called a domain. Clusters of managed servers provide application availability and horizontal scalability, while the single-instance admin server does not host applications.

There are various architectures detailing high availability (HA) for WebLogic managed servers. In this post, we demonstrate using Availability Zones (AZs) and a floating IP address to achieve a “stretch cluster” (Oracle’s terminology).

Overview of problem

The WebLogic admin server is important for domain configuration, management, and monitoring both application performance and system health. Historically, WebLogic was configured using IP addresses, with managed servers caching the admin server IP to reconnect if the connection was lost.

This can cause issues in a dynamic cloud setup, because replacing the admin server from a template changes its IP address. The change affects two kinds of connectivity:

- Communication within the domain: the admin and managed servers communicate via the T3 protocol, which is based on Java RMI.

- Remote access to admin server console: allowing internet admin access and what additional security controls may be required is beyond the scope of this post.

Here, we will explore how to minimize downtime and achieve HA for your admin server.

Solution overview

For this solution, there are three approaches customers tend to follow:

- Use a floating virtual IP to keep the address static. This solution is familiar to WebLogic administrators because it replicates historical on-premises HA implementations. The remainder of this post dives into this practical implementation.

- Use DNS to resolve the admin server IP address. This is also a supported configuration.

- Run in a “headless configuration” and not (normally) run the admin server.

  - Use WebLogic Scripting Tool to issue commands.

  - Collect and observe metrics through other tools.

Running “headless” requires a high level of operational maturity. It may not be compatible with certain vendor-packaged applications deployed to WebLogic.

Using a floating IP address for WebLogic admin server

Here, we discuss the reference WebLogic deployment architecture on AWS, as depicted in Figure 2.

In this example, a WebLogic domain resides in a virtual private cloud’s (VPC) private subnet. The admin server is on its own Amazon Elastic Compute Cloud (Amazon EC2) instance. It’s bound to the private IP 10.0.11.8 that floats across AZs within the VPC. There are two ways to achieve this:

- Create a “dummy” subnet in the VPC (in any AZ), with the smallest allowed subnet size of /28. Choose an address from it, excluding the first four addresses and the last address of the subnet because they’re reserved. For a 10.0.11.0/28 subnet, we use 10.0.11.8 and configure the WebLogic admin server to bind to it.

- Use an IP outside of the VPC. We discuss this second way and compare both processes in the later section “Alternate solution for multi-AZ floating IP”.
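As a minimal sketch of the address choice in the first option, the following helper lists the addresses in a subnet that remain after excluding the first four and the last. The function names (`ipToInt`, `intToIp`, `usableAddresses`) are illustrative and not part of any AWS SDK.

```javascript
// Convert a dotted-quad IPv4 string to an unsigned 32-bit integer.
function ipToInt(ip) {
  return ip.split(".").reduce((acc, octet) => acc * 256 + Number(octet), 0);
}

// Convert an unsigned 32-bit integer back to dotted-quad form.
function intToIp(n) {
  return [24, 16, 8, 0]
    .map((shift) => Math.floor(n / 2 ** shift) % 256)
    .join(".");
}

// List the addresses of a subnet that are not reserved:
// the first four addresses and the last address are skipped.
function usableAddresses(cidr) {
  const [base, prefix] = cidr.split("/");
  const size = 2 ** (32 - Number(prefix));
  const network = Math.floor(ipToInt(base) / size) * size;
  const usable = [];
  for (let offset = 4; offset < size - 1; offset++) {
    usable.push(intToIp(network + offset));
  }
  return usable;
}

const candidates = usableAddresses("10.0.11.0/28");
console.log(candidates.length);                // 11
console.log(candidates[0]);                    // 10.0.11.4
console.log(candidates[candidates.length - 1]); // 10.0.11.14
console.log(candidates.includes("10.0.11.8")); // true
```

A /28 subnet holds 16 addresses, so 11 remain to choose from; 10.0.11.8 is one of them.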

The following steps build this example Amazon Web Services stretch architecture with one WebLogic domain and one admin server:

- Create a VPC across two or more AZs, with one private subnet in each AZ for managed servers and an additional “dummy” subnet.

- Create two EC2 instances, one for each of the WebLogic Managed Servers (distributed across the private subnets).

- Use an Auto Scaling group to ensure that a single admin server is running.

- Create an Amazon EC2 launch template for the admin server.

- Associate the launch template with an Auto Scaling group that has a minimum, maximum, and desired capacity of 1. The Auto Scaling group (ASG) detects EC2 and/or AZ degradation and launches a new instance in a different AZ if the current one fails.

- Create an AWS Lambda function (example to follow) to be called by the Auto Scaling group lifecycle hook to update the route tables.

- Update the user data commands (example to follow) of the launch template to:

  - Add the floating IP address to the network interface.

  - Start the admin server using the floating IP.
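The Auto Scaling group and lifecycle hook from the steps above could be described with request inputs like the following. This is a sketch only: the field names follow the `CreateAutoScalingGroupCommand` and `PutLifecycleHookCommand` inputs in `@aws-sdk/client-auto-scaling`, while the helper name `buildAdminAsgInputs`, the `$Latest` template version, the 300-second heartbeat timeout, and the `ABANDON` default result are assumptions chosen for illustration.

```javascript
// Build request inputs for a single-instance ASG and its launch lifecycle hook.
// All argument values are placeholders supplied by the caller.
function buildAdminAsgInputs({ groupName, launchTemplateId, subnetIds, hookName }) {
  const createGroup = {
    AutoScalingGroupName: groupName,
    LaunchTemplate: { LaunchTemplateId: launchTemplateId, Version: "$Latest" },
    // Exactly one admin server at any time.
    MinSize: 1,
    MaxSize: 1,
    DesiredCapacity: 1,
    // Subnets in different AZs, so a replacement can start in another AZ.
    VPCZoneIdentifier: subnetIds.join(","),
  };
  const putHook = {
    AutoScalingGroupName: groupName,
    LifecycleHookName: hookName,
    // Fire the hook while the new instance is launching.
    LifecycleTransition: "autoscaling:EC2_INSTANCE_LAUNCHING",
    HeartbeatTimeout: 300,    // assumed value, in seconds
    DefaultResult: "ABANDON", // assumed: do not go into service if the hook times out
  };
  return { createGroup, putHook };
}

const inputs = buildAdminAsgInputs({
  groupName: "wls-admin-asg",             // hypothetical name
  launchTemplateId: "lt-0123456789abcdef0", // hypothetical ID
  subnetIds: ["subnet-aaaa1111", "subnet-bbbb2222"], // hypothetical IDs
  hookName: "wls-admin-launch-hook",      // hypothetical name
});
console.log(JSON.stringify(inputs, null, 2));
```

Each object would be passed to the corresponding command and sent with an `AutoScalingClient`; the same settings can also be expressed in the console or in an infrastructure template.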

To route traffic to the floating IP, we update route tables for both public and private subnets.

We create a Lambda function that the Auto Scaling group launch lifecycle hook invokes when a new admin instance is created, before the instance enters the InService state. This Lambda code updates routing rules in both route tables, mapping the dummy subnet CIDR (10.0.11.0/28) of the “floating” IP to the admin EC2 instance. This updates routes in both the public and private subnets for the dynamically launched admin server, enabling managed servers to connect.
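To make the hook-to-Lambda handoff concrete, here is a sketch of the event fields such a function relies on, assuming the hook notification arrives as an EventBridge event with the lifecycle details under `detail`. The helper `parseLaunchEvent` and every value in `sampleEvent` are hypothetical.

```javascript
// Extract and validate the lifecycle fields a route-updating function needs.
function parseLaunchEvent(event) {
  const detail = event.detail || {};
  const required = [
    "EC2InstanceId",
    "AutoScalingGroupName",
    "LifecycleHookName",
    "LifecycleActionToken",
  ];
  const missing = required.filter((key) => !detail[key]);
  if (missing.length > 0) {
    throw new Error(`Missing lifecycle fields: ${missing.join(", ")}`);
  }
  return {
    region: event.region,
    instanceId: detail.EC2InstanceId,
    groupName: detail.AutoScalingGroupName,
    hookName: detail.LifecycleHookName,
    token: detail.LifecycleActionToken,
  };
}

// Placeholder event shaped like a launch lifecycle notification.
const sampleEvent = {
  source: "aws.autoscaling",
  "detail-type": "EC2 Instance-launch Lifecycle Action",
  region: "us-east-1",
  detail: {
    LifecycleActionToken: "00000000-0000-0000-0000-000000000000",
    AutoScalingGroupName: "wls-admin-asg",
    LifecycleHookName: "wls-admin-launch-hook",
    EC2InstanceId: "i-0123456789abcdef0",
    LifecycleTransition: "autoscaling:EC2_INSTANCE_LAUNCHING",
  },
};

console.log(parseLaunchEvent(sampleEvent).instanceId); // i-0123456789abcdef0
```

Validating the fields up front means a malformed event fails before any route is deleted or created.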

Enabling internet access to the admin server

If enabling internet access to the admin server, create an internet-facing Application Load Balancer (ALB) attached to the public subnets. With the route to the admin server, the ALB can forward traffic to it.

- Create an IP-based target group that points to the floating IP.

- Add a forwarding rule in the ALB to route WebLogic admin traffic to the admin server.
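The IP-based target group from the list above might be described as follows. The field names follow the `CreateTargetGroupCommand` and `RegisterTargetsCommand` inputs in `@aws-sdk/client-elastic-load-balancing-v2`; the helper name `buildAdminTargetGroupInputs`, the resource names, and port 7001 (WebLogic's default admin listen port) are assumptions, so substitute the values your domain uses.

```javascript
// Build request inputs for an IP-based target group that points at the floating IP.
function buildAdminTargetGroupInputs({ name, vpcId, floatingIp, port }) {
  const createTargetGroup = {
    Name: name,
    Protocol: "HTTP",
    Port: port,
    VpcId: vpcId,
    TargetType: "ip", // register an IP address rather than an instance ID
  };
  // The target group ARN is only known after the group is created,
  // so this returns a function that completes the second request.
  const registerTargets = (targetGroupArn) => ({
    TargetGroupArn: targetGroupArn,
    Targets: [{ Id: floatingIp, Port: port }],
  });
  return { createTargetGroup, registerTargets };
}

const tg = buildAdminTargetGroupInputs({
  name: "wls-admin-tg",           // hypothetical name
  vpcId: "vpc-0123456789abcdef0", // hypothetical ID
  floatingIp: "10.0.11.8",
  port: 7001,                     // assumed admin listen port
});
console.log(tg.createTargetGroup);
console.log(tg.registerTargets("arn:aws:elasticloadbalancing:placeholder"));
```

Because the target is the floating IP rather than an instance ID, the target group does not need to change when the ASG replaces the admin server.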

User data commands in the launch template to make admin server accessible upon ASG scale out

In the admin server EC2 launch template, add user data code to monitor the ASG lifecycle state. The lifecycle hook invokes the Lambda function to update the route tables. When the instance reaches the InService state, the script starts the WebLogic admin server Java process (and associated NodeManager, if used).

The admin server instance’s SourceDestCheck attribute needs to be set to false, enabling it to bind to the logical IP. This change can also be done in the Lambda function.

When a user accesses the admin server from the internet:

- Traffic flows to the Elastic IP address associated with the internet-facing ALB.

- The ALB forwards to the configured target group.

- The ALB uses the updated routes to reach 10.0.11.8 (admin server).

When managed servers communicate with the admin server, they use the updated route table to reach 10.0.11.8 (admin server).
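The effect of the updated route can be illustrated with a small longest-prefix-match sketch, which is how a route table picks the most specific matching route. The VPC CIDR 10.0.0.0/16, the target labels, and the function names below are assumptions for illustration only.

```javascript
// Convert a dotted-quad IPv4 string to an unsigned 32-bit integer.
function ipToInt(ip) {
  return ip.split(".").reduce((acc, octet) => acc * 256 + Number(octet), 0);
}

// True when the address falls inside the CIDR block.
function cidrContains(cidr, ip) {
  const [base, prefix] = cidr.split("/");
  const size = 2 ** (32 - Number(prefix));
  return Math.floor(ipToInt(base) / size) === Math.floor(ipToInt(ip) / size);
}

// Pick the matching route with the longest prefix (most specific route).
function selectRoute(routes, ip) {
  let best = null;
  for (const route of routes) {
    const prefix = Number(route.destination.split("/")[1]);
    if (cidrContains(route.destination, ip) && (best === null || prefix > best.prefix)) {
      best = { ...route, prefix };
    }
  }
  return best;
}

// Hypothetical route table after the Lambda function has run.
const routes = [
  { destination: "10.0.0.0/16", target: "local" },                // assumed VPC CIDR
  { destination: "10.0.11.0/28", target: "i-0123456789abcdef0" }, // admin server instance
  { destination: "0.0.0.0/0", target: "nat-gateway" },
];

console.log(selectRoute(routes, "10.0.11.8").target); // i-0123456789abcdef0
console.log(selectRoute(routes, "10.0.1.20").target); // local
```

Traffic for 10.0.11.8 matches the /28 route to the admin server instance, while other in-VPC addresses still follow the local route.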

The Lambda function

Here, we present a Lambda function example that sets the EC2 instance SourceDestCheck attribute to false and updates the route rules for the dummy subnet CIDR (the “floating” IP on the admin server EC2 instance) in both the public and private route tables.

    import { AutoScalingClient, CompleteLifecycleActionCommand } from "@aws-sdk/client-auto-scaling";
    import { EC2Client, DeleteRouteCommand, CreateRouteCommand, ModifyInstanceAttributeCommand } from "@aws-sdk/client-ec2";

    export const handler = async (event, context, callback) => {
      console.log('LogAutoScalingEvent');
      console.log('Received event:', JSON.stringify(event, null, 2));

      // IMPORTANT: replace with your dummy subnet CIDR that the floating IP resides in
      const destCIDR = "10.0.11.0/28";
      // IMPORTANT: replace with your route table IDs
      const rtTables = ["rtb-**************ff0", "rtb-**************af5"];

      const asClient = new AutoScalingClient({region: event.region});
      const eventDetail = event.detail;
      const ec2client = new EC2Client({region: event.region});

      const inputModifyAttr = {
        "SourceDestCheck": {
          "Value": false
        },
        "InstanceId": eventDetail['EC2InstanceId'],
      };
      const commandModifyAttr = new ModifyInstanceAttributeCommand(inputModifyAttr);
      await ec2client.send(commandModifyAttr);

      // modify route in two route tables
      for (const rt of rtTables) {
        const inputDelRoute = { // DeleteRouteRequest
          DestinationCidrBlock: destCIDR,
          DryRun: false,
          RouteTableId: rt, // required
        };
        const cmdDelRoute = new DeleteRouteCommand(inputDelRoute);
        try {
          const response = await ec2client.send(cmdDelRoute);
          console.log(response);
        } catch (error) {
          console.log(error);
        }

        const inputCreateRoute = { // addRouteRequest
          DestinationCidrBlock: destCIDR,
          DryRun: false,
          InstanceId: eventDetail['EC2InstanceId'],
          RouteTableId: rt, // required
        };
        const cmdCreateRoute = new CreateRouteCommand(inputCreateRoute);
        await ec2client.send(cmdCreateRoute);
      }

      // continue on ASG lifecycle
      const params = {
        AutoScalingGroupName: eventDetail['AutoScalingGroupName'], /* required */
        LifecycleActionResult: 'CONTINUE', /* required */
        LifecycleHookName: eventDetail['LifecycleHookName'], /* required */
        InstanceId: eventDetail['EC2InstanceId'],
        LifecycleActionToken: eventDetail['LifecycleActionToken']
      };
      const cmdCompleteLifecycle = new CompleteLifecycleActionCommand(params);
      const response = await asClient.send(cmdCompleteLifecycle);
      console.log(response);
      return response;
    };

Amazon EC2 user data

The following Amazon EC2 user data shows how to add a logical secondary IP address to the instance’s primary ENI, poll the ASG lifecycle state, and start the admin server Java process once the instance enters the InService state.

    Content-Type: text/x-shellscript; charset="us-ascii"
    MIME-Version: 1.0
    Content-Transfer-Encoding: 7bit
    Content-Disposition: attachment; filename="userdata.txt"

    #!/bin/bash
    # Add the floating IP to the primary interface (broadcast address of 10.0.11.0/28 is 10.0.11.15)
    ip addr add 10.0.11.8/28 brd 10.0.11.15 dev eth0

    # Request an IMDSv2 session token
    TOKEN=$(curl -X PUT "http://169.254.169.254/latest/api/token" -H "X-aws-ec2-metadata-token-ttl-seconds: 21600")

    # Poll the target lifecycle state up to 30 times, 10 seconds apart
    for x in {1..30}
    do
      target_state=$(curl -H "X-aws-ec2-metadata-token: $TOKEN" -v http://169.254.169.254/latest/meta-data/autoscaling/target-lifecycle-state)
      if [ "$target_state" = "InService" ]; then
        # Start the admin server as ec2-user
        su -c 'nohup /mnt/efs/wls/fmw/install/Oracle/Middleware/Oracle_Home/user_projects/domains/domain1/bin/startWebLogic.sh &' ec2-user
        break
      fi
      sleep 10
    done

Alternate solution for multi-AZ floating IP

An alternative solution for the floating IP is to use an IP external to the VPC. The configurations for ASG, Amazon EC2 launch template, and ASG lifecycle hook Lambda function remain the same. However, the ALB cannot access the WebLogic admin console webapp from the internet due to its requirement for a VPC-internal subnet. To access the webapp in this scenario, stand up a bastion host in a public subnet.

While this approach “saves” 16 VPC IP addresses by avoiding a dummy subnet, there are disadvantages:

- Bastion hosts are not AZ-failure resilient.

- The design lacks the true multi-AZ resilience of the first solution.

- Managing multiple bastion hosts across AZs, or a VPN, adds cost and complexity.

Conclusion

AWS has a track record of efficiently running Oracle applications, Oracle EBS, PeopleSoft, and mission-critical JEE workloads. In this post, we delved into an HA solution that uses a multi-AZ floating IP for the WebLogic admin server and an ASG to ensure a single admin server. We showed how to use ASG lifecycle hooks and Lambda to automate route updates for the floating IP, and how to configure an ALB to allow internet access to the admin server. This solution achieves multi-AZ resilience for the WebLogic admin server with automated recovery, transforming a traditional WebLogic admin server from a pet to cattle.

Author: Jack Zhou