Build a generative AI image description application with Anthropic’s Claude 3.5 Sonnet on Amazon Bedrock and AWS CDK

Generating image descriptions is a common requirement for applications across many industries. One common use case is tagging images with descriptive metadata to improve discoverability within an organization’s content repositories. Ecommerce platforms also use automatically generated image descriptions to provide customers with additional product details. Descriptive image captions also improve accessibility for users with visual impairments.

With advances in generative artificial intelligence (AI) and multimodal models, producing image descriptions is now more straightforward. Amazon Bedrock provides access to Anthropic’s Claude 3 family of models, which incorporates computer vision capabilities that enable Anthropic’s Claude to comprehend and analyze images. This unlocks new possibilities for multimodal interaction. However, building an end-to-end application often requires substantial infrastructure, which slows development.

Generative AI CDK Constructs coupled with Amazon Bedrock offer a powerful combination to expedite application development. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI. Generative AI CDK Constructs accelerate application development by providing reusable infrastructure patterns, allowing you to focus your time and effort on the unique aspects of your application.

In this post, we delve into the process of building and deploying a sample application capable of generating multilingual descriptions for multiple images with a Streamlit UI, AWS Lambda powered with the Amazon Bedrock SDK, and AWS AppSync driven by the open source Generative AI CDK Constructs.

Multimodal models

Multimodal AI systems are an advanced type of AI that can process and analyze data from multiple modalities at once, including text, images, audio, and video. Unlike traditional AI models trained on a single data type, multimodal AI integrates diverse data sources to develop a more comprehensive understanding of complex information.

Anthropic’s Claude 3 on Amazon Bedrock is a leading multimodal model with computer vision capabilities to analyze images and generate descriptive text outputs. Anthropic’s Claude 3 excels at interpreting complex visual assets like charts, graphs, diagrams, reports, and more. The model combines its computer vision with language processing to provide nuanced text summaries of key information extracted from images. This allows Anthropic’s Claude 3 to develop a deeper understanding of visual data than traditional single-modality AI.

In March 2024, Amazon Bedrock provided access to Anthropic’s Claude 3 family. The three models in the family are Anthropic’s Claude 3 Haiku, the fastest and most compact model for near-instant responsiveness; Anthropic’s Claude 3 Sonnet, the ideal balanced model between skills and speed; and Anthropic’s Claude 3 Opus, the most intelligent offering for top-level performance on highly complex tasks. In June 2024, Amazon Bedrock announced support for Anthropic’s Claude 3.5 Sonnet as well. The sample application in this post supports Claude 3.5 Sonnet and all three Claude 3 models.

Generative AI CDK Constructs

Generative AI CDK Constructs is an open source extension to the AWS Cloud Development Kit (AWS CDK), a development framework for defining cloud infrastructure as code (IaC) and deploying it through AWS CloudFormation.

Constructs are the fundamental building blocks of AWS CDK applications. The AWS Construct Library categorizes constructs into three levels: Level 1 (the lowest-level constructs, which map directly to single AWS CloudFormation resources with no abstraction), Level 2 (curated constructs that add sensible defaults and a higher-level, intent-based API), and Level 3 (patterns with the highest level of abstraction).

The Generative AI CDK Constructs Library provides modular building blocks to seamlessly integrate AWS services and resources into solutions using generative AI capabilities. By using Amazon Bedrock to access FMs and combining with serverless AWS services such as Lambda and AWS AppSync, these AWS CDK constructs streamline the process of assembling cloud infrastructure for generative AI. You can rapidly configure and deploy solutions to generate content using intuitive abstractions. This approach boosts productivity and reduces time-to-market for delivering innovative applications powered by the latest advances in generative AI on the AWS Cloud.

Solution overview

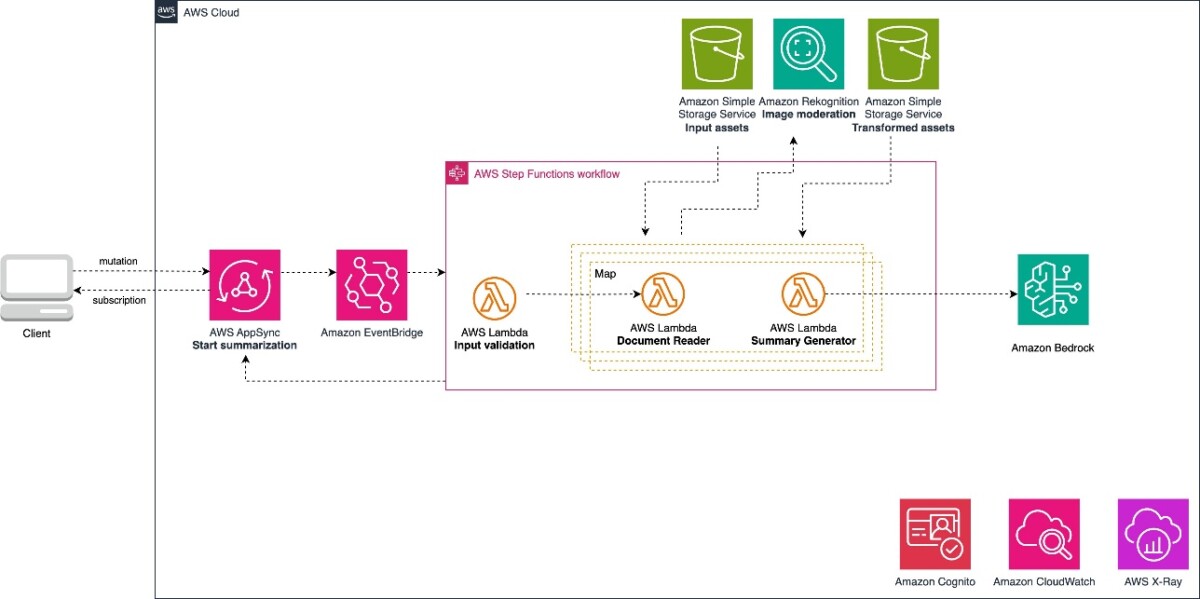

The sample application in this post uses the aws-summarization-appsync-stepfn construct from the Generative AI CDK Constructs Library. The aws-summarization-appsync-stepfn construct provides a serverless architecture that uses AWS AppSync, AWS Step Functions, and Amazon EventBridge to deliver an asynchronous image summarization service. This construct offers a scalable and event-driven solution for processing and generating descriptions for image assets.

AWS AppSync acts as the entry point, exposing a GraphQL API that enables clients to initiate image summarization and description requests. The API uses mutations to accept requests and subscriptions to return results, so requests run asynchronously. This decoupling promotes best practices for event-driven, loosely coupled systems.

EventBridge serves as the event bus, facilitating the communication between AWS AppSync and Step Functions. When a client submits a request through the GraphQL API, an event is emitted to EventBridge, invoking a run of the Step Functions workflow.

Step Functions orchestrates the run of three Lambda functions, each responsible for a specific task in the image summarization process:

- Input validator – This Lambda function performs input validation, making sure the provided requests adhere to the expected format. It also handles the upload of the input image assets to an Amazon Simple Storage Service (Amazon S3) bucket designated for raw assets.

- Document reader – This Lambda function retrieves the raw image assets from the input asset bucket, performs image moderation checks using Amazon Rekognition, and uploads the processed assets to an S3 bucket designated for transformed files. This separation of raw and processed assets facilitates auditing and versioning.

- Generate summary – This Lambda function generates a textual summary or description for the processed image assets by invoking a multimodal FM through Amazon Bedrock.
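The moderation check performed by the document reader can be sketched as follows. This is a minimal illustration, not the construct's actual code: the function names `unsafe_labels` and `check_image` and the 60 percent confidence threshold are assumptions made for this example. The only AWS call used is the Amazon Rekognition `detect_moderation_labels` operation.

```python
from typing import Any, Dict, List


def unsafe_labels(response: Dict[str, Any], min_confidence: float = 60.0) -> List[str]:
    """Return the names of moderation labels at or above min_confidence.

    `response` is expected to have the shape returned by the Rekognition
    DetectModerationLabels operation. The threshold is an assumed default.
    """
    return [
        label["Name"]
        for label in response.get("ModerationLabels", [])
        if label.get("Confidence", 0.0) >= min_confidence
    ]


def check_image(bucket: str, key: str, min_confidence: float = 60.0) -> bool:
    """Return True when Rekognition reports no moderation labels for the object."""
    import boto3  # imported lazily so unsafe_labels can be used without AWS access

    client = boto3.client("rekognition")
    response = client.detect_moderation_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
    )
    return not unsafe_labels(response, min_confidence)
```

In a workflow like the one described here, an image would be copied to the transformed assets bucket only when `check_image` returns True.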

The Step Functions workflow employs a Map state, which processes multiple image assets in parallel. This concurrent processing improves resource utilization and reduces latency, making the image summarization solution scalable and efficient.
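To make the Map state concrete, the following sketch builds an Amazon States Language definition as a Python dictionary. It is an illustration under assumptions, not the definition generated by the construct: the state names, the `$.files` items path, and the Lambda function names are hypothetical placeholders.

```python
import json
from typing import Any, Dict


def lambda_task(function_name: str, **transition: Any) -> Dict[str, Any]:
    """Build a Task state that invokes a Lambda function with the current input."""
    state: Dict[str, Any] = {
        "Type": "Task",
        "Resource": "arn:aws:states:::lambda:invoke",
        "Parameters": {"FunctionName": function_name, "Payload.$": "$"},
        "OutputPath": "$.Payload",
    }
    state.update(transition)  # either Next="..." or End=True
    return state


def build_map_state(items_path: str, max_concurrency: int) -> Dict[str, Any]:
    """Build a Map state that runs two steps for every item in the input array."""
    return {
        "Type": "Map",
        "ItemsPath": items_path,            # array of image assets in the state input
        "MaxConcurrency": max_concurrency,  # upper bound on parallel iterations
        "ItemProcessor": {
            "ProcessorConfig": {"Mode": "INLINE"},
            "StartAt": "ReadDocument",
            "States": {
                # Function names below are hypothetical placeholders.
                "ReadDocument": lambda_task("document-reader", Next="GenerateSummary"),
                "GenerateSummary": lambda_task("summary-generator", End=True),
            },
        },
        "End": True,
    }


if __name__ == "__main__":
    print(json.dumps(build_map_state("$.files", 5), indent=2))
```

Each element of the array selected by `ItemsPath` becomes the input of one iteration, so every image passes through the same two steps independently of the others.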

User authentication and authorization are handled by Amazon Cognito, providing secure access management and identity services for the application’s users. This makes sure only authenticated and authorized users can access and interact with the image summarization service. The solution incorporates observability features through integration with Amazon CloudWatch and AWS X-Ray.

The UI for the application is implemented using the Streamlit open source framework, providing a modern and responsive experience for interacting with the image summarization service. You can access the source code for the project in the public GitHub repository.

The following diagram shows the architecture to deliver this use case.

The workflow to generate image descriptions includes the following steps:

- The user uploads the input image to an S3 bucket designated for input assets.

- The upload invokes the image summarization mutation API exposed by AWS AppSync. This will initiate the serverless workflow.

- AWS AppSync publishes an event to EventBridge to invoke the next step in the workflow.

- EventBridge routes the event to a Step Functions state machine.

- The Step Functions state machine invokes a Lambda function that validates the input request parameters.

- Upon successful validation, the Step Functions state machine invokes a document reader Lambda function. This function runs an image moderation check using Amazon Rekognition. If no unsafe or explicit content is detected, it pushes the image to a transformed assets S3 bucket.

- A summary generator Lambda function is invoked, which reads the transformed image. It uses the Amazon Bedrock library to invoke Anthropic’s Claude 3 Sonnet model, passing the image bytes as input.

- Anthropic’s Claude 3 Sonnet generates a textual description for the input image.

- The summary generator publishes the generated description through an AWS AppSync subscription. The Streamlit UI application listens for events from this subscription and displays the generated description to the user once received.
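The model invocation in the summary generator step can be sketched as follows. This is a minimal example under assumptions rather than the sample application's code: the helper names, the prompt wording, the `MODEL_IDS` keys, and the `max_tokens` default are hypothetical. The request body follows the Anthropic Messages format that Amazon Bedrock accepts for Claude 3 models, with the image passed as base64-encoded bytes.

```python
import base64
import json

# Amazon Bedrock model identifiers for two of the supported models.
MODEL_IDS = {
    "claude-3-sonnet": "anthropic.claude-3-sonnet-20240229-v1:0",
    "claude-3.5-sonnet": "anthropic.claude-3-5-sonnet-20240620-v1:0",
}


def build_request_body(
    image_bytes: bytes,
    media_type: str,
    language: str = "English",
    max_tokens: int = 1024,
) -> str:
    """Return a JSON request body containing one image and one text instruction."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": media_type,
                            "data": encoded,
                        },
                    },
                    # The prompt wording is an assumption for this sketch.
                    {"type": "text", "text": f"Describe this image in {language}."},
                ],
            }
        ],
    }
    return json.dumps(body)


def describe_image(
    image_bytes: bytes,
    media_type: str,
    model_key: str = "claude-3.5-sonnet",
    language: str = "English",
) -> str:
    """Invoke the model through Amazon Bedrock and return the generated text."""
    import boto3  # imported lazily so build_request_body works without AWS access

    client = boto3.client("bedrock-runtime")
    response = client.invoke_model(
        modelId=MODEL_IDS[model_key],
        body=build_request_body(image_bytes, media_type, language),
    )
    payload = json.loads(response["body"].read())
    return payload["content"][0]["text"]
```

Passing the target language in the text instruction is one simple way to obtain multilingual descriptions from the same image input.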

The following figure illustrates the workflow of the Step Functions state machine.

Prerequisites

To implement this solution, you should have the following prerequisites:

- An AWS account

- The AWS Command Line Interface (AWS CLI) installed and your credentials configured

- Node.js version v18.12.1

- Python version 3.9 or higher

- AWS CDK version 2.68.0

- jq version jq-1.6

- Docker Desktop installed

Build and deploy the solution

Complete the following steps to set up the solution:

- Clone the GitHub repository.

If using HTTPS, use the following code:

If using SSH, use the following code:

- Change the directory to the sample solution:

- Update the stage variable to a unique value:

- Open image-description-stack.ts.

- Install all dependencies:

- Bootstrap AWS CDK resources on the AWS account. Replace ACCOUNT_ID and REGION with your own values:

- Deploy the solution:

The preceding command deploys the stack in your account. The deployment will take approximately 5 minutes to complete.

- Configure client_app:

- Within the /client_app directory, create a new file named .env with the following content. Replace the property values with the values retrieved from the stack outputs.

COGNITO_CLIENT_SECRET is a secret value that can be retrieved from the Amazon Cognito console. Navigate to the user pool created by the stack. Under App integration, navigate to App clients and analytics, and choose App client name. Under App client information, choose Show client secret and copy the value of the client secret.
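Before starting the client, it can help to confirm that the .env file is complete. The following sketch parses simple KEY=VALUE lines and reports required keys that are missing or empty. The helper names are hypothetical, and `COGNITO_CLIENT_SECRET` is the only property name taken from this post; add the other properties from your stack outputs to the required list.

```python
from typing import Dict, Iterable, List


def parse_env(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Remove surrounding whitespace and optional quotes from the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: Dict[str, str], required: Iterable[str]) -> List[str]:
    """Return the required keys that are absent or have an empty value."""
    return [key for key in required if not values.get(key)]


if __name__ == "__main__":
    # Assumes the script is run from the directory that contains .env.
    with open(".env", encoding="utf-8") as handle:
        env = parse_env(handle.read())
    print("Missing:", missing_keys(env, ["COGNITO_CLIENT_SECRET"]))
```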

- Run client_app:

When the client application is up and running, it opens in your browser on port 8501 (http://localhost:8501/Home).

Make sure your virtual environment is free from SSL certificate issues. If any SSL certificate issues are present, reinstall the CA certificates and OpenSSL package using the following command:

Test the solution

To test the solution, we upload some sample images and generate descriptions in different applications. Complete the following steps:

- In the Streamlit UI, choose Log In and register the user for the first time.

- After the user is registered and logged in, choose Image Description in the navigation pane.

- Upload multiple images and select the preferred model configuration (Anthropic’s Claude 3.5 Sonnet or Anthropic’s Claude 3), then choose Submit.

The uploaded image and the generated description are shown in the center pane.

The image description is generated in French.

Clean up

To avoid incurring unintended charges, delete the resources you created:

- Remove all data from the S3 buckets.

- Run the cdk destroy command.

- Delete the S3 buckets.
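Removing all data from the S3 buckets can also be scripted. The following is a minimal sketch using the boto3 resource interface; the helper names are hypothetical, and you supply the bucket names from your own deployment. It deletes data permanently, so use it with care.

```python
def empty_bucket(bucket) -> None:
    """Delete everything in a bucket, given a boto3 S3 Bucket resource."""
    # Removes every object version and delete marker (needed for versioned buckets).
    bucket.object_versions.delete()
    # Second pass over current objects; harmless if nothing remains.
    bucket.objects.all().delete()


def empty_bucket_by_name(name: str) -> None:
    """Look up the bucket by name and empty it."""
    import boto3  # imported lazily so empty_bucket can be exercised without AWS access

    empty_bucket(boto3.resource("s3").Bucket(name))
```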

Conclusion

In this post, we discussed how to integrate Amazon Bedrock with Generative AI CDK Constructs. This solution enables the rapid development and deployment of cloud infrastructure tailored for an image description application by using the power of generative AI, specifically Anthropic’s Claude 3. The Generative AI CDK Constructs abstract the intricate complexities of infrastructure, thereby accelerating development timelines.

The Generative AI CDK Constructs Library offers a comprehensive suite of constructs, empowering developers to augment and enhance generative AI capabilities within their applications, unlocking a myriad of possibilities for innovation. Try out the Generative AI CDK Constructs Library for your own use cases, and share your feedback and questions in the comments.

About the Authors

Dinesh Sajwan is a Senior Solutions Architect with the Prototyping Acceleration team at Amazon Web Services. He helps customers to drive innovation and accelerate their adoption of cutting-edge technologies, enabling them to stay ahead of the curve in an ever-evolving technological landscape. Beyond his professional endeavors, Dinesh enjoys a quiet life with his wife and three children.

Justin Lewis leads the Emerging Technology Accelerator at AWS. Justin and his team help customers build with emerging technologies like generative AI by providing open source software examples to inspire their own innovation. He lives in the San Francisco Bay Area with his wife and son.

Alain Krok is a Senior Solutions Architect with a passion for emerging technologies. His past experience includes designing and implementing IIoT solutions for the oil and gas industry and working on robotics projects. He enjoys pushing the limits and indulging in extreme sports when he is not designing software.

Michael Tran is a Sr. Solutions Architect with the Prototyping Acceleration team at Amazon Web Services. He provides technical guidance and helps customers innovate by showing the art of the possible on AWS. He specializes in building prototypes in the AI/ML space. You can contact him @Mike_Trann on Twitter.

Author: Dinesh Sajwan