Build generative AI applications with Amazon Titan Text Premier, Amazon Bedrock, and AWS CDK

Amazon Titan Text Premier, the latest addition to the Amazon Titan family of large language models (LLMs), is now generally available in Amazon Bedrock… Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading artificial intelligence (A…

Amazon Titan Text Premier, the latest addition to the Amazon Titan family of large language models (LLMs), is now generally available in Amazon Bedrock. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading artificial intelligence (AI) companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Amazon Titan Text Premier is an advanced, high-performance, and cost-effective LLM engineered to deliver superior performance for enterprise-grade text generation applications, including optimized performance for Retrieval Augmented Generation (RAG) and agents. The model is built from the ground up following safe, secure, and trustworthy responsible AI practices, and excels in delivering exceptional generative AI text capabilities at scale.

Exclusive to Amazon Bedrock, Amazon Titan Text models support a wide range of text-related tasks, including summarization, text generation, classification, question-answering, and information extraction. With Amazon Titan Text Premier, you can unlock new levels of efficiency and productivity for your text generation needs.

In this post, we explore building and deploying two sample applications powered by Amazon Titan Text Premier. To accelerate development and deployment, we use the open source AWS Generative AI CDK Constructs (launched by Werner Vogels at AWS re:Invent 2023). AWS Cloud Development Kit (AWS CDK) constructs accelerate application development by providing developers with reusable infrastructure patterns you can seamlessly incorporate into your applications, freeing you to focus on what differentiates your application.

Document Explorer sample application

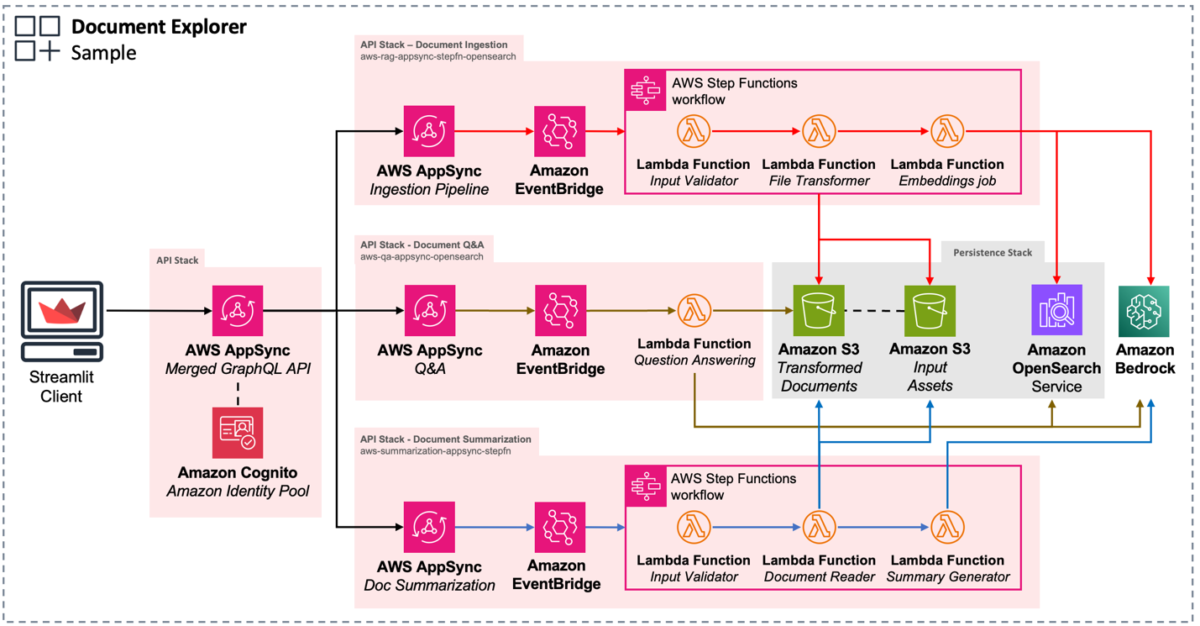

The Document Explorer sample generative AI application can help you quickly understand how to build end-to-end generative AI applications on AWS. It includes examples of key components needed in generative AI applications, such as:

- Data ingestion pipeline – Ingests documents, converts them to text, and stores them in a knowledge base for retrieval. This enables use cases like RAG to tailor generative AI applications to your data.

- Document summarization – Summarizes PDF documents using Amazon Titan Premier through Amazon Bedrock.

- Question answering – Answers natural language questions by retrieving relevant documents from the knowledge base and using LLMs like Amazon Titan Premier through Amazon Bedrock.

Follow the steps in the README to clone and deploy the application in your account. The application deploys all the required infrastructure, as shown in the following architecture diagram.

After you deploy the application, upload a sample PDF file to the input Amazon Simple Storage Service (Amazon S3) bucket by choosing Select Document in the navigation pane. For example, you can download Amazon’s Annual Letters to Shareholders from 1997–2023 and upload using the web interface. On the Amazon S3 console, you can see that the files you uploaded are now found in the S3 bucket whose name begins with persistencestack-inputassets.

After you have uploaded a file, open a document to see it rendered in the browser.

Choose Q&A in the navigation pane, and choose your preferred model (for this example, Amazon Titan Premier). You can now ask a question against the document you uploaded.

The following diagram illustrates a sample workflow in Document Explorer.

Don’t forget to delete the AWS CloudFormation stacks to avoid unexpected charges. First make sure to remove all data from the S3 buckets, specifically anything in the buckets whose names begin with persistencestack. Then run the following command from a terminal:

Amazon Bedrock Agent and Custom Knowledge Base sample application

The Amazon Bedrock Agent and Custom Knowledge Base sample generative AI application is a chat assistant designed to answer questions about literature using RAG from a selection of books from Project Gutenberg.

This app deploys an Amazon Bedrock agent that can consult an Amazon Bedrock knowledge base backed by Amazon OpenSearch Serverless as a vector store. An S3 bucket is created to store the books for the knowledge base.

Follow the steps in the README to clone the sample application in your account. The following diagram illustrates the deployed solution architecture.

Update the file defining which foundation model to use when creating the agent:

Follow the steps in the README to deploy the code sample in your account and ingest the example documents.

Navigate to the Agents page on the Amazon Bedrock console in your AWS Region and find your newly created agent. The AgentId can be found in the CloudFormation stack outputs section.

Now you can ask some questions. You may need to tell the agent what book you want to ask about or refresh the session when asking about different books. The following are some examples of questions you may ask:

- What are the most popular books in the library?

- Who is Mr. Bingley quite taken with at the ball in Meryton?

The following screenshot shows an example of the workflow.

Don’t forget to delete the CloudFormation stack to avoid unexpected charges. Remove all the data from the S3 buckets, then run the following command from a terminal:

Conclusion

Amazon Titan Text Premier is available today in the US East (N. Virginia) Region. Custom fine-tuning for Amazon Titan Text Premier is also available today in preview in the US East (N. Virginia) Region. Check the full Region list for future updates.

To learn more about the Amazon Titan family of models, visit the Amazon Titan product page. For pricing details, review Amazon Bedrock Pricing. Visit the AWS Generative AI CDK Constructs GitHub repository for more details on available constructs and additional documentation. For practical examples to get started, check out the AWS samples repository.

About the authors

Alain Krok is a Senior Solutions Architect with a passion for emerging technologies. His past experience includes designing and implementing IIoT solutions for the oil and gas industry and working on robotics projects. He enjoys pushing the limits and indulging in extreme sports when he is not designing software.

Alain Krok is a Senior Solutions Architect with a passion for emerging technologies. His past experience includes designing and implementing IIoT solutions for the oil and gas industry and working on robotics projects. He enjoys pushing the limits and indulging in extreme sports when he is not designing software.

Laith Al-Saadoon is a Principal Prototyping Architect on the Prototyping and Cloud Engineering (PACE) team. He builds prototypes and solutions using generative AI, machine learning, data analytics, IoT & edge computing, and full-stack development to solve real-world customer challenges. In his personal time, Laith enjoys the outdoors–fishing, photography, drone flights, and hiking.

Laith Al-Saadoon is a Principal Prototyping Architect on the Prototyping and Cloud Engineering (PACE) team. He builds prototypes and solutions using generative AI, machine learning, data analytics, IoT & edge computing, and full-stack development to solve real-world customer challenges. In his personal time, Laith enjoys the outdoors–fishing, photography, drone flights, and hiking.

Justin Lewis leads the Emerging Technology Accelerator at AWS. Justin and his team help customers build with emerging technologies like generative AI by providing open source software examples to inspire their own innovation. He lives in the San Francisco Bay Area with his wife and son.

Justin Lewis leads the Emerging Technology Accelerator at AWS. Justin and his team help customers build with emerging technologies like generative AI by providing open source software examples to inspire their own innovation. He lives in the San Francisco Bay Area with his wife and son.

Anupam Dewan is a Senior Solutions Architect with a passion for Generative AI and its applications in real life. He and his team enable Amazon Builders who build customer facing application using generative AI. He lives in Seattle area, and outside of work loves to go on hiking and enjoy nature.

Anupam Dewan is a Senior Solutions Architect with a passion for Generative AI and its applications in real life. He and his team enable Amazon Builders who build customer facing application using generative AI. He lives in Seattle area, and outside of work loves to go on hiking and enjoy nature.

Author: Alain Krok