Build RAG applications with MongoDB Atlas, now available in Knowledge Bases for Amazon Bedrock


Foundation models (FMs) are trained on large volumes of data and use billions of parameters. However, to answer customers’ questions about domain-specific private data, they need to reference an authoritative knowledge base outside of the model’s training data sources. This is commonly achieved using a technique known as Retrieval Augmented Generation (RAG). By fetching data from the organization’s internal or proprietary sources, RAG extends the capabilities of FMs to specific domains, without needing to retrain the model. It is a cost-effective approach to improving model output so it remains relevant, accurate, and useful in various contexts.

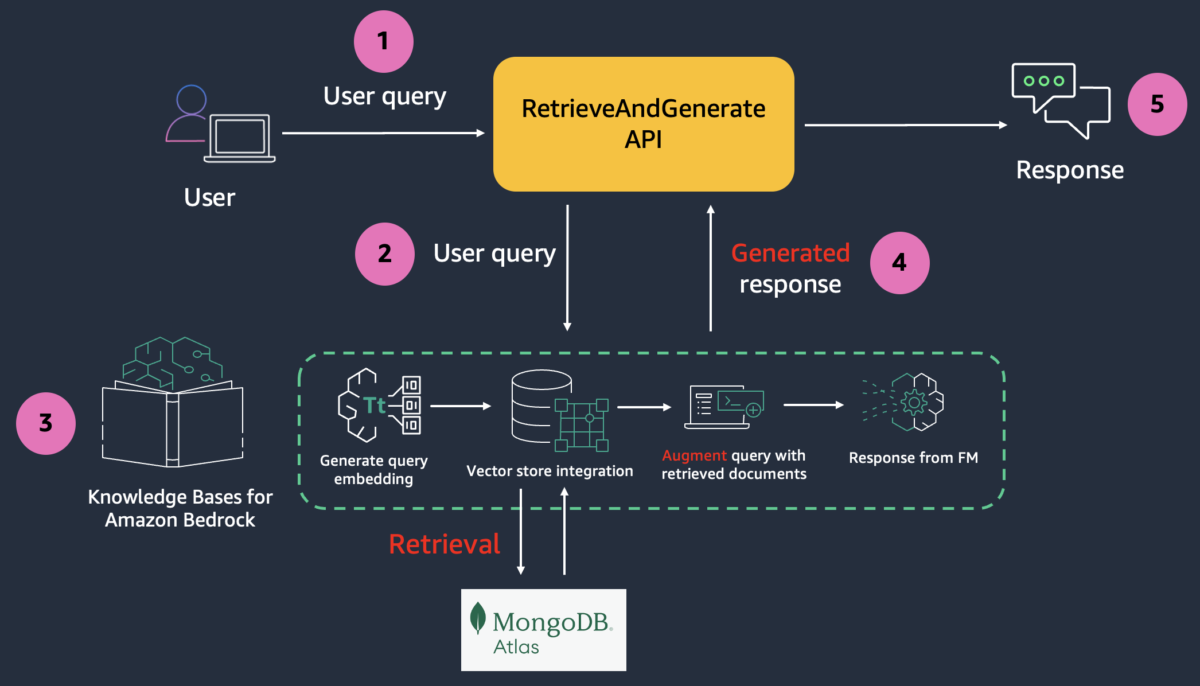

Knowledge Bases for Amazon Bedrock is a fully managed capability that helps you implement the entire RAG workflow from ingestion to retrieval and prompt augmentation without having to build custom integrations to data sources and manage data flows.

Today, we are announcing the availability of MongoDB Atlas as a vector store in Knowledge Bases for Amazon Bedrock. With MongoDB Atlas vector store integration, you can build RAG solutions to securely connect your organization’s private data sources to FMs in Amazon Bedrock. This integration adds to the list of vector stores supported by Knowledge Bases for Amazon Bedrock, including Amazon Aurora PostgreSQL-Compatible Edition, vector engine for Amazon OpenSearch Serverless, Pinecone, and Redis Enterprise Cloud.

Build RAG applications with MongoDB Atlas and Knowledge Bases for Amazon Bedrock

Vector Search in MongoDB Atlas is powered by the vectorSearch index type. In the index definition, you must specify the field that contains the vector data as the vector type. Before using MongoDB Atlas vector search in your application, you will need to create an index, ingest source data, create vector embeddings and store them in a MongoDB Atlas collection. To perform queries, you will need to convert the input text into a vector embedding, and then use an aggregation pipeline stage to perform vector search queries against fields indexed as the vector type in a vectorSearch type index.
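To make the query side concrete, here is a minimal sketch of the aggregation pipeline that a hand-built application would run. It uses the `$vectorSearch` aggregation stage and the field names from the index definition shown later in this post. The index name `vector_index`, the candidate count, and the all-zero query vector are placeholders I made up for illustration; a real query vector would come from your embedding model.

```python
from typing import Any, Dict, List, Optional


def build_vector_search_pipeline(
    query_vector: List[float],
    index_name: str = "vector_index",  # hypothetical index name
    path: str = "AMAZON_BEDROCK_CHUNK_VECTOR",
    limit: int = 5,
    num_candidates: int = 100,
    metadata_filter: Optional[Dict[str, Any]] = None,
) -> List[Dict[str, Any]]:
    """Return an aggregation pipeline whose first stage is $vectorSearch."""
    if num_candidates < limit:
        raise ValueError("num_candidates must be greater than or equal to limit")

    stage: Dict[str, Any] = {
        "index": index_name,
        "path": path,
        "queryVector": query_vector,
        "numCandidates": num_candidates,
        "limit": limit,
    }
    if metadata_filter is not None:
        # Only fields indexed with type "filter" can be used here.
        stage["filter"] = metadata_filter

    return [
        {"$vectorSearch": stage},
        # Keep the text chunk and expose the similarity score.
        {
            "$project": {
                "_id": 0,
                "AMAZON_BEDROCK_TEXT_CHUNK": 1,
                "score": {"$meta": "vectorSearchScore"},
            }
        },
    ]


# Placeholder vector with the same dimension as the index used in this post.
pipeline = build_vector_search_pipeline([0.0] * 1536)
```

With PyMongo, a pipeline like this would be passed to `collection.aggregate(pipeline)`.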

Thanks to the MongoDB Atlas integration with Knowledge Bases for Amazon Bedrock, most of the heavy lifting is taken care of. Once the vector search index and knowledge base are configured, you can incorporate RAG into your applications. Behind the scenes, Amazon Bedrock will convert your input (prompt) into embeddings, query the knowledge base, augment the FM prompt with the search results as contextual information and return the generated response.
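The prompt augmentation step can be pictured with a small sketch. This is not the template Amazon Bedrock uses internally (the post does not show it); it only illustrates the idea of placing retrieved chunks in front of the question. The result shape (`content.text`) follows the Retrieve API response, and the sample chunks are placeholders.

```python
from typing import Dict, List


def augment_prompt(question: str, retrieval_results: List[Dict]) -> str:
    """Build an FM prompt that carries retrieved chunks as numbered context."""
    chunks = [result["content"]["text"] for result in retrieval_results]
    context = "\n\n".join(
        f"[{number}] {chunk}" for number, chunk in enumerate(chunks, start=1)
    )
    return (
        "Answer the question using only the numbered context passages below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Placeholder results, shaped like entries of a Retrieve API response.
sample_results = [
    {"content": {"text": "Amazon Bedrock is a fully managed service."}},
    {"content": {"text": "Knowledge Bases implement the RAG workflow."}},
]
prompt = augment_prompt("What is Amazon Bedrock?", sample_results)
```

Numbering the passages is a design choice that makes it easier for the model to cite which chunk supported each statement.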

Let me walk you through the process of setting up MongoDB Atlas as a vector store in Knowledge Bases for Amazon Bedrock.

Configure MongoDB Atlas

Start by creating a MongoDB Atlas cluster on AWS. Choose an M10 dedicated cluster tier. Once the cluster is provisioned, create a database and collection. Next, create a database user and grant it the Read and write to any database role. Select Password as the Authentication Method. Finally, configure network access to modify the IP Access List – add IP address 0.0.0.0/0 to allow access from anywhere.

Use the following index definition to create the Vector Search index:

    {
      "fields": [
        {
          "numDimensions": 1536,
          "path": "AMAZON_BEDROCK_CHUNK_VECTOR",
          "similarity": "cosine",
          "type": "vector"
        },
        {
          "path": "AMAZON_BEDROCK_METADATA",
          "type": "filter"
        },
        {
          "path": "AMAZON_BEDROCK_TEXT_CHUNK",
          "type": "filter"
        }
      ]
    }

Configure the knowledge base

Create an AWS Secrets Manager secret to securely store the MongoDB Atlas database user credentials. Choose Other as the Secret type. Create an Amazon Simple Storage Service (Amazon S3) storage bucket and upload the Amazon Bedrock documentation user guide PDF. Later, you will use the knowledge base to ask questions about Amazon Bedrock.

You can also use another document of your choice because Knowledge Bases for Amazon Bedrock supports multiple file formats (including text, HTML, and CSV).
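If you prefer to script the secret creation, the following sketch shows one way to do it with Boto3. It assumes the secret is a JSON object with `username` and `password` keys; verify the expected key names in the Amazon Bedrock User Guide before relying on this. The function names are mine, not part of any AWS API.

```python
import json


def build_atlas_secret(username: str, password: str) -> str:
    """Serialize the database user credentials as a JSON secret string.

    Assumption: the knowledge base reads "username" and "password" keys.
    """
    return json.dumps({"username": username, "password": password})


def create_atlas_secret(secret_name: str, username: str, password: str) -> str:
    """Create the secret in AWS Secrets Manager and return its ARN."""
    import boto3  # imported lazily so build_atlas_secret works without the SDK

    client = boto3.client("secretsmanager")
    response = client.create_secret(
        Name=secret_name,
        SecretString=build_atlas_secret(username, password),
    )
    return response["ARN"]
```

The returned ARN is the value you enter later when configuring the vector store.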

Navigate to the Amazon Bedrock console and refer to the Amazon Bedrock User Guide to configure the knowledge base. In the Select embeddings model and configure vector store step, choose Titan Embeddings G1 – Text as the embedding model. From the list of databases, choose MongoDB Atlas.

Enter the basic information for the MongoDB Atlas cluster (Hostname, Database name, etc.) as well as the ARN of the AWS Secrets Manager secret you had created earlier. In the Metadata field mapping attributes, enter the vector store specific details. They should match the vector search index definition you used earlier.
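For readers who create knowledge bases programmatically, the same details map onto the storage configuration passed to the `create_knowledge_base` call of the Boto3 `bedrock-agent` client. The sketch below only builds that dictionary. The key names reflect my understanding of the API and should be checked against the Boto3 reference; the hostname, database, collection, index name, and ARN are placeholders.

```python
from typing import Any, Dict


def build_atlas_storage_config(
    endpoint: str,
    database_name: str,
    collection_name: str,
    vector_index_name: str,
    secret_arn: str,
) -> Dict[str, Any]:
    """Build a storage configuration that points at a MongoDB Atlas cluster."""
    return {
        "type": "MONGO_DB_ATLAS",
        "mongoDbAtlasConfiguration": {
            "endpoint": endpoint,
            "databaseName": database_name,
            "collectionName": collection_name,
            "vectorIndexName": vector_index_name,
            "credentialsSecretArn": secret_arn,
            # These must match the paths in the vector search index definition.
            "fieldMapping": {
                "vectorField": "AMAZON_BEDROCK_CHUNK_VECTOR",
                "textField": "AMAZON_BEDROCK_TEXT_CHUNK",
                "metadataField": "AMAZON_BEDROCK_METADATA",
            },
        },
    }


# All values below are placeholders for illustration.
storage_config = build_atlas_storage_config(
    endpoint="cluster0.example.mongodb.net",
    database_name="bedrock_db",
    collection_name="bedrock_collection",
    vector_index_name="vector_index",
    secret_arn="arn:aws:secretsmanager:us-east-1:111122223333:secret:example",
)
```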

Initiate the knowledge base creation. Once complete, synchronize the data source (S3 bucket data) with the MongoDB Atlas vector search index.
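The sync can also be started from code. The sketch below starts an ingestion job and polls until it reaches a terminal state, using the `start_ingestion_job` and `get_ingestion_job` calls of the Boto3 `bedrock-agent` client. The client is passed in as a parameter so the function is easy to try with a stub; the polling interval, the limit on polls, and the set of terminal statuses I check for are assumptions.

```python
import time

# Statuses treated as final in this sketch.
TERMINAL_STATUSES = {"COMPLETE", "FAILED"}


def sync_data_source(client, kb_id, data_source_id, poll_seconds=10.0, max_polls=60):
    """Start an ingestion job and wait for it to finish; return the final status."""
    job = client.start_ingestion_job(
        knowledgeBaseId=kb_id,
        dataSourceId=data_source_id,
    )["ingestionJob"]

    polls = 0
    while job["status"] not in TERMINAL_STATUSES:
        if polls >= max_polls:
            raise TimeoutError("ingestion job did not finish in time")
        time.sleep(poll_seconds)
        job = client.get_ingestion_job(
            knowledgeBaseId=kb_id,
            dataSourceId=data_source_id,
            ingestionJobId=job["ingestionJobId"],
        )["ingestionJob"]
        polls += 1

    return job["status"]
```

A typical call would pass `boto3.client("bedrock-agent")` together with your knowledge base ID and data source ID.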

Once the synchronization is complete, navigate to MongoDB Atlas to confirm that the data has been ingested into the collection you created.

Notice the following attributes in each of the MongoDB Atlas documents:

- AMAZON_BEDROCK_TEXT_CHUNK – Contains the raw text for each data chunk.

- AMAZON_BEDROCK_CHUNK_VECTOR – Contains the vector embedding for the data chunk.

- AMAZON_BEDROCK_METADATA – Contains additional data for source attribution and rich query capabilities.

Test the knowledge base

It’s time to ask questions about Amazon Bedrock by querying the knowledge base. You will need to choose a foundation model. I picked Claude v2 in this case and used “What is Amazon Bedrock” as my input (query).

If you are using a different source document, adjust the questions accordingly.

You can also change the foundation model. For example, I switched to Claude 3 Sonnet. Notice the difference in the output and select Show source details to see the chunks cited for each footnote.
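The source details shown in the console are also present in the response of the RetrieveAndGenerate API, which is covered in the next section. As a rough sketch, the helper below collects the S3 URIs of the cited documents from such a response. The function name is mine, and the sample response is a trimmed placeholder that follows the response structure as I understand it, not real output.

```python
from typing import Dict, List


def cited_sources(response: Dict) -> List[str]:
    """Return the distinct S3 URIs cited in a RetrieveAndGenerate response."""
    sources: List[str] = []
    for citation in response.get("citations", []):
        for reference in citation.get("retrievedReferences", []):
            uri = reference.get("location", {}).get("s3Location", {}).get("uri")
            if uri and uri not in sources:
                sources.append(uri)
    return sources


# Trimmed placeholder response for illustration only.
sample_response = {
    "output": {"text": "Amazon Bedrock is a fully managed service."},
    "citations": [
        {
            "retrievedReferences": [
                {
                    "content": {"text": "Amazon Bedrock is a fully managed service."},
                    "location": {
                        "type": "S3",
                        "s3Location": {"uri": "s3://example-bucket/bedrock-ug.pdf"},
                    },
                }
            ]
        }
    ],
}
sources = cited_sources(sample_response)
```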

Integrate knowledge base with applications

To build RAG applications on top of Knowledge Bases for Amazon Bedrock, you can use the RetrieveAndGenerate API which allows you to query the knowledge base and get a response.

Here is an example using the AWS SDK for Python (Boto3):

    import boto3

    # Runtime client used to query knowledge bases
    bedrock_agent_runtime = boto3.client(
        service_name="bedrock-agent-runtime"
    )

    def retrieveAndGenerate(input, kbId):
        # Query the knowledge base and let the FM generate a response
        return bedrock_agent_runtime.retrieve_and_generate(
            input={
                'text': input
            },
            retrieveAndGenerateConfiguration={
                'type': 'KNOWLEDGE_BASE',
                'knowledgeBaseConfiguration': {
                    'knowledgeBaseId': kbId,
                    'modelArn': 'arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-3-sonnet-20240229-v1:0'
                }
            }
        )

    # Replace the knowledge base ID with your own
    response = retrieveAndGenerate("What is Amazon Bedrock?", "BFT0P4NR1U")["output"]["text"]

If you want to further customize your RAG solutions, consider using the Retrieve API, which returns the semantic search responses that you can use for the remaining part of the RAG workflow.

    import boto3

    # Runtime client used to query knowledge bases
    bedrock_agent_runtime = boto3.client(
        service_name="bedrock-agent-runtime"
    )

    def retrieve(query, kbId, numberOfResults=5):
        # Return the top matching chunks without generating a response
        return bedrock_agent_runtime.retrieve(
            retrievalQuery={
                'text': query
            },
            knowledgeBaseId=kbId,
            retrievalConfiguration={
                'vectorSearchConfiguration': {
                    'numberOfResults': numberOfResults
                }
            }
        )

    # Replace the knowledge base ID with your own
    response = retrieve("What is Amazon Bedrock?", "BGU0Q4NU0U")["retrievalResults"]

Things to know

- MongoDB Atlas cluster tier – This integration requires an Atlas cluster tier of at least M10.

- AWS PrivateLink – For the purposes of this demo, the MongoDB Atlas database IP Access List was configured to allow access from anywhere. For production deployments, AWS PrivateLink is the recommended way to have Amazon Bedrock establish a secure connection to your MongoDB Atlas cluster. Refer to the Amazon Bedrock User Guide (under MongoDB Atlas) for details.

- Vector embedding size – The dimension size of the vector index and the embedding model should be the same. For example, if you plan to use Cohere Embed (which has a dimension size of 1024) as the embedding model for the knowledge base, make sure to configure the vector search index accordingly.

- Metadata filters – You can add metadata for your source files to retrieve a well-defined subset of the semantically relevant chunks based on applied metadata filters. Refer to the documentation to learn more about how to use metadata filters.
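The vector embedding size rule above is easy to check before creating the knowledge base. Here is a minimal sketch that compares the `numDimensions` of the index definition from this post with the dimension of the chosen embedding model. The lookup table covers only the two models mentioned in this post, and the function name is hypothetical.

```python
from typing import Any, Dict

# Dimension sizes for the two embedding models mentioned in this post.
EMBEDDING_DIMENSIONS = {
    "Titan Embeddings G1 - Text": 1536,
    "Cohere Embed": 1024,
}


def check_index_dimensions(index_definition: Dict[str, Any], embedding_model: str) -> int:
    """Raise ValueError if any vector field does not match the model dimension."""
    expected = EMBEDDING_DIMENSIONS[embedding_model]
    mismatched = [
        field["path"]
        for field in index_definition["fields"]
        if field["type"] == "vector" and field["numDimensions"] != expected
    ]
    if mismatched:
        raise ValueError(
            f"{embedding_model} produces {expected}-dimensional vectors, "
            f"but these index fields differ: {mismatched}"
        )
    return expected


# The index definition used earlier in this post.
index_definition = {
    "fields": [
        {
            "numDimensions": 1536,
            "path": "AMAZON_BEDROCK_CHUNK_VECTOR",
            "similarity": "cosine",
            "type": "vector",
        },
        {"path": "AMAZON_BEDROCK_METADATA", "type": "filter"},
        {"path": "AMAZON_BEDROCK_TEXT_CHUNK", "type": "filter"},
    ]
}
```

Calling `check_index_dimensions(index_definition, "Cohere Embed")` raises an error here, which signals that the index would need `numDimensions` set to 1024 for that model.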

Now available

MongoDB Atlas vector store in Knowledge Bases for Amazon Bedrock is available in the US East (N. Virginia) and US West (Oregon) Regions. Be sure to check the full Region list for future updates.

Learn more

- MongoDB Atlas on AWS Marketplace

- Knowledge Bases for Amazon Bedrock product page

- Knowledge Bases for Amazon Bedrock documentation

Try out the MongoDB Atlas integration with Knowledge Bases for Amazon Bedrock! Send feedback to AWS re:Post for Amazon Bedrock or through your usual AWS contacts and engage with the generative AI builder community at community.aws.

— Abhishek

Author: Abhishek Gupta