Configure and use defaults for Amazon SageMaker resources with the SageMaker Python SDK

The Amazon SageMaker Python SDK is an open-source library for training and deploying machine learning (ML) models on Amazon SageMaker. Enterprise customers in tightly controlled industries such as healthcare and finance set up security guardrails to ensure their data is encrypted and traffic doesn’t traverse the internet. To ensure the SageMaker training and deployment of ML models follow these guardrails, it’s a common practice to set restrictions at the account or AWS Organizations level through service control policies and AWS Identity and Access Management (IAM) policies to enforce the usage of specific IAM roles, Amazon Virtual Private Cloud (Amazon VPC) configurations, and AWS Key Management Service (AWS KMS) keys. In such cases, data scientists have to provide these parameters to their ML model training and deployment code manually, by noting down subnets, security groups, and KMS keys. This puts the onus on data scientists to remember to specify these configurations in order to run their jobs successfully and avoid Access Denied errors.

Starting with SageMaker Python SDK version 2.148.0, you can now configure default values for parameters such as IAM roles, VPCs, and KMS keys. Administrators and end-users can initialize AWS infrastructure primitives with defaults specified in a configuration file in YAML format. Once configured, the Python SDK automatically inherits these values and propagates them to the underlying SageMaker API calls such as CreateProcessingJob(), CreateTrainingJob(), and CreateEndpointConfig(), with no additional actions needed. The SDK also supports multiple configuration files, allowing admins to set a configuration file for all users, and users can override it via a user-level configuration that can be stored in Amazon Simple Storage Service (Amazon S3), Amazon Elastic File System (Amazon EFS) for Amazon SageMaker Studio, or the user’s local file system.
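The layering of an admin-level file and a user-level file can be pictured as a nested dictionary merge in which user values win. The following is a minimal sketch of that idea only; `merge_configs` is a hypothetical helper, the role ARN is a placeholder, and the SDK's own merge logic may differ in details such as list handling.

```python
from copy import deepcopy


def merge_configs(base: dict, override: dict) -> dict:
    """Return a new dict in which values from `override` win over `base`."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Recurse so sibling keys in the base config are preserved.
            merged[key] = merge_configs(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


# Placeholder values for illustration only.
admin_config = {
    "SageMaker": {
        "TrainingJob": {
            "RoleArn": "arn:aws:iam::111122223333:role/example-admin-role",
            "EnableNetworkIsolation": False,
        }
    }
}
user_config = {"SageMaker": {"TrainingJob": {"EnableNetworkIsolation": True}}}

effective = merge_configs(admin_config, user_config)
print(effective["SageMaker"]["TrainingJob"])
```

In this sketch the user-level file changes only `EnableNetworkIsolation`, and the admin-provided `RoleArn` carries through untouched.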

In this post, we show you how to create and store the default configuration file in Studio and use the SDK defaults feature to create your SageMaker resources.

Solution overview

We demonstrate this new feature with an end-to-end AWS CloudFormation template that creates the required infrastructure, and creates a Studio domain in the deployed VPC. In addition, we create KMS keys for encrypting the volumes used in training and processing jobs. The steps are as follows:

- Launch the CloudFormation stack in your account. Alternatively, if you want to explore this feature on an existing SageMaker domain or notebook, skip this step.

- Populate the config.yaml file and save the file in the default location.

- Run a sample notebook with an end-to-end ML use case, including data processing, model training, and inference.

- Override the default configuration values.

Prerequisites

Before you get started, make sure you have an AWS account and an IAM user or role with administrator privileges. If you are a data scientist currently passing infrastructure parameters to resources in your notebook, you can skip the next step of setting up your environment and start creating the configuration file.

To use this feature, make sure to upgrade your SageMaker SDK version by running pip install --upgrade sagemaker.

Set up the environment

To deploy a complete infrastructure including networking and a Studio domain, complete the following steps:

- Clone the GitHub repository.

- Log in to your AWS account and open the AWS CloudFormation console.

- To deploy the networking resources, choose Create stack.

- Upload the template under setup/vpc_mode/01_networking.yaml.

- Provide a name for the stack (for example, networking-stack), and complete the remaining steps to create the stack.

- To deploy the Studio domain, choose Create stack again.

- Upload the template under setup/vpc_mode/02_sagemaker_studio.yaml.

- Provide a name for the stack (for example, sagemaker-stack), and provide the name of the networking stack when prompted for the CoreNetworkingStackName parameter.

- Proceed with the remaining steps, select the acknowledgements for IAM resources, and create the stack.

When the status of both stacks updates to CREATE_COMPLETE, proceed to the next step.

Create the configuration file

To use the default configuration for the SageMaker Python SDK, you create a config.yaml file in the format that the SDK expects. For the format of the config.yaml file, refer to Configuration file structure. Depending on your work environment, such as Studio notebooks, SageMaker notebook instances, or your local IDE, you can either save the configuration file at the default location or override the defaults by passing a config file location. For the default locations for other environments, refer to Configuration file locations. The following steps showcase the setup for a Studio notebook environment.

To easily create the config.yaml file, run the following cells in your Studio system terminal, replacing the placeholders with the CloudFormation stack names from the previous step:

This script automatically populates the YAML file, replacing the placeholders with the infrastructure defaults, and saves the file in the home folder. Then it copies the file into the default location for Studio notebooks. The resulting config file should look similar to the following format:
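As a minimal sketch of the same idea in Python, the snippet below fills a config template with infrastructure values and writes the result to disk. The section and key names follow the SageMaker API request shapes that the config file mirrors. Every value (`sg-EXAMPLE`, the subnet IDs, the KMS key ID, the role ARN) is a placeholder, `write_config` is a hypothetical helper, and the demo writes to a temporary directory rather than the SDK's default location.

```python
import tempfile
from pathlib import Path
from string import Template

# Template for a small defaults file covering processing and training jobs.
CONFIG_TEMPLATE = Template("""\
SchemaVersion: '1.0'
SageMaker:
  ProcessingJob:
    NetworkConfig:
      VpcConfig:
        SecurityGroupIds:
          - $security_group
        Subnets:
          - $subnet_1
          - $subnet_2
    ProcessingResources:
      ClusterConfig:
        VolumeKmsKeyId: $kms_key
    RoleArn: $role_arn
  TrainingJob:
    ResourceConfig:
      VolumeKmsKeyId: $kms_key
    RoleArn: $role_arn
    VpcConfig:
      SecurityGroupIds:
        - $security_group
      Subnets:
        - $subnet_1
        - $subnet_2
""")


def write_config(values: dict, destination: Path) -> Path:
    """Render the template with `values` and save it at `destination`."""
    destination.parent.mkdir(parents=True, exist_ok=True)
    # substitute() raises KeyError if any placeholder is left unfilled.
    destination.write_text(CONFIG_TEMPLATE.substitute(values))
    return destination


# Placeholder values; replace with the outputs of your own stacks.
example_values = {
    "security_group": "sg-EXAMPLE",
    "subnet_1": "subnet-EXAMPLE1",
    "subnet_2": "subnet-EXAMPLE2",
    "kms_key": "example-kms-key-id",
    "role_arn": "arn:aws:iam::111122223333:role/example-sagemaker-role",
}

demo_path = Path(tempfile.mkdtemp()) / "config.yaml"
written = write_config(example_values, demo_path)
print(written.read_text())
```

To have the SDK pick the file up automatically, copy it to the default location for your environment as listed in Configuration file locations.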

If you have an existing domain and networking configuration set up, create the config.yaml file in the required format and save it in the default location for Studio notebooks.

Note that these defaults simply auto-populate the configuration values for the appropriate SageMaker SDK calls, and don’t restrict users to any specific VPC, subnet, or role. As an administrator, if you want your users to use a specific configuration or role, use IAM condition keys to enforce the default values.
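One way an administrator might pair the defaults with enforcement is an IAM policy statement that denies job creation when no VPC subnets are supplied. The following sketch builds such a statement as a Python dictionary and prints it as JSON. It assumes the `sagemaker:VpcSubnets` condition key and covers only training and processing jobs; `deny_jobs_without_vpc` is a hypothetical helper, and you should check the condition keys supported by each action before adopting a policy like this.

```python
import json


def deny_jobs_without_vpc() -> dict:
    """Build a policy that denies job creation when no subnets are specified."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyJobsWithoutVpc",
                "Effect": "Deny",
                "Action": [
                    "sagemaker:CreateTrainingJob",
                    "sagemaker:CreateProcessingJob",
                ],
                "Resource": "*",
                # The Null operator matches requests where the key is absent.
                "Condition": {"Null": {"sagemaker:VpcSubnets": "true"}},
            }
        ],
    }


policy = deny_jobs_without_vpc()
print(json.dumps(policy, indent=2))
```

With a policy like this in place, a job that picks up its VPC settings from the defaults file passes the check, while a job submitted without any VPC configuration is rejected.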

Additionally, each API call can have its own configurations. For example, in the preceding config file sample, you can specify vpc-a and subnet-a for training jobs, and specify vpc-b and subnet-c, subnet-d for processing jobs.

Run a sample notebook

Now that you have set the configuration file, you can start running your model building and training notebooks as usual, without the need to explicitly set networking and encryption parameters, for most SDK functions. See Supported APIs and parameters for a complete list of supported API calls and parameters.

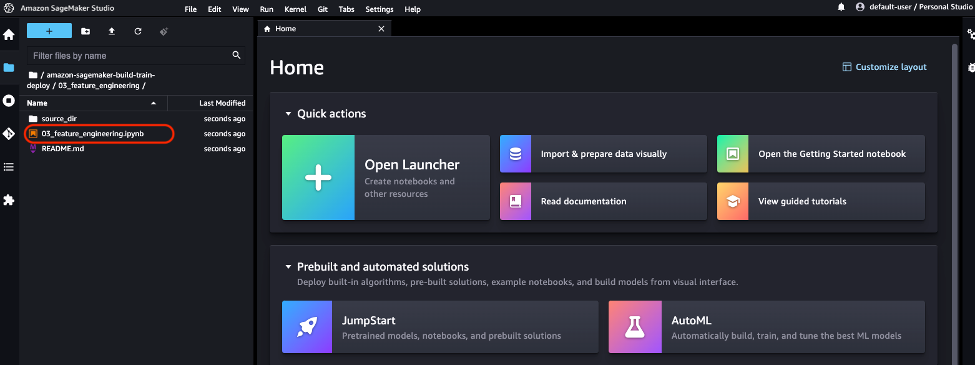

In Studio, choose the File Explorer icon in the navigation pane and open 03_feature_engineering/03_feature_engineering.ipynb, as shown in the following screenshot.

Run the notebook cells one by one, and notice that you are not specifying any additional configuration. When you create the processor object, you will see the cell outputs like the following example.

As you can see in the output, the default configuration is automatically applied to the processing job, without needing any additional input from the user.
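For reference, a processor created without any infrastructure arguments might look like the following sketch. It assumes SageMaker Python SDK version 2.148.0 or later with a defaults file in place, so that the role, VPC settings, and KMS keys are resolved from the config rather than passed in. The framework version and instance type are illustrative choices, `create_processor` is a hypothetical wrapper, and calling it requires AWS credentials and a Region.

```python
def create_processor():
    """Create an SKLearnProcessor that relies on the SDK defaults file."""
    # Imported inside the function so this snippet loads even where the
    # SageMaker SDK is not installed; calling it still requires the SDK.
    from sagemaker.sklearn.processing import SKLearnProcessor

    # No role, subnets, security groups, or KMS keys are passed here; the
    # assumption is that the SDK fills them in from the config file.
    return SKLearnProcessor(
        framework_version="0.23-1",
        instance_type="ml.m5.large",
        instance_count=1,
        base_job_name="end-to-end-ml-sm-proc",
    )
```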

When you run the next cell to run the processor, you can also verify the defaults are set by viewing the job on the SageMaker console. Choose Processing jobs under Processing in the navigation pane, as shown in the following screenshot.

Choose the processing job with the prefix end-to-end-ml-sm-proc, and you should be able to view the networking and encryption already configured.

You can continue running the remaining notebooks to train and deploy the model, and you will notice that the infrastructure defaults are automatically applied for both training jobs and models.

Override the default configuration file

There could be cases where a user needs to override the default configuration, for example, to experiment with public internet access, or to update the networking configuration if the subnet runs out of IP addresses. In such cases, the Python SDK also allows you to provide a custom location for the configuration file, either on local storage or in Amazon S3. In this section, we explore an example.

Open the user-configs.yaml file in your home directory and update the EnableNetworkIsolation value to True, under the TrainingJob section.

Now, open the same notebook, and add the following cell to the beginning of the notebook:
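A minimal sketch of such a cell, assuming the file is named user-configs.yaml and sits in your home directory, and using the SDK's `SAGEMAKER_USER_CONFIG_OVERRIDE` environment variable:

```python
import os
from pathlib import Path

# Location of the user-level config file (assumed to be in the home directory).
user_config_path = Path.home() / "user-configs.yaml"

# Set this before creating any SageMaker session or SDK object, so that the
# SDK reads the override when it loads its configuration.
os.environ["SAGEMAKER_USER_CONFIG_OVERRIDE"] = str(user_config_path)
print(os.environ["SAGEMAKER_USER_CONFIG_OVERRIDE"])
```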

With this cell, you point the SDK to the location of the config file. Now, when you create the processor object, you’ll notice that the default config has been overridden to enable network isolation, and the processing job will fail in network isolation mode.

You can use the same override environment variable to set the location of the configuration file if you’re using a local environment such as Visual Studio Code.

Debug and retrieve defaults

For quick troubleshooting when you run into errors with API calls from your notebook, the cell output displays the applied default configurations, as shown in the previous section. To see the exact Boto3 call and the attribute values passed from the default config file, you can turn on Boto3 logging. To turn on logging, run the following cell at the top of the notebook:
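A minimal sketch of such a cell, using only the standard `logging` module to raise the `botocore.endpoint` logger to DEBUG (Boto3 also offers `boto3.set_stream_logger` for the same purpose). The helper name `enable_request_logging` and the log format are illustrative choices.

```python
import logging
import sys


def enable_request_logging(level: int = logging.DEBUG) -> logging.Logger:
    """Send botocore request logs to stdout at the given level."""
    logger = logging.getLogger("botocore.endpoint")
    logger.setLevel(level)
    if not logger.handlers:
        # Attach a single stdout handler so rerunning the cell
        # does not duplicate log lines.
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(name)s [%(levelname)s] %(message)s")
        )
        logger.addHandler(handler)
    return logger


request_logger = enable_request_logging()
```

DEBUG output from this logger is verbose and includes full request parameters, so consider turning it off again once you have inspected the call.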

Any subsequent Boto3 calls will be logged with the complete request, visible under the body section in the log.

You can also view the collection of default configurations using the session.sagemaker_config value as shown in the following example.

Finally, if you’re using Boto3 to create your SageMaker resources, you can retrieve the default configuration values using the sagemaker_config variable. For example, to run the processing job in 03_feature_engineering.ipynb using Boto3, you can enter the contents of the following cell in the same notebook and run the cell:
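As a sketch of this pattern, the helper below takes a config dictionary of the shape returned by `session.sagemaker_config` and builds part of a `create_processing_job` request from it. The helper name, job name, image URI, instance settings, and all config values are hypothetical placeholders. A real request also needs inputs, outputs, and a full app specification.

```python
from copy import deepcopy


def build_processing_request(sagemaker_config: dict, job_name: str, image_uri: str) -> dict:
    """Build a partial CreateProcessingJob request from the default config."""
    job_defaults = sagemaker_config.get("SageMaker", {}).get("ProcessingJob", {})

    # Instance settings are illustrative; only the KMS key comes from defaults.
    cluster = {"InstanceCount": 1, "InstanceType": "ml.m5.large", "VolumeSizeInGB": 30}
    kms_key = (
        job_defaults.get("ProcessingResources", {})
        .get("ClusterConfig", {})
        .get("VolumeKmsKeyId")
    )
    if kms_key:
        cluster["VolumeKmsKeyId"] = kms_key

    request = {
        "ProcessingJobName": job_name,
        "AppSpecification": {"ImageUri": image_uri},
        "ProcessingResources": {"ClusterConfig": cluster},
    }
    if "RoleArn" in job_defaults:
        request["RoleArn"] = job_defaults["RoleArn"]
    if "NetworkConfig" in job_defaults:
        request["NetworkConfig"] = deepcopy(job_defaults["NetworkConfig"])
    return request


# Placeholder config; in a notebook this would come from session.sagemaker_config.
example_config = {
    "SchemaVersion": "1.0",
    "SageMaker": {
        "ProcessingJob": {
            "RoleArn": "arn:aws:iam::111122223333:role/example-role",
            "NetworkConfig": {
                "VpcConfig": {
                    "SecurityGroupIds": ["sg-EXAMPLE"],
                    "Subnets": ["subnet-EXAMPLE"],
                }
            },
            "ProcessingResources": {
                "ClusterConfig": {"VolumeKmsKeyId": "example-kms-key-id"}
            },
        }
    },
}

request = build_processing_request(example_config, "example-job", "example-image-uri")
print(request["NetworkConfig"])
```

The resulting dictionary could then be completed and passed to the Boto3 SageMaker client, for example as `create_processing_job(**request)`.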

Automate config file creation

For administrators, having to create the config file and save the file to each SageMaker notebook instance or Studio user profile can be a daunting task. Although you can recommend that users use a common file stored in a default S3 location, it puts the additional overhead of specifying the override on the data scientists.

To automate this, administrators can use SageMaker Lifecycle Configurations (LCC). For Studio user profiles or notebook instances, you can attach the following sample LCC script as a default LCC for the user’s default Jupyter Server app:

See Use Lifecycle Configurations for Amazon SageMaker Studio or Customize a Notebook Instance for instructions on creating and setting a default lifecycle script.

Clean up

When you’re done experimenting with this feature, clean up your resources to avoid paying additional costs. If you have provisioned new resources as specified in this post, complete the following steps to clean up your resources:

- Shut down your Studio apps for the user profile. See Shut Down and Update SageMaker Studio and Studio Apps for instructions. Ensure that all apps are deleted before deleting the stack.

- Delete the EFS volume created for the Studio domain. You can view the EFS volume attached with the domain by using a DescribeDomain API call.

- Delete the Studio domain stack.

- Delete the security groups created for the Studio domain. You can find them on the Amazon Elastic Compute Cloud (Amazon EC2) console, with the names security-group-for-inbound-nfs-d-xxx and security-group-for-outbound-nfs-d-xxx.

- Delete the networking stack.

Conclusion

In this post, we discussed configuring and using default values for key infrastructure parameters using the SageMaker Python SDK. This allows administrators to set default configurations for data scientists, thereby saving time for users and admins, eliminating the burden of repetitively specifying parameters, and resulting in leaner and more manageable code. For the full list of supported parameters and APIs, see Configuring and using defaults with the SageMaker Python SDK. For any questions and discussions, join the Machine Learning & AI community.

About the Authors

Giuseppe Angelo Porcelli is a Principal Machine Learning Specialist Solutions Architect for Amazon Web Services. With several years of software engineering and ML background, he works with customers of any size to deeply understand their business and technical needs and design AI and Machine Learning solutions that make the best use of the AWS Cloud and the Amazon Machine Learning stack. He has worked on projects in different domains, including MLOps, Computer Vision, and NLP, involving a broad set of AWS services. In his free time, Giuseppe enjoys playing football.

Bruno Pistone is an AI/ML Specialist Solutions Architect for AWS based in Milan. He helps customers of any size to deeply understand their technical needs and design AI and Machine Learning solutions that make the best use of the AWS Cloud and the Amazon Machine Learning stack. His fields of expertise are end-to-end Machine Learning, Machine Learning Industrialization, and MLOps. He enjoys spending time with his friends and exploring new places, as well as travelling to new destinations.

Durga Sury is an ML Solutions Architect on the Amazon SageMaker Service SA team. She is passionate about making machine learning accessible to everyone. In her 4 years at AWS, she has helped set up AI/ML platforms for enterprise customers. When she isn’t working, she loves motorcycle rides, mystery novels, and long walks with her 5-year-old husky.

Author: Giuseppe Angelo Porcelli