DeepSeek-R1 now available as a fully managed serverless model in Amazon Bedrock

As of January 30, DeepSeek-R1 models became available in Amazon Bedrock through the Amazon Bedrock Marketplace and Amazon Bedrock Custom Model Import. Since then, thousands of customers have deployed these models in Amazon Bedrock. Customers value the robust guardrails and comprehensive tooling for safe AI deployment. Today, we’re making it even easier to use DeepSeek in Amazon Bedrock through an expanded range of options, including a new serverless solution.

The fully managed DeepSeek-R1 model is now generally available in Amazon Bedrock. Amazon Web Services (AWS) is the first cloud service provider (CSP) to deliver DeepSeek-R1 as a fully managed, generally available model. You can accelerate innovation and deliver tangible business value with DeepSeek on AWS without having to manage infrastructure complexities. You can power your generative AI applications with DeepSeek-R1’s capabilities through a single API in the fully managed Amazon Bedrock service and benefit from its extensive features and tooling.

According to DeepSeek, their model is publicly available under the MIT license and offers strong capabilities in reasoning, coding, and natural language understanding. These capabilities power intelligent decision support, software development, mathematical problem-solving, scientific analysis, data insights, and comprehensive knowledge management systems.

As is the case for all AI solutions, give careful consideration to data privacy requirements when implementing in your production environments, check for bias in output, and monitor your results. When implementing publicly available models like DeepSeek-R1, consider the following:

- Data security – You can access the enterprise-grade security, monitoring, and cost control features of Amazon Bedrock that are essential for deploying AI responsibly at scale, all while retaining complete control over your data. Users’ inputs and model outputs aren’t shared with any model providers. When you communicate with the DeepSeek-R1 model in Amazon Bedrock, key security features apply by default, including data encryption at rest and in transit, fine-grained access controls, and secure connectivity options. You can also download various compliance certifications.

- Responsible AI – You can implement safeguards customized to your application requirements and responsible AI policies with Amazon Bedrock Guardrails. Key features include content filtering, sensitive information filtering, and customizable security controls to prevent hallucinations using contextual grounding and Automated Reasoning checks. This means you can control the interaction between users and the DeepSeek-R1 model in Amazon Bedrock with your defined set of policies by filtering undesirable and harmful content in your generative AI applications.

- Model evaluation – You can evaluate and compare models to identify the optimal model for your use case, including DeepSeek-R1, in a few steps through either automatic or human evaluations by using Amazon Bedrock model evaluation tools. You can choose automatic evaluation with predefined metrics such as accuracy, robustness, and toxicity. Alternatively, you can choose human evaluation workflows for subjective or custom metrics such as relevance, style, and alignment to brand voice. Model evaluation provides built-in curated datasets, or you can bring in your own datasets.

We strongly recommend integrating Amazon Bedrock Guardrails and using Amazon Bedrock model evaluation features with your DeepSeek-R1 model to add robust protection for your generative AI applications. To learn more, visit Protect your DeepSeek model deployments with Amazon Bedrock Guardrails and Evaluate the performance of Amazon Bedrock resources.

Get started with the DeepSeek-R1 model in Amazon Bedrock

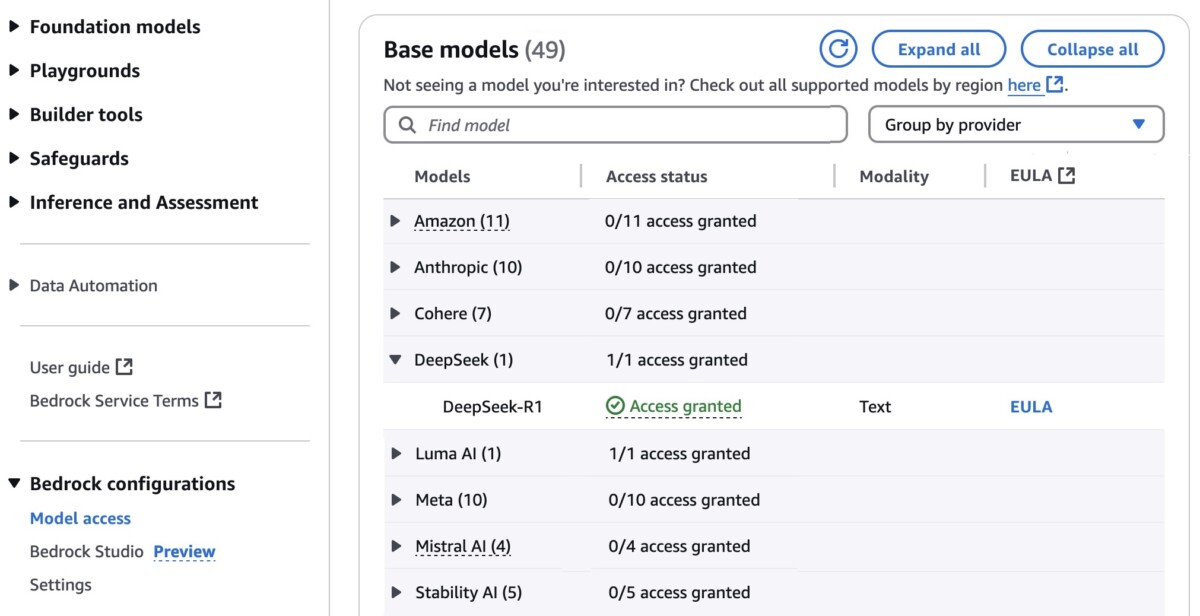

If you’re new to using DeepSeek-R1 models, go to the Amazon Bedrock console and choose Model access under Bedrock configurations in the left navigation pane. To access the fully managed DeepSeek-R1 model, request access for DeepSeek-R1 in DeepSeek. You’ll then be granted access to the model in Amazon Bedrock.

Next, to test the DeepSeek-R1 model in Amazon Bedrock, choose Chat/Text under Playgrounds in the left menu pane. Then choose Select model in the upper left, and select DeepSeek as the category and DeepSeek-R1 as the model. Then choose Apply.

Using the selected DeepSeek-R1 model, I run the following prompt example:

A family has $5,000 to save for their vacation next year. They can place the money in a savings account earning 2% interest annually or in a certificate of deposit earning 4% interest annually but with no access to the funds until the vacation. If they need $1,000 for emergency expenses during the year, how should they divide their money between the two options to maximize their vacation fund?

This prompt requires a multi-step chain of thought: the model has to weigh the two interest rates against the need to keep $1,000 accessible, and it responds with detailed, precise reasoning.
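As a sanity check on the model's answer, the arithmetic behind the prompt can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper `vacation_fund` is hypothetical, interest is applied once at year end, and the $1,000 for emergencies is treated as withdrawn from savings at the end of the year (the prompt does not say when it is spent, so this is an upper bound on the savings interest).

```python
from decimal import Decimal


def vacation_fund(cd_amount, total=Decimal("5000"), emergency=Decimal("1000"),
                  savings_rate=Decimal("0.02"), cd_rate=Decimal("0.04")):
    """Year-end vacation fund for a given CD deposit (hypothetical helper).

    Assumption: the emergency money is taken from savings at year end,
    so the savings balance earns a full year of interest first.
    """
    savings_amount = total - cd_amount
    # The CD is locked, so savings alone must cover the emergency expenses.
    if cd_amount < 0 or savings_amount < emergency:
        raise ValueError("savings must cover the emergency expenses")
    cd_value = cd_amount * (1 + cd_rate)
    savings_value = savings_amount * (1 + savings_rate) - emergency
    return cd_value + savings_value


# Try CD deposits from $0 to $4,000 in $500 steps and keep the best one.
candidates = [Decimal(x) for x in range(0, 4001, 500)]
best = max(candidates, key=vacation_fund)
print(best, vacation_fund(best))
```

Under these assumptions, the best split keeps exactly $1,000 in savings and locks the remaining $4,000 in the certificate of deposit, because every dollar moved to the CD earns the higher rate.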

To learn more about usage recommendations for prompts, refer to the README of the DeepSeek-R1 model in its GitHub repository.

By choosing View API request, you can also access the model using code examples in the AWS Command Line Interface (AWS CLI) and AWS SDK. You can use us.deepseek.r1-v1:0 as the model ID.

Here is a sample of the AWS CLI command:

    aws bedrock-runtime invoke-model \
        --model-id us.deepseek.r1-v1:0 \
        --body '{"messages":[{"role":"user","content":[{"type":"text","text":"[n"}]}],"max_tokens":2000,"temperature":0.6,"top_k":250,"top_p":0.9,"stop_sequences":["\n\nHuman:"]}' \
        --cli-binary-format raw-in-base64-out \
        --region us-west-2 \
        invoke-model-output.txt

The model supports both the InvokeModel and Converse APIs. The following Python code example shows how to send a text message to the DeepSeek-R1 model using the Amazon Bedrock Converse API for text generation.

    import boto3
    from botocore.exceptions import ClientError

    # Create a Bedrock Runtime client in the AWS Region you want to use.
    client = boto3.client("bedrock-runtime", region_name="us-west-2")

    # Set the model ID for DeepSeek-R1.
    model_id = "us.deepseek.r1-v1:0"

    # Start a conversation with the user message.
    user_message = "Describe the purpose of a 'hello world' program in one line."
    conversation = [
        {
            "role": "user",
            "content": [{"text": user_message}],
        }
    ]

    try:
        # Send the message to the model, using a basic inference configuration.
        response = client.converse(
            modelId=model_id,
            messages=conversation,
            inferenceConfig={"maxTokens": 2000, "temperature": 0.6, "topP": 0.9},
        )

        # Extract and print the response text.
        response_text = response["output"]["message"]["content"][0]["text"]
        print(response_text)

    except (ClientError, Exception) as e:
        print(f"ERROR: Can't invoke '{model_id}'. Reason: {e}")
        exit(1)

To enable Amazon Bedrock Guardrails on the DeepSeek-R1 model, select Guardrails under Safeguards in the left navigation pane, and create a guardrail by configuring as many filters as you need. For example, if you add a word filter for “politics”, your guardrail recognizes this word in the prompt and shows you the blocked message.
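Once a guardrail exists, you can also attach it to a Converse API call in code. The following is a minimal sketch, not an official sample: the helper names `build_guarded_request` and `was_blocked` are hypothetical, and `your-guardrail-id` and the `DRAFT` version are placeholders for your own guardrail. It uses the `guardrailConfig` parameter of the Converse API and checks the `stopReason` field of the response.

```python
def build_guarded_request(model_id, user_message, guardrail_id, guardrail_version):
    """Build keyword arguments for client.converse() with a guardrail attached."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": user_message}]}],
        "inferenceConfig": {"maxTokens": 2000, "temperature": 0.6, "topP": 0.9},
        "guardrailConfig": {
            "guardrailIdentifier": guardrail_id,  # placeholder: your guardrail ID or ARN
            "guardrailVersion": guardrail_version,  # a version number or "DRAFT"
            "trace": "enabled",
        },
    }


def was_blocked(response):
    """Return True if the Converse response reports that the guardrail intervened."""
    return response.get("stopReason") == "guardrail_intervened"


request = build_guarded_request(
    "us.deepseek.r1-v1:0",
    "What do you think about politics?",
    "your-guardrail-id",
    "DRAFT",
)

# To send the request (requires AWS credentials and model access):
# import boto3
# client = boto3.client("bedrock-runtime", region_name="us-west-2")
# response = client.converse(**request)
# print("Blocked by guardrail" if was_blocked(response) else response["output"]["message"])
```

Keeping the request builder separate from the network call makes it easy to inspect or log exactly what will be sent before you invoke the model.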

You can test the guardrail with different inputs to assess the guardrail’s performance. You can refine the guardrail by setting denied topics, word filters, sensitive information filters, and blocked messaging until it matches your needs.
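If you prefer to script this kind of testing, one option is the ApplyGuardrail API, which evaluates text against a guardrail without invoking a model. The sketch below is an illustration under assumptions: `check_prompts` is a hypothetical helper, and the guardrail ID and version in the usage comments are placeholders. The client is passed in as a parameter so the function can be exercised with a stub.

```python
def check_prompts(client, guardrail_id, guardrail_version, prompts):
    """Return a dict mapping each prompt to True if the guardrail intervened."""
    results = {}
    for prompt in prompts:
        response = client.apply_guardrail(
            guardrailIdentifier=guardrail_id,
            guardrailVersion=guardrail_version,
            source="INPUT",  # evaluate the text as user input
            content=[{"text": {"text": prompt}}],
        )
        results[prompt] = response["action"] == "GUARDRAIL_INTERVENED"
    return results


# Example usage (requires AWS credentials and an existing guardrail):
# import boto3
# client = boto3.client("bedrock-runtime", region_name="us-west-2")
# outcome = check_prompts(client, "your-guardrail-id", "DRAFT",
#                         ["Tell me about politics.", "Explain recursion."])
# for prompt, blocked in outcome.items():
#     print(f"{'BLOCKED' if blocked else 'allowed'}: {prompt}")
```

Running a fixed list of prompts after each change to the guardrail gives you a simple regression check while you tune filters and denied topics.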

To learn more about Amazon Bedrock Guardrails, visit Stop harmful content in models using Amazon Bedrock Guardrails in the AWS documentation or other deep dive blog posts about Amazon Bedrock Guardrails on the AWS Machine Learning Blog channel.

Now available

DeepSeek-R1 is now available as a fully managed model in Amazon Bedrock in the US East (N. Virginia), US East (Ohio), and US West (Oregon) AWS Regions through cross-Region inference. Check the full Region list for future updates. To learn more, check out the DeepSeek in Amazon Bedrock product page and the Amazon Bedrock pricing page.

Give the DeepSeek-R1 model a try in the Amazon Bedrock console today and send feedback to AWS re:Post for Amazon Bedrock or through your usual AWS Support contacts.

— Channy

Updated on March 10, 2025 — Fixed a screenshot of model selection and model ID.

Author: Channy Yun (윤석찬)