Efficient continual pre-training LLMs for financial domains

Large language models (LLMs) are generally trained on large publicly available datasets that are domain agnostic… For example, Meta’s Llama models are trained on datasets such as CommonCrawl, C4, Wikipedia, and ArXiv… Although the resulting models yield amazingly good results for general tasks…

Large language models (LLMs) are generally trained on large publicly available datasets that are domain agnostic. For example, Meta’s Llama models are trained on datasets such as CommonCrawl, C4, Wikipedia, and ArXiv. These datasets encompass a broad range of topics and domains. Although the resulting models yield amazingly good results for general tasks, such as text generation and entity recognition, there is evidence that models trained with domain-specific datasets can further improve LLM performance. For example, the training data used for BloombergGPT is 51% domain-specific documents, including financial news, filings, and other financial materials. The resulting LLM outperforms LLMs trained on non-domain-specific datasets when tested on finance-specific tasks. The authors of BloombergGPT concluded that their model outperforms all other models tested for four of the five financial tasks. The model provided even better performance when tested for Bloomberg’s internal financial tasks by a wide margin—as much as 60 points better (out of 100). Although you can learn more about the comprehensive evaluation results in the paper, the following sample captured from the BloombergGPT paper can give you a glimpse of the benefit of training LLMs using financial domain-specific data. As shown in the example, the BloombergGPT model provided correct answers while other non-domain-specific models struggled:

This post provides a guide to training LLMs specifically for the financial domain. We cover the following key areas:

- Data collection and preparation – Guidance on sourcing and curating relevant financial data for effective model training

- Continual pre-training vs. fine-tuning – When to use each technique to optimize your LLM’s performance

- Efficient continual pre-training – Strategies to streamline the continual pre-training process, saving time and resources

This post brings together the expertise of the applied science research team within Amazon Finance Technology and the AWS Worldwide Specialist team for the Global Financial Industry. Some of the content is based on the paper Efficient Continual Pre-training for Building Domain Specific Large Language Models.

Collecting and preparing finance data

Domain continual pre-training necessities a large-scale, high-quality, domain-specific dataset. The following are the main steps for domain dataset curation:

- Identify data sources – Potential data sources for domain corpus include open web, Wikipedia, books, social media, and internal documents.

- Domain data filters – Because the ultimate goal is to curate domain corpus, you might need apply additional steps to filter out samples that irrelevant to the target domain. This reduces useless corpus for continual pre-training and reduces training cost.

- Preprocessing – You might consider a series of preprocessing steps to improve data quality and training efficiency. For example, certain data sources can contain a fair number of noisy tokens; deduplication is considered a useful step to improve data quality and reduce training cost.

To develop financial LLMs, you can use two important data sources: News CommonCrawl and SEC filings. An SEC filing is a financial statement or other formal document submitted to the US Securities and Exchange Commission (SEC). Publicly listed companies are required to file various documents regularly. This creates a large number of documents over the years. News CommonCrawl is a dataset released by CommonCrawl in 2016. It contains news articles from news sites all over the world.

News CommonCrawl is available on Amazon Simple Storage Service (Amazon S3) in the commoncrawl bucket at crawl-data/CC-NEWS/. You can get the listings of files using the AWS Command Line Interface (AWS CLI) and the following command:

In Efficient Continual Pre-training for Building Domain Specific Large Language Models, the authors use a URL and keyword-based approach to filter financial news articles from generic news. Specifically, the authors maintain a list of important financial news outlets and a set of keywords related to financial news. We identify an article as financial news if either it comes from financial news outlets or any keywords show up in the URL. This simple yet effective approach enables you to identify financial news from not only financial news outlets but also finance sections of generic news outlets.

SEC filings are available online through the SEC’s EDGAR (Electronic Data Gathering, Analysis, and Retrieval) database, which provides open data access. You can scrape the filings from EDGAR directly, or use APIs in Amazon SageMaker with a few lines of code, for any period of time and for a large number of tickers (i.e., the SEC assigned identifier). To learn more, refer to SEC Filing Retrieval.

The following table summarizes the key details of both data sources.

| . | News CommonCrawl | SEC Filing |

| Coverage | 2016-2022 | 1993-2022 |

| Size | 25.8 billion words | 5.1 billion words |

The authors go through a few extra preprocessing steps before the data is fed into a training algorithm. First, we observe that SEC filings contain noisy text due to the removal of tables and figures, so the authors remove short sentences that are deemed to be table or figure labels. Secondly, we apply a locality sensitive hashing algorithm to deduplicate the new articles and filings. For SEC filings, we deduplicate at the section level instead of the document level. Lastly, we concatenate documents into a long string, tokenize it, and chunk the tokenization into pieces of max input length supported by the model to be trained. This improves the throughput of continual pre-training and reduces the training cost.

Continual pre-training vs. fine-tuning

Most available LLMs are general purpose and lack domain-specific abilities. Domain LLMs have shown considerable performance in medical, finance, or scientific domains. For an LLM to acquire domain-specific knowledge, there are four methods: training from scratch, continual pre-training, instruction fine-tuning on domain tasks, and Retrieval Augmented Generation (RAG).

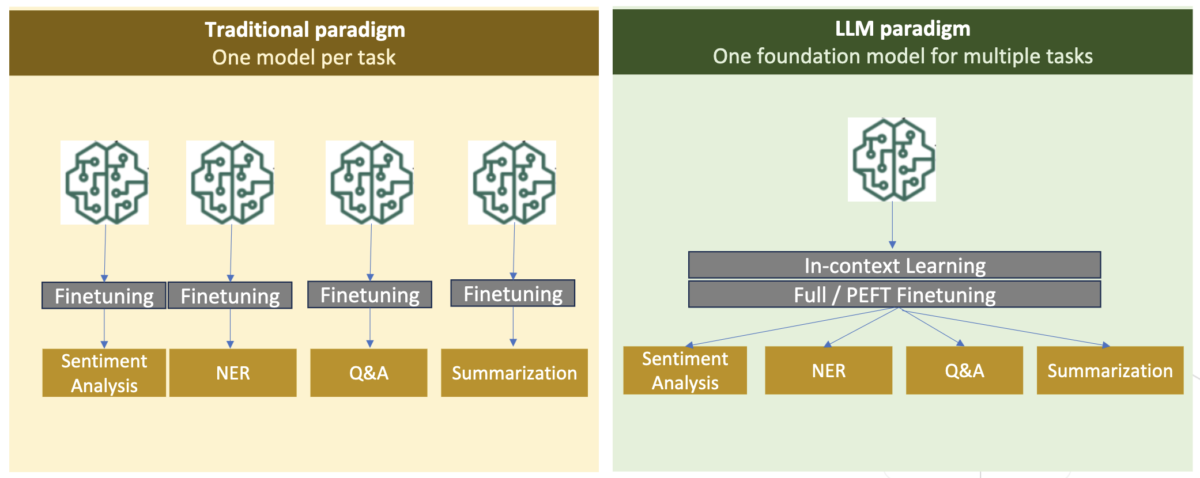

In traditional models, fine-tuning is usually used to create task-specific models for a domain. This means maintaining multiple models for multiple tasks like entity extraction, intent classification, sentiment analysis, or question answering. With the advent of LLMs, the need to maintain separate models has become obsolete by using techniques like in-context learning or prompting. This saves the effort required to maintain a stack of models for related but distinct tasks.

Intuitively, you can train LLMs from scratch with domain-specific data. Although most of the work to create domain LLMs has focused on training from scratch, it is prohibitively expensive. For example, the GPT-4 model costs over $100 million to train. These models are trained on a mix of open domain data and domain data. Continual pre-training can help models acquire domain-specific knowledge without incurring the cost of pre-training from scratch because you pre-train an existing open domain LLM on only the domain data.

With instruction fine-tuning on a task, you can’t make the model acquire domain knowledge because the LLM only acquires domain information contained in the instruction fine-tuning dataset. Unless a very large dataset for instruction fine-tuning is used, it is not enough to acquire domain knowledge. Sourcing high-quality instruction datasets is usually challenging and is the reason to use LLMs in first place. Also, instruction fine-tuning on one task can affect performance on other tasks (as seen in this paper). However, instruction fine-tuning is more cost-effective than either of the pre-training alternatives.

The following figure compares traditional task-specific fine-tuning. vs in-context learning paradigm with LLMs.

RAG is the most effective way of guiding an LLM to generate responses grounded in a domain. Although it can guide a model to generate responses by providing facts from the domain as auxiliary information, it doesn’t acquire the domain-specific language because the LLM is still relying on non-domain language style to generate the responses.

RAG is the most effective way of guiding an LLM to generate responses grounded in a domain. Although it can guide a model to generate responses by providing facts from the domain as auxiliary information, it doesn’t acquire the domain-specific language because the LLM is still relying on non-domain language style to generate the responses.

Continual pre-training is a middle ground between pre-training and instruction fine-tuning in terms of cost while being a strong alternative to gaining domain-specific knowledge and style. It can provide a general model over which further instruction fine-tuning on limited instruction data can be performed. Continual pre-training can be a cost-effective strategy for specialized domains where set of downstream tasks is large or unknown and labeled instruction tuning data is limited. In other scenarios, instruction fine-tuning or RAG might be more suitable.

To learn more about fine-tuning, RAG, and model training, refer to Fine-tune a foundation model, Retrieval Augmented Generation (RAG), and Train a Model with Amazon SageMaker, respectively. For this post, we focus on efficient continual pre-training.

Methodology of efficient continual pre-training

Continual pre-training consists of the following methodology:

- Domain-Adaptive Continual Pre-training (DACP) – In the paper Efficient Continual Pre-training for Building Domain Specific Large Language Models, the authors continually pre-train the Pythia language model suite on the financial corpus to adapt it to the finance domain. The objective is to create financial LLMs by feeding data from the whole financial domain into an open-sourced model. Because the training corpus contains all the curated datasets in the domain, the resultant model should acquire finance-specific knowledge, thereby becoming a versatile model for various financial tasks. This results in FinPythia models.

- Task-Adaptive Continual Pre-training (TACP) – The authors pre-train the models further on labeled and unlabeled task data to tailor them for specific tasks. In certain circumstances, developers may prefer models delivering better performance on a group of in-domain tasks rather than a domain-generic model. TACP is designed as continual pre-training aiming to enhance performance on targeted tasks, without requirements for labeled data. Specifically, the authors continually pre-train the open sourced models on the task tokens (without labels). The primary limitation of TACP lies in constructing task-specific LLMs instead of foundation LLMs, owing to the sole use of unlabeled task data for training. Although DACP uses a much larger corpus, it is prohibitively expensive. To balance these limitations, the authors propose two approaches that aim to build domain-specific foundation LLMs while preserving superior performance on target tasks:

- Efficient Task-Similar DACP (ETS-DACP) – The authors propose selecting a subset of financial corpus that is highly similar to the task data using embedding similarity. This subset is used for continual pre-training to make it more efficient. Specifically, the authors continually pre-train the open sourced LLM on a small corpus extracted from the financial corpus that is close to the target tasks in distribution. This can help improve task performance because we adopt the model to the distribution of task tokens despite labeled data not being required.

- Efficient Task-Agnostic DACP (ETA-DACP) – The authors propose using metrics like perplexity and token type entropy that don’t require task data to select samples from financial corpus for efficient continual pre-training. This approach is designed to deal with scenarios where task data is unavailable or more versatile domain models for the broader domain are preferred. The authors adopt two dimensions to select data samples that are important for obtaining domain information from a subset of pre-training domain data: novelty and diversity. Novelty, measured by the perplexity recorded by the target model, refers to the information that was unseen by the LLM before. Data with high novelty indicates novel knowledge for the LLM, and such data is viewed as more difficult to learn. This updates generic LLMs with intensive domain knowledge during continual pre-training. Diversity, on the other hand, captures the diversity of distributions of token types in the domain corpus, which has been documented as a useful feature in the research of curriculum learning on language modeling.

The following figure compares an example of ETS-DACP (left) vs. ETA-DACP (right).

We adopt two sampling schemes to actively select data points from curated financial corpus: hard sampling and soft sampling. The former is done by first ranking the financial corpus by corresponding metrics and then selecting the top-k samples, where k is predetermined according to the training budget. For the latter, the authors assign sampling weights for each data points according the metric values, and then randomly sample k data points to meet the training budget.

Result and analysis

The authors evaluate the resulting financial LLMs on an array of financial tasks to investigate the efficacy of continual pre-training:

- Financial Phrase Bank – A sentiment classification task on financial news.

- FiQA SA – An aspect-based sentiment classification task based on financial news and headlines.

- Headline – A binary classification task on whether a headline on a financial entity contains certain information.

- NER – A financial named entity extraction task based on credit risk assessment section of SEC reports. Words in this task are annotated with PER, LOC, ORG, and MISC.

Because financial LLMs are instruction fine-tuned, the authors evaluate models in a 5-shot setting for each task for the sake of robustness. On average, the FinPythia 6.9B outperforms Pythia 6.9B by 10% across four tasks, which demonstrates the efficacy of domain-specific continual pre-training. For the 1B model, the improvement is less profound, but performance still improves 2% on average.

The following figure illustrates the performance difference before and after DACP on both models.

The following figure showcases two qualitative examples generated by Pythia 6.9B and FinPythia 6.9B. For two finance-related questions regarding an investor manager and a financial term, Pythia 6.9B doesn’t understand the term or recognize the name, whereas FinPythia 6.9B generates detailed answers correctly. The qualitative examples demonstrate that continual pre-training enables the LLMs to acquire domain knowledge during the process.

The following table compares various efficient continual pre-training approaches. ETA-DACP-ppl is ETA-DACP based on perplexity (novelty), and ETA-DACP-ent is based on entropy (diversity). ETS-DACP-com is similar to DACP with data selection by averaging all three metrics. The following are a few takeaways from the results:

- Data selection methods are efficient – They surpass standard continual pre-training with just 10% of training data. Efficient continual pre-training including Task-Similar DACP (ETS-DACP), Task-Agnostic DACP based on entropy (ESA-DACP-ent) and Task-Similar DACP based on all three metrics (ETS-DACP-com) outperforms standard DACP on average despite the fact that they are trained on only 10% of financial corpus.

- Task-aware data selection works the best in line with small language models research – ETS-DACP records the best average performance among all the methods and, based on all three metrics, records the second-best task performance. This suggests that using unlabeled task data is still an effective approach to boost task performance in the case of LLMs.

- Task-agnostic data selection is close second – ESA-DACP-ent follows the performance of the task-aware data selection approach, implying that we could still boost task performance by actively selecting high-quality samples not tied to specific tasks. This paves the way to build financial LLMs for the whole domain while achieving superior task performance.

One critical question regarding continual pre-training is whether it negatively affects the performance on non-domain tasks. The authors also evaluate the continually pre-trained model on four widely used generic tasks: ARC, MMLU, TruthQA, and HellaSwag, which measure the ability of question answering, reasoning, and completion. The authors find that continual pre-training does not adversely affect non-domain performance. For more details, refer to Efficient Continual Pre-training for Building Domain Specific Large Language Models.

Conclusion

This post offered insights into data collection and continual pre-training strategies for training LLMs for financial domain. You can start training your own LLMs for financial tasks using Amazon SageMaker Training or Amazon Bedrock today.

About the Authors

Yong Xie is an applied scientist in Amazon FinTech. He focuses on developing large language models and Generative AI applications for finance.

Yong Xie is an applied scientist in Amazon FinTech. He focuses on developing large language models and Generative AI applications for finance.

Karan Aggarwal is a Senior Applied Scientist with Amazon FinTech with a focus on Generative AI for finance use-cases. Karan has extensive experience in time-series analysis and NLP, with particular interest in learning from limited labeled data

Karan Aggarwal is a Senior Applied Scientist with Amazon FinTech with a focus on Generative AI for finance use-cases. Karan has extensive experience in time-series analysis and NLP, with particular interest in learning from limited labeled data

Aitzaz Ahmad is an Applied Science Manager at Amazon where he leads a team of scientists building various applications of Machine Learning and Generative AI in Finance. His research interests are in NLP, Generative AI, and LLM Agents. He received his PhD in Electrical Engineering from Texas A&M University.

Aitzaz Ahmad is an Applied Science Manager at Amazon where he leads a team of scientists building various applications of Machine Learning and Generative AI in Finance. His research interests are in NLP, Generative AI, and LLM Agents. He received his PhD in Electrical Engineering from Texas A&M University.

Qingwei Li is a Machine Learning Specialist at Amazon Web Services. He received his Ph.D. in Operations Research after he broke his advisor’s research grant account and failed to deliver the Nobel Prize he promised. Currently he helps customers in financial service build machine learning solutions on AWS.

Qingwei Li is a Machine Learning Specialist at Amazon Web Services. He received his Ph.D. in Operations Research after he broke his advisor’s research grant account and failed to deliver the Nobel Prize he promised. Currently he helps customers in financial service build machine learning solutions on AWS.

Raghvender Arni leads the Customer Acceleration Team (CAT) within AWS Industries. The CAT is a global cross-functional team of customer facing cloud architects, software engineers, data scientists, and AI/ML experts and designers that drives innovation via advanced prototyping, and drives cloud operational excellence via specialized technical expertise.

Raghvender Arni leads the Customer Acceleration Team (CAT) within AWS Industries. The CAT is a global cross-functional team of customer facing cloud architects, software engineers, data scientists, and AI/ML experts and designers that drives innovation via advanced prototyping, and drives cloud operational excellence via specialized technical expertise.

Author: Yong Xie