Expedite the Amazon Lex chatbot development lifecycle with Test Workbench

Amazon Lex is excited to announce Test Workbench, a new bot testing solution that provides tools to simplify and automate the bot testing process. During bot development, testing is the phase where developers check whether a bot meets the specific requirements, needs, and expectations by identifying errors, defects, or bugs in the system before scaling. Testing helps validate bot performance on several fronts, such as conversational flow (understanding user queries and responding accurately), intent overlap handling, and consistency across modalities. However, testing is often manual, error-prone, and non-standardized. Test Workbench standardizes automated test management by allowing chatbot development teams to generate, maintain, and execute test sets with a consistent methodology and avoid custom scripting and ad hoc integrations. In this post, you will learn how Test Workbench streamlines automated testing of a bot’s voice and text modalities and provides accuracy and performance measures for parameters such as audio transcription, intent recognition, and slot resolution for both single-utterance inputs and multi-turn conversations. This lets you quickly identify areas where the bot needs improvement, maintain a consistent baseline for measuring accuracy over time, and detect any accuracy regressions caused by bot updates.

Amazon Lex is a fully managed service for building conversational voice and text interfaces. Amazon Lex helps you build and deploy chatbots and virtual assistants on websites, contact center services, and messaging channels. Amazon Lex bots help increase interactive voice response (IVR) productivity, automate simple tasks, and drive operational efficiencies across the organization. Test Workbench for Amazon Lex standardizes and simplifies the bot testing lifecycle, which is critical to improving bot design.

Features of Test Workbench

Test Workbench for Amazon Lex includes the following features:

- Generate test datasets automatically from a bot’s conversation logs

- Upload manually built test set baselines

- Perform end-to-end testing of single input or multi-turn conversations

- Test both audio and text modalities of a bot

- Review aggregated and drill-down metrics for bot dimensions:

  - Speech transcription

  - Intent recognition

  - Slot resolution (including multi-valued slots or composite slots)

  - Context tags

  - Session attributes

  - Request attributes

  - Runtime hints

  - Time delay in seconds

Prerequisites

To test this feature, you should have the following:

- An AWS account with administrator access

- A sample retail bot imported via the Amazon Lex console (for more information, refer to Importing a bot)

- A test set source, either from:

  - Conversation logs enabled for the bot to store bot interactions, or

  - A sample retail test set that can be imported following the instructions provided in this post

In addition, you should have knowledge and understanding of the following services and features:

Create a test set

To create your test set, complete the following steps:

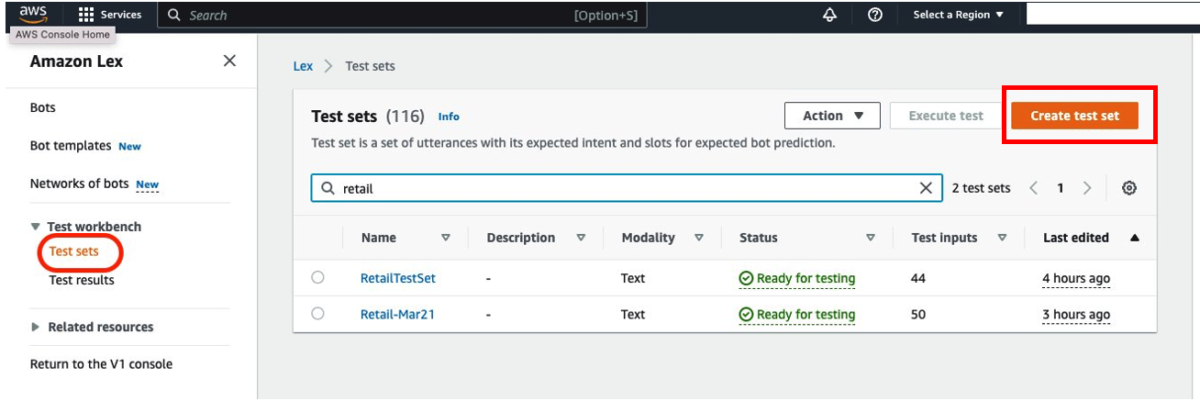

- On the Amazon Lex console, under Test workbench in the navigation pane, choose Test sets.

You can review a list of existing test sets, including basic information such as name, description, number of test inputs, modality, and status. In the following steps, you can choose between generating a test set from the conversation logs associated with the bot or uploading an existing manually built test set in a CSV file format.

- Choose Create test set.

- Generating test sets from conversation logs allows you to do the following:

  - Include real multi-turn conversations from the bot’s logs in CloudWatch

  - Include audio logs and conduct tests that account for real speech nuances, background noises, and accents

  - Speed up the creation of test sets

- Uploading a manually built test set allows you to do the following:

  - Test new bots for which there is no production data

  - Perform regression tests on existing bots for any new or modified intents, slots, and conversation flows

  - Test carefully crafted and detailed scenarios that specify session attributes and request attributes

To generate a test set, complete the following steps. To upload a manually built test set instead, skip the next four steps.

- Choose Generate a baseline test set.

- Choose your options for Bot name, Bot alias, and Language.

- For Time range, set a time range for the logs.

- For Existing IAM role, choose a role.

Make sure the IAM role grants permission to retrieve information from the conversation logs. Refer to Creating IAM roles to create an IAM role with the appropriate policy.

- If you prefer to use a manually created test set, select Upload a file to this test set.

- For Upload a file to this test set, choose from the following options:

  - Select Upload from S3 bucket to upload a CSV file from an Amazon Simple Storage Service (Amazon S3) bucket.

  - Select Upload a file to this test set to upload a CSV file from your computer.

You can use the sample test set provided in this post. For more information about templates, choose the CSV Template link on the page.

- For Modality, select the modality of your test set, either Text or Audio.

Test Workbench provides testing support for audio and text input formats.

- For S3 location, enter the S3 bucket location where the results will be stored.

- Optionally, choose an AWS Key Management Service (AWS KMS) key to encrypt output transcripts.

- Choose Create.

Your newly created test set will be listed on the Test sets page with one of the following statuses:

- Ready for annotation – For test sets generated from Amazon Lex bot conversation logs, the annotation step serves as a manual gating mechanism to ensure quality test inputs. By annotating values for expected intents and expected slots for each test line item, you indicate the “ground truth” for that line. The test results from the bot run are collected and compared against the ground truth to mark each test result as pass or fail. These line-level comparisons are then aggregated into overall measures.

- Ready for testing – This indicates that the test set is ready to be executed against an Amazon Lex bot.

- Validation error – Uploaded test files are checked for errors such as exceeding the maximum supported length, invalid characters in intent names, or invalid Amazon S3 links to audio files. If the test set is in the Validation error state, download the file showing the validation details to see test input issues or errors on a line-by-line basis. After you address them, upload the corrected test set CSV file to the test set.
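The kinds of checks described for the Validation error state can be illustrated with a small local pre-check you might run before uploading a CSV. This is a hypothetical sketch: the `InputLine` record, the 1,024-character input limit, the intent-name pattern, and the `s3://` link check are all assumptions for illustration, not Test Workbench's actual validation rules or CSV template.

```python
import re
from dataclasses import dataclass

# Assumed limit for illustration; the service's actual rules may differ.
MAX_INPUT_LENGTH = 1024
# Assumed Lex-style intent name rule: letters/digits, optionally separated by _ or -.
INTENT_NAME_PATTERN = re.compile(r"^([0-9a-zA-Z][_-]?){1,100}$")
# Very loose check that an audio input points at an object in an S3 bucket.
S3_URI_PATTERN = re.compile(r"^s3://[^/\s]+/\S+$")


@dataclass
class InputLine:
    line_number: int
    user_input: str         # text utterance, or S3 URI of an audio file
    expected_intent: str
    modality: str = "Text"  # "Text" or "Audio"


def validate(lines):
    """Return (line_number, message) pairs, one per problem found."""
    errors = []
    for line in lines:
        if line.modality == "Audio":
            if not S3_URI_PATTERN.match(line.user_input):
                errors.append((line.line_number, "invalid S3 link for audio file"))
        elif len(line.user_input) > MAX_INPUT_LENGTH:
            errors.append((line.line_number, "input exceeds maximum supported length"))
        if not INTENT_NAME_PATTERN.match(line.expected_intent):
            errors.append((line.line_number, "invalid characters in intent name"))
    return errors
```

Reporting errors per line number mirrors the line-by-line view the console offers, so fixes can be made in the CSV before re-uploading.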

Executing a test set

A test set is decoupled from a bot. The same test set can be executed against a different bot or bot alias in the future as your business use case evolves. To report performance metrics of a bot against the baseline test data, complete the following steps:

- Import the sample bot definition and build the bot (refer to Importing a bot for guidance).

- On the Amazon Lex console, choose Test sets in the navigation pane.

- Choose your validated test set.

Here you can review basic information about the test set and the imported test data.

- Choose Execute test.

- Choose the appropriate options for Bot name, Bot alias, and Language.

- For Test type, select Audio or Text.

- For Endpoint selection, select either Streaming or Non-streaming.

- Choose Validate discrepancy to validate your test dataset.

Before executing a test set, you can validate test coverage, including identifying intents and slots present in the test set but not in the bot. This early warning helps testers anticipate failures that would otherwise be unexpected. If discrepancies between your test dataset and your bot are detected, a View details button appears on the Execute test page.

Choose View details to list the intents and slots found in the test dataset but not in the bot alias.

- After you validate the discrepancies, choose Execute to run the test.
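The discrepancy validation is essentially a set comparison between what the test set references and what the bot alias defines. A minimal sketch, assuming you have already extracted intent and slot names from both sides into dictionaries; the `find_discrepancies` helper and its input shape are hypothetical and not part of any Amazon Lex API.

```python
from typing import Dict, Set, Tuple


def find_discrepancies(
    test_set: Dict[str, Set[str]], bot: Dict[str, Set[str]]
) -> Tuple[Set[str], Dict[str, Set[str]]]:
    """Compare intent -> slot-name mappings from a test set and a bot alias.

    Returns the intents referenced by the test set but missing from the bot,
    and, for intents present in both, the slots missing from the bot.
    """
    missing_intents = set(test_set) - set(bot)
    missing_slots = {}
    for intent, slots in test_set.items():
        if intent in bot:
            absent = slots - bot[intent]
            if absent:
                missing_slots[intent] = absent
    return missing_intents, missing_slots
```

Any intent in the first result, or slot in the second, corresponds to test lines that would likely fail against that alias, which is why surfacing them before execution is useful.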

Review results

The performance measures generated after executing a test set help you identify areas of bot design that need improvement and are useful for expediting bot development and delivery to support your customers. Test Workbench provides insights on intent classification and slot resolution at both the end-to-end conversation level and the single-line input level. Completed test runs are stored with timestamps in your S3 bucket and can be used for future comparative reviews.
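Before results can be reviewed, the test execution has to finish. If you prefer to start and monitor runs from code rather than the console, a sketch like the following could help. It assumes the boto3 `lexv2-models` client and its `start_test_execution` and `describe_test_execution` operations; verify parameter names and status values against the current Amazon Lex V2 API reference before relying on it. The `run_test_set` helper, its polling interval, and its timeout are illustrative choices, not part of the service.

```python
import time


def run_test_set(client, test_set_id, bot_id, bot_alias_id, locale_id,
                 modality="Text", api_mode="NonStreaming",
                 poll_seconds=30, max_polls=120):
    """Start a test execution and poll until it reaches a terminal status.

    `client` is assumed to be a boto3 "lexv2-models" client.
    Returns (test_execution_id, final_status).
    """
    response = client.start_test_execution(
        testSetId=test_set_id,
        target={
            "botAliasTarget": {
                "botId": bot_id,
                "botAliasId": bot_alias_id,
                "localeId": locale_id,
            }
        },
        apiMode=api_mode,                # assumed values: "Streaming" or "NonStreaming"
        testExecutionModality=modality,  # assumed values: "Text" or "Audio"
    )
    execution_id = response["testExecutionId"]
    for _ in range(max_polls):
        status = client.describe_test_execution(
            testExecutionId=execution_id
        )["testExecutionStatus"]
        if status in ("Completed", "Failed", "Stopped"):
            return execution_id, status
        time.sleep(poll_seconds)
    raise TimeoutError(f"test execution {execution_id} is still running")
```

For example, calling `run_test_set(boto3.client("lexv2-models"), ..., modality="Audio", api_mode="Streaming")` would correspond to choosing Audio and Streaming on the Execute test page. The client is passed in rather than created inside the function so the polling logic can be exercised with a stub.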

- On the Amazon Lex console, choose Test results in the navigation pane.

- Choose the test result ID for the results you want to review.

On the next page, the test results will include a breakdown of results organized in four main tabs: Overall results, Conversation results, Intent and slot results, and Detailed results.

Overall results

The Overall results tab contains three main sections:

- Test set input breakdown — A chart showing the total number of end-to-end conversations and single input utterances in the test set.

- Single input breakdown — A chart showing the number of passed or failed single inputs.

- Conversation breakdown — A chart showing the number of passed or failed multi-turn inputs.

For test sets run in audio modality, speech transcription charts are provided to show the number of passed or failed speech transcriptions on both single input and conversation types. In audio modality, a single input or multi-turn conversation could pass the speech transcription test, yet fail the overall end-to-end test. This can be caused, for instance, by a slot resolution or an intent recognition issue.
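The relationship between line-level results and these overall charts can be sketched with a simplified model. The model is an assumption for illustration: a line passes end to end only if its transcription (audio only), intent, and slots all match the annotated ground truth, and a conversation passes only if every turn passes. The exact-match transcription check in particular is a stand-in; the service's own scoring may differ.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class LineResult:
    conversation_id: Optional[str]  # None for a single-input line
    expected_intent: str
    actual_intent: str
    expected_slots: Dict[str, str] = field(default_factory=dict)
    actual_slots: Dict[str, str] = field(default_factory=dict)
    expected_transcript: Optional[str] = None  # only set for audio lines
    actual_transcript: Optional[str] = None

    def transcription_passed(self) -> bool:
        # Simplification: exact match after lowercasing and trimming whitespace.
        if self.expected_transcript is None:
            return True
        actual = (self.actual_transcript or "").strip().lower()
        return self.expected_transcript.strip().lower() == actual

    def end_to_end_passed(self) -> bool:
        return (
            self.transcription_passed()
            and self.expected_intent == self.actual_intent
            and self.expected_slots == self.actual_slots
        )


def breakdown(results: List[LineResult]) -> Tuple[Dict[str, int], Dict[str, int]]:
    """Pass/fail counts for single inputs and for whole conversations."""
    single = {"passed": 0, "failed": 0}
    conversation_ok: Dict[str, bool] = {}
    for r in results:
        if r.conversation_id is None:
            single["passed" if r.end_to_end_passed() else "failed"] += 1
        else:
            # A conversation fails as soon as any of its turns fails.
            previous = conversation_ok.get(r.conversation_id, True)
            conversation_ok[r.conversation_id] = previous and r.end_to_end_passed()
    passed = sum(conversation_ok.values())
    return single, {"passed": passed, "failed": len(conversation_ok) - passed}
```

A turn whose transcript matches but whose slot value differs (for example, "red" transcribed correctly but resolved as "read") passes transcription yet fails end to end, which is the situation described above.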

Conversation results

Test Workbench helps you drill down into conversation failures that can be attributed to specific intents or slots. The Conversation results tab is organized into three main areas, covering all intents and slots used in the test set:

- Conversation pass rates — A table used to visualize which intents and slots are responsible for possible conversation failures.

- Conversation intent failure metrics — A bar graph showing the top five worst performing intents in the test set, if any.

- Conversation slot failure metrics — A bar graph showing the top five worst performing slots in the test set, if any.
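One way to think about attributing conversation failures is to count, for each intent (or slot), the conversations in which a turn involving it failed, and then keep the top five. This is a hypothetical sketch of that attribution, not Test Workbench's documented method; the `(conversation_id, name, passed)` tuple format is assumed.

```python
from typing import Iterable, List, Tuple


def worst_performers(
    turns: Iterable[Tuple[str, str, bool]], top_n: int = 5
) -> List[Tuple[str, int]]:
    """Rank names (intents or slots) by the number of conversations they failed in.

    Each turn is (conversation_id, name, passed). A conversation is counted at
    most once per name, however many of its turns failed for that name.
    """
    failed = {(conv, name) for conv, name, passed in turns if not passed}
    counts = {}
    for _, name in failed:
        counts[name] = counts.get(name, 0) + 1
    # Highest failure count first; ties broken alphabetically for stable output.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:top_n]
```

Passing slot names instead of intent names in the second tuple position yields the equivalent slot ranking. Names with no failures never appear, matching the "if any" wording above.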

Intent and slot results

The Intent and slot results tab provides drill-down metrics for bot dimensions such as intent recognition and slot resolution.

- Intent recognition metrics — A table showing the intent recognition success rate.

- Slot resolution metrics — A table showing the slot resolution success rate, by intent.
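Per-intent success rates like those in these tables can be computed from line-level rows. The following sketch assumes each row carries the expected and actual intent plus expected and actual slot values as dictionaries; the field names and the choice to score each expected slot individually are assumptions, not the service's definition of these metrics.

```python
from collections import defaultdict
from typing import Dict, List, Optional


def success_rates(rows: List[dict]) -> Dict[str, Dict[str, Optional[float]]]:
    """Per expected intent: intent recognition rate and slot resolution rate.

    Each row is assumed to hold expected_intent, actual_intent, and
    expected_slots / actual_slots dictionaries of slot name -> value.
    """
    stats = defaultdict(lambda: {"lines": 0, "intent_ok": 0, "slots": 0, "slots_ok": 0})
    for row in rows:
        s = stats[row["expected_intent"]]
        s["lines"] += 1
        s["intent_ok"] += row["expected_intent"] == row["actual_intent"]
        for slot, value in row["expected_slots"].items():
            s["slots"] += 1
            s["slots_ok"] += row["actual_slots"].get(slot) == value
    return {
        intent: {
            "intent_rate": s["intent_ok"] / s["lines"],
            # None when no slots were expected for this intent.
            "slot_rate": s["slots_ok"] / s["slots"] if s["slots"] else None,
        }
        for intent, s in stats.items()
    }
```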

Detailed results

You can access a detailed report of the executed test run on the Detailed results tab. A table is displayed to show the actual transcription, output intent, and slot values in a test set. The report can be downloaded as a CSV for further analysis.

The line-level output provides insights to help improve the bot design and boost accuracy. For instance, misrecognized or missed speech inputs, such as branded words, can be added to the bot’s custom vocabulary or as sample utterances under an intent.
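To find candidates for custom vocabulary, you could compare expected and actual transcriptions from the downloaded detailed results and collect words that were repeatedly missed. This is a rough heuristic sketch, assuming you have paired (expected, actual) transcript strings; it is not how Test Workbench or Amazon Lex scores transcription, and the `min_count` threshold is arbitrary.

```python
import re
from collections import Counter
from typing import Iterable, List, Set, Tuple


def _words(text: str) -> Set[str]:
    # Lowercased alphanumeric tokens; apostrophes kept so "don't" stays one word.
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def missed_words(pairs: Iterable[Tuple[str, str]], min_count: int = 2) -> List[str]:
    """Words present in the expected transcript but absent from the actual one,
    kept if they were missed in at least `min_count` lines, most frequent first."""
    counts = Counter()
    for expected, actual in pairs:
        counts.update(_words(expected) - _words(actual))
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [word for word, count in ranked if count >= min_count]
```

Because a set difference ignores word order and repetition, the output is a shortlist to review by hand rather than a list to add to the bot automatically.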

To further improve conversation design, you can refer to this post, which outlines best practices for using ML to create a bot that will delight your customers by accurately understanding them.

Conclusion

In this post, we presented Test Workbench for Amazon Lex, a native capability that standardizes automated chatbot testing and helps developers and conversation designers streamline and iterate quickly through bot design and development.

We look forward to hearing how you use this new functionality of Amazon Lex and welcome feedback! For any questions, bugs, or feature requests, please reach us through AWS re:Post for Amazon Lex or your AWS Support contacts.

To learn more, see Amazon Lex FAQs and the Amazon Lex V2 Developer Guide.

About the authors

Sandeep Srinivasan is a Product Manager on the Amazon Lex team. As a keen observer of human behavior, he is passionate about customer experience. He spends his waking hours at the intersection of people, technology, and the future.

Grazia Russo Lassner is a Senior Consultant with the AWS Professional Services Natural Language AI team. She specializes in designing and developing conversational AI solutions using AWS technologies for customers in various industries. Outside of work, she enjoys beach weekends, reading the latest fiction books, and family.

Author: Grazia Russo Lassner