Fast and cost-effective LLaMA 2 fine-tuning with AWS Trainium

Large language models (LLMs) have captured the imagination and attention of developers, scientists, technologists, entrepreneurs, and executives across several industries. These models can be used for question answering, summarization, translation, and more in applications such as conversational agents for customer support, content creation for marketing, and coding assistants.

Recently, Meta released Llama 2 for both researchers and commercial entities, adding to the list of other LLMs, including MosaicML MPT and Falcon. In this post, we walk through how to fine-tune Llama 2 on AWS Trainium, a purpose-built accelerator for LLM training, to reduce training times and costs. We review the fine-tuning scripts provided by the AWS Neuron SDK (using NeMo Megatron-LM), the various configurations we used, and the throughput results we saw.

About the Llama 2 model

Similar to the original Llama model and other models such as GPT, Llama 2 uses a decoder-only Transformer architecture. It comes in three sizes: 7 billion, 13 billion, and 70 billion parameters. Compared to Llama 1, Llama 2 doubles the context length from 2,048 to 4,096 tokens and uses grouped-query attention (in the 70B model only). Llama 2 pre-trained models are trained on 2 trillion tokens, and the fine-tuned models have been trained on over 1 million human annotations.
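To make grouped-query attention concrete, the following minimal Python sketch shows how several query heads share one key/value head. The head counts used here (64 query heads and 8 key/value heads for the 70B model) are taken from the published Llama 2 model configuration rather than from this post, and the function names are hypothetical.

```python
def kv_head_for_query_head(q_head: int, n_heads: int, n_kv_heads: int) -> int:
    """Return the index of the key/value head that a given query head reads from."""
    if n_heads % n_kv_heads != 0:
        raise ValueError("n_heads must be a multiple of n_kv_heads")
    if not 0 <= q_head < n_heads:
        raise IndexError("q_head out of range")
    group_size = n_heads // n_kv_heads  # query heads that share one KV head
    return q_head // group_size


def kv_cache_reduction(n_heads: int, n_kv_heads: int) -> float:
    """Factor by which the key/value cache shrinks versus one KV head per query head."""
    return n_heads / n_kv_heads


# Assumed values for the 70B model (from the public model config, not this post).
N_HEADS = 64
N_KV_HEADS = 8

mapping = [kv_head_for_query_head(h, N_HEADS, N_KV_HEADS) for h in range(N_HEADS)]
print("KV head used by the first 10 query heads:", mapping[:10])
print("KV cache reduction factor:", kv_cache_reduction(N_HEADS, N_KV_HEADS))
```

Setting `n_kv_heads` equal to `n_heads` recovers standard multi-head attention, which is what the 7B and 13B models use.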

Distributed training of Llama 2

To accommodate Llama 2 with sequence lengths of 2,000 and 4,000, we implemented the training script using NeMo Megatron for Trainium, which supports data parallelism (DP), tensor parallelism (TP), and pipeline parallelism (PP). Specifically, we added new implementations of features such as untied word embeddings, rotary embeddings, RMSNorm, and SwiGLU activation, and reused the generic GPT Neuron Megatron-LM script to support Llama 2 training.
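The three parallelism degrees are tied together by the number of workers: each model replica occupies TP × PP workers, and the remaining factor is the data-parallel degree. The following sketch illustrates that arithmetic only. It assumes 32 workers per instance (the NeuronCore count of a trn1.32xlarge, which this post does not state) and uses TP = 8 and PP = 1, the values listed in the experiment table later in this post. The function name is hypothetical.

```python
def data_parallel_degree(world_size: int, tensor_parallel: int, pipeline_parallel: int) -> int:
    """Derive the DP degree from the total worker count and the TP and PP degrees."""
    model_parallel = tensor_parallel * pipeline_parallel  # workers per model replica
    if world_size % model_parallel != 0:
        raise ValueError("world_size must be divisible by tensor_parallel * pipeline_parallel")
    return world_size // model_parallel


# Assumption: one instance exposes 32 workers (NeuronCores on a trn1.32xlarge).
WORKERS_PER_INSTANCE = 32

dp = data_parallel_degree(WORKERS_PER_INSTANCE, tensor_parallel=8, pipeline_parallel=1)
print(f"TP=8, PP=1 on {WORKERS_PER_INSTANCE} workers gives DP={dp}")
```

Under these assumptions, a single instance yields DP = 4, which agrees with the single-instance row of the table.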

Our high-level training procedure is as follows. For the training environment, we use a multi-instance cluster managed by SLURM for distributed training and scheduling under the NeMo framework.

First, download the Llama 2 model and training datasets and preprocess them using the Llama 2 tokenizer. For example, to use the RedPajama dataset, use the following command:

For detailed guidance on downloading the model and on the arguments of the preprocessing script, refer to Download LlamaV2 dataset and tokenizer.

Next, compile the model:

After the model is compiled, launch the training job with the following script that is already optimized with the best configuration and hyperparameters for Llama 2 (included in the example code):

Lastly, we monitor TensorBoard to keep track of training progress:

For the complete example code and scripts we mentioned, refer to the Llama 7B tutorial and NeMo code in the Neuron SDK to walk through more detailed steps.

Fine-tuning experiments

We fine-tuned the 7B model on the OSCAR (Open Super-large Crawled ALMAnaCH coRpus) and QNLI (Question-answering NLI) datasets in a Neuron 2.12 environment (PyTorch). For each sequence length (2,000 and 4,000), we optimized some configurations, such as batchsize and gradient_accumulation, for training efficiency. As a fine-tuning strategy, we adopted full fine-tuning of all parameters (about 500 steps), which can be extended to pre-training with more steps and larger datasets (for example, 1T RedPajama). Sequence parallelism can also be enabled to allow NeMo Megatron to successfully fine-tune models with the larger sequence length of 4,000. The following table shows the configuration and throughput results of the Llama 7B fine-tuning experiment. The throughput scales almost linearly as the number of instances increases up to 4.

| Distributed Library | Datasets | Sequence Length | Number of Instances | Tensor Parallel | Data Parallel | Pipeline Parallel | Global Batch Size | Throughput (seq/s) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Neuron NeMo Megatron | OSCAR | 4096 | 1 | 8 | 4 | 1 | 256 | 3.7 |
| Neuron NeMo Megatron | OSCAR | 4096 | 2 | 8 | 4 | 1 | 256 | 7.4 |
| Neuron NeMo Megatron | OSCAR | 4096 | 4 | 8 | 4 | 1 | 256 | 14.6 |
| Neuron NeMo Megatron | QNLI | 4096 | 4 | 8 | 4 | 1 | 256 | 14.1 |
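The near-linear scaling claim can be checked directly from the OSCAR rows of the table. The sketch below uses only the reported throughput figures and the 4096 sequence length. The helper names are hypothetical, and "scaling efficiency" is defined here as measured throughput divided by the ideal of N times the single-instance throughput.

```python
SEQ_LEN = 4096

# Reported OSCAR throughput in sequences per second, keyed by number of instances.
oscar_throughput = {1: 3.7, 2: 7.4, 4: 14.6}


def scaling_efficiency(throughput_by_instances: dict, n_instances: int) -> float:
    """Measured throughput relative to perfect linear scaling from one instance."""
    baseline = throughput_by_instances[1]
    return throughput_by_instances[n_instances] / (n_instances * baseline)


def tokens_per_second(seq_per_second: float, seq_len: int) -> float:
    """Convert sequences per second to tokens per second."""
    return seq_per_second * seq_len


for n in sorted(oscar_throughput):
    seq_s = oscar_throughput[n]
    print(
        f"{n} instance(s): {seq_s} seq/s, "
        f"{tokens_per_second(seq_s, SEQ_LEN):,.0f} tokens/s, "
        f"efficiency {scaling_efficiency(oscar_throughput, n):.1%}"
    )
```

With the reported numbers, four instances reach 14.6 / (4 × 3.7), or roughly 98.6% of ideal linear scaling.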

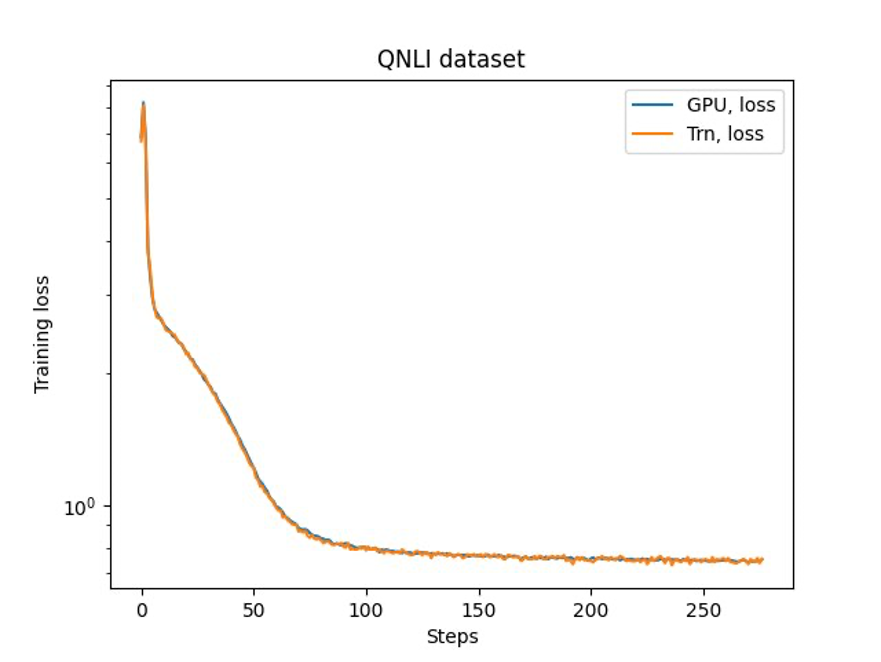

The last step is to verify accuracy against the base model. We implemented a reference script for GPU experiments and confirmed that the training curves for GPU and Trainium match, as shown in the figure. The figure illustrates loss curves over the number of training steps on the QNLI dataset. Mixed precision was adopted for GPU (blue), and bf16 with default stochastic rounding for Trainium (orange).
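To illustrate why stochastic rounding helps bf16 training track a higher-precision baseline, the following sketch emulates it in pure Python. It is an illustration of the general technique, not the Neuron hardware or SDK implementation: a float32 value is rounded to bf16 (the upper 16 bits of the float32 encoding) by adding a random 16-bit integer before truncating, so the chance of rounding away from zero equals the discarded fraction. All function names are hypothetical, and special values (NaN, infinity, values near the float32 maximum) are not handled.

```python
import random
import struct


def _float_to_bits(x: float) -> int:
    """Reinterpret a value as its 32-bit float32 bit pattern."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def _bits_to_float(bits: int) -> float:
    """Reinterpret a 32-bit pattern as a float32 value."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def bf16_round_nearest_even(x: float) -> float:
    """Deterministic round-to-nearest-even to bf16, for comparison."""
    bits = _float_to_bits(x)
    lsb = (bits >> 16) & 1  # lowest bit that survives truncation
    bits = (bits + 0x7FFF + lsb) & 0xFFFFFFFF
    return _bits_to_float(bits & 0xFFFF0000)


def bf16_stochastic_round(x: float, rng: random.Random) -> float:
    """Round to bf16, moving away from zero with probability equal to the discarded fraction."""
    bits = _float_to_bits(x)
    bits = (bits + rng.getrandbits(16)) & 0xFFFFFFFF  # random carry into the kept bits
    return _bits_to_float(bits & 0xFFFF0000)


# 1 + 2**-10 lies between the neighboring bf16 values 1.0 and 1.0078125.
x = 1.0 + 2**-10
rng = random.Random(0)
samples = [bf16_stochastic_round(x, rng) for _ in range(20000)]
mean = sum(samples) / len(samples)

print("exact value:           ", x)
print("round-to-nearest-even: ", bf16_round_nearest_even(x))
print("stochastic mean:       ", mean)
```

Round-to-nearest always returns 1.0 for this input, so a small update of this size would be lost every time. Stochastic rounding is unbiased on average, which is consistent with the matching loss curves reported above.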

Conclusion

In this post, we showed that Trainium delivers high-performance, cost-effective fine-tuning of Llama 2. For more resources on using Trainium for distributed pre-training and fine-tuning of your generative AI models using NeMo Megatron, refer to AWS Neuron Reference for NeMo Megatron.

About the Authors

Hao Zhou is a Research Scientist with Amazon SageMaker. Before that, he worked on developing machine learning methods for fraud detection for Amazon Fraud Detector. He is passionate about applying machine learning, optimization, and generative AI techniques to various real-world problems. He holds a PhD in Electrical Engineering from Northwestern University.

Karthick Gopalswamy is an Applied Scientist with AWS. Before AWS, he worked as a scientist at Uber and Walmart Labs with a major focus on mixed integer optimization. At Uber, he focused on optimizing the public transit network with on-demand SaaS products and shared rides. At Walmart Labs, he worked on pricing and packing optimizations. Karthick has a PhD in Industrial and Systems Engineering with a minor in Operations Research from North Carolina State University. His research focuses on models and methodologies that combine operations research and machine learning.

Xin Huang is a Senior Applied Scientist for Amazon SageMaker JumpStart and Amazon SageMaker built-in algorithms. He focuses on developing scalable machine learning algorithms. His research interests are in the area of natural language processing, explainable deep learning on tabular data, and robust analysis of non-parametric space-time clustering. He has published many papers in ACL, ICDM, KDD conferences, and Royal Statistical Society: Series A.

Youngsuk Park is a Sr. Applied Scientist at AWS Annapurna Labs, working on developing and training foundation models on AI accelerators. Prior to that, Dr. Park worked on R&D for Amazon Forecast in AWS AI Labs as a lead scientist. His research lies in the interplay between machine learning, foundational models, optimization, and reinforcement learning. He has published over 20 peer-reviewed papers in top venues, including ICLR, ICML, AISTATS, and KDD, and has organized workshops and presented tutorials in the areas of time series and LLM training. Before joining AWS, he obtained a PhD in Electrical Engineering from Stanford University.

Yida Wang is a principal scientist in the AWS AI team of Amazon. His research interest is in systems, high-performance computing, and big data analytics. He currently works on deep learning systems, with a focus on compiling and optimizing deep learning models for efficient training and inference, especially large-scale foundation models. The mission is to bridge the high-level models from various frameworks and low-level hardware platforms including CPUs, GPUs, and AI accelerators, so that different models can run in high performance on different devices.

Jun (Luke) Huan is a Principal Scientist at AWS AI Labs. Dr. Huan works on AI and Data Science. He has published more than 160 peer-reviewed papers in leading conferences and journals and has graduated 11 PhD students. He was a recipient of the NSF Faculty Early Career Development Award in 2009. Before joining AWS, he worked at Baidu Research as a distinguished scientist and the head of Baidu Big Data Laboratory. He founded StylingAI Inc., an AI start-up, and worked as the CEO and Chief Scientist in 2019–2021. Before joining the industry, he was the Charles E. and Mary Jane Spahr Professor in the EECS Department at the University of Kansas. From 2015–2018, he worked as a program director at the US NSF in charge of its big data program.

Shruti Koparkar is a Senior Product Marketing Manager at AWS. She helps customers explore, evaluate, and adopt Amazon EC2 accelerated computing infrastructure for their machine learning needs.

Author: Hao Zhou