Federated learning on AWS using FedML, Amazon EKS, and Amazon SageMaker

This post is co-written with Chaoyang He, Al Nevarez and Salman Avestimehr from FedML.

Many organizations are implementing machine learning (ML) to enhance their business decision-making through automation and the use of large distributed datasets. With increased access to data, ML has the potential to provide unparalleled business insights and opportunities. However, the sharing of raw, non-sanitized sensitive information across different locations poses significant security and privacy risks, especially in regulated industries such as healthcare.

Federated learning (FL) addresses this issue. It is a decentralized and collaborative ML training technique that offers data privacy while maintaining accuracy and fidelity. Unlike traditional ML training, FL training occurs within an isolated client location using an independent secure session. The client shares only its output model parameters with a centralized server, known as the training coordinator or aggregation server, and not the actual data used to train the model. This approach alleviates many data privacy concerns while enabling effective collaboration on model training.
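As a rough illustration of what the aggregation server does with the parameters it receives, the following minimal sketch computes a sample-weighted average of client parameters, in the style of the widely used FedAvg algorithm. This is an assumption for illustration: the post does not state which aggregation rule is used, and the function name `federated_average` and the parameter layout are hypothetical.

```python
from typing import Dict, List, Tuple

# A model is represented here as named lists of floats (hypothetical layout).
Params = Dict[str, List[float]]


def federated_average(updates: List[Tuple[Params, int]]) -> Params:
    """Average client parameters, weighting each client by its sample count.

    `updates` holds (parameters, number_of_local_samples) pairs. Only
    parameters reach this function; the raw training data never does.
    """
    total_samples = sum(count for _, count in updates)
    if total_samples <= 0:
        raise ValueError("at least one client must report samples")

    first_params, _ = updates[0]
    averaged = {name: [0.0] * len(values) for name, values in first_params.items()}

    for params, count in updates:
        weight = count / total_samples
        for name, values in params.items():
            for i, value in enumerate(values):
                averaged[name][i] += weight * value
    return averaged


# Two hypothetical clients with different amounts of local data.
client_a = ({"w": [1.0, 2.0], "b": [0.0]}, 100)
client_b = ({"w": [3.0, 4.0], "b": [1.0]}, 300)
global_params = federated_average([client_a, client_b])
```

Weighting by sample count lets a client with more local data influence the shared model proportionally, which is one common design choice among several.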

Although FL is a step towards achieving better data privacy and security, it’s not a guaranteed solution. Insecure networks lacking access control and encryption can still expose sensitive information to attackers. Additionally, locally trained model parameters can expose private data if an attacker reconstructs that data through an inference attack. To mitigate these risks, the FL model uses personalized training algorithms and effective masking and parameterization before sharing information with the training coordinator. Strong network controls at local and centralized locations can further reduce inference and exfiltration risks.
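To make the idea of masking concrete, here is a minimal sketch of pairwise additive masking, one well-known way to hide individual updates from the coordinator while preserving their sum. It is not taken from the post or from FedML. It assumes updates have been quantized to integers, and it derives all masks from a single seed for brevity, whereas a real protocol would have each pair of clients agree on a shared secret. All names are hypothetical.

```python
import random
from typing import Dict, List


def pairwise_masks(client_ids: List[str], length: int, seed: int,
                   modulus: int) -> Dict[str, List[int]]:
    """Build one mask per client such that all masks sum to zero (mod modulus).

    For every pair (a, b), a shared random vector is added to a's mask and
    subtracted from b's mask, so the pair's contributions cancel in the sum.
    """
    masks = {cid: [0] * length for cid in client_ids}
    rng = random.Random(seed)  # assumption: stands in for per-pair key agreement
    ordered = sorted(client_ids)
    for index, a in enumerate(ordered):
        for b in ordered[index + 1:]:
            shared = [rng.randrange(modulus) for _ in range(length)]
            for k in range(length):
                masks[a][k] = (masks[a][k] + shared[k]) % modulus
                masks[b][k] = (masks[b][k] - shared[k]) % modulus
    return masks


def mask_update(update: List[int], mask: List[int], modulus: int) -> List[int]:
    """Hide a client's integer update behind its mask."""
    return [(u + m) % modulus for u, m in zip(update, mask)]


def aggregate(masked_updates: List[List[int]], modulus: int) -> List[int]:
    """Sum masked updates; the masks cancel, leaving the sum of true updates."""
    length = len(masked_updates[0])
    return [sum(vec[k] for vec in masked_updates) % modulus for k in range(length)]


MODULUS = 2**31 - 1
updates = {"a": [1, 2], "b": [10, 20], "c": [100, 200]}
masks = pairwise_masks(list(updates), length=2, seed=7, modulus=MODULUS)
masked = [mask_update(updates[cid], masks[cid], MODULUS) for cid in updates]
total = aggregate(masked, MODULUS)
```

The coordinator sees only the masked vectors, yet the aggregate equals the sum of the original updates as long as that sum stays below the modulus.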

In this post, we share an FL approach using FedML, Amazon Elastic Kubernetes Service (Amazon EKS), and Amazon SageMaker to improve patient outcomes while addressing data privacy and security concerns.

The need for federated learning in healthcare

Healthcare relies heavily on distributed data sources to make accurate predictions and assessments about patient care. Limiting the available data sources to protect privacy negatively affects result accuracy and, ultimately, the quality of patient care. Therefore, ML creates challenges for AWS customers who need to ensure privacy and security across distributed entities without compromising patient outcomes.

Healthcare organizations must navigate strict compliance regulations, such as the Health Insurance Portability and Accountability Act (HIPAA) in the United States, while implementing FL solutions. Ensuring data privacy, security, and compliance becomes even more critical in healthcare, requiring robust encryption, access controls, auditing mechanisms, and secure communication protocols. Additionally, healthcare datasets often contain complex and heterogeneous data types, making data standardization and interoperability a challenge in FL settings.

Use case overview

The use case in this post involves heart disease data held by different organizations. An ML model runs classification algorithms on this data to predict heart disease in a patient. Because the data is spread across organizations, we use federated learning to combine what each organization’s model learns.

The Heart Disease dataset from the University of California Irvine’s Machine Learning Repository is a widely used dataset for cardiovascular research and predictive modeling. It consists of 303 samples, each representing a patient, and contains a combination of clinical and demographic attributes, as well as the presence or absence of heart disease.

This multivariate dataset contains 76 attributes per patient, of which 14 are most commonly used to develop and evaluate ML algorithms that predict the presence of heart disease.
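For orientation, the sketch below lists the 14 column names as they commonly appear in the processed version of this dataset, reduces the `num` target to a binary presence label (a common convention, not necessarily the one used in the sample code), and splits rows across simulated organizations. The round-robin split and both function names are assumptions for illustration; the post does not describe how data is partitioned.

```python
from typing import List, Sequence

# The 14 commonly used attributes; "num" is the diagnosis target (0 = no disease).
COLUMNS = [
    "age", "sex", "cp", "trestbps", "chol", "fbs", "restecg",
    "thalach", "exang", "oldpeak", "slope", "ca", "thal", "num",
]


def binarize_label(num: int) -> int:
    """Map the 0-4 diagnosis value to 1 (disease present) or 0 (absent)."""
    return 1 if num > 0 else 0


def split_across_clients(rows: Sequence, n_clients: int) -> List[list]:
    """Assign rows to clients round-robin (hypothetical partitioning scheme)."""
    if n_clients < 1:
        raise ValueError("n_clients must be at least 1")
    return [list(rows[i::n_clients]) for i in range(n_clients)]


# Placeholder row indices standing in for the 303 patient records.
shards = split_across_clients(range(303), n_clients=3)
shard_sizes = [len(shard) for shard in shards]
```

In a real cross-organization setting, each silo already holds its own records, so a split like this is only useful for simulating federation from a single file.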

FedML framework

There is a wide selection of FL frameworks, but we decided to use the FedML framework for this use case because it is open source and supports several FL paradigms. FedML provides a popular open source library, MLOps platform, and application ecosystem for FL. These facilitate the development and deployment of FL solutions. It provides a comprehensive suite of tools, libraries, and algorithms that enable researchers and practitioners to implement and experiment with FL algorithms in a distributed environment. FedML addresses the challenges of data privacy, communication, and model aggregation in FL, offering a user-friendly interface and customizable components. With its focus on collaboration and knowledge sharing, FedML aims to accelerate the adoption of FL and drive innovation in this emerging field. The FedML framework is model agnostic, including recently added support for large language models (LLMs). For more information, refer to Releasing FedLLM: Build Your Own Large Language Models on Proprietary Data using the FedML Platform.

FedML Octopus

System hierarchy and heterogeneity are key challenges in real-life FL use cases, where different data silos may have different infrastructure with CPUs and GPUs. In such scenarios, you can use FedML Octopus.

FedML Octopus is an industrial-grade platform for cross-silo FL that supports cross-organization and cross-account training. Coupled with FedML MLOps, it enables developers or organizations to conduct open collaboration from anywhere at any scale in a secure manner. FedML Octopus runs a distributed training paradigm inside each data silo and uses synchronous or asynchronous training.

FedML MLOps

FedML MLOps enables local development of code that can later be deployed anywhere using FedML frameworks. Before initiating training, you must create a FedML account, as well as create and upload the server and client packages in FedML Octopus. For more details, refer to steps and Introducing FedML Octopus: scaling federated learning into production with simplified MLOps.

Solution overview

We deploy FedML into multiple EKS clusters integrated with SageMaker for experiment tracking. We use Amazon EKS Blueprints for Terraform to deploy the required infrastructure. EKS Blueprints helps compose complete EKS clusters that are fully bootstrapped with the operational software needed to deploy and operate workloads. With EKS Blueprints, the configuration for the desired state of the EKS environment, such as the control plane, worker nodes, and Kubernetes add-ons, is described as an infrastructure as code (IaC) blueprint. After a blueprint is configured, it can be used to create consistent environments across multiple AWS accounts and Regions using continuous deployment automation.

The content in this post reflects real-life situations and experiences, but deployments in other environments may vary. We use a single AWS account with separate VPCs; your accounts, networks, and configurations may differ. Treat the information provided as a general guide and adapt it to your specific requirements and local conditions.

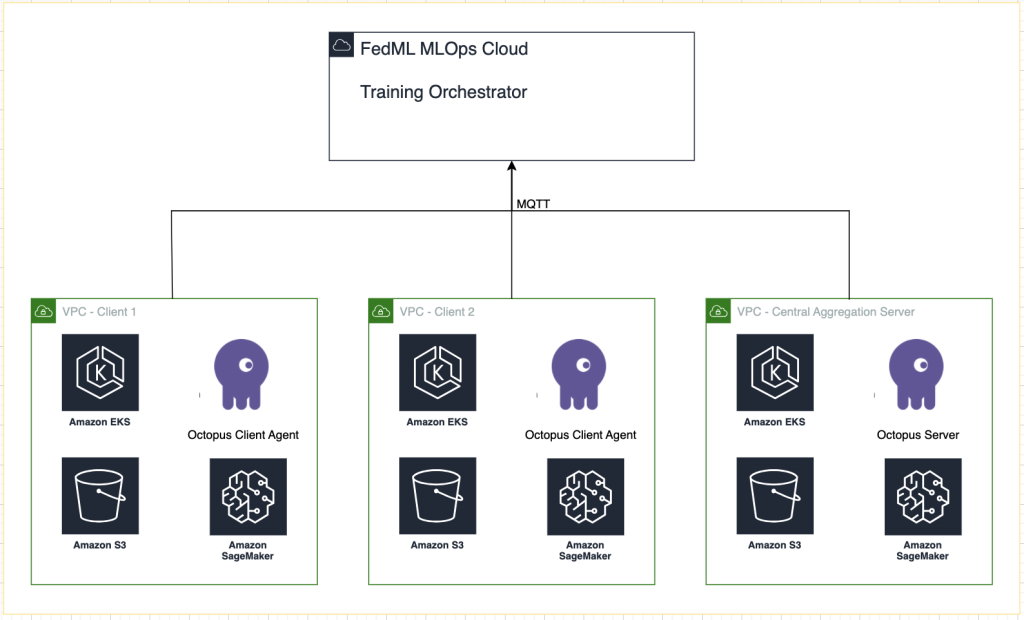

The following diagram illustrates our solution architecture.

In addition to the tracking provided by FedML MLOps for each training run, we use Amazon SageMaker Experiments to track the performance of each client model and the centralized (aggregator) model.

SageMaker Experiments is a capability of SageMaker that lets you create, manage, analyze, and compare your ML experiments. By recording experiment details, parameters, and results, researchers can accurately reproduce and validate their work. It allows for effective comparison and analysis of different approaches, leading to informed decision-making. Additionally, tracking experiments facilitates iterative improvement by providing insights into the progression of models and enabling researchers to learn from previous iterations, ultimately accelerating the development of more effective solutions.

We send the following to SageMaker Experiments for each run:

- Model evaluation metrics – Training loss and Area Under the Curve (AUC)

- Hyperparameters – Epoch, learning rate, batch size, optimizer, and weight decay
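To show what the AUC metric in this list measures, the following minimal sketch computes it directly from its definition: the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as half. The function name `roc_auc` and the sample values are hypothetical; the sample code for this post may compute the metric with a library instead.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via pairwise comparison of scores.

    This O(n^2) form is fine for a dataset of a few hundred rows.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative label")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ordering
    return wins / (len(positives) * len(negatives))


# Hypothetical predictions for four patients.
auc = roc_auc(labels=[0, 0, 1, 1], scores=[0.1, 0.4, 0.35, 0.8])
```

In this example three of the four positive-negative pairs are ordered correctly, so the AUC is 0.75.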

Prerequisites

To follow along with this post, you should have the following prerequisites:

- An AWS account

- Local access to the AWS Command Line Interface (AWS CLI) or usage of AWS CloudShell

- Terraform

- kubectl

- A FedML account ID

Deploy the solution

To begin, clone the repository hosting the sample code locally:

Then deploy the use case infrastructure using the following commands:

The Terraform template may take 20–30 minutes to fully deploy. After it’s deployed, follow the steps in the next sections to run the FL application.

Create an MLOps deployment package

As described in the FedML documentation, we need to create the client and server packages, which the MLOps platform distributes to the server and clients to begin training.

To create these packages, run the following script found in the root directory:

This will create the respective packages in the following directory in the project’s root directory:

Upload the packages to the FedML MLOps platform

Complete the following steps to upload the packages:

- On the FedML UI, choose My Applications in the navigation pane.

- Choose New Application.

- Upload the client and server packages from your workstation.

- You can also adjust the hyperparameters or create new ones.

Trigger federated training

To run federated training, complete the following steps:

- On the FedML UI, choose Project List in the navigation pane.

- Choose Create a new project.

- Enter a group name and a project name, then choose OK.

- Choose the newly created project and choose Create new run to trigger a training run.

- Select the edge client devices and the central aggregator server for this training run.

- Choose the application that you created in the previous steps.

- Update any of the hyperparameters or use the default settings.

- Choose Start to start training.

- Choose the Training Status tab and wait for the training run to complete. You can also explore the other available tabs.

- When training is complete, choose the System tab to see the training time durations on your edge servers and aggregation events.

View results and experiment details

When the training is complete, you can view the results using FedML and SageMaker.

On the FedML UI, on the Models tab, you can see the aggregator and client model. You can also download these models from the website.

You can also log in to Amazon SageMaker Studio and choose Experiments in the navigation pane.

The following screenshot shows the logged experiments.

Experiment tracking code

In this section, we explore the code that integrates SageMaker experiment tracking with the FL framework training.

In an editor of your choice, open the following folder to see the edits to the code to inject SageMaker experiment tracking code as a part of the training:

To track the training, we create a SageMaker experiment and log parameters and metrics using the log_parameter and log_metric commands, as outlined in the following code sample.

An entry in the config/fedml_config.yaml file declares the experiment prefix, which is referenced in the code to create unique experiment names: sm_experiment_name: "fed-heart-disease". You can update this to any value of your choice.
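One way a prefix like this could be turned into unique experiment names is sketched below. This is an assumption, not the repository's code: the helper `build_experiment_name`, the `role` argument, and the timestamp suffix are all hypothetical, and the character check is a conservative guess at what SageMaker accepts in names.

```python
import re
from datetime import datetime, timezone
from typing import Optional


def build_experiment_name(prefix: str, role: str,
                          now: Optional[datetime] = None) -> str:
    """Combine the configured prefix, a role label, and a UTC timestamp."""
    now = now or datetime.now(timezone.utc)
    name = f"{prefix}-{role}-{now.strftime('%Y%m%d-%H%M%S')}"
    # Conservative assumption: letters, digits, and hyphens only.
    if not re.fullmatch(r"[A-Za-z0-9]+(-[A-Za-z0-9]+)*", name):
        raise ValueError(f"unsupported characters in experiment name: {name}")
    return name


# The prefix matches the sm_experiment_name value shown above.
example_name = build_experiment_name(
    "fed-heart-disease", "client-1",
    now=datetime(2024, 1, 2, 3, 4, 5, tzinfo=timezone.utc),
)
```

A timestamp suffix keeps repeated runs from colliding on the same name while still grouping them under one recognizable prefix.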

For example, see the following code for the heart_disease_trainer.py, which is used by each client to train the model on their own dataset:

For each client run, the experiment details are tracked using the following code in heart_disease_trainer.py:

Similarly, you can use the code in heart_disease_aggregator.py to run a test on local data after updating the model weights. The details are logged after each communication run with the clients.
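The general shape of such tracking hooks can be sketched as follows. The sketch uses a hypothetical in-memory stand-in rather than a live SageMaker session so that it runs anywhere. In the SageMaker Python SDK, the `Run` object from `sagemaker.experiments` exposes `log_parameter` and `log_metric` methods with these names, but the function `track_training`, the metric name, and the hyperparameter values here are assumptions and do not reproduce the repository's code.

```python
from typing import Any, Dict, List, Protocol, Tuple


class Tracker(Protocol):
    """Minimal interface the training code depends on."""

    def log_parameter(self, name: str, value: Any) -> None: ...

    def log_metric(self, name: str, value: float, step: int) -> None: ...


class InMemoryTracker:
    """Hypothetical stand-in that records calls instead of sending them to AWS."""

    def __init__(self) -> None:
        self.parameters: Dict[str, Any] = {}
        self.metrics: List[Tuple[str, int, float]] = []

    def log_parameter(self, name: str, value: Any) -> None:
        self.parameters[name] = value

    def log_metric(self, name: str, value: float, step: int) -> None:
        self.metrics.append((name, step, value))


def track_training(tracker: Tracker, hyperparameters: Dict[str, Any],
                   losses_per_epoch: List[float]) -> None:
    """Log hyperparameters once, then one training-loss point per epoch."""
    for name, value in hyperparameters.items():
        tracker.log_parameter(name, value)
    for epoch, loss in enumerate(losses_per_epoch):
        tracker.log_metric(name="train_loss", value=loss, step=epoch)


# Hypothetical values for one client run.
tracker = InMemoryTracker()
track_training(
    tracker,
    hyperparameters={"epochs": 3, "learning_rate": 0.001, "batch_size": 32},
    losses_per_epoch=[0.69, 0.55, 0.48],
)
```

Depending on a small interface rather than on the SDK directly makes the trainer and aggregator easy to test locally, and the same calls can be pointed at a real experiment run in deployment.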

Clean up

When you’re done with the solution, clean up the resources you used to avoid unnecessary costs. Actively tidying up the environment, such as deleting unused instances, stopping unnecessary services, and removing temporary data, contributes to a clean and organized infrastructure. You can use the following code to clean up your resources:

Summary

By using Amazon EKS as the infrastructure and FedML as the framework for FL, we are able to provide a scalable and managed environment for training and deploying shared models while respecting data privacy. With the decentralized nature of FL, organizations can collaborate securely, unlock the potential of distributed data, and improve ML models without compromising data privacy.

As always, AWS welcomes your feedback. Please leave your thoughts and questions in the comments section.

About the Authors

Randy DeFauw is a Senior Principal Solutions Architect at AWS. He holds an MSEE from the University of Michigan, where he worked on computer vision for autonomous vehicles. He also holds an MBA from Colorado State University. Randy has held a variety of positions in the technology space, ranging from software engineering to product management. He entered the big data space in 2013 and continues to explore that area. He is actively working on projects in the ML space and has presented at numerous conferences, including Strata and GlueCon.

Arnab Sinha is a Senior Solutions Architect for AWS, acting as Field CTO to help organizations design and build scalable solutions supporting business outcomes across data center migrations, digital transformation and application modernization, big data, and machine learning. He has supported customers across a variety of industries, including energy, retail, manufacturing, healthcare, and life sciences. Arnab holds all AWS Certifications, including the ML Specialty Certification. Prior to joining AWS, Arnab was a technology leader and previously held architect and engineering leadership roles.

Prachi Kulkarni is a Senior Solutions Architect at AWS. Her specialization is machine learning, and she is actively working on designing solutions using various AWS ML, big data, and analytics offerings. Prachi has experience in multiple domains, including healthcare, benefits, retail, and education, and has worked in a range of positions in product engineering and architecture, management, and customer success.

Tamer Sherif is a Principal Solutions Architect at AWS, with a diverse background in the technology and enterprise consulting services realm, spanning over 17 years as a Solutions Architect. With a focus on infrastructure, Tamer’s expertise covers a broad spectrum of industry verticals, including commercial, healthcare, automotive, public sector, manufacturing, oil and gas, media services, and more. His proficiency extends to various domains, such as cloud architecture, edge computing, networking, storage, virtualization, business productivity, and technical leadership.

Hans Nesbitt is a Senior Solutions Architect at AWS based out of Southern California. He works with customers across the western US to craft highly scalable, flexible, and resilient cloud architectures. In his spare time, he enjoys spending time with his family, cooking, and playing guitar.

Chaoyang He is Co-founder and CTO of FedML, Inc., a startup running for a community building open and collaborative AI from anywhere at any scale. His research focuses on distributed and federated machine learning algorithms, systems, and applications. He received his PhD in Computer Science from the University of Southern California.

Al Nevarez is Director of Product Management at FedML. Before FedML, he was a group product manager at Google, and a senior manager of data science at LinkedIn. He has several data product-related patents, and he studied engineering at Stanford University.

Salman Avestimehr is Co-founder and CEO of FedML. He has been a Dean’s Professor at USC, Director of the USC-Amazon Center on Trustworthy AI, and an Amazon Scholar in Alexa AI. He is an expert on federated and decentralized machine learning, information theory, security, and privacy. He is a Fellow of IEEE and received his PhD in EECS from UC Berkeley.

Samir Lad is an accomplished enterprise technologist with AWS who works closely with customers’ C-level executives. As a former C-suite executive who has driven transformations across multiple Fortune 100 companies, Samir shares his invaluable experiences to help his clients succeed in their own transformation journey.

Stephen Kraemer is a Board and CxO advisor and former executive at AWS. Stephen advocates culture and leadership as the foundations of success. He professes security and innovation as the drivers of cloud transformation, enabling highly competitive, data-driven organizations.

Author: Randy DeFauw