Generate synthetic data for evaluating RAG systems using Amazon Bedrock

Evaluating your Retrieval Augmented Generation (RAG) system to make sure it fulfils your business requirements is paramount before deploying it to production environments. However, this requires acquiring a high-quality dataset of real-world question-answer pairs, which can be a daunting task, especially in the early stages of development. This is where synthetic data generation comes into play. With Amazon Bedrock, you can generate synthetic datasets that mimic actual user queries, enabling you to evaluate your RAG system’s performance efficiently and at scale. With synthetic data, you can streamline the evaluation process and gain confidence in your system’s capabilities before unleashing it to the real world.

This post explains how to use Anthropic Claude on Amazon Bedrock to generate synthetic data for evaluating your RAG system. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Fundamentals of RAG evaluation

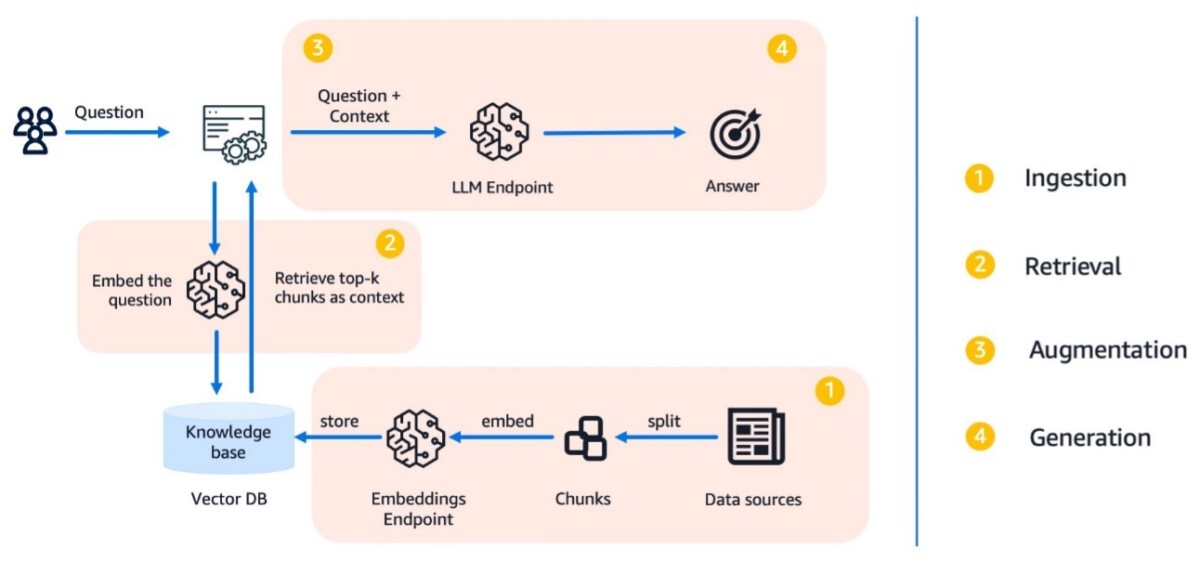

Before diving deep into how to evaluate a RAG application, let’s recap the basic building blocks of a naive RAG workflow, as shown in the following diagram.

The workflow consists of the following steps:

- In the ingestion step, which happens asynchronously, data is split into separate chunks. An embedding model is used to generate embeddings for each of the chunks, which are stored in a vector store.

- When the user asks the system a question, an embedding is generated from the question and the top-k most relevant chunks are retrieved from the vector store.

- The RAG model augments the user input by adding the relevant retrieved data in context. This step uses prompt engineering techniques to communicate effectively with the large language model (LLM). The augmented prompt allows the LLM to generate an accurate answer to user queries.

- An LLM is prompted to formulate a helpful answer based on the user’s question and the retrieved chunks.
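The retrieval and augmentation steps above can be sketched in a few lines of Python. This is a toy illustration only: `embed` is a bag-of-words stand-in for a real embedding model, an in-memory list stands in for the vector store, and all function names are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_top_k(question, chunks, k=2):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_augmented_prompt(question, retrieved):
    """Place the retrieved chunks in the prompt ahead of the user question."""
    context = "\n\n".join(retrieved)
    return (
        "Answer the question using only this context:\n"
        f"{context}\n\nQuestion: {question}"
    )
```

In a real system, the embedding model and vector store replace `embed` and the list scan, but the control flow stays the same.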

Amazon Bedrock Knowledge Bases offers a streamlined approach to implement RAG on AWS, providing a fully managed solution for connecting FMs to custom data sources. To implement RAG using Amazon Bedrock Knowledge Bases, you begin by specifying the location of your data, typically in Amazon Simple Storage Service (Amazon S3), and selecting an embedding model to convert the data into vector embeddings. Amazon Bedrock then creates and manages a vector store in your account, typically using Amazon OpenSearch Serverless, handling the entire RAG workflow, including embedding creation, storage, management, and updates. You can use the RetrieveAndGenerate API for a straightforward implementation, which automatically retrieves relevant information from your knowledge base and generates responses using a specified FM. For more granular control, the Retrieve API is available, allowing you to build custom workflows by processing retrieved text chunks and developing your own orchestration for text generation. Additionally, Amazon Bedrock Knowledge Bases offers customization options, such as defining chunking strategies and selecting custom vector stores like Pinecone or Redis Enterprise Cloud.
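As a rough sketch of the two APIs, the following builds the request payloads for `RetrieveAndGenerate` and `Retrieve` and sends the former through the boto3 `bedrock-agent-runtime` client. The helper names, the knowledge base ID, and the model ARN are placeholders we introduce for illustration; boto3 is imported lazily so the request builders can be inspected without AWS access.

```python
def build_retrieve_and_generate_request(question, knowledge_base_id, model_arn):
    """Payload for RetrieveAndGenerate: retrieval plus answer generation."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def build_retrieve_request(question, knowledge_base_id, top_k=5):
    """Payload for Retrieve: returns text chunks only, no generation."""
    return {
        "knowledgeBaseId": knowledge_base_id,
        "retrievalQuery": {"text": question},
        "retrievalConfiguration": {
            "vectorSearchConfiguration": {"numberOfResults": top_k}
        },
    }


def ask_knowledge_base(question, knowledge_base_id, model_arn, client=None):
    """Send a RetrieveAndGenerate request and return the generated answer text."""
    if client is None:
        import boto3  # lazy import: only needed for a real call

        client = boto3.client("bedrock-agent-runtime")
    request = build_retrieve_and_generate_request(
        question, knowledge_base_id, model_arn
    )
    response = client.retrieve_and_generate(**request)
    return response["output"]["text"]
```

Passing a `client` explicitly also makes it straightforward to substitute a stub when you test your orchestration code.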

A RAG application has many moving parts, and on your way to production you’ll need to make changes to various components of your system. Without a proper automated evaluation workflow, you won’t be able to measure the effect of these changes and will be operating blindly regarding the overall performance of your application.

To evaluate such a system properly, you need to collect an evaluation dataset of typical user questions and answers.

Moreover, you need to make sure you evaluate not only the generation part of the process but also the retrieval. An LLM without relevant retrieved context can’t answer the user’s question if the information wasn’t present in the training data. This holds true even if it has exceptional generation capabilities.

As such, a typical RAG evaluation dataset consists of the following minimum components:

- A list of questions users will ask the RAG system

- A list of corresponding answers to evaluate the generation step

- The context or a list of contexts that contain the answer for each question to evaluate the retrieval
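One possible way to represent such a dataset entry is a small data class. The class and field names below are our own choice, and `retrieval_hit` is a deliberately simple substring check meant only to show how the stored contexts can be used to evaluate retrieval.

```python
from dataclasses import dataclass, field


@dataclass
class EvalRecord:
    """One evaluation example: question, reference answer, supporting contexts."""

    question: str
    reference_answer: str
    contexts: list = field(default_factory=list)


def retrieval_hit(record, retrieved_chunks):
    """True if every reference context appears in at least one retrieved chunk."""
    return all(
        any(ctx in chunk for chunk in retrieved_chunks) for ctx in record.contexts
    )
```

Averaging `retrieval_hit` over all records gives a basic retrieval score that is independent of the generation step.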

In an ideal world, you would take real user questions as a basis for evaluation. Although this is the optimal approach because it directly resembles end-user behavior, this is not always feasible, especially in the early stages of building a RAG system. As you progress, you should aim to incorporate real user questions into your evaluation set.

To learn more about how to evaluate a RAG application, see Evaluate the reliability of Retrieval Augmented Generation applications using Amazon Bedrock.

Solution overview

We use a sample use case to illustrate the process by building an Amazon shareholder letter chatbot that allows business analysts to gain insights about the company’s strategy and performance over the past years.

For the use case, we use PDF files of Amazon’s shareholder letters as our knowledge base. These letters contain valuable information about the company’s operations, initiatives, and future plans. In a RAG implementation, the knowledge retriever might use a database that supports vector searches to dynamically look up relevant documents that serve as the knowledge source.

The following diagram illustrates the workflow to generate the synthetic dataset for our RAG system.

The workflow includes the following steps:

- Load the data from your data source.

- Chunk the data as you would for your RAG application.

- Generate relevant questions from each document.

- Generate an answer by prompting an LLM.

- Extract the relevant text that answers the question.

- Evolve the question according to a specific style.

- Filter questions and improve the dataset, either with domain experts or with LLM-based critique agents.

We use a model from Anthropic’s Claude 3 model family to extract questions and answers from our knowledge source, but you can experiment with other LLMs as well. Amazon Bedrock makes this effortless by providing standardized API access to many FMs.

For the orchestration and automation steps in this process, we use LangChain. LangChain is an open source Python library designed to build applications with LLMs. It provides a modular and flexible framework for combining LLMs with other components, such as knowledge bases, retrieval systems, and other AI tools, to create powerful and customizable applications.

The next sections walk you through the most important parts of the process. If you want to dive deeper and run it yourself, refer to the notebook on GitHub.

Load and prepare the data

First, load the shareholder letters using LangChain’s PyPDFDirectoryLoader and use the RecursiveCharacterTextSplitter to split the PDF documents into chunks. The RecursiveCharacterTextSplitter divides the text into chunks of a specified size while trying to preserve context and meaning of the content. It’s a good way to start when working with text-based documents. You don’t have to split your documents to create your evaluation dataset if your LLM supports a context window that is large enough to fit your documents, but you could potentially end up with a lower quality of generated questions due to the larger size of the task. You want to have the LLM generate multiple questions per document in this case.
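To give an intuition for what recursive character splitting does, here is a simplified pure-Python sketch. It is not LangChain’s implementation: `recursive_split` is a hypothetical helper that has no chunk overlap and drops the separators at chunk boundaries. It shows only the core idea of trying coarse separators first and falling back to finer ones when a piece is still too large.

```python
def recursive_split(text, chunk_size, separators=("\n\n", "\n", " ", "")):
    """Split text into chunks of at most chunk_size characters."""
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators or separators[0] == "":
        # Last resort: hard cut every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    sep, finer = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small pieces into one chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:
            # The piece alone is too large: retry with finer separators.
            chunks.extend(recursive_split(piece, chunk_size, finer))
            current = ""
    if current:
        chunks.append(current)
    return chunks
```

Paragraph breaks are preferred over line breaks, and line breaks over spaces, which is why the resulting chunks tend to keep related sentences together.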

To demonstrate the process of generating a corresponding question and answer and iteratively refining them, we use an example chunk from the loaded shareholder letters throughout this post:

Generate an initial question

To facilitate prompting the LLM using Amazon Bedrock and LangChain, you first configure the inference parameters. To accurately extract more extensive contexts, set the max_tokens parameter to 4096, which corresponds to the maximum number of tokens the LLM will generate in its output. Additionally, define the temperature parameter as 0.2 because the goal is to generate responses that adhere to the specified rules while still allowing for a degree of creativity. This value differs for different use cases and can be determined by experimentation.
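As an illustration of these inference parameters, the following sketch passes them through the Amazon Bedrock Converse API using boto3 directly rather than LangChain. The helper names are hypothetical, and the model ID is an assumption; substitute a model that is enabled in your account.

```python
# Assumed model ID for Anthropic Claude 3 Haiku; adjust to your account and Region.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_converse_request(prompt, max_tokens=4096, temperature=0.2):
    """Build a Converse API request with the inference parameters from the text."""
    return {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def invoke_llm(prompt, client=None):
    """Send the prompt to the model and return the generated text."""
    if client is None:
        import boto3  # lazy import: only needed for a real call

        client = boto3.client("bedrock-runtime")
    response = client.converse(**build_converse_request(prompt))
    return response["output"]["message"]["content"][0]["text"]
```

A function like `invoke_llm` can then serve as the single entry point for every prompt in the pipeline, so the parameters are changed in one place when you experiment.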

You use each generated chunk to create synthetic questions that mimic those a real user might ask. By prompting the LLM to analyze a portion of the shareholder letter data, you generate relevant questions based on the information presented in the context. We use the following sample prompt to generate a single question for a specific context. For simplicity, the prompt is hardcoded to generate a single question, but you can also instruct the LLM to generate multiple questions with a single prompt.

The rules can be adapted to better guide the LLM in generating questions that reflect the types of queries your users would pose, tailoring the approach to your specific use case.
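To make the idea concrete, the following is a hypothetical question-generation prompt of our own, not the prompt used to produce the results in this post. The `llm` argument is assumed to be any callable that takes a prompt string and returns the model’s text response.

```python
# Hypothetical prompt template; adapt the rules to your own users.
QUESTION_PROMPT = """\
You write evaluation questions for a question-answering system.
Rules:
- Ask exactly one question that the context below can answer.
- Do not refer to "the context" or "the text" in the question.
- Keep the question short and specific.

<context>
{context}
</context>

Return only the question."""


def generate_question(chunk, llm):
    """Ask the LLM for a single question that the chunk can answer."""
    return llm(QUESTION_PROMPT.format(context=chunk)).strip()
```

Keeping the rules in a bulleted list inside the prompt makes it easy to add or remove a rule and compare the resulting questions.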

The following is the generated question from our example chunk:

What was the YoY growth of AWS revenue in 2021?

Generate answers

To use the questions for evaluation, you need to generate a reference answer for each of the questions to test against. With the following prompt template, you can generate a reference answer to the created question based on the question and the original source chunk:
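A reference-answer step could look like the following sketch. The template is a hypothetical example rather than the one used in this post, the `NOT_ANSWERABLE` sentinel is our own convention, and `llm` is again assumed to be a callable that maps a prompt string to the model’s text response.

```python
# Hypothetical prompt template with a sentinel for unanswerable questions.
ANSWER_PROMPT = """\
Answer the question using only the information in the context.
If the context does not contain the answer, reply exactly: NOT_ANSWERABLE

<context>
{context}
</context>

<question>
{question}
</question>

Return only the answer."""


def generate_answer(question, chunk, llm):
    """Return a reference answer, or None if the chunk cannot answer the question."""
    answer = llm(ANSWER_PROMPT.format(context=chunk, question=question)).strip()
    return None if answer == "NOT_ANSWERABLE" else answer
```

Returning `None` for unanswerable questions lets you drop those pairs before they reach the evaluation set.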

The following is the generated answer based on the example chunk:

“The AWS revenue grew 37% year-over-year in 2021.”

Extract relevant context

To make the dataset verifiable, we use the following prompt to extract the relevant sentences from the given context to answer the generated question. Knowing the relevant sentences, you can check whether the question and answer are correct.
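One automated check you might add on top of this step is to confirm that the extracted sentence really occurs in the source chunk. The helpers below are hypothetical and use a simple normalization (whitespace, case, typographic apostrophes) that we chose for illustration.

```python
import re


def normalize(text):
    """Collapse whitespace, unify apostrophes, and lowercase for comparison."""
    text = text.replace("\u2019", "'")
    return re.sub(r"\s+", " ", text).strip().lower()


def is_verbatim_extract(source_sentence, chunk):
    """True if the extracted sentence occurs in the chunk after normalization."""
    return normalize(source_sentence) in normalize(chunk)
```

Rows that fail this check are candidates for regeneration or manual review, because the model may have paraphrased or invented the supporting sentence.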

The following is the relevant source sentence extracted using the preceding prompt:

“This shift by so many companies (along with the economy recovering) helped re-accelerate AWS's revenue growth to 37% YoY in 2021.”

Refine questions

When generating question and answer pairs from the same prompt for the whole dataset, it might appear that the questions are repetitive and similar in form, and therefore don’t mimic real end-user behavior. To prevent this, take the previously created questions and prompt the LLM to modify them according to the rules and guidance established in the prompt. By doing so, a more diverse dataset is synthetically generated. The prompt for generating questions tailored to your specific use case heavily depends on that particular use case. Therefore, your prompt must accurately reflect your end-users by setting appropriate rules or providing relevant examples. The process of refining questions can be repeated as many times as necessary.

Users of your application might not always phrase their questions in the same way; for instance, they might use abbreviations. This is why it’s crucial to develop a diverse dataset. The following is the evolved version of our example question:

“AWS rev YoY growth in ’21?”
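The refinement step can be sketched as a loop that feeds each rewritten question back into the same prompt. The template and its rules are hypothetical examples of ours, not the prompt used in this post, and `llm` is assumed to be a callable that maps a prompt string to the model’s text response.

```python
# Hypothetical rewrite rules; tailor them to how your users actually write.
EVOLVE_PROMPT = """\
Rewrite the question the way a busy analyst might type it into a search box.
Rules:
- Keep the meaning unchanged.
- Prefer abbreviations and a compact style.
- Return only the rewritten question.

<question>
{question}
</question>"""


def evolve_question(question, llm, rounds=1):
    """Rewrite the question repeatedly; each round starts from the previous result."""
    for _ in range(rounds):
        question = llm(EVOLVE_PROMPT.format(question=question)).strip()
    return question
```

Using several prompt variants with different rules, and picking one per question, is one way to increase the diversity of the resulting dataset.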

Automate dataset generation

To scale the process of the dataset generation, we iterate over all the chunks in our knowledge base; generate questions, answers, relevant sentences, and refinements for each question; and save them to a pandas data frame to prepare the full dataset.
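The iteration could be organized along the following lines. The four step functions are passed in as arguments and are assumed to wrap your own prompts; `build_dataset`, `to_dataframe`, and the column names are hypothetical choices for this sketch.

```python
def build_dataset(chunks, generate_question, generate_answer,
                  extract_source, evolve_question):
    """Run every generation step for each chunk and collect one row per chunk."""
    rows = []
    for chunk in chunks:
        question = generate_question(chunk)
        rows.append({
            "chunk": chunk,
            "question": question,
            "answer": generate_answer(question, chunk),
            "source_sentence": extract_source(question, chunk),
            "evolved_question": evolve_question(question),
        })
    return rows


def to_dataframe(rows):
    """Convert the collected rows to a pandas data frame."""
    import pandas as pd  # lazy import: only needed for this conversion

    return pd.DataFrame(rows)
```

Because each chunk is processed independently, the loop is a natural candidate for parallel execution if generation time becomes a bottleneck.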

To provide a clearer understanding of the structure of the dataset, the following table presents a sample row based on the example chunk used throughout this post.

| Field | Value |
| --- | --- |
| Chunk | “Our AWS and Consumer businesses have had different demand trajectories during the pandemic. In the first year of the pandemic, AWS revenue continued to grow at a rapid clip—30% year over year (“YoY”) in 2020 on a $35 billion annual revenue base in 2019—but slower than the 37% YoY growth in 2019. […] This shift by so many companies (along with the economy recovering) helped re-accelerate AWS’s revenue growth to 37% YoY in 2021. Conversely, our Consumer revenue grew dramatically in 2020. In 2020, Amazon’s North America and International Consumer revenue grew 39% YoY on the very large 2019 revenue base of $245 billion; and, this extraordinary growth extended into 2021 with revenue increasing 43% YoY in Q1 2021. These are astounding numbers. We realized the equivalent of three years’ forecasted growth in about 15 months. As the world opened up again starting in late Q2 2021, and more people ventured out to eat, shop, and travel,” |
| Question | “What was the YoY growth of AWS revenue in 2021?” |
| Answer | “The AWS revenue grew 37% year-over-year in 2021.” |
| Source Sentence | “This shift by so many companies (along with the economy recovering) helped re-accelerate AWS’s revenue growth to 37% YoY in 2021.” |
| Evolved Question | “AWS rev YoY growth in ’21?” |

With a context of 1,500–2,000 tokens, generating one set (initial question, answer, evolved question, and source sentence) takes an average of 2.6 seconds using Anthropic Claude 3 Haiku. Generating 1,000 sets of questions and answers costs approximately $2.80 using Anthropic Claude 3 Haiku. The pricing page gives a detailed overview of the cost structure. This makes dataset generation for RAG evaluation more time- and cost-efficient than writing these question sets manually.

Improve your dataset using critique agents

Although using synthetic data is a good starting point, the next step should be to review and refine the dataset, filtering out or modifying questions that aren’t relevant to your specific use case. One effective approach to accomplish this is by using critique agents.

Critique agents are a technique used in natural language processing (NLP) to evaluate the quality and suitability of questions in a dataset for a particular task or application using a machine learning model. In our case, the critique agents are employed to assess whether the questions in the dataset are valid and appropriate for our RAG system.

The two main metrics evaluated by the critique agents in our example are question relevance and answer groundedness. Question relevance determines how relevant the generated question is for a potential user of our system, and groundedness assesses whether the generated answers are indeed based on the given context.
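A minimal critique step for these two metrics might look like the following sketch. The prompts, the 1 to 5 rating scale, the `Rating:` output format, and the threshold are all assumptions of ours rather than details from this post; `llm` is assumed to be a callable that maps a prompt string to the model’s text response.

```python
import re

# Hypothetical critique prompts; both ask for a rating on an assumed 1-5 scale.
RELEVANCE_PROMPT = """\
Rate how relevant this question is for a business analyst studying the company.
Question: {question}
Reply in the form:
Evaluation: <reasoning>
Rating: <integer from 1 to 5>"""

GROUNDEDNESS_PROMPT = """\
Rate how well the answer is supported by the context alone.
Context: {chunk}
Question: {question}
Answer: {answer}
Reply in the form:
Evaluation: <reasoning>
Rating: <integer from 1 to 5>"""


def parse_rating(response):
    """Extract the integer rating, or None if the reply is malformed."""
    match = re.search(r"Rating:\s*([1-5])\b", response)
    return int(match.group(1)) if match else None


def critique(row, llm):
    """Rate one dataset row for question relevance and answer groundedness."""
    return {
        "relevance": parse_rating(llm(RELEVANCE_PROMPT.format(**row))),
        "groundedness": parse_rating(llm(GROUNDEDNESS_PROMPT.format(**row))),
    }


def filter_rows(rows, llm, min_rating=4):
    """Keep rows whose ratings are all present and at least min_rating."""
    kept = []
    for row in rows:
        ratings = critique(row, llm).values()
        if all(r is not None and r >= min_rating for r in ratings):
            kept.append(row)
    return kept
```

Asking the model for its reasoning before the rating, and treating malformed replies as failures, keeps the filter conservative.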

Evaluating the generated questions helps with assessing the quality of a dataset and eventually the quality of the evaluation. The generated question was rated very well:

Best practices for generating synthetic datasets

Although generating synthetic datasets offers numerous benefits, it’s essential to follow best practices to maintain the quality and representativeness of the generated data:

- Combine with real-world data – Although synthetic datasets can mimic real-world scenarios, they might not fully capture the nuances and complexities of actual human interactions or edge cases. Combining synthetic data with real-world data can help address this limitation and create more robust datasets.

- Choose the right model – Use a different LLM for dataset creation than the one used for RAG generation to avoid self-enhancement bias.

- Implement robust quality assurance – You can employ multiple quality assurance mechanisms, such as critique agents, human evaluation, and automated checks, to make sure the generated datasets meet the desired quality standards and accurately represent the target use case.

- Iterate and refine – You should treat synthetic dataset generation as an iterative process. Continuously refine and improve the process based on feedback and performance metrics, adjusting parameters, prompts, and quality assurance mechanisms as needed.

- Domain-specific customization – For highly specialized or niche domains, consider fine-tuning the LLM (such as with PEFT or RLHF) by injecting domain-specific knowledge to improve the quality and accuracy of the generated datasets.

Conclusion

The generation of synthetic datasets is a powerful technique that can significantly enhance the evaluation process of your RAG system, especially in the early stages of development when real-world data is scarce or difficult to obtain. By taking advantage of the capabilities of LLMs, this approach enables the creation of diverse and representative datasets that accurately mimic real human interactions, while also providing the scalability necessary to meet your evaluation needs.

Although this approach offers numerous benefits, it’s essential to acknowledge its limitations. Firstly, the quality of the synthetic dataset heavily relies on the performance and capabilities of the underlying language model, knowledge retrieval system, and quality of prompts used for generation. Being able to understand and adjust the prompts for generation is crucial in this process. Biases and limitations present in these components may be reflected in the generated dataset. Additionally, capturing the full complexity and nuances of real-world interactions can be challenging because synthetic datasets may not account for all edge cases or unexpected scenarios.

Despite these limitations, generating synthetic datasets remains a valuable tool for accelerating the development and evaluation of RAG systems. By streamlining the evaluation process and enabling iterative development cycles, this approach can contribute to the creation of better-performing AI systems.

We encourage developers, researchers, and enthusiasts to explore the techniques mentioned in this post and the accompanying GitHub repository and experiment with generating synthetic datasets for your own RAG applications. Hands-on experience with this technique can provide valuable insights and contribute to the advancement of RAG systems in various domains.

About the Authors

Johannes Langer is a Senior Solutions Architect at AWS, working with enterprise customers in Germany. Johannes is passionate about applying machine learning to solve real business problems. In his personal life, Johannes enjoys working on home improvement projects and spending time outdoors with his family.

Lukas Wenzel is a Solutions Architect at Amazon Web Services in Hamburg, Germany. He focuses on supporting software companies building SaaS architectures. In addition to that, he engages with AWS customers on building scalable and cost-efficient generative AI features and applications. In his free time, he enjoys playing basketball and running.

David Boldt is a Solutions Architect at Amazon Web Services. He helps customers build secure and scalable solutions that meet their business needs. He specializes in machine learning to address industry-wide challenges, using technologies to drive innovation and efficiency across various sectors.

Author: Lukas Wenzel