Guardrails for Amazon Bedrock now available with new safety filters and privacy controls

” In the preview post, Antje showed you how to use guardrails to configure thresholds to filter content across harmful categories and define a set of topics that need to be avoided in the context of your application… The Content filters feature now has two additional safety categories: Miscondu…

Today, I am happy to announce the general availability of Guardrails for Amazon Bedrock, first released in preview at re:Invent 2023. With Guardrails for Amazon Bedrock, you can implement safeguards in your generative artificial intelligence (generative AI) applications that are customized to your use cases and responsible AI policies. You can create multiple guardrails tailored to different use cases and apply them across multiple foundation models (FMs), improving end-user experiences and standardizing safety controls across generative AI applications. You can use Guardrails for Amazon Bedrock with all large language models (LLMs) in Amazon Bedrock, including fine-tuned models.

Guardrails for Bedrock offers industry-leading safety protection on top of the native capabilities of FMs, helping customers block as much as 85% more harmful content than protection natively provided by some foundation models on Amazon Bedrock today. Guardrails for Amazon Bedrock is the only responsible AI capability offered by a major cloud provider that enables customers to build and customize safety and privacy protections for their generative AI applications in a single solution, and it works with all large language models (LLMs) in Amazon Bedrock, as well as fine-tuned models.

Aha! is a software company that helps more than 1 million people bring their product strategy to life. “Our customers depend on us every day to set goals, collect customer feedback, and create visual roadmaps,” said Dr. Chris Waters, co-founder and Chief Technology Officer at Aha!. “That is why we use Amazon Bedrock to power many of our generative AI capabilities. Amazon Bedrock provides responsible AI features, which enable us to have full control over our information through its data protection and privacy policies, and block harmful content through Guardrails for Bedrock. We just built on it to help product managers discover insights by analyzing feedback submitted by their customers. This is just the beginning. We will continue to build on advanced AWS technology to help product development teams everywhere prioritize what to build next with confidence.”

In the preview post, Antje showed you how to use guardrails to configure thresholds to filter content across harmful categories and define a set of topics that need to be avoided in the context of your application. The Content filters feature now has two additional safety categories: Misconduct for detecting criminal activities and Prompt Attack for detecting prompt injection and jailbreak attempts. We also added important new features, including sensitive information filters to detect and redact personally identifiable information (PII) and word filters to block inputs containing profane and custom words (for example, harmful words, competitor names, and products).

Guardrails for Amazon Bedrock sits in between the application and the model. Guardrails automatically evaluates everything going into the model from the application and coming out of the model to the application to detect and help prevent content that falls into restricted categories.

You can recap the steps in the preview release blog to learn how to configure Denied topics and Content filters. Let me show you how the new features work.

New features

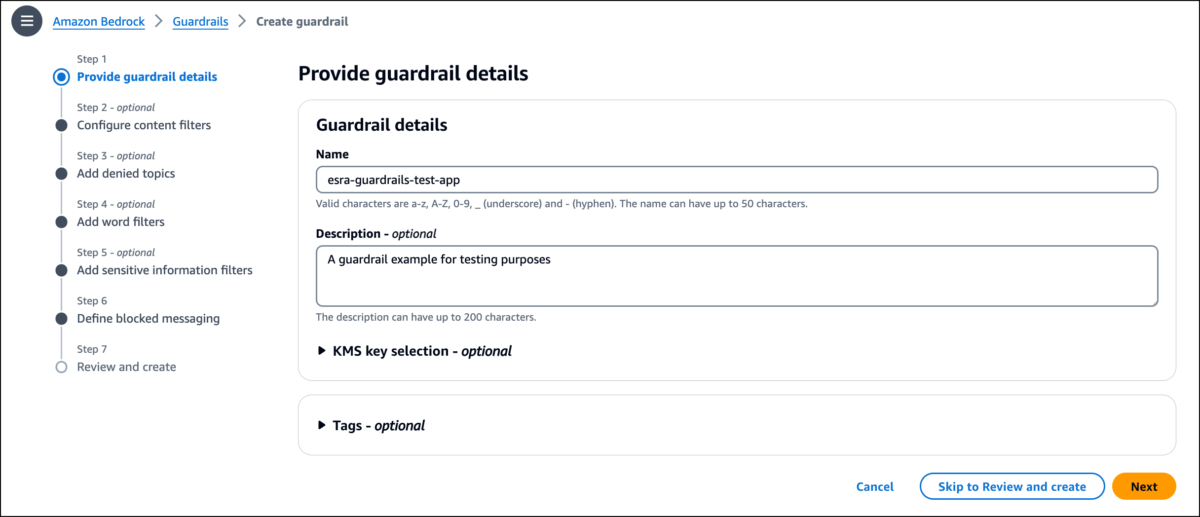

To start using Guardrails for Amazon Bedrock, I go to the AWS Management Console for Amazon Bedrock, where I can create guardrails and configure the new capabilities. In the navigation pane in the Amazon Bedrock console, I choose Guardrails, and then I choose Create guardrail.

I enter the guardrail Name and Description. I choose Next to move to the Add sensitive information filters step.

I use Sensitive information filters to detect sensitive and private information in user inputs and FM outputs. Based on the use cases, I can select a set of entities to be either blocked in inputs (for example, a FAQ-based chatbot that doesn’t require user-specific information) or redacted in outputs (for example, conversation summarization based on chat transcripts). The sensitive information filter supports a set of predefined PII types. I can also define custom regex-based entities specific to my use case and needs.

I add two PII types (Name, Email) from the list and add a regular expression pattern using Booking ID as Name and [0-9a-fA-F]{8} as the Regex pattern.

I choose Next and enter custom messages that will be displayed if my guardrail blocks the input or the model response in the Define blocked messaging step. I review the configuration at the last step and choose Create guardrail.

I navigate to the Guardrails Overview page and choose the Anthropic Claude Instant 1.2 model using the Test section. I enter the following call center transcript in the Prompt field and choose Run.

Please summarize the below call center transcript. Put the name, email and the booking ID to the top:

Agent: Welcome to ABC company. How can I help you today?

Customer: I want to cancel my hotel booking.

Agent: Sure, I can help you with the cancellation. Can you please provide your booking ID?

Customer: Yes, my booking ID is 550e8408.

Agent: Thank you. Can I have your name and email for confirmation?

Customer: My name is Jane Doe and my email is jane.doe@gmail.com

Agent: Thank you for confirming. I will go ahead and cancel your reservation.

Guardrail action shows there are three instances where the guardrails came in to effect. I use View trace to check the details. I notice that the guardrail detected the Name, Email and Booking ID and masked them in the final response.

I use Word filters to block inputs containing profane and custom words (for example, competitor names or offensive words). I check the Filter profanity box. The profanity list of words is based on the global definition of profanity. Additionally, I can specify up to 10,000 phrases (with a maximum of three words per phrase) to be blocked by the guardrail. A blocked message will show if my input or model response contain these words or phrases.

Now, I choose Custom words and phrases under Word filters and choose Edit. I use Add words and phrases manually to add a custom word CompetitorY. Alternatively, I can use Upload from a local file or Upload from S3 object if I need to upload a list of phrases. I choose Save and exit to return to my guardrail page.

I enter a prompt containing information about a fictional company and its competitor and add the question What are the extra features offered by CompetitorY?. I choose Run.

I use View trace to check the details. I notice that the guardrail intervened according to the policies I configured.

Now available

Guardrails for Amazon Bedrock is now available in US East (N. Virginia) and US West (Oregon) Regions.

For pricing information, visit the Amazon Bedrock pricing page.

To get started with this feature, visit the Guardrails for Amazon Bedrock web page.

For deep-dive technical content and to learn how our Builder communities are using Amazon Bedrock in their solutions, visit our community.aws website.

— EsraAuthor: Esra Kayabali