How Veriff decreased deployment time by 80% using Amazon SageMaker multi-model endpoints

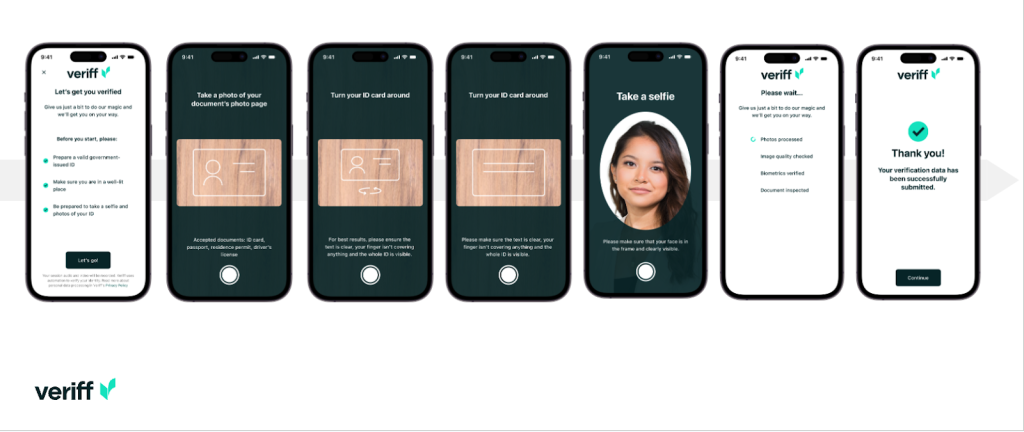

Veriff is an identity verification platform partner for innovative growth-driven organizations, including pioneers in financial services, FinTech, crypto, gaming, mobility, and online marketplaces. They provide advanced technology that combines AI-powered automation with human feedback, deep insights, and expertise.

Veriff delivers a proven infrastructure that enables their customers to have trust in the identities and personal attributes of their users across all the relevant moments in their customer journey. Veriff is trusted by customers such as Bolt, Deel, Monese, Starship, Super Awesome, Trustpilot, and Wise.

As an AI-powered solution, Veriff needs to create and run dozens of machine learning (ML) models in a cost-effective way. These models range from lightweight tree-based models to deep learning computer vision models, which need to run on GPUs to achieve low latency and improve the user experience. Veriff is also currently adding more products to its offering, targeting a hyper-personalized solution for its customers. Serving different models for different customers adds to the need for a scalable model serving solution.

In this post, we show you how Veriff standardized their model deployment workflow using Amazon SageMaker, reducing costs and development time.

Infrastructure and development challenges

Veriff’s backend architecture is based on a microservices pattern, with services running on different Kubernetes clusters hosted on AWS infrastructure. This approach was initially used for all company services, including microservices that run expensive computer vision ML models.

Some of these models required deployment on GPU instances. Conscious of the comparatively higher cost of GPU-backed instance types, Veriff developed a custom solution on Kubernetes to share a given GPU’s resources between different service replicas. A single GPU typically has enough VRAM to hold multiple of Veriff’s computer vision models in memory.

Although the solution did alleviate GPU costs, it also came with the constraint that data scientists needed to indicate beforehand how much GPU memory their model would require. Furthermore, DevOps were burdened with manually provisioning GPU instances in response to demand patterns. This caused an operational overhead and overprovisioning of instances, which resulted in a suboptimal cost profile.

Apart from GPU provisioning, this setup also required data scientists to build a REST API wrapper for each model, which was needed to provide a generic interface for other company services to consume, and to encapsulate preprocessing and postprocessing of model data. These APIs required production-grade code, which made it challenging for data scientists to productionize models.

Veriff’s data science platform team looked for alternatives to this approach. The main objective was to smooth the transition from research to production for the company’s data scientists by providing simpler deployment pipelines. The secondary objective was to reduce the operational costs of provisioning GPU instances.

Solution overview

Veriff required a new solution that solved two problems:

- Allow building REST API wrappers around ML models with ease

- Allow managing provisioned GPU instance capacity optimally and, if possible, automatically

Ultimately, the ML platform team decided to use SageMaker multi-model endpoints (MMEs). This decision was driven by MME support for NVIDIA’s Triton Inference Server, an ML-focused server that makes it easy to wrap models as REST APIs and that Veriff was already experimenting with, as well as by the ability of MMEs to natively manage the auto scaling of GPU instances via simple auto scaling policies.

Two MMEs were created at Veriff, one for staging and one for production. This approach allows them to run testing steps in a staging environment without affecting the production models.

SageMaker MMEs

SageMaker is a fully managed service that provides developers and data scientists the ability to build, train, and deploy ML models quickly. SageMaker MMEs provide a scalable and cost-effective solution for deploying a large number of models for real-time inference. MMEs use a shared serving container and a fleet of resources that can use accelerated instances such as GPUs to host all of your models. This reduces hosting costs by maximizing endpoint utilization compared to using single-model endpoints. It also reduces deployment overhead because SageMaker manages loading and unloading models in memory and scaling them based on the endpoint’s traffic patterns. In addition, all SageMaker real-time endpoints benefit from built-in capabilities to manage and monitor models, including shadow variants, auto scaling, and native integration with Amazon CloudWatch (for more information, refer to CloudWatch Metrics for Multi-Model Endpoint Deployments).
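To make the shared-endpoint idea concrete, the following is a minimal sketch of how a client might address one specific model on an MME. It relies on the `TargetModel` parameter of the boto3 `sagemaker-runtime` `invoke_endpoint` call. The endpoint name, archive name, tensor name, and the JSON request shape (a KServe-v2-style payload, which Triton accepts) are illustrative assumptions and are not taken from Veriff’s setup; check which content type your serving container expects.

```python
import json


def build_invoke_kwargs(endpoint_name, target_model, input_name, shape, datatype, data):
    """Build keyword arguments for sagemaker-runtime invoke_endpoint.

    TargetModel names the model archive, relative to the MME's S3 prefix,
    that should serve this request.
    """
    payload = {
        "inputs": [
            {"name": input_name, "shape": shape, "datatype": datatype, "data": data}
        ]
    }
    return {
        "EndpointName": endpoint_name,
        "TargetModel": target_model,
        # Assumed content type for a JSON request body sent to Triton.
        "ContentType": "application/octet-stream",
        "Body": json.dumps(payload),
    }


def invoke(kwargs):
    """Send the request. Needs boto3 and AWS credentials, so it is not run here."""
    import boto3  # imported lazily so the builder above works without AWS access

    client = boto3.client("sagemaker-runtime")
    response = client.invoke_endpoint(**kwargs)
    return json.loads(response["Body"].read())


if __name__ == "__main__":
    # All names below are hypothetical placeholders.
    kwargs = build_invoke_kwargs(
        endpoint_name="example-mme-staging",
        target_model="screen_detection_3.tar.gz",
        input_name="image",
        shape=[1, 3],
        datatype="FP32",
        data=[0.0, 0.5, 1.0],
    )
    print(kwargs["TargetModel"])
```

Because the model is selected per request, callers of different models can share one endpoint and one fleet of instances.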

Custom Triton ensemble models

There were several reasons why Veriff decided to use Triton Inference Server, the main ones being:

- It allows data scientists to build REST APIs from models by arranging model artifact files in a standard directory format (no code solution)

- It’s compatible with all major AI frameworks (PyTorch, TensorFlow, XGBoost, and more)

- It provides ML-specific low-level and server optimizations such as dynamic batching of requests

Using Triton allows data scientists to deploy models with ease because they only need to build formatted model repositories instead of writing code to build REST APIs (Triton also supports Python models if custom inference logic is required). This decreases model deployment time and gives data scientists more time to focus on building models instead of deploying them.

Another important feature of Triton is that it allows you to build model ensembles, which are groups of models that are chained together. These ensembles can be run as if they were a single Triton model. Veriff currently employs this feature to deploy preprocessing and postprocessing logic with each ML model using Python models (as mentioned earlier), ensuring that there are no mismatches in the input data or model output when models are used in production.

The following is what a typical Triton model repository looks like for this workload:

The model.py file contains preprocessing and postprocessing code. The trained model weights are in the screen_detection_inferencer directory, under model version 1 (the model is in ONNX format in this example, but it can also be in TensorFlow, PyTorch, or another format). The ensemble model definition is in the screen_detection_pipeline directory, where inputs and outputs between steps are mapped in a configuration file.

Additional dependencies needed to run the Python models are detailed in a requirements.txt file, and need to be conda-packed to build a Conda environment (python_env.tar.gz). For more information, refer to Managing Python Runtime and Libraries. Also, config files for Python steps need to point to python_env.tar.gz using the EXECUTION_ENV_PATH directive.

The model folder then needs to be TAR compressed and renamed using model_version.txt. Finally, the resulting <model_name>_<model_version>.tar.gz file is copied to the Amazon Simple Storage Service (Amazon S3) bucket connected to the MME, allowing SageMaker to detect and serve the model.
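The packaging step can be sketched as follows. This is an illustration under stated assumptions, not Veriff’s actual tooling. The screen_detection_inferencer and screen_detection_pipeline directory names come from the description above. The preprocessing and postprocessing directory names, the placeholder file contents, the version value, and the choice to place the repository contents at the root of the archive are all hypothetical.

```python
import tarfile
import tempfile
from pathlib import Path


def make_demo_repo(root: Path) -> Path:
    """Create a placeholder model folder (file contents are dummies)."""
    repo = root / "screen_detection"
    files = {
        "screen_detection_preprocessing/1/model.py": "# preprocessing code\n",
        "screen_detection_preprocessing/config.pbtxt": "# Python step config\n",
        "screen_detection_inferencer/1/model.onnx": "placeholder weights\n",
        "screen_detection_inferencer/config.pbtxt": "# ONNX model config\n",
        "screen_detection_postprocessing/1/model.py": "# postprocessing code\n",
        "screen_detection_postprocessing/config.pbtxt": "# Python step config\n",
        "screen_detection_pipeline/config.pbtxt": "# ensemble definition\n",
        "requirements.txt": "# Python dependencies\n",
        "model_version.txt": "3\n",
    }
    for rel, text in files.items():
        path = repo / rel
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(text)
    # Ensemble version directory, left empty in this sketch.
    (repo / "screen_detection_pipeline" / "1").mkdir()
    return repo


def package_model(repo_dir: Path, model_name: str, out_dir: Path) -> Path:
    """Pack repo_dir into <model_name>_<model_version>.tar.gz.

    The version is read from model_version.txt inside repo_dir.
    """
    version = (repo_dir / "model_version.txt").read_text().strip()
    archive = out_dir / f"{model_name}_{version}.tar.gz"
    with tarfile.open(archive, "w:gz") as tar:
        for child in sorted(repo_dir.iterdir()):
            # arcname drops the local parent path from archive member names.
            tar.add(child, arcname=child.name)
    return archive


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        archive = package_model(make_demo_repo(root), "screen_detection", root)
        print(archive.name)
```

Reading the version from a file kept next to the model means the archive name changes whenever the version file is bumped, so each version lands in S3 under its own key.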

Model versioning and continuous deployment

As the previous section made apparent, building a Triton model repository is straightforward. However, running all the steps needed to deploy it is tedious and error-prone if done manually. To overcome this, Veriff built a monorepo containing all models to be deployed to MMEs, where data scientists collaborate in a Gitflow-like approach. This monorepo has the following features:

- It’s managed using Pants.

- Code quality tools such as Black and MyPy are applied using Pants.

- Unit tests are defined for each model, which check that the model output is the expected output for a given model input.

- Model weights are stored alongside model repositories. These weights can be large binary files, so DVC is used to sync them with Git in a versioned manner.

This monorepo is integrated with a continuous integration (CI) tool. For every new push to the repo or new model, the following steps are run:

- Pass the code quality check.

- Download the model weights.

- Build the Conda environment.

- Spin up a Triton server using the Conda environment and use it to process requests defined in unit tests.

- Build the final model TAR file (<model_name>_<model_version>.tar.gz).

These steps make sure that models have the quality required for deployment, so for every push to a repo branch, the resulting TAR file is copied (in another CI step) to the staging S3 bucket. When pushes are done in the main branch, the model file is copied to the production S3 bucket. The following diagram depicts this CI/CD system.
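The branch-based promotion rule described above can be sketched as a small routing function. The bucket names are hypothetical placeholders, and the sketch assumes that a push to main goes only to the production bucket while every other branch goes to staging; the source does not spell out whether main is also copied to staging.

```python
def target_bucket(
    branch: str,
    staging: str = "example-mme-staging",        # hypothetical bucket name
    production: str = "example-mme-production",  # hypothetical bucket name
) -> str:
    """Pick the S3 bucket for a CI run based on the branch that was pushed."""
    return production if branch == "main" else staging


def archive_key(model_name: str, model_version: str) -> str:
    """Object key for the packaged model: <model_name>_<model_version>.tar.gz."""
    return f"{model_name}_{model_version}.tar.gz"


def upload(archive_path: str, branch: str, model_name: str, model_version: str) -> None:
    """Copy the archive to S3. Needs boto3 and AWS credentials, so it is not run here."""
    import boto3  # imported lazily so the routing logic is usable without AWS access

    s3 = boto3.client("s3")
    s3.upload_file(archive_path, target_bucket(branch), archive_key(model_name, model_version))


if __name__ == "__main__":
    for branch in ("feature/new-model", "main"):
        print(branch, "->", target_bucket(branch))
```

Keeping the routing rule in one function makes the staging and production paths differ only by bucket, so the artifact tested in staging is built the same way as the one promoted to production.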

Cost and deployment speed benefits

Using MMEs allows Veriff to use a monorepo approach to deploy models to production. In summary, Veriff’s new model deployment workflow consists of the following steps:

- Create a branch in the monorepo with the new model or model version.

- Define and run unit tests in a development machine.

- Push the branch when the model is ready to be tested in the staging environment.

- Merge the branch into main when the model is ready to be used in production.

With this new solution in place, deploying a model at Veriff is a straightforward part of the development process. New model development time has decreased from 10 days to an average of 2 days.

The managed infrastructure provisioning and auto scaling features of SageMaker brought Veriff added benefits. They used the InvocationsPerInstance CloudWatch metric to scale according to traffic patterns, saving on costs without sacrificing reliability. To define the threshold value for the metric, they performed load testing on the staging endpoint to find the best trade-off between latency and cost.
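As an illustration of scaling on invocations per instance, the following sketch builds the two Application Auto Scaling requests commonly used for SageMaker endpoint variants. The use of a target tracking policy, the endpoint and variant names, the capacity limits, and the threshold value are assumptions for the example; the source only states that the InvocationsPerInstance metric was used and that its threshold was chosen through load testing.

```python
def _resource_id(endpoint_name: str, variant_name: str) -> str:
    """Resource identifier format used for SageMaker endpoint variants."""
    return f"endpoint/{endpoint_name}/variant/{variant_name}"


def scalable_target_kwargs(endpoint_name, variant_name, min_instances, max_instances):
    """Arguments for application-autoscaling register_scalable_target."""
    return {
        "ServiceNamespace": "sagemaker",
        "ResourceId": _resource_id(endpoint_name, variant_name),
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
        "MinCapacity": min_instances,
        "MaxCapacity": max_instances,
    }


def scaling_policy_kwargs(endpoint_name, variant_name, invocations_per_instance):
    """Arguments for application-autoscaling put_scaling_policy (target tracking)."""
    return {
        "PolicyName": f"{endpoint_name}-invocations-target",  # hypothetical name
        "ServiceNamespace": "sagemaker",
        "ResourceId": _resource_id(endpoint_name, variant_name),
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Threshold to be chosen from load testing; the caller supplies it.
            "TargetValue": float(invocations_per_instance),
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
        },
    }


def apply(endpoint_name, variant_name, min_instances, max_instances, threshold):
    """Register the policy. Needs boto3 and AWS credentials, so it is not run here."""
    import boto3  # imported lazily so the builders above work without AWS access

    client = boto3.client("application-autoscaling")
    client.register_scalable_target(
        **scalable_target_kwargs(endpoint_name, variant_name, min_instances, max_instances)
    )
    client.put_scaling_policy(
        **scaling_policy_kwargs(endpoint_name, variant_name, threshold)
    )


if __name__ == "__main__":
    # Placeholder values, not Veriff's configuration.
    print(scaling_policy_kwargs("example-mme-production", "AllTraffic", 100))
```

A lower target value adds instances sooner and favors latency, while a higher one packs more traffic onto each instance and favors cost, which is the trade-off the load tests were used to settle.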

After deploying seven production models to MMEs and analyzing spend, Veriff reported a 75% cost reduction in GPU model serving as compared to the original Kubernetes-based solution. Operational costs were reduced as well, because the burden of provisioning instances manually was lifted from the company’s DevOps engineers.

Conclusion

In this post, we reviewed why Veriff chose SageMaker MMEs over self-managed model deployment on Kubernetes. SageMaker takes on the undifferentiated heavy lifting, allowing Veriff to decrease model development time, increase engineering efficiency, and dramatically lower the cost of real-time inference while maintaining the performance needed for their business-critical operations. Finally, we showcased Veriff’s simple yet effective model deployment CI/CD pipeline and model versioning mechanism, which can serve as a reference implementation for combining software development best practices with SageMaker MMEs. You can find code samples on hosting multiple models using SageMaker MMEs on GitHub.

About the Authors

Ricard Borràs is a Senior Machine Learning at Veriff, where he is leading MLOps efforts in the company. He helps data scientists to build faster and better AI / ML products by building a Data Science Platform at the company, and combining several open source solutions with AWS services.

João Moura is an AI/ML Specialist Solutions Architect at AWS, based in Spain. He helps customers with deep learning model large-scale training and inference optimization, and more broadly building large-scale ML platforms on AWS.

Miguel Ferreira works as a Sr. Solutions Architect at AWS based in Helsinki, Finland. AI/ML has been a lifelong interest and he has helped multiple customers integrate Amazon SageMaker into their ML workflows.

Author: Ricard Borràs