Learn how Amazon Ads created a generative AI-powered image generation capability using Amazon SageMaker

Amazon Ads helps advertisers and brands achieve their business goals by developing innovative solutions that reach millions of Amazon customers at every stage of their journey. At Amazon Ads, we believe that what makes advertising effective is delivering relevant ads in the right context and at the right moment within the consumer buying journey. With that goal, Amazon Ads has used artificial intelligence (AI), applied science, and analytics to help its customers drive desired business outcomes for nearly two decades.

In a March 2023 survey, Amazon Ads found that among advertisers who were unable to build successful campaigns, nearly 75 percent cited building the creative content as one of their biggest challenges. To help advertisers more seamlessly address this challenge, Amazon Ads rolled out an image generation capability that quickly and easily develops lifestyle imagery, which helps advertisers bring their brand stories to life. This blog post shares more about how generative AI solutions from Amazon Ads help brands create more visually rich consumer experiences.

In this blog post, we describe the architectural and operational details of how Amazon Ads implemented its generative AI-powered image creation solution on AWS. Before diving deeper into the solution, we start by highlighting the creative experience of an advertiser enabled by generative AI. Next, we present the solution architecture and process flows for machine learning (ML) model building, deployment, and inferencing. We end with lessons learned.

Advertiser creative experience

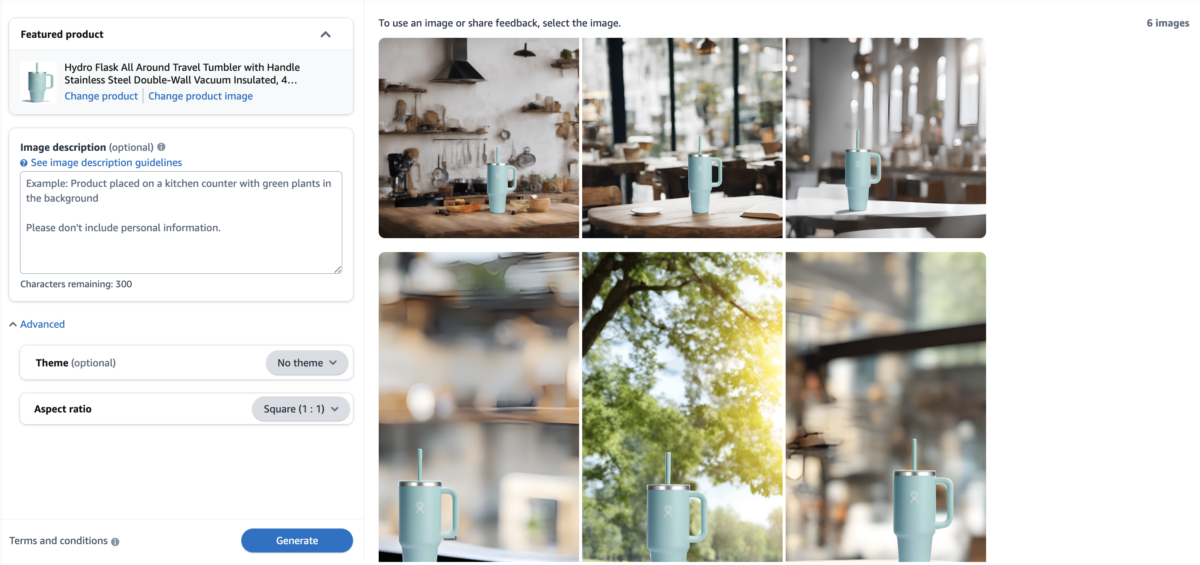

When building ad creative, advertisers prefer to customize it so that it is relevant to their desired audiences. For example, an advertiser might have static images of their product against a white background. From an advertiser's point of view, the capability comes down to three points:

- Image generation converts product-only images into rich, contextually relevant images using generative AI. The approach preserves the original product features, requiring no technical expertise.

- Anyone with access to the Amazon Ads console can create custom brand images without needing technical or design expertise.

- Advertisers can create multiple contextually relevant and engaging product images with no additional cost.

A benefit of the image-generation solution is the automatic creation of relevant product images based on product selection only, with no additional input required from the advertisers. Options such as prompts, themes, and custom product images are available to enhance background imagery, but they are not necessary to generate compelling creative. If advertisers do not supply this information, the model will infer it based on information from their product listing on amazon.com.

Figure 1. An example from the image generation solution showing a hydro flask with various backgrounds.

Solution overview

Figure 2 shows a simplified solution architecture for inferencing and model deployment. The steps for model development and deployment are shown in blue circles and labeled with Roman numerals (i, ii, … viii), whereas the inferencing steps are shown in orange and labeled with Hindu-Arabic numerals (1, 2, … 8).

Figure 2. Solution architecture for inferencing and model deployment.

Amazon SageMaker is at the center of model development and deployment. The team used Amazon SageMaker JumpStart to rapidly prototype and iterate under their desired conditions (step i). Acting as a model hub, JumpStart provided a large selection of foundation models and the team quickly ran their benchmarks on candidate models. After selecting candidate large language models (LLMs), the science teams can proceed with the remaining steps by adding more customization. Amazon Ads applied scientists use SageMaker Studio as the web-based interface to work with SageMaker (step ii). SageMaker has the appropriate access policies to view some intermediary model results, which can be used for further experimentation (step iii).
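As a rough illustration of the prototyping loop in step i, the sketch below deploys each candidate JumpStart model to a temporary endpoint, times a single inference call, and then deletes the endpoint. It is a minimal sketch, not the team's actual benchmark: the function names, the payload shape, and the use of latency as the only metric are assumptions. `JumpStartModel` is the class provided by the SageMaker Python SDK and is imported lazily; `model_factory` is a hypothetical hook that lets you substitute a stub when the SDK or AWS credentials are not available.

```python
import time
from typing import Any, Callable, Dict, Iterable, Optional


def deploy_candidate(model_id: str, instance_type: str,
                     model_factory: Optional[Callable[..., Any]] = None) -> Any:
    """Deploy one candidate model and return its predictor."""
    if model_factory is None:
        # Lazy import: only needed when talking to a real SageMaker account.
        from sagemaker.jumpstart.model import JumpStartModel
        model_factory = JumpStartModel
    model = model_factory(model_id=model_id, instance_type=instance_type)
    return model.deploy()


def benchmark_candidates(model_ids: Iterable[str], instance_type: str, payload: Any,
                         model_factory: Optional[Callable[..., Any]] = None
                         ) -> Dict[str, float]:
    """Return the wall-clock seconds of one predict() call per candidate model."""
    latencies: Dict[str, float] = {}
    for model_id in model_ids:
        predictor = deploy_candidate(model_id, instance_type, model_factory)
        try:
            start = time.perf_counter()
            predictor.predict(payload)
            latencies[model_id] = time.perf_counter() - start
        finally:
            # Always tear the endpoint down so prototypes do not keep accruing cost.
            predictor.delete_endpoint()
    return latencies
```

With real credentials, a call such as `benchmark_candidates(["<jumpstart-model-id>"], "ml.g5.2xlarge", {"prompt": "a water bottle on a beach"})` would exercise one candidate; the model ID, instance type, and payload keys here are placeholders and depend on the model you pick.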

The Amazon Ads team manually reviewed images at scale through a human-in-the-loop process to ensure that the application provides high-quality and responsible images. To do that, the team deployed testing endpoints using SageMaker and generated a large number of images spanning various scenarios and conditions (step iv). Here, Amazon SageMaker Ground Truth allowed ML engineers to easily build the human-in-the-loop workflow (step v). The workflow allowed the Amazon Ads team to experiment with different foundation models and configurations through blind A/B testing to ensure that feedback on the generated images is unbiased. After the chosen model is ready to be moved into production, it is deployed (step vi) using the team’s own in-house Model Lifecycle Manager tool. Under the hood, this tool uses artifacts generated by SageMaker (step vii), which are then deployed into the production AWS account (step viii) using SageMaker SDKs.
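The blind A/B idea can be sketched independently of Ground Truth: for each test case, the images from two candidate models are shown side by side in a random order, reviewers only see "left" and "right", and an answer key kept away from reviewers maps votes back to models. The code below is a minimal sketch under those assumptions; the data shapes and the names `make_blind_pairs` and `tally_votes` are hypothetical and do not describe the team's actual workflow.

```python
import random
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class BlindPair:
    """What a reviewer sees: two images with no model labels attached."""
    task_id: str
    left_image: str
    right_image: str


def make_blind_pairs(images_a: Dict[str, str], images_b: Dict[str, str],
                     seed: int = 0) -> Tuple[List[BlindPair], Dict[str, Tuple[str, str]]]:
    """Pair images from models A and B per task, randomizing which side each lands on."""
    rng = random.Random(seed)
    pairs: List[BlindPair] = []
    answer_key: Dict[str, Tuple[str, str]] = {}  # task_id -> (left model, right model)
    for task_id in sorted(images_a.keys() & images_b.keys()):
        if rng.random() < 0.5:
            pairs.append(BlindPair(task_id, images_a[task_id], images_b[task_id]))
            answer_key[task_id] = ("A", "B")
        else:
            pairs.append(BlindPair(task_id, images_b[task_id], images_a[task_id]))
            answer_key[task_id] = ("B", "A")
    return pairs, answer_key


def tally_votes(votes: Dict[str, str],
                answer_key: Dict[str, Tuple[str, str]]) -> Counter:
    """Map each 'left' or 'right' vote back to the model that produced that image."""
    counts: Counter = Counter()
    for task_id, side in votes.items():
        left_model, right_model = answer_key[task_id]
        counts[left_model if side == "left" else right_model] += 1
    return counts
```

Keeping the answer key separate from the review tasks is what makes the comparison blind: reviewers cannot learn which model tends to appear on which side, so their preferences reflect image quality only.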

Regarding inference, customers using Amazon Ads now have a new API to receive these generated images. Amazon API Gateway receives the PUT request (step 1). The request is then processed by AWS Lambda, which uses AWS Step Functions to orchestrate the process (step 2). The product image is fetched from an image repository, which is part of an existing solution predating this creative feature. The next step is to process customer text prompts and customize the image through content ingestion guardrails. Amazon Comprehend is used to detect undesired context in the text prompt, whereas Amazon Rekognition processes images for content moderation purposes (step 3). If the inputs pass the inspection, the text continues as a prompt, while the image is processed by removing the background (step 4). Then, the deployed text-to-image model generates the image using the prompt and the processed image (step 5). The image is then uploaded into an Amazon Simple Storage Service (Amazon S3) bucket for images, and the metadata about the image is stored in an Amazon DynamoDB table (step 6). This whole process, starting from step 2, is orchestrated by AWS Step Functions. Finally, the Lambda function receives the image and metadata (step 7), which are then sent to the Amazon Ads client service through API Gateway (step 8).
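The orchestrated part of this flow (steps 2 through 6) can be approximated as an Amazon States Language definition. The sketch below builds one as a Python dictionary. It is an assumption-laden illustration, not the production state machine: the state names, the Lambda ARNs, and the `$.moderation.passed` field are all hypothetical, and each Task is assumed to be backed by a Lambda function that forwards whatever data later states need.

```python
import json
from typing import Dict, List, Optional

# Hypothetical placeholder ARNs; replace with real Lambda function ARNs.
EXAMPLE_ARNS = {
    name: f"arn:aws:lambda:REGION:ACCOUNT_ID:function:{name}"
    for name in ("fetch", "moderate", "remove-background", "generate", "store")
}


def task_state(resource_arn: str, next_state: Optional[str] = None) -> dict:
    """Build a Task state; it is terminal when no next state is given."""
    state = {"Type": "Task", "Resource": resource_arn}
    if next_state is None:
        state["End"] = True
    else:
        state["Next"] = next_state
    return state


def build_definition(arns: Dict[str, str]) -> dict:
    """Return a state machine definition mirroring steps 2 through 6."""
    moderate = task_state(arns["moderate"], "InputsPassed")
    # Keep the original input and attach the guardrail verdict under $.moderation.
    moderate["ResultPath"] = "$.moderation"
    return {
        "Comment": "Sketch of the image generation workflow",
        "StartAt": "FetchProductImage",
        "States": {
            "FetchProductImage": task_state(arns["fetch"], "ModerateInputs"),
            "ModerateInputs": moderate,  # text and image guardrails (step 3)
            "InputsPassed": {
                "Type": "Choice",
                "Choices": [{"Variable": "$.moderation.passed",
                             "BooleanEquals": True,
                             "Next": "RemoveBackground"}],
                "Default": "RejectRequest",
            },
            "RemoveBackground": task_state(arns["remove-background"], "GenerateImage"),
            "GenerateImage": task_state(arns["generate"], "StoreImageAndMetadata"),
            "StoreImageAndMetadata": task_state(arns["store"]),  # S3 + DynamoDB
            "RejectRequest": {"Type": "Fail", "Error": "InputRejected",
                              "Cause": "Prompt or image failed content moderation"},
        },
    }


def happy_path(definition: dict) -> List[str]:
    """Follow Next links, taking the first Choice rule, until a terminal state."""
    path, name = [], definition["StartAt"]
    while True:
        path.append(name)
        state = definition["States"][name]
        if state["Type"] == "Choice":
            name = state["Choices"][0]["Next"]
        elif "Next" in state:
            name = state["Next"]
        else:
            return path


if __name__ == "__main__":
    print(json.dumps(build_definition(EXAMPLE_ARNS), indent=2))
```

Modeling the guardrail outcome as a Choice state keeps rejected inputs from ever reaching the text-to-image model, which matches the ordering described above: moderation first, then background removal and generation.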

Conclusion

This post presented the technical solution for the Amazon Ads generative AI-powered image generation solution, which advertisers can use to create customized brand images without needing a dedicated design team. Advertisers have a series of features to generate and customize images, such as writing text prompts, selecting different themes, swapping the featured product, or uploading a new image of the product from their device or asset library. Together, these features allow them to create impactful images for advertising their products.

The architecture uses modular microservices with separate components for model development, registry, model lifecycle management (an orchestration and Step Functions-based solution that processes advertiser inputs, selects the appropriate model, and tracks the job throughout the service), and a customer-facing API. Here, Amazon SageMaker is at the center of the solution, starting from JumpStart to final SageMaker deployment.

If you plan to build your generative AI application on Amazon SageMaker, the fastest way is with SageMaker JumpStart. Watch this presentation to learn how you can start your project with JumpStart.

About the Authors

Anita Lacea is the Single-Threaded Leader of generative AI image ads at Amazon, enabling advertisers to create visually stunning ads with the click of a button. Anita pairs her broad expertise across the hardware and software industry with the latest innovations in generative AI to develop performant and cost-optimized solutions for her customers, revolutionizing the way businesses connect with their audiences. She is passionate about traditional visual arts and is an exhibiting printmaker.

Burak Gozluklu is a Principal AI/ML Specialist Solutions Architect located in Boston, MA. He helps strategic customers adopt AWS technologies and specifically Generative AI solutions to achieve their business objectives. Burak has a PhD in Aerospace Engineering from METU, an MS in Systems Engineering, and a post-doc in system dynamics from MIT in Cambridge, MA. Burak is still a research affiliate at MIT. Burak is passionate about yoga and meditation.

Christopher de Beer is a senior software development engineer at Amazon located in Edinburgh, UK. With a background in visual design, he works on creative building products for advertising, focusing on video generation and helping advertisers reach their customers through visual communication. He builds products that automate creative production, using traditional as well as generative techniques, to reduce friction and delight customers. Outside of his work as an engineer, Christopher is passionate about Human-Computer Interaction (HCI) and interface design.

Yashal Shakti Kanungo is an Applied Scientist III at Amazon Ads. His focus is on generative foundational models that take a variety of user inputs and generate text, images, and videos. It’s a blend of research and applied science, constantly pushing the boundaries of what’s possible in generative AI. Over the years, he has researched and deployed a variety of these models in production across the online advertising spectrum ranging from ad sourcing, click-prediction, headline generation, image generation, and more.

Sravan Sripada is a Senior Applied Scientist at Amazon located in Seattle, WA. His primary focus lies in developing generative AI models that enable advertisers to create engaging ad creatives (images, video, etc.) with minimal effort. Previously, he worked on utilizing machine learning for preventing fraud and abuse on the Amazon store platform. When not at work, he is passionate about engaging in outdoor activities and dedicating time to meditation.

Cathy Willcock is a Principal Technical Business Development Manager located in Seattle, WA. Cathy leads the AWS technical account team supporting Amazon Ads adoption of AWS cloud technologies. Her team works across Amazon Ads enabling discovery, testing, design, analysis, and deployments of AWS services at scale, with a particular focus on innovation to shape the landscape across the AdTech and MarTech industry. Cathy has led engineering, product, and marketing teams and is an inventor of ground-to-air calling (1-800-RINGSKY).

Author: Anita Lacea