Minimize generative AI hallucinations with Amazon Bedrock Automated Reasoning checks

Foundation models (FMs) and generative AI are transforming enterprise operations across industries. McKinsey & Company’s recent research estimates generative AI could contribute up to $4.4 trillion annually to the global economy through enhanced operational efficiency, productivity growth of 0.1% to 0.6% annually, improved customer experience through personalized interactions, and accelerated digital transformation.

Today, organizations struggle with AI hallucination when moving generative AI applications from experimental to production environments. Model hallucination, where AI systems generate plausible but incorrect information, remains a primary concern. The 2024 Gartner CIO Generative AI Survey highlights three major risks: reasoning errors from hallucinations (59% of respondents), misinformation from bad actors (48%), and privacy concerns (44%).

To improve the factual accuracy of large language model (LLM) responses, AWS announced Amazon Bedrock Automated Reasoning checks (in gated preview) at AWS re:Invent 2024. Through logic-based algorithms and mathematical validation, Automated Reasoning checks validate LLM outputs against domain knowledge encoded in the Automated Reasoning policy to help prevent factual inaccuracies. Automated Reasoning checks is part of Amazon Bedrock Guardrails, a comprehensive framework that also provides content filtering, personally identifiable information (PII) redaction, and enhanced security measures. Together, these capabilities enable organizations to implement reliable generative AI safeguards, with Automated Reasoning checks addressing factual accuracy while other Amazon Bedrock Guardrails features help protect against harmful content and safeguard sensitive information.

In this post, we discuss how to help prevent generative AI hallucinations using Amazon Bedrock Automated Reasoning checks.

Automated Reasoning overview

Automated Reasoning is a specialized branch of computer science that uses mathematical proof techniques and formal logical deduction to verify compliance with rules and requirements with absolute certainty under given assumptions. As organizations face increasing needs to verify complex rules and requirements with mathematical certainty, automated reasoning techniques offer powerful capabilities. For example, AWS customers have direct access to automated reasoning-based features such as IAM Access Analyzer, S3 Block Public Access, or VPC Reachability Analyzer.

Unlike probabilistic approaches prevalent in machine learning, Automated Reasoning relies on formal mathematical logic to provide definitive guarantees about what can and can’t be proven. This approach mirrors the rigors of auditors verifying financial statements or compliance officers validating regulatory requirements, but with mathematical precision. By using rigorous logical frameworks and theorem-proving methodologies, Automated Reasoning can conclusively determine whether statements are true or false under given assumptions. This makes it exceptionally valuable for applications that demand high assurance and need to deliver unambiguous conclusions to their users.

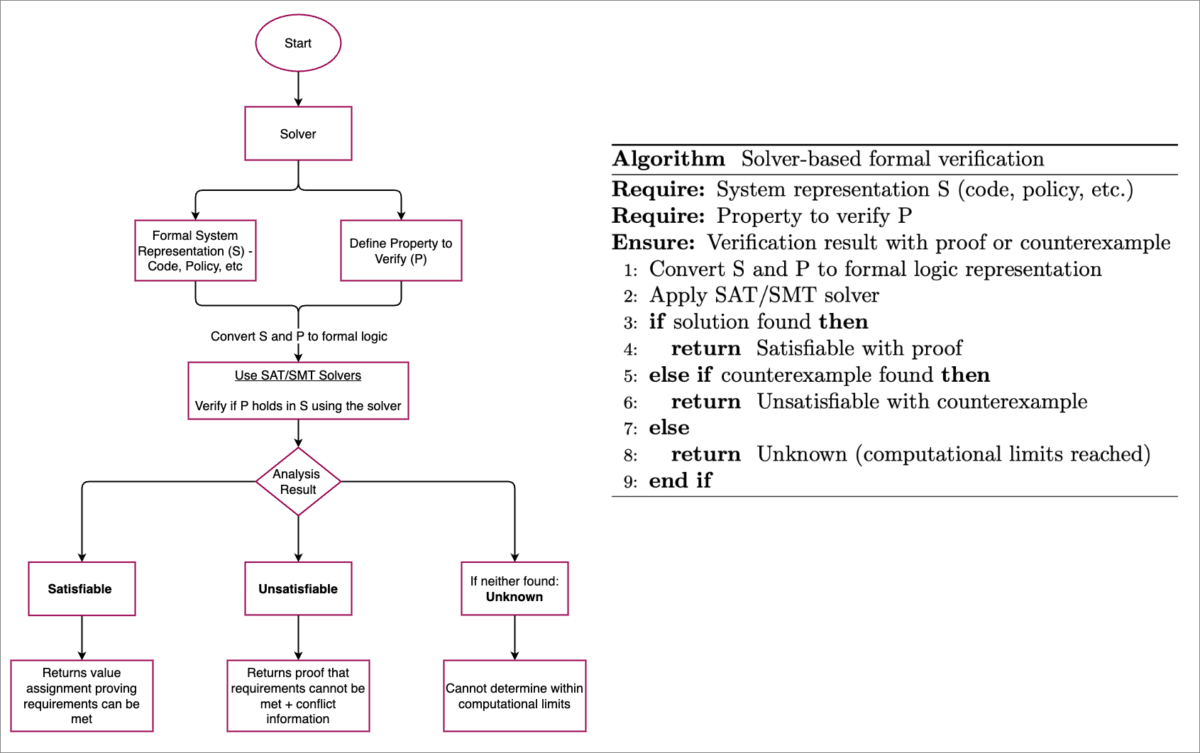

The following workflow illustrates solver-based formal verification, showing both the process flow and algorithm for verifying formal system properties through logical analysis and SAT/SMT solvers.

One of the widely used Automated Reasoning techniques is SAT/SMT solving, which involves encoding a representation of rules and requirements into logical formulas. A logical formula is a mathematical expression that uses variables and logical operators to represent conditions and relationships. After the rules and requirements are encoded into these formulas, specialized tools known as solvers are applied to compute solutions that satisfy these constraints. These solvers determine whether the formulas can be satisfied—whether there exist values for variables that make the formulas true.

This process starts with two main inputs: a formal representation of the system (like code or a policy) expressed as logical formulas, and a property to analyze (such as whether certain conditions are possible or requirements can be met). The solver can return one of three possible outcomes:

- Satisfiable – The solver finds an assignment of values that makes the formulas true, proving that the system can satisfy the given requirements. The solver provides this assignment, which can serve as a concrete example of correct behavior.

- Unsatisfiable – The solver proves that no assignment exists that makes all formulas true, proving that the requirements can’t be met. This often comes with information about which constraints are in conflict, helping identify the incorrect assumptions in the system.

- Unknown – In some cases, the solver might not be able to determine satisfiability within reasonable computational limits, or the encoding might not contain enough information to reach a conclusion.

This technique makes sure that you either get confirmation that the specific property holds (with a concrete example), proof that it can’t be satisfied (with information on conflicting constraints), or an indication that the problem needs to be reformulated or analyzed differently.
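To make the solver outcomes concrete, the following is a minimal sketch of a satisfiability check written in plain Python. It enumerates every true/false assignment, which is only practical for a handful of variables; production SAT/SMT solvers use far more efficient algorithms and can also return Unknown when they hit resource limits, which this toy version never does. The variable names and the eligibility rule are hypothetical and chosen only for illustration.

```python
from itertools import product

def solve(variables, constraints):
    """Brute-force satisfiability check over Boolean variables.

    Returns ("satisfiable", model) with the first satisfying assignment,
    or ("unsatisfiable", None) if no assignment satisfies every constraint.
    """
    for values in product([False, True], repeat=len(variables)):
        model = dict(zip(variables, values))
        if all(constraint(model) for constraint in constraints):
            return "satisfiable", model
    return "unsatisfiable", None

# Hypothetical policy: an employee is eligible for paid leave
# if and only if they are full-time and meet the tenure requirement.
VARIABLES = ["is_full_time", "meets_tenure", "eligible"]
RULES = [
    lambda m: m["eligible"] == (m["is_full_time"] and m["meets_tenure"]),
]

# Property 1: can anyone be eligible at all?
status, model = solve(VARIABLES, RULES + [lambda m: m["eligible"]])

# Property 2: can a part-time employee be eligible?
status_pt, model_pt = solve(
    VARIABLES, RULES + [lambda m: m["eligible"] and not m["is_full_time"]]
)

print(status, model)
print(status_pt, model_pt)
```

The first query is satisfiable and the returned assignment serves as a concrete example of an eligible employee. The second is unsatisfiable, which proves that under this rule no part-time employee can be eligible.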

Key features of Automated Reasoning checks

Automated Reasoning checks offer the following key features:

- Mathematical validation framework – The feature verifies LLM outputs using mathematical logical deduction. Unlike probabilistic methods, it uses sound mathematical approaches to provide definitive guarantees about system behaviors within defined parameters.

- Policy-based knowledge representation – Organizations can create Automated Reasoning policies that encode their rules, procedures, and guidelines into structured, mathematical formats. Organizations can upload documents like PDFs containing HR guidelines or operational workflows, which are then automatically converted into formal logic structures. Policy changes are automatically versioned with unique Amazon Resource Names (ARNs), allowing for change tracking, auditing, and rollback capabilities to maintain consistent policy enforcement.

- Domain expert enablement – The feature is designed to empower domain experts, such as HR personnel or operational managers, to directly encode their knowledge without technical intermediaries. This makes sure that business rules and policies are accurately captured and maintained by those who understand them best.

- Natural language to logic translation – The system uses two complementary approaches: LLMs handle natural language understanding, and a symbolic reasoning engine performs mathematical validation. This hybrid architecture allows users to input policies in plain language while maintaining mathematically rigorous verification.

- Explainable validation results – Each validation check produces detailed findings that indicate whether content is Valid, Invalid, or No Data. The feature provides clear explanations for its decisions, including the extracted factual statements and suggested corrections for invalid content.

- Interactive testing environment – Users can access a chat playground on the Amazon Bedrock console to test and refine policies in real time. The feature supports both interactive testing through the Amazon Bedrock console and automated testing through API integrations, with the ability to export test cases in JSON format for integration into continuous testing pipelines or documentation workflows.

- Seamless AWS integration – The feature integrates directly with Amazon Bedrock Guardrails and can be used alongside other configurable guardrails like Contextual Grounding checks. It can be accessed through both the Amazon Bedrock console and APIs, making it flexible for various implementation needs.

These features combine to create a powerful framework that helps organizations maintain factual accuracy in their AI applications while providing transparent and mathematically sound validation processes.

Solution overview

Now that we understand the key features of Automated Reasoning checks, let’s examine how this capability works within Amazon Bedrock Guardrails. The following section provides a comprehensive overview of the architecture and demonstrates how different components work together to promote factual accuracy and help prevent hallucinations in generative AI applications.

Automated Reasoning checks in Amazon Bedrock Guardrails provides an end-to-end solution for validating AI model outputs using mathematically sound principles. This automated process uses formal logic and mathematical proofs to verify responses against established policies, offering definitive validation results that can significantly improve the reliability of your AI applications.

The following solution architecture follows a systematic workflow that enables rigorous validation of model outputs.

The workflow consists of the following steps:

- Source documents (such as HR guidelines or operational procedures) are uploaded to the system. These documents, along with optional intent descriptions, are processed to create structured rules and variables that form the foundation of an Automated Reasoning policy.

- Subject matter experts review and inspect the created policy to verify accurate representation of business rules. Each validated policy is versioned and assigned a unique ARN for tracking and governance purposes.

- The validated Automated Reasoning policy is associated with Amazon Bedrock Guardrails, where specific policy versions can be selected for implementation. This integration enables automated validation of generative AI outputs.

- When the generative AI application produces a response, Amazon Bedrock Guardrails triggers the Automated Reasoning checks. The system creates logical representations of both the input question and the application’s response, evaluating them against the established policy rules.

- The Automated Reasoning check provides detailed validation results, including whether statements are Valid, Invalid, or No Data. For each finding, it explains which rules and variables were considered, and provides suggestions for making invalid statements valid.

With this solution architecture in place, organizations can confidently deploy generative AI applications knowing that responses will be automatically validated against their established policies using mathematically sound principles.

Prerequisites

To use Automated Reasoning checks in Amazon Bedrock, make sure you have met the following prerequisites:

- An active AWS account

- Access permission through your AWS Account Manager, because Automated Reasoning checks is currently in gated preview

- Confirmation of AWS Regions where Automated Reasoning checks is available

Input dataset

For this post, we examine a sample Paid Leave of Absence (LoAP) policy document as our example dataset. This policy document contains detailed guidelines covering employee eligibility criteria, duration limits, application procedures, and benefits coverage for paid leave. It’s an ideal example to demonstrate how Automated Reasoning checks can validate AI-generated responses against structured business policies, because it contains clear rules and conditions that can be converted into logical statements. The document’s mix of quantitative requirements (such as minimum tenure and leave duration) and qualitative conditions (like performance status and approval processes) makes it particularly suitable for showcasing the capabilities of automated reasoning validation.

The following screenshot shows an example of our policy document.

Start an Automated Reasoning check using the Amazon Bedrock console

The first step is to encode your knowledge—in this case, the sample LoAP policy—into an Automated Reasoning policy. Complete the following steps to initiate an Automated Reasoning check using the Amazon Bedrock console:

- On the Amazon Bedrock console, choose Automated Reasoning Preview under Safeguards in the navigation pane.

- Choose Create policy.

- Provide a policy name and policy description.

- Upload your source document. The source content can’t be modified after creation and must not exceed 6,000 characters. Limits also apply to table sizes and image processing.

- Include a description of the intent of the Automated Reasoning policy you’re creating. For the sample policy, you can use the following intent:

The policy creation process takes a few minutes to complete. When it finishes, the policy contains generated rules and variables. You can edit or remove them, or add new rules and variables.

The policy document version is outlined in the details section along with the intent description and build status.

Next, you create a guardrail in Amazon Bedrock by configuring as many filters as you need.

- On the Amazon Bedrock console, choose Guardrails under Safeguards in the navigation pane.

- Choose Create guardrail.

- Provide guardrail details such as a name and an optional description.

- Add an Automated Reasoning check by choosing Enable Automated Reasoning policy, and choose the policy name and version.

- Choose Next and complete the creation of the guardrail.

- Navigate back to the Automated Reasoning section of the Amazon Bedrock console and open the newly created policy. You can use the test playground and input sample questions and answers that represent real user interactions with your LLM.

- Choose the guardrail you created, then choose Submit to evaluate how your policy handles these exchanges.

After submitting, you’ll be presented with one or more findings. A finding contains a set of facts that were extracted from the input Q&A and are analyzed independently. Each finding includes four key components:

- Validation results – Shows the outcome of Automated Reasoning checks. The system determines these results by evaluating extracted variable assignments against your defined policy rules.

- Applied rules – Displays the specific rules from your policy that were used to reach the validation conclusion.

- Extracted variables – Lists the variables that were identified and used in the validation process.

- Suggestions – Shows variable assignments that would make invalid responses valid, or for valid responses, identifies necessary assumptions. These can be used to generate feedback for your LLM.

Finally, you can use the feedback suggestions to improve your LLM’s responses.

- Collect the rules and suggested variable assignments from invalid results and from valid results that include suggestions.

- Feed these collected variables and rules back to your LLM so that it can revise its original response.

- Refine your policy:

- Edit incorrect rules using natural language.

- Improve variable descriptions when Automated Reasoning checks fail to assign values.

- For effective variable descriptions, include both technical definitions and common user expressions. For example, for a variable named is_full_time, "works more than 20 hours per week" is technically correct because it’s a quote from the source policy, but won’t help Automated Reasoning checks understand what users mean when they say “part-time.” Instead, use "works more than 20 hours per week; set to true if user says 'full-time' and false if user says 'part-time'".
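The feedback step described above can be sketched as a small helper that turns findings into text for the LLM. The `Finding` class below is a hypothetical stand-in that mirrors the four components listed earlier (validation result, applied rules, extracted variables, and suggestions); it is not the actual response format of the service, so you would need to map the real findings onto it.

```python
from dataclasses import dataclass, field

@dataclass
class Finding:
    """Hypothetical container for one Automated Reasoning finding."""
    result: str                                   # "VALID", "INVALID", or "NO_DATA"
    applied_rules: list = field(default_factory=list)
    extracted_variables: dict = field(default_factory=dict)
    suggestions: dict = field(default_factory=dict)

def build_feedback(findings):
    """Collect rules and suggestions from findings that call for a revision.

    Includes invalid findings and valid findings that carry suggestions;
    returns an empty string when nothing needs to be revised.
    """
    lines = []
    for finding in findings:
        needs_feedback = finding.result == "INVALID" or (
            finding.result == "VALID" and finding.suggestions
        )
        if not needs_feedback:
            continue
        lines.append(f"Result: {finding.result}")
        for rule in finding.applied_rules:
            lines.append(f"- Rule: {rule}")
        for name, value in finding.suggestions.items():
            lines.append(f"- Suggested: {name} = {value}")
    return "\n".join(lines)

# Illustrative finding; the rule text and variable are made up for this example.
example = Finding(
    result="INVALID",
    applied_rules=["Only full-time employees are eligible"],
    extracted_variables={"is_full_time": False, "eligible": True},
    suggestions={"is_full_time": True},
)
feedback = build_feedback([example])
print(feedback)
```

The resulting text can be appended to a follow-up prompt that asks the LLM to rewrite its answer so that it is consistent with the listed rules.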

Start an Automated Reasoning check using Python SDK and APIs

First, you need to create an Automated Reasoning policy from your documents using the Amazon Bedrock console as outlined in the previous section. Next, you can use the policy created with the ApplyGuardrail API to validate your generative AI application.

To use the Python SDK for validation using Automated Reasoning checks, follow these steps:

- First, set up the required configurations:

- Before using Amazon Bedrock with Automated Reasoning policies, you will need to load the required service models. After being allowlisted for Amazon Bedrock access, you will receive two model files along with their corresponding version information. The following is a Python script to help you load these service models:

- After you set up the service models, initialize the AWS clients for both Amazon Bedrock and Amazon Bedrock Runtime services. These clients will be used to interact with the models and apply guardrails.

- Before applying Automated Reasoning policies, you need to either locate an existing guardrail or create a new one. The following code first attempts to find a guardrail by name, and if not found, creates a new guardrail with the specified Automated Reasoning policy configuration. This makes sure you have a valid guardrail to work with before proceeding with policy enforcement.

- When testing guardrails with Automated Reasoning policies, you need to properly format your input data. The following code shows how to structure a sample question and answer pair for validation:

- Now that you have your formatted input data, you can apply the guardrail with Automated Reasoning policies to validate the content. The following code sends the input to Amazon Bedrock Guardrails and returns the validation results:

- After applying guardrails, you need to extract and analyze the Automated Reasoning assessment results. The following code shows how to process the guardrail output:

The output will look something like the following:

When a response violates Automated Reasoning policies, the system identifies which rules were violated and provides information about the conflicts. The feedback from the Automated Reasoning policy validation can be routed back to improve the model’s output, promoting compliance while maintaining response quality.
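As a rough illustration of the validation call described in the steps above, the following sketch formats a question and answer pair and passes it to the ApplyGuardrail API through a Boto3 `bedrock-runtime` client. It assumes that your account and SDK version already expose Automated Reasoning checks (the gated preview might require the service model files mentioned earlier), that the `query` and `guard_content` qualifiers are appropriate for your guardrail, and that the guardrail ID and version are placeholders you replace with your own. The layout of the Automated Reasoning section inside the assessments isn't assumed here, so the function returns the assessments unmodified.

```python
def build_content(question, answer):
    """Format a Q&A pair as ApplyGuardrail content blocks."""
    return [
        {"text": {"text": question, "qualifiers": ["query"]}},
        {"text": {"text": answer, "qualifiers": ["guard_content"]}},
    ]

def validate_answer(runtime_client, guardrail_id, guardrail_version, question, answer):
    """Apply a guardrail to a model answer and return (action, assessments).

    runtime_client is expected to be a Boto3 "bedrock-runtime" client,
    or any object with a compatible apply_guardrail method.
    """
    response = runtime_client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source="OUTPUT",  # the content being checked is a model response
        content=build_content(question, answer),
    )
    return response.get("action"), response.get("assessments", [])

def make_runtime_client(region_name):
    """Create the runtime client; Boto3 is imported lazily so the helpers
    above can be exercised without AWS credentials."""
    import boto3
    return boto3.client("bedrock-runtime", region_name=region_name)

# Example usage (placeholder identifiers and Region, requires AWS credentials):
# client = make_runtime_client("us-west-2")
# action, assessments = validate_answer(
#     client, "your-guardrail-id", "DRAFT",
#     "Is a part-time employee eligible for paid leave?",
#     "Yes, part-time employees are eligible.",
# )
```

Passing the client in as a parameter keeps the request-building logic separate from the AWS call, which makes it straightforward to unit test with a stub before running against a real guardrail.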

Possible use cases

Automated Reasoning checks can be applied across various industries to promote accuracy, compliance, and reliability in AI-generated responses while maintaining industry-specific standards and regulations. Although we have tested these checks across multiple applications, we continue to explore additional potential use cases. The following table provides some applications across different sectors.

| Industry | Use Cases |
| --- | --- |
| Healthcare | |
| Financial Services | |
| Travel and Hospitality | |
| Insurance | |
| Energy and Utilities | |
| Manufacturing | |

Best practices for implementation

Successfully implementing Automated Reasoning checks requires careful attention to detail and a systematic approach to achieve optimal validation accuracy and reliable results. The following are some key best practices:

- Document preparation – Use structured text-based PDF documents. Content should be limited to 6,000 characters. Avoid complex formatting that could interfere with the logical model generation.

- Intent description engineering – Create precise policy intents using a clear format. The intent should comprehensively cover expected use cases and potential edge cases. For example:

- Policy validation – Review the generated rules and variables to make sure they accurately capture your business logic and policy requirements. Regular audits of these rules help maintain alignment with current business policies.

- Comprehensive testing – Develop a diverse set of sample Q&As in the test playground to evaluate different validation scenarios (valid, valid with suggestions, and invalid responses). Include edge cases and complex scenarios to provide robust validation coverage.

- Iterative improvement – Regularly update rules and LLM applications based on validation feedback, paying special attention to suggested variables and invalid results to enhance response accuracy. Maintain a feedback loop for continuous refinement.

- Version control management – Implement a systematic approach to policy versioning, maintaining detailed documentation of changes and conducting proper testing before deploying new versions. This helps track policy evolution and facilitates rollbacks if needed.

- Error handling strategy – Develop a comprehensive plan for handling different validation results, including specific procedures for managing invalid responses and incorporating suggested improvements into the response generation process.

- Runtime optimization – Understand and monitor the two-step validation process (fact extraction and logic validation) to achieve optimal performance. Regularly review validation results to identify patterns that might indicate needed improvements in variable descriptions or rule definitions.

- Feedback integration – Establish a systematic process for collecting and analyzing validation feedback, particularly focusing on cases where NO_DATA is returned or when factual claims are incorrectly extracted. Use this information to continuously refine variable descriptions and policy rules.
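One way to approach the error handling and feedback integration practices above is a small retry loop that routes each validation result to a different action. The following is a minimal sketch under stated assumptions: `generate` and `validate` are hypothetical callables that you supply (for example, wrappers around your LLM call and your guardrail call), and the decision to send NO_DATA results to human review is a design choice for this example, not a prescribed behavior.

```python
def answer_with_validation(generate, validate, question, max_attempts=3):
    """Generate an answer and revise it until it validates or attempts run out.

    generate(question, feedback) -> answer text (feedback is None on the first try)
    validate(question, answer)   -> (result, feedback), where result is
                                    "VALID", "INVALID", or "NO_DATA"
    """
    feedback = None
    for _ in range(max_attempts):
        answer = generate(question, feedback)
        result, feedback = validate(question, answer)
        if result == "VALID":
            return {"answer": answer, "status": "validated"}
        if result == "NO_DATA":
            # The policy could not assess the answer; flag it instead of retrying.
            return {"answer": answer, "status": "needs_review"}
        # INVALID: loop again so the next generation can use the feedback.
    return {"answer": None, "status": "rejected"}
```

Capping the number of attempts keeps latency and cost bounded, and logging the `needs_review` and `rejected` cases gives you the raw material for refining variable descriptions and policy rules.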

Conclusion

Amazon Bedrock Automated Reasoning checks represent a significant advancement in formally verifying the outputs of generative AI applications. By combining rigorous mathematical validation with a user-friendly interface, this feature addresses one of the most critical challenges in AI deployment: maintaining factual consistency and minimizing hallucinations. The solution’s ability to validate AI-generated responses against established policies using formal logic provides organizations with a powerful framework for building trustworthy AI applications that can be confidently deployed in production environments.

The versatility of Automated Reasoning checks, demonstrated through various industry use cases and implementation approaches, makes it a valuable tool for organizations across sectors. Whether implemented through the Amazon Bedrock console or programmatically using APIs, the feature’s comprehensive validation capabilities, detailed feedback mechanisms, and integration with existing AWS services enable organizations to establish quality control processes that scale with their needs. The best practices outlined in this post provide a foundation for organizations to maximize the benefits of this technology while maintaining high standards of accuracy.

As enterprises continue to expand their use of generative AI, the importance of automated validation mechanisms becomes increasingly critical. We encourage organizations to explore Amazon Bedrock Automated Reasoning checks and use its capabilities to build more reliable and accurate AI applications. To help you get started, we’ve provided detailed implementation guidance, practical examples, and a Jupyter notebook with code snippets in our GitHub repository that demonstrate how to effectively integrate this feature into your generative AI development workflow. Through systematic validation and continuous refinement, organizations can make sure that their AI applications deliver consistent, accurate, and trustworthy results.

About the Authors

Adewale Akinfaderin is a Sr. Data Scientist–Generative AI, Amazon Bedrock, where he contributes to cutting-edge innovations in foundational models and generative AI applications at AWS. His expertise is in reproducible and end-to-end AI/ML methods, practical implementations, and helping global customers formulate and develop scalable solutions to interdisciplinary problems. He has two graduate degrees in physics and a doctorate in engineering.

Nafi Diallo is a Sr. Applied Scientist in the Automated Reasoning Group and holds a PhD in Computer Science. She is passionate about using automated reasoning to ensure the security of computer systems, improve builder productivity, and enable the development of trustworthy and responsible AI workloads. She worked for more than 5 years in the AWS Application Security organization, helping build scalable API security testing solutions and shifting security assessment left.

Author: Adewale Akinfaderin