Modulate scales ToxMod AI voice chat moderation tool with AWS

From in-game chats to gig economy apps, social media, and beyond, digital conversations can quickly turn toxic, even dangerous. It’s a problem that Massachusetts-based startup Modulate is tackling head-on with its ToxMod voice chat moderation tool. The technology applies artificial intelligence (AI) and machine learning (ML) to voice conversations to flag problematic behavior, and has quickly captured the game industry’s attention.

As interest continued to expand, Modulate scaled up with Amazon Web Services (AWS) to support rapid growth. Today, ToxMod empowers titles like Call of Duty: Modern Warfare III and VR experiences like Rec Room to achieve comprehensive, cost-efficient voice chat content moderation. To help power the continued growth of ToxMod, Modulate recently selected AWS as its preferred cloud provider. Using AWS serverless technologies and AWS Graviton instances with auto-scaling groups at the core of its infrastructure, ToxMod can process and sift through billions of voice chats a day, while leveraging AI to identify and flag problematic behavior to content moderation teams.

As Modulate evolves the tool and the ML models that power it, the company continues to expand ToxMod’s capabilities to support more digital platforms outside of gaming. Modulate CTO Carter Huffman explains, “Gaming was such a formative part of my life growing up that I want to share that experience, but I can’t ignore the darker sides and rough edges therein. To be able to protect some of the most vulnerable players and apply ToxMod to improve voice chat interactions in other digital environments by detecting threats to customers and employees is super gratifying. We’re thrilled to be able to make an impact, and AWS is integral to this mission.”

A rich history in games

Modulate got its start developing an AI voice skin tool for customizing voice chats in gaming, but soon uncovered a deeper purpose: proactively addressing negative interactions in gaming communities. “Gaming harassment has become more prevalent, because social norms aren’t the same in virtual and physical worlds,” Huffman notes. “The probability of walking into a diner and a stranger screaming profanities at you is low, but it happens often in video game lobbies. It’s a huge problem we knew our tech could solve.”

Combining its cost-effective, fast, and accurate understanding of gamer voice chat with AI and ML, Modulate developed ToxMod to identify toxic chat in games so that developers and publishers could determine the appropriate action. These actions could include issuing a warning, muting a player, kicking them out, or in certain cases, reporting them to the authorities.

Once ToxMod was released, it caught on fast. “When we first talked to customers about voice moderation, they were excited, but had the impression voice moderation for trust and safety was too expensive; bringing them a usage-based pricing offer came as a bit of a shock,” Huffman shares. “We’ve gotten so good at optimizing ToxMod by using high-performing, cost-efficient AWS services that we can store massive volumes of audio, process it, moderate it, and apply AI to refine it at a price that developers can afford.”

How ToxMod works

Before a client deploys ToxMod, Modulate works with them to feed the client’s code of conduct into the tool and complete a trial run with the client’s human moderation team. After a few iteration cycles, Huffman says clients often achieve 99% accuracy, which is made possible by a high-scale, low-latency voice understanding engine at ToxMod’s core.

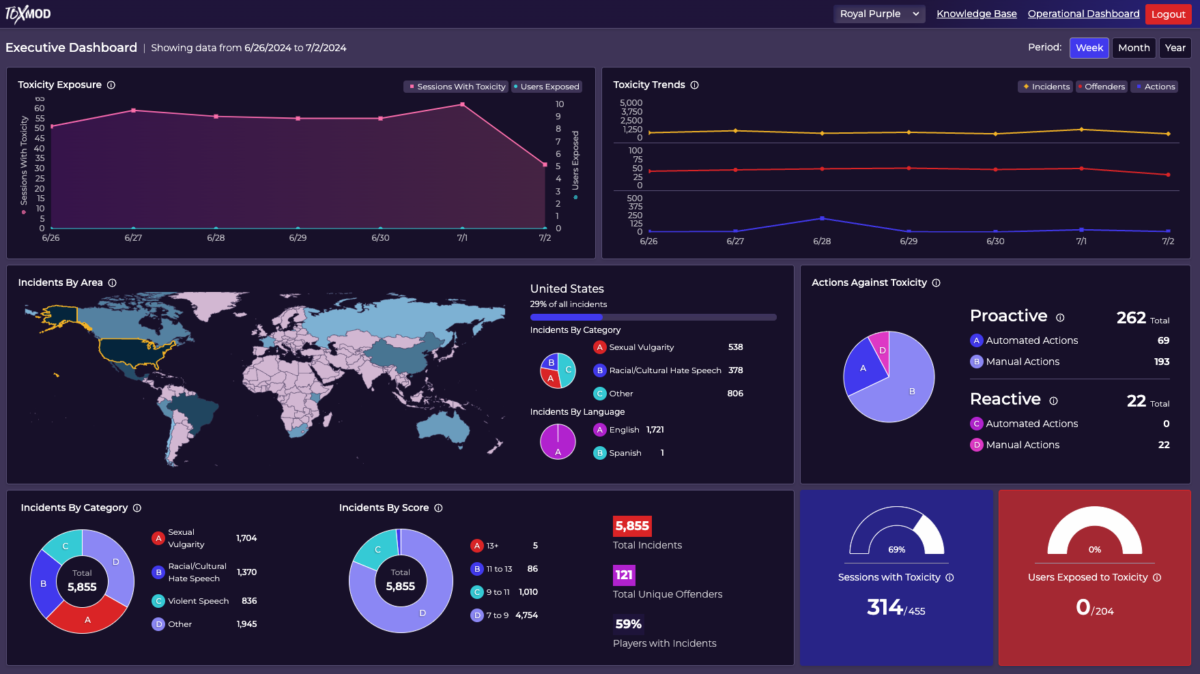

The solution continuously monitors voice chats and proactively ingests millions of audio snippets a minute. Using AI and ML, its engine identifies categorized instances of problematic chat, such as hate speech, harassment, and vulgar language. It then elevates severe violations and flags them to content moderation teams. ToxMod records every problematic chat instance and generates a transcript, analysis, and audio clip of it, so if a banned community member files an appeal, there’s a record of the offense on file. As more instances are triaged and developers provide input, Modulate continuously improves model accuracy.
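The triage step described above can be illustrated with a minimal Python sketch. It is not Modulate's implementation: the `Detection` record, the numeric `severity` score, and the `escalate_at` threshold are all hypothetical, and only the category names come from the article.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    clip_id: str
    category: str     # e.g. "hate speech", "harassment", "vulgar language"
    severity: float   # hypothetical model score between 0 and 1
    transcript: str   # kept on file so an appeal can be reviewed later


def triage(detections: List[Detection], escalate_at: float) -> List[Detection]:
    """Return detections at or above the threshold, most severe first."""
    severe = [d for d in detections if d.severity >= escalate_at]
    return sorted(severe, key=lambda d: d.severity, reverse=True)


# Illustrative data only; real scores would come from the ML models.
detections = [
    Detection("clip-1", "vulgar language", 0.35, "..."),
    Detection("clip-2", "harassment", 0.92, "..."),
    Detection("clip-3", "hate speech", 0.97, "..."),
]

for d in triage(detections, escalate_at=0.9):
    print(d.clip_id, d.category)
```

In this sketch, only the two highest-scoring clips reach the moderation team, while the lower-severity clip stays on record without being escalated.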

“Harassment runs rampant in gaming and digital communities, and as a moderation team, you could be tasked with managing thousands of individual instances per minute, an impossible feat through human review alone. ToxMod is changing the game, identifying harassment on an unprecedented scale with exceptional accuracy, which AWS helped us achieve,” explains Huffman. “We’ve trained ToxMod to the point that it can find that needle in a haystack of problematic behavior, categorize it into a bucket, and alert the client so their moderation team can evaluate and take appropriate action in a cost-efficient way.”

Responsible AI is a key component of ToxMod’s development, which demands myriad data sources for training its ML models and diverse perspectives for understanding that data. Collaborating with data labelers worldwide, who get access to and review anonymized slices of ToxMod data, Modulate trains, tunes, and validates its models against diverse data representing an array of contexts. The labelers work to understand what’s happening in an interaction and apply their background and knowledge of gaming cultures to supply Modulate with the appropriate metadata to bring into its AI systems. The systems then perform more equitably across circumstances to get it right nearly every time, no matter who is listening.

“Responsible training and validation processes are so important, but it’s something people miss when talking about deploying an AI system. You think it’s easy to just grab an off-the-shelf analysis model or LLM [large language model], apply your data, and it will get it right most of the time, but ML models inherently have random and systematic errors,” Huffman says. “If one group or subset is unduly harmed by low accuracy from a model, that’s a failure, and we go back to the drawing board to re-architect and retrain it.”
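One common way to check whether a group is "unduly harmed by low accuracy" is to compute accuracy separately for each group and compare the results. The Python sketch below shows that general idea under stated assumptions: the group labels, the sample rows, and the `max_gap` tolerance are hypothetical, and the article does not describe Modulate's actual validation method.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each row: (group label, model prediction, human-labeled ground truth)
Row = Tuple[str, bool, bool]


def accuracy_by_group(rows: List[Row]) -> Dict[str, float]:
    """Fraction of correct predictions within each group."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for group, predicted, actual in rows:
        totals[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / totals[group] for group in totals}


def underperforming(accuracy: Dict[str, float], max_gap: float) -> List[str]:
    """Groups whose accuracy trails the best group by more than max_gap."""
    best = max(accuracy.values())
    return sorted(g for g, a in accuracy.items() if best - a > max_gap)


# Illustrative rows only.
rows = [
    ("group_a", True, True), ("group_a", False, False),
    ("group_a", True, True), ("group_a", False, False),
    ("group_b", True, False), ("group_b", False, False),
    ("group_b", True, True), ("group_b", False, True),
]

scores = accuracy_by_group(rows)
print(scores)
print(underperforming(scores, max_gap=0.1))
```

A non-empty result from `underperforming` would be the signal, in this sketch, to revisit the training data or the model before deployment.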

The technology underpinning ToxMod

Audio ingestion is crucial to ToxMod, which Modulate achieves by integrating its software development kit (SDK) with the voice chat infrastructure of each game or platform it serves. ToxMod uses the SDK to encode voice chats in short buffers and send them over the internet in packages to AWS, where AWS Lambda functions save the incoming data. From there, the tool analyzes the audio on Amazon Elastic Compute Cloud (Amazon EC2) G5g instances, which feature AWS Graviton2 processors and NVIDIA T4G Tensor Core GPUs and run a variety of its ML audio models. To minimize overhead, it batches audio clips into queues for processing. Auto-scaling groups connected to these queues then efficiently scale up or down to accommodate peaks and dips in traffic.
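The batching and queue-driven scaling described above can be sketched in a few lines of Python. This is a simplified illustration, not ToxMod's code: the `AudioClip` type, the batch size, and the `batches_per_worker` ratio are assumptions, and no AWS APIs are called. In practice, an auto-scaling group would apply a policy of this kind to real queue metrics.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class AudioClip:
    clip_id: str
    payload: bytes  # encoded audio buffer (placeholder contents here)


def make_batches(clips: List[AudioClip], batch_size: int) -> List[List[AudioClip]]:
    """Group clips into batches of at most batch_size, preserving arrival order."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    return [clips[i:i + batch_size] for i in range(0, len(clips), batch_size)]


def desired_workers(queued_batches: int, batches_per_worker: int,
                    min_workers: int, max_workers: int) -> int:
    """Target worker count for a given backlog, clamped to the group's bounds."""
    needed = math.ceil(queued_batches / batches_per_worker)
    return max(min_workers, min(max_workers, needed))


clips = [AudioClip(f"clip-{n}", b"\x00" * 16) for n in range(10)]
batches = make_batches(clips, batch_size=4)
print([len(b) for b in batches])  # [4, 4, 2]
print(desired_workers(len(batches), batches_per_worker=2,
                      min_workers=1, max_workers=8))  # 2
```

Batching amortizes per-request overhead on the GPU instances, and sizing the fleet from the queue backlog lets capacity follow traffic rather than a fixed schedule.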

“The scale of what ToxMod can do with AWS is so huge that it would be untenable for any game studio or digital platform to achieve on its own,” Huffman notes. “We’ve built this impressive triage structure using powerful, cost-effective, serverless capabilities from AWS. This includes databases that can be used from serverless infrastructures, S3 storage, and a vast array of Graviton instances that churn through all the content to run some really powerful models that grasp gaming voice chat.”

Collaborating and scaling with AWS

The AWS team worked closely with Modulate from ToxMod’s outset, especially as they began building the underlying architecture and triage layers. Huffman and team leaned on AWS for expertise in determining the solution’s GPU requirements, completing an architecture review, identifying scaling pain points, and solving for them. “The AWS team was so proactive in talking to us and bringing up all the considerations we didn’t even think about. Then, they helped us find the right AWS technologies and services to solve for them,” he adds. “Even in our most crucial seed stage, when we were starting to partner with influential gaming companies, AWS was there; they’re not just a vendor but a strong partner.”

After securing Series A funding and activating its first Call of Duty deployment, Modulate went into ramp-up mode. The company worked with AWS to diversify GPU instances across different regions and hardware types to maintain availability. “AWS first suggested a mixture of spot and on-demand instances to curb costs, and when I expressed availability concerns, they introduced us to auto scaling groups,” Huffman shares. “It’s that kind of expertise that’s so valuable, especially when we’re training pipelines; they brought us all these ideas to optimize costs and help us figure out the best approach, and it’s paid off in a huge way. We’ve been able to keep our services cost competitive and deliver maximum value to our customers even though we’re operating at 10,000 times the scale than we did when we started.”

Expanding beyond gaming

As ToxMod adoption has grown in gaming, digital platforms outside the industry are taking note and asking Modulate how they could apply ToxMod to improve voice chat moderation in other digital environments. Huffman says the company has seen a lot of interest from the gig economy, especially from food and grocery delivery and ride share apps, where person-to-person exchanges can turn from unpleasant to unsafe quickly. Getting ahead of the demand, Modulate is already working with these app developers to give them the tools to proactively detect when these potentially problematic interactions occur and notify the platform, so it can intervene and cancel the order or ride.

Huffman also sees potential for ToxMod to understand the nuances of conversations in other online person-to-person voice communications, such as identifying and preventing scams where a voice impersonates customer support or presents a faulty investment opportunity. “We’ve established this incredible set of ML models that understand basic things about a conversation and make judgment calls on whether or not to flag it as problematic. While our initial definition of problematic was tailored to the gaming community, we’ve since expanded that definition and tuned the underlying models, structure, and capabilities to support other scenarios,” he notes. “The scalability of AWS has been huge to these efforts. We’ve never encountered a scaling problem that couldn’t be solved by using AWS.”

Continued experimentation with AI generates future opportunity

As Modulate advances ToxMod and expands into new markets, it has also started exploring AI toolsets in managed AWS services like Amazon Bedrock and Amazon SageMaker to experiment, iterate, and optimize its ML models. Integrating these technologies into its training and iteration pipeline allows the Modulate team to quickly test a huge variety of models. The team can then generate standardized comparisons between them to see where the current models stand in terms of cost and accuracy.
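A standardized comparison of this kind typically means scoring every candidate on the same labeled data and reporting the same metrics for each. The Python sketch below shows the idea with toy stand-ins: the `Candidate` type, the two example models, the per-call costs, and the labeled phrases are all hypothetical and do not reflect Modulate's pipeline or any Amazon Bedrock or Amazon SageMaker API.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Candidate:
    name: str
    predict: Callable[[str], bool]  # True means "flag as problematic"
    cost_per_call: float            # hypothetical cost in USD


def compare(candidates: List[Candidate],
            labeled: List[Tuple[str, bool]]) -> List[Tuple[str, float, float]]:
    """Score each candidate on the same data: (name, accuracy, total cost).

    Results are ordered by accuracy (highest first), then by cost (lowest first).
    """
    rows = []
    for c in candidates:
        hits = sum(c.predict(text) == label for text, label in labeled)
        rows.append((c.name, hits / len(labeled), c.cost_per_call * len(labeled)))
    return sorted(rows, key=lambda r: (-r[1], r[2]))


# Toy evaluation set and toy models, for illustration only.
labeled = [
    ("you are awful", True),
    ("nice shot", False),
    ("awful play, sorry", False),
    ("good game", False),
]
candidates = [
    Candidate("always_clean", lambda text: False, cost_per_call=0.0),
    Candidate("phrase_match", lambda text: "you are" in text, cost_per_call=0.002),
]

for name, accuracy, cost in compare(candidates, labeled):
    print(f"{name}: accuracy={accuracy:.2f}, cost=${cost:.3f}")
```

Holding the evaluation set fixed is what makes the numbers comparable, so a new model can be weighed against the current one on both accuracy and cost before it goes to production.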

“The flexibility and speed that managed AWS AI services like Amazon Bedrock and SageMaker provide are huge and crucial to our ML and research teams. It would’ve taken months for them to implement these models from scratch,” Huffman explains. “By developing a pipeline of easily managed service experimentation, we can compare against our existing capabilities, and determine what we want to bring into production. For a company like us, the cost efficiency this supports alongside the accuracy, speed of processing, and ability to correctly encode that code of conduct is vital.”

Regardless of any future experiments, Modulate remains confident that it can succeed with the AWS team and account managers by its side. Huffman concludes, “AWS is so incredibly helpful. They ensure that we can achieve the scale and availability that we need to support our customers, and at the end of the day, we value that most above all in a technology partner.”

Find out more about the services ToxMod is using to optimize its AI and ML technology by reading how Modulate reduced infrastructure costs by a factor of five with Amazon EC2 G5g instances.

Contact an AWS Representative to learn how we can help accelerate your business.

Author: AWS Editorial Team