Book Bits: 7 September 2024

TutoSartup excerpt from this article:

By purchasing books through this site, you provide support for The Capital Spectator’s free content... Press) Economic inequality is one of the most daunting challenges of our time, with public debate often turning to questions of whether it is an inevitable outcome of economic systems and what, if anything, can be done about it... ● How Economics Explains the World: A Short History of Humani...

How Vidmob is using generative AI to transform its creative data landscape

TutoSartup excerpt from this article:

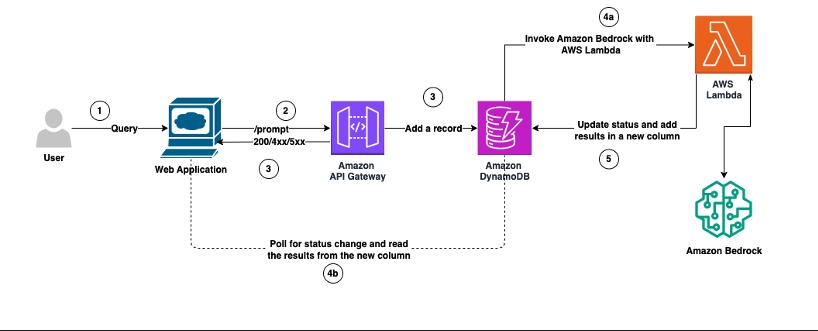

For example, insights from creative data (advertising analytics) using campaign performance can not only uncover which creative works best but also help you understand the reasons behind its success... In this post, we illustrate how Vidmob, a creative data company, worked with the AWS Generative AI Innovation Center (GenAIIC) team to uncover meaningful insights at scale within creative data usi...

Fine-tune Llama 3 for text generation on Amazon SageMaker JumpStart

TutoSartup excerpt from this article:

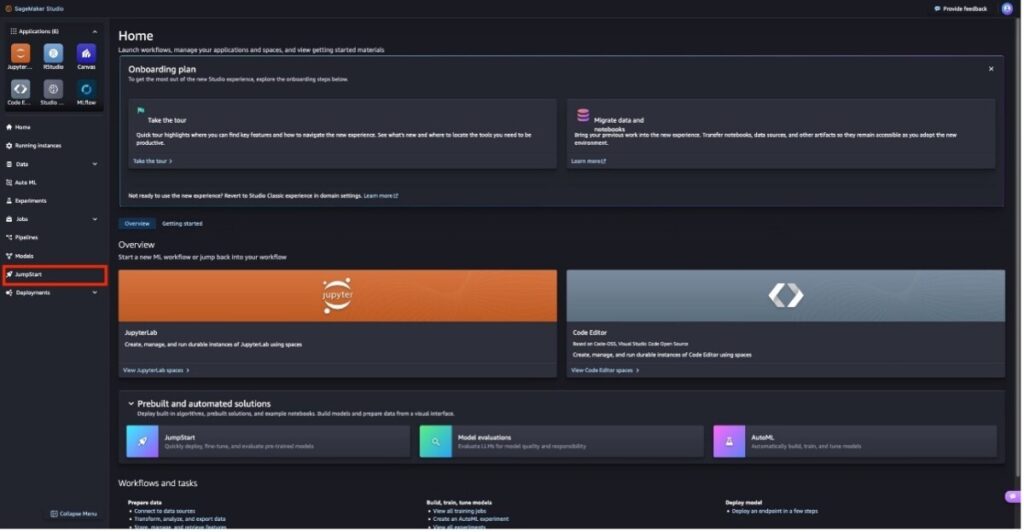

\n\n### Instruction:\n{dialogue}\n\n", "completion": " {summary}" } { "dialogue": "#Person1#: Where do these flower vases come from? \n#Person2#: They are made a town nearby... \n#Person1#: Are they breakable? \n#Person2#: No... \n#Person1#: No wonder it's so expensive... ", "summary": "#Person2# explains the flower vases' materials and advantages and #Person1# unde...
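
The excerpt shows the tail of a prompt template in which `{dialogue}` and `{summary}` are placeholders filled from each training record. A minimal Python sketch of that substitution follows, assuming `str.format`-style placeholders and the `prompt`/`completion` keys visible in the excerpt. `PROMPT_PREFIX` is a hypothetical stand-in for the truncated opening of the prompt, and the helper names are illustrative, not part of SageMaker JumpStart.

```python
import json

# Hypothetical placeholder: the excerpt is cut off before the opening text of the prompt.
PROMPT_PREFIX = "<instruction preamble>"

# Template shape visible in the excerpt: the prompt ends with "### Instruction:" plus the
# dialogue, and the completion is the summary preceded by a single space.
TEMPLATE = {
    "prompt": PROMPT_PREFIX + "\n\n### Instruction:\n{dialogue}\n\n",
    "completion": " {summary}",
}


def render_example(record: dict) -> dict:
    """Fill the template placeholders from one dialogue/summary record."""
    return {
        "prompt": TEMPLATE["prompt"].format(dialogue=record["dialogue"]),
        "completion": TEMPLATE["completion"].format(summary=record["summary"]),
    }


def to_jsonl(records: list) -> str:
    """Serialize rendered examples as JSON Lines, one example per line."""
    return "\n".join(json.dumps(render_example(r)) for r in records)


# Sample record abridged from the excerpt (the summary stops before the excerpt's truncation).
sample = {
    "dialogue": "#Person1#: Where do these flower vases come from? \n#Person2#: They are made a town nearby.",
    "summary": "#Person2# explains the flower vases' materials and advantages.",
}
print(to_jsonl([sample]))
```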

Ground truth curation and metric interpretation best practices for evaluating generative AI question answering using FMEval

TutoSartup excerpt from this article:

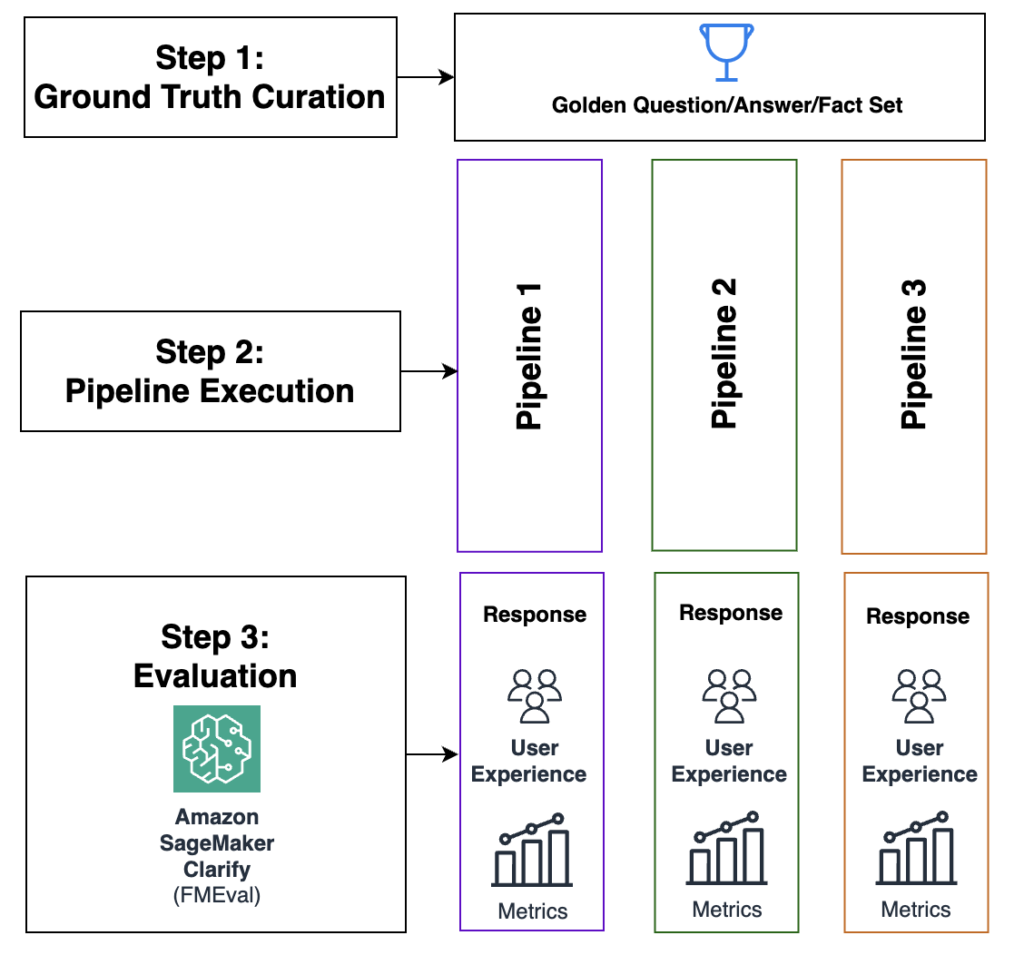

When golden answers are curated properly, a low recall suggests strong deviation between the ground truth and the model response, whereas a high recall suggests strong agreement... However, building and deploying such assistants with responsible AI best practices requires a robust ground truth and evaluation framework to make sure they meet quality standards and user experience expectations, as w...
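
To make the recall reading concrete, the sketch below computes a simple word-overlap recall: the share of ground-truth tokens, counted with multiplicity, that also appear in the model response. It is a simplified stand-in for illustration, not FMEval's implementation; the tokenizer (lowercased alphanumeric words) and the function names are assumptions.

```python
import re
from collections import Counter


def tokenize(text: str) -> list:
    # Simplified normalization: lowercase, keep runs of letters and digits.
    return re.findall(r"[a-z0-9]+", text.lower())


def token_recall(ground_truth: str, response: str) -> float:
    """Fraction of ground-truth tokens (with multiplicity) found in the response."""
    gt = Counter(tokenize(ground_truth))
    resp = Counter(tokenize(response))
    total = sum(gt.values())
    if total == 0:
        return 0.0
    # Counter & Counter keeps the minimum count of each shared token.
    overlap = sum((gt & resp).values())
    return overlap / total


golden = "The vase is made in a nearby town"
print(token_recall(golden, "The vase comes from a nearby town"))  # 5 of 8 tokens -> 0.625
print(token_recall(golden, "The vase is made in a nearby town, and it is quite expensive"))  # 1.0
```

The second call scores 1.0 even though the response adds content absent from the golden answer: recall only measures how much of the ground truth is covered, so it is usually read alongside a precision-style metric.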

Research Review | 6 September 2024 | Portfolio Risk Management

TutoSartup excerpt from this article:

Semivolatility-managed portfolios Daniel Batista da Silva (U...) July 2024 There is ample evidence that volatility management helps improve the risk-adjusted performance of momentum portfolios... However, it is less clear that it works for other factors and anomaly portfolios... We show that controlling by the upside and downside components of volatility yields more robust risk-adjusted performanc...
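
As a rough illustration of the quantities involved: realized downside (upside) semivariance sums the squared daily returns that are negative (positive), and the two parts add up to total realized variance. The Python sketch below computes both and applies a common form of volatility management, scaling next month's return by a constant `c` divided by the prior month's variance measure. Using downside semivariance alone, the weight cap `max_weight`, the toy data, and all function names are assumptions for illustration; the excerpt does not say how the paper combines the two components.

```python
def realized_semivariances(daily_returns: list) -> tuple:
    """Return (downside, upside) realized semivariance for one period of daily returns."""
    downside = sum(r * r for r in daily_returns if r < 0)
    upside = sum(r * r for r in daily_returns if r > 0)
    return downside, upside


def downside_managed(daily_by_month: list, monthly: list, c: float, max_weight: float = 5.0) -> list:
    """Scale month t+1's return by c / (month t downside semivariance), capped at max_weight.

    Only information from month t sets the weight for month t+1, so there is no look-ahead.
    """
    if len(daily_by_month) != len(monthly):
        raise ValueError("need one list of daily returns per monthly return")
    managed = []
    for t in range(len(monthly) - 1):
        downside, _ = realized_semivariances(daily_by_month[t])
        # A month with no negative days gets the cap instead of an infinite weight.
        weight = min(c / downside, max_weight) if downside > 0 else max_weight
        managed.append(weight * monthly[t + 1])
    return managed


# Hypothetical toy data: three months of daily returns and the matching monthly returns.
daily_by_month = [
    [0.01, -0.02, 0.03, -0.01],
    [-0.03, -0.04, 0.01, 0.02],
    [0.01, 0.01, 0.0, 0.02],
]
monthly = [0.01, -0.04, 0.04]
print(realized_semivariances(daily_by_month[0]))  # approximately (0.0005, 0.001)
print(downside_managed(daily_by_month, monthly, c=0.0005))  # approximately [-0.04, 0.008]
```

In practice `c` is often chosen so the managed series has the same unconditional volatility as the unmanaged one; here it is simply a fixed illustrative constant.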

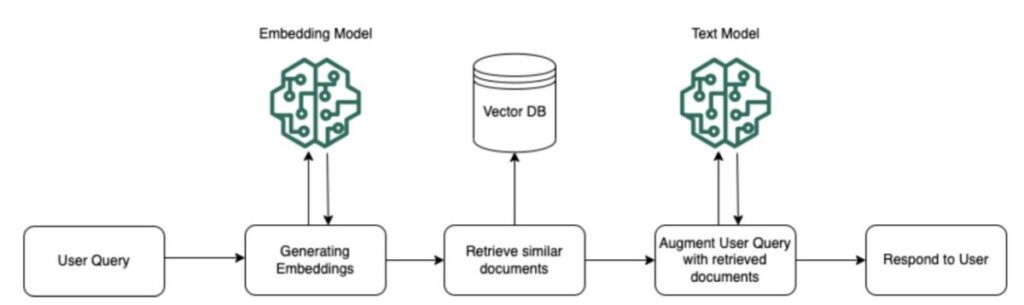

Build powerful RAG pipelines with LlamaIndex and Amazon Bedrock

TutoSartup excerpt from this article:

This necessitates more advanced RAG techniques on the query understanding, retrieval, and generation components in order to handle these failure modes... It provides a flexible and modular framework for building and querying document indexes, integrating with various LLMs, and implementing advanced RAG patterns... We discuss how to set up the following: Simple RAG pipeline – Set up a RAG...
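
The simple pipeline named in the excerpt follows a retrieve-then-generate pattern. The dependency-free Python sketch below shows only that flow and is not the LlamaIndex or Amazon Bedrock API: bag-of-words cosine similarity stands in for an embedding model, the document list and function names are hypothetical, and the call that would send the assembled prompt to an LLM is omitted.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": bag-of-words term counts (a real pipeline would use an embedding model).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list, k: int = 2) -> list:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, context: list) -> str:
    """Ground the question in the retrieved passages."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\n\nContext:\n{joined}\n\nQuestion: {query}\nAnswer:"


# Hypothetical corpus for illustration.
docs = [
    "LlamaIndex builds and queries document indexes.",
    "Amazon Bedrock provides access to foundation models.",
    "Semivolatility splits variance into upside and downside parts.",
]
query = "What does LlamaIndex build?"
context = retrieve(query, docs, k=1)
prompt = build_prompt(query, context)
print(prompt)
```

The advanced patterns the excerpt mentions would change individual stages of this flow (how the query is interpreted, how passages are retrieved and ranked, how the answer is generated) rather than the overall shape.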

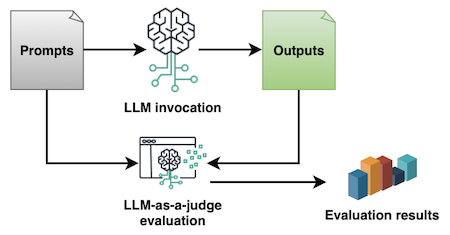

Evaluating prompts at scale with Prompt Management and Prompt Flows for Amazon Bedrock

TutoSartup excerpt from this article:

As generative artificial intelligence (AI) continues to revolutionize every industry, the importance of effective prompt optimization through prompt engineering techniques has become key to efficiently balancing the quality of outputs, response time, and costs... A high-quality prompt maximizes the chances of having a good response from the generative AI models... Beyond the most common evaluation...
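
The evaluation the excerpt describes, comparing prompts on output quality, response time, and cost, can be sketched as a provider-agnostic Python loop. This is not the Prompt Management or Prompt Flows API: `fake_model` is a hypothetical stub standing in for a model call, `exact_match` is one illustrative quality score, and prompt length in words is an assumed proxy for cost.

```python
import time
from dataclasses import dataclass
from statistics import mean
from typing import Callable


@dataclass
class PromptResult:
    name: str
    mean_score: float
    mean_latency_s: float
    mean_prompt_words: float


def evaluate_prompts(templates: dict, inputs: list,
                     call_model: Callable[[str], str],
                     score: Callable[[str, dict], float]) -> list:
    """Run every template over every input and aggregate quality, latency, and size."""
    results = []
    for name, template in templates.items():
        scores, latencies, sizes = [], [], []
        for item in inputs:
            prompt = template.format(**item)  # unused keys such as "expected" are ignored
            start = time.perf_counter()
            output = call_model(prompt)
            latencies.append(time.perf_counter() - start)
            scores.append(score(output, item))
            sizes.append(len(prompt.split()))
        results.append(PromptResult(name, mean(scores), mean(latencies), mean(sizes)))
    # Highest quality first; among ties, prefer shorter (cheaper) prompts.
    return sorted(results, key=lambda r: (-r.mean_score, r.mean_prompt_words))


def fake_model(prompt: str) -> str:
    # Hypothetical stub: upper-cases the last line of the prompt.
    return prompt.strip().splitlines()[-1].upper()


def exact_match(output: str, item: dict) -> float:
    return 1.0 if output == item["expected"] else 0.0


templates = {
    "verbose": "Please convert the following text to upper case.\n{text}",
    "terse": "{text}",
    "trailing_instruction": "{text}\nAnswer in upper case:",
}
inputs = [
    {"text": "hello", "expected": "HELLO"},
    {"text": "prompt flows", "expected": "PROMPT FLOWS"},
]
for r in evaluate_prompts(templates, inputs, fake_model, exact_match):
    print(r.name, r.mean_score, r.mean_prompt_words)
```

Sorting on quality first and prompt size second is one simple way to express the trade-off; a weighted score over quality, latency, and cost would be an equally reasonable design.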