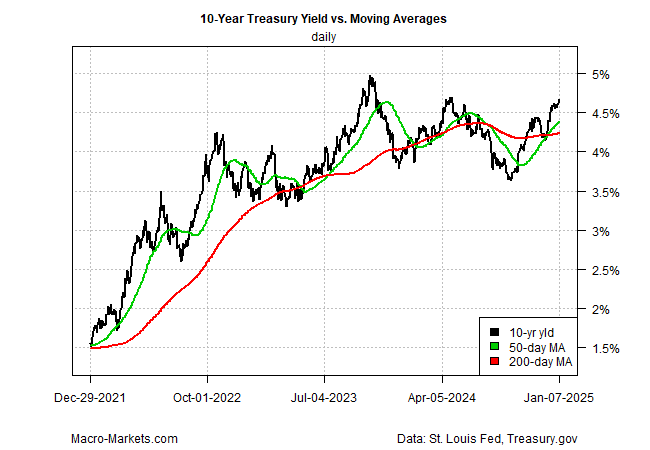

Are Treasury Yields Flashing A Warning Sign For Inflation?

TutoSartup excerpt from this article:

The bond market is paying attention, or so the renewed rise in the US 10-year Treasury yield suggests... The benchmark 10-year rate jumped to 4... Meanwhile, the policy-sensitive 2-year yield ticked up to 4... As a result, the 2-year rate is essentially trading in line with the current Fed funds target rate, which is pegged at a 4... This is the first time in nearly two years that the 2-year...

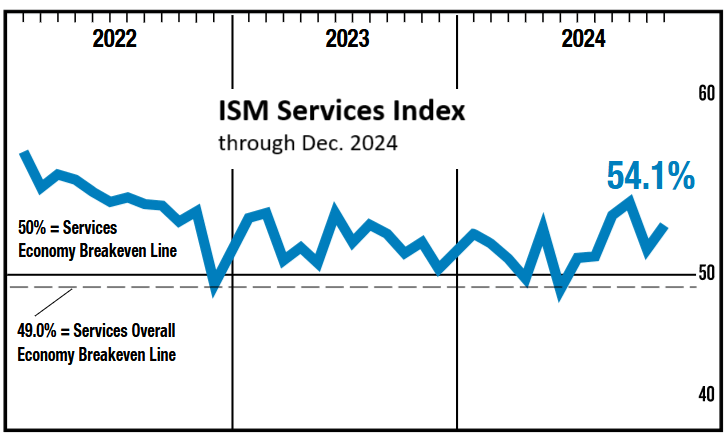

Macro Briefing: 8 January 2025

TutoSartup excerpt from this article:

The ongoing rebound in the one-year growth rate of broad US money supply (M2) suggests that reflation risk persists, according to a research note from TMC Research, a division of The Milwaukee Co... “Year-over-year growth in US broad money supply continued to accelerate through November...7% annual pace marks the fastest pace in nearly 2-1/2 years… The rebound in money supply growth comes at ...

Announcing the new AWS Asia Pacific (Thailand) Region

TutoSartup excerpt from this article:

Today, we’re pleased to announce that the AWS Asia Pacific (Thailand) Region is now generally available with three Availability Zones and API name ap-southeast-7... The AWS Asia Pacific (Thailand) Region is the first infrastructure Region in Thailand and the fourteenth Region in Asia Pacific, joining existing Regions in Hong Kong, Hyderabad, Jakarta, Malaysia, Melbourne, Mumbai, Osaka, Seoul, S...

Align and monitor your Amazon Bedrock powered insurance assistance chatbot to responsible AI principles with AWS Audit Manager

TutoSartup excerpt from this article:

Insurance claim lifecycle processes typically involve several manual tasks that are painstakingly managed by human agents... An Amazon Bedrock-powered insurance agent can assist human agents and improve existing workflows by automating repetitive actions as demonstrated in the example in this post, which can create new claims, send pending document reminders for open claims, gather claims eviden...

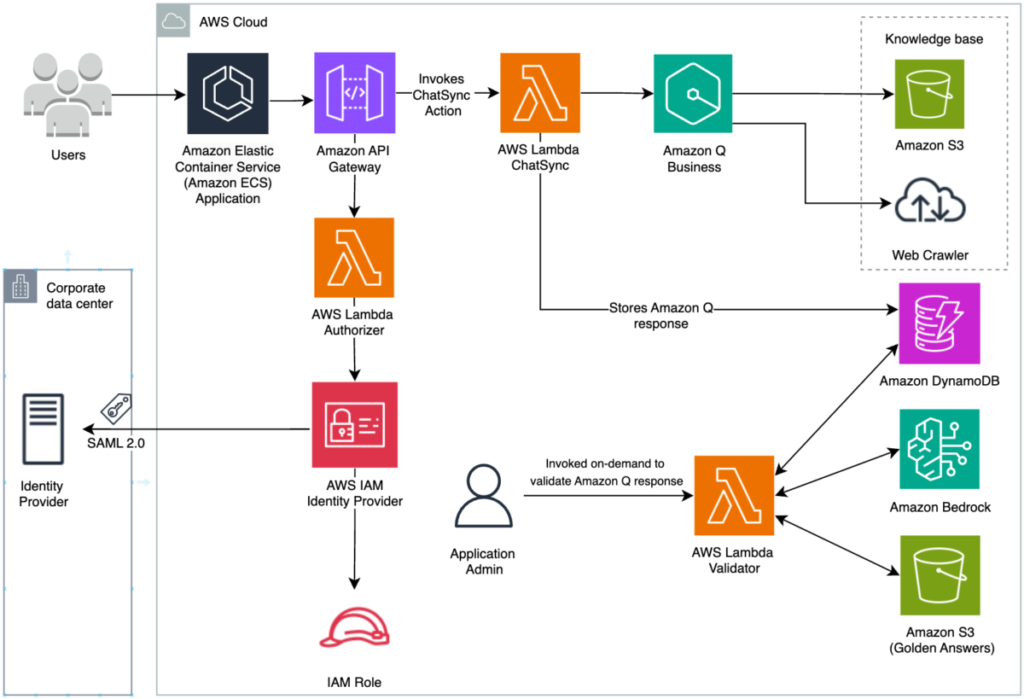

London Stock Exchange Group uses Amazon Q Business to enhance post-trade client services

TutoSartup excerpt from this article:

Amazon Q Business is a generative AI-powered assistant that can answer questions, provide summaries, generate content, and securely complete tasks based on data and information in your enterprise systems... Amazon Q Business enables employees to become more creative, data-driven, efficient, organized, and productive... In this blog post, we explore a client services agent assistant application d...

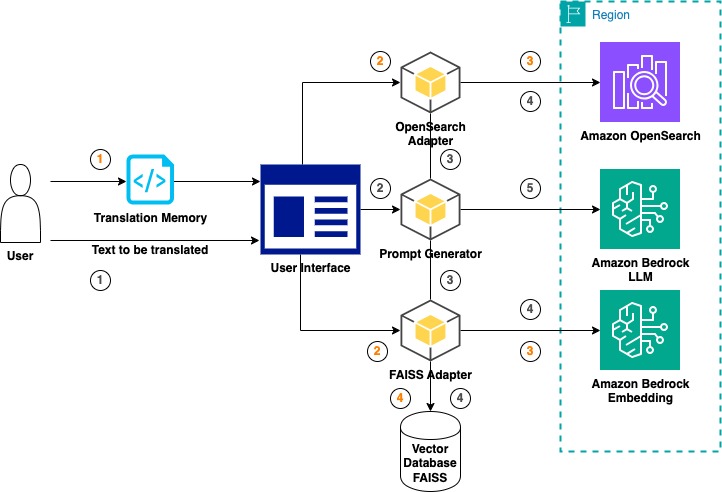

Evaluate large language models for your machine translation tasks on AWS

TutoSartup excerpt from this article:

Large language models (LLMs) have demonstrated promising capabilities in machine translation (MT) tasks... Depending on the use case, they are able to compete with neural translation models such as Amazon Translate... LLMs particularly stand out for their natural ability to learn from the context of the input text, which allows them to pick up on cultural cues and produce more natural sounding tra...

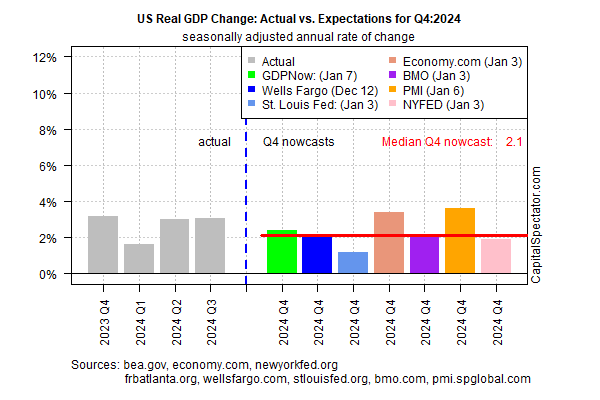

US Q4 GDP Growth Still On Track To Slow After Strong Q3 Rise

TutoSartup excerpt from this article:

1% (real annualized rate), based on the median estimate...1% median nowcast is unchanged from the previous estimate published on Dec... The recent stability in the median estimate at this late date for Q4 analysis offers a degree of confidence for expecting growth in the 2% range...By some accounts, there’s room for stronger estimates... Based on the median nowcast from several sources, outp...
