Scale AI training and inference for drug discovery through Amazon EKS and Karpenter

This is a guest post co-written with the leadership team of Iambic Therapeutics.

Iambic Therapeutics is a drug discovery startup with a mission to create innovative AI-driven technologies to bring better medicines to cancer patients, faster.

Our advanced generative and predictive artificial intelligence (AI) tools enable us to search the vast space of possible drug molecules faster and more effectively. Our technologies are versatile and applicable across therapeutic areas, protein classes, and mechanisms of action. Beyond creating differentiated AI tools, we have established an integrated platform that merges AI software, cloud-based data, scalable computation infrastructure, and high-throughput chemistry and biology capabilities. The platform both enables our AI—by supplying data to refine our models—and is enabled by it, capitalizing on opportunities for automated decision-making and data processing.

We measure success by our ability to produce superior clinical candidates to address urgent patient need, at unprecedented speed: we advanced from program launch to clinical candidates in just 24 months, significantly faster than our competitors.

In this post, we focus on how we used Karpenter on Amazon Elastic Kubernetes Service (Amazon EKS) to scale AI training and inference, which are core elements of the Iambic discovery platform.

The need for scalable AI training and inference

Every week, Iambic performs AI inference across dozens of models and millions of molecules, serving two primary use cases:

- Medicinal chemists and other scientists use our web application, Insight, to explore chemical space, access and interpret experimental data, and predict properties of newly designed molecules. All of this work is done interactively in real time, creating a need for inference with low latency and medium throughput.

- At the same time, our generative AI models automatically design molecules targeting improvement across numerous properties, searching millions of candidates, and requiring enormous throughput and medium latency.

Guided by AI technologies and expert drug hunters, our experimental platform generates thousands of unique molecules each week, and each is subjected to multiple biological assays. The generated data points are automatically processed and used to fine-tune our AI models every week. Initially, our model fine-tuning took hours of CPU time, so a framework for scaling model fine-tuning on GPUs was imperative.

Our deep learning models have non-trivial requirements: they are gigabytes in size, are numerous and heterogeneous, and require GPUs for fast inference and fine-tuning. Looking to cloud infrastructure, we needed a system that allows us to access GPUs, scale up and down quickly to handle spiky, heterogeneous workloads, and run large Docker images.

We wanted to build a scalable system to support AI training and inference. We use Amazon EKS and were looking for the best solution to auto scale our worker nodes. We chose Karpenter for Kubernetes node auto scaling for a number of reasons:

- Ease of integration with Kubernetes, using Kubernetes semantics to define node requirements and pod specs for scaling

- Low-latency scale-out of nodes

- Ease of integration with our infrastructure as code tooling (Terraform)

The node provisioners integrate readily with Amazon EKS and other AWS resources such as Amazon Elastic Compute Cloud (Amazon EC2) instances and Amazon Elastic Block Store (Amazon EBS) volumes. The Kubernetes semantics used by the provisioners support directed scheduling using Kubernetes constructs such as taints and tolerations and affinity or anti-affinity specifications; they also give us control over the number and types of GPU instances that Karpenter may provision.

Solution overview

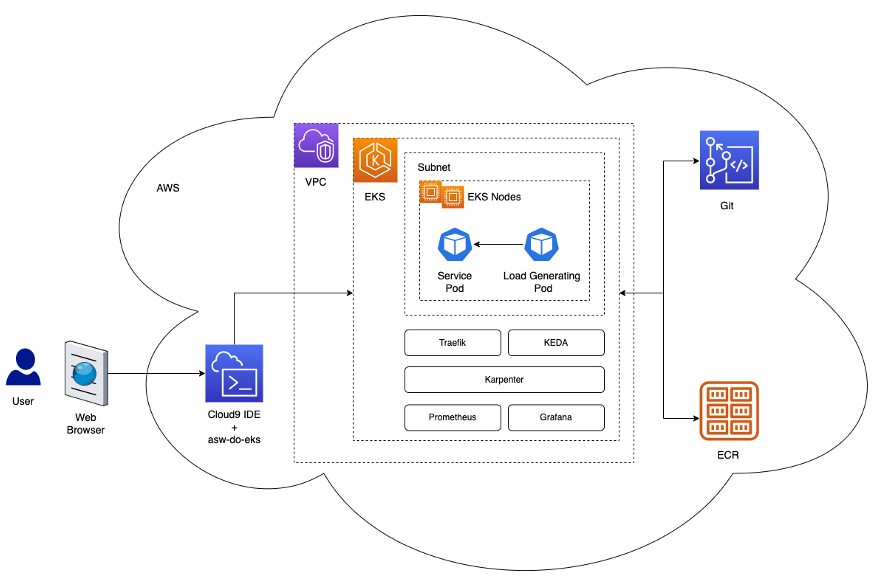

In this section, we present a generic architecture that is similar to the one we use for our own workloads, which allows elastic deployment of models using efficient auto scaling based on custom metrics.

The following diagram illustrates the solution architecture.

The architecture deploys a simple service in a Kubernetes pod within an EKS cluster. This could be a model inference, data simulation, or any other containerized service, accessible by HTTP request. The service is exposed behind a reverse proxy using Traefik. The reverse proxy collects metrics about calls to the service and exposes them via a standard metrics API to Prometheus. Kubernetes Event-driven Autoscaling (KEDA) is configured to automatically scale the number of service pods, based on the custom metrics available in Prometheus. Here we use the number of requests per second as a custom metric. The same architectural approach applies if you choose a different metric for your workload.

Karpenter monitors for any pending pods that can’t run due to lack of sufficient resources in the cluster. If such pods are detected, Karpenter adds more nodes to the cluster to provide the necessary resources. Conversely, if there are more nodes in the cluster than what is needed by the scheduled pods, Karpenter removes some of the worker nodes and the pods get rescheduled, consolidating them on fewer instances. The number of HTTP requests per second and number of nodes can be visualized using a Grafana dashboard. To demonstrate auto scaling, we run one or more simple load-generating pods, which send HTTP requests to the service using curl.

Solution deployment

In the step-by-step walkthrough, we use AWS Cloud9 as an environment to deploy the architecture. This enables all steps to be completed from a web browser. You can also deploy the solution from a local computer or EC2 instance.

To simplify deployment and improve reproducibility, we follow the principles of the do-framework and the structure of the depend-on-docker template. We clone the aws-do-eks project and, using Docker, we build a container image that is equipped with the necessary tooling and scripts. Within the container, we run through all the steps of the end-to-end walkthrough, from creating an EKS cluster with Karpenter to scaling EC2 instances.

For the example in this post, we use the following EKS cluster manifest:

```yaml
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: do-eks-yaml-karpenter
  version: '1.28'
  region: us-west-2
  tags:
    karpenter.sh/discovery: do-eks-yaml-karpenter
iam:
  withOIDC: true
addons:
  - name: aws-ebs-csi-driver
    version: v1.26.0-eksbuild.1
    wellKnownPolicies:
      ebsCSIController: true
managedNodeGroups:
  - name: c5-xl-do-eks-karpenter-ng
    instanceType: c5.xlarge
    instancePrefix: c5-xl
    privateNetworking: true
    minSize: 0
    desiredCapacity: 2
    maxSize: 10
    volumeSize: 300
    iam:
      withAddonPolicies:
        cloudWatch: true
        ebs: true
```

This manifest defines a cluster named do-eks-yaml-karpenter with the EBS CSI driver installed as an add-on. A managed node group with two c5.xlarge nodes is included to run system pods that are needed by the cluster. The worker nodes are hosted in private subnets, and the cluster API endpoint is public by default.

You could also use an existing EKS cluster instead of creating one. We deploy Karpenter by following the instructions in the Karpenter documentation, or by running the following script, which automates the deployment instructions.

The following code shows the Karpenter configuration we use in this example:

```yaml
apiVersion: karpenter.sh/v1beta1
kind: NodePool
metadata:
  name: default
spec:
  template:
    metadata:
      labels:
        cluster-name: do-eks-yaml-karpenter
      annotations:
        purpose: karpenter-example
    spec:
      nodeClassRef:
        apiVersion: karpenter.k8s.aws/v1beta1
        kind: EC2NodeClass
        name: default
      requirements:
        # Allow both Spot and On-Demand capacity
        - key: karpenter.sh/capacity-type
          operator: In
          values:
            - spot
            - on-demand
        # Allow CPU (c, m, r) and GPU (g, p) instance categories
        - key: karpenter.k8s.aws/instance-category
          operator: In
          values:
            - c
            - m
            - r
            - g
            - p
        # Only instance generations newer than 2
        - key: karpenter.k8s.aws/instance-generation
          operator: Gt
          values:
            - '2'
  disruption:
    consolidationPolicy: WhenUnderutilized
    # Alternative policy: remove only empty nodes after a delay
    #consolidationPolicy: WhenEmpty
    #consolidateAfter: 30s
    expireAfter: 720h
---
apiVersion: karpenter.k8s.aws/v1beta1
kind: EC2NodeClass
metadata:
  name: default
spec:
  amiFamily: AL2
  subnetSelectorTerms:
    - tags:
        karpenter.sh/discovery: "do-eks-yaml-karpenter"
  securityGroupSelectorTerms:
    - tags:
        karpenter.sh/discovery: "do-eks-yaml-karpenter"
  role: "KarpenterNodeRole-do-eks-yaml-karpenter"
  tags:
    app: autoscaling-test
  # Larger root volume to hold multi-GB deep learning container images
  blockDeviceMappings:
    - deviceName: /dev/xvda
      ebs:
        volumeSize: 80Gi
        volumeType: gp3
        iops: 10000
        deleteOnTermination: true
        throughput: 125
  detailedMonitoring: true
```

We define a default Karpenter NodePool with the following requirements:

- Karpenter can launch instances from both spot and on-demand capacity pools

- Instances must be from the “c” (compute optimized), “m” (general purpose), “r” (memory optimized), or “g” and “p” (GPU accelerated) computing families

- Instance generation must be greater than 2; for example, g3 is acceptable, but g2 is not
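
To make the category and generation requirements concrete, the following Python sketch applies them to a handful of instance type names. It is only an illustration: the helper names (parse_instance_type, is_allowed) and the candidate list are hypothetical, the name parsing is a simplification that assumes names of the form letters, digits, optional suffix, and Karpenter itself evaluates these requirements against instance metadata rather than by parsing names.

```python
import re

# Values taken from the NodePool requirements described above.
ALLOWED_CATEGORIES = {"c", "m", "r", "g", "p"}
MIN_GENERATION_EXCLUSIVE = 2  # operator Gt with value '2'


def parse_instance_type(instance_type: str) -> tuple[str, int]:
    """Split a name such as 'g4dn.xlarge' into category 'g' and generation 4.

    Simplified, hypothetical parsing: leading letters are the category and
    the digits that follow are the generation.
    """
    family = instance_type.split(".")[0]
    match = re.match(r"([a-z]+)(\d+)", family)
    if match is None:
        raise ValueError(f"unrecognized instance type: {instance_type}")
    return match.group(1), int(match.group(2))


def is_allowed(instance_type: str) -> bool:
    """Return True if the type satisfies both the category and generation rules."""
    category, generation = parse_instance_type(instance_type)
    return category in ALLOWED_CATEGORIES and generation > MIN_GENERATION_EXCLUSIVE


# Hypothetical candidate list, chosen to exercise each rule.
candidates = [
    "g2.2xlarge",   # rejected: generation 2 is not greater than 2
    "g3.4xlarge",   # accepted
    "g4dn.xlarge",  # accepted
    "c5.xlarge",    # accepted
    "t3.medium",    # rejected: category 't' is not in the allowed set
    "p3.8xlarge",   # accepted
    "m2.xlarge",    # rejected: generation 2
]
allowed = [t for t in candidates if is_allowed(t)]
print(allowed)
```

The capacity-type requirement is not modeled here, because it constrains how an instance is purchased rather than which instance type is chosen.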

The default NodePool also defines disruption policies. Underutilized nodes will be removed so pods can be consolidated to run on fewer or smaller nodes. Alternatively, we can configure empty nodes to be removed after the specified time period. The expireAfter setting specifies the maximum lifetime of any node, before it is stopped and replaced if necessary. This helps reduce security vulnerabilities as well as avoid issues that are typical for nodes with long uptimes, such as file fragmentation or memory leaks.

By default, Karpenter provisions nodes with a small root volume, which can be insufficient for running AI or machine learning (ML) workloads. Some of the deep learning container images can be tens of GB in size, and we need to make sure there is enough storage space on the nodes to run pods using these images. To do that, we define EC2NodeClass with blockDeviceMappings, as shown in the preceding code.

Karpenter is responsible for auto scaling at the cluster level. To configure auto scaling at the pod level, we use KEDA to define a custom resource called ScaledObject, as shown in the following code:

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: keda-prometheus-hpa
  namespace: hpa-example
spec:
  scaleTargetRef:
    name: php-apache
  minReplicaCount: 1
  cooldownPeriod: 30
  triggers:
    - type: prometheus
      metadata:
        serverAddress: http://prometheus-server.prometheus.svc.cluster.local:80
        metricName: http_requests_total
        threshold: '1'
        query: rate(traefik_service_requests_total{service="hpa-example-php-apache-80@kubernetes",code="200"}[2m])
```

The preceding manifest defines a ScaledObject named keda-prometheus-hpa, which scales the php-apache deployment and always keeps at least one replica running. It scales the pods of this deployment based on the http_requests_total metric, which Prometheus computes using the specified query, and adds pods so that each pod serves no more than one request per second on average. It scales down the replicas after the request load has been below the threshold for longer than 30 seconds.
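
As a rough illustration of how the threshold translates into a pod count, the following Python sketch computes a target replica count from a total request rate. It assumes the threshold is treated as a per-pod average target, so the raw target is the total rate divided by the threshold, rounded up. The function name and the max_replicas value of 100 are assumptions for this sketch (the manifest above does not set maxReplicaCount), and the real autoscaler also applies tolerances and stabilization windows that are not modeled here.

```python
import math


def desired_replicas(
    total_rate: float,
    threshold: float = 1.0,   # requests per second per pod, from the manifest
    min_replicas: int = 1,    # minReplicaCount from the manifest
    max_replicas: int = 100,  # assumed upper bound; not set in the manifest
) -> int:
    """Return the replica count needed so each pod serves <= threshold req/s."""
    raw = math.ceil(total_rate / threshold)
    # Clamp the raw target between the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(0.4))    # light load: stays at the minimum
print(desired_replicas(12.5))   # moderate load: rounds up to whole pods
print(desired_replicas(300.0))  # heavy load: capped by the assumed maximum
```

Under these assumptions, a light load keeps the single minimum replica, while a heavy load is limited by the upper bound rather than by the request rate.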

The deployment spec for our example service contains the following resource requests and limits:

```yaml
resources:
  limits:
    cpu: 500m
    nvidia.com/gpu: 1
  requests:
    cpu: 200m
    nvidia.com/gpu: 1
```

With this configuration, each of the service pods will use exactly one NVIDIA GPU. When new pods are created, they will be in Pending state until a GPU is available. Karpenter adds GPU nodes to the cluster as needed to accommodate the pending pods.
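
The relationship between pending pods and new GPU nodes can be sketched with simple arithmetic. The following Python example is a deliberately simplified model that counts only GPUs: it assumes every pod requests one GPU (as in the preceding spec) and that all new nodes are of a single instance type with a known GPU count. The function names are hypothetical, and Karpenter's actual provisioning considers all resource requests, many instance types, and price.

```python
import math


def unschedulable_pods(pending_pods: int, free_gpus: int, gpus_per_pod: int = 1) -> int:
    """Pods that stay Pending after the free GPUs in the cluster are used up."""
    schedulable = free_gpus // gpus_per_pod
    return max(0, pending_pods - schedulable)


def nodes_to_add(
    pending_pods: int,
    free_gpus: int,
    gpus_per_node: int,
    gpus_per_pod: int = 1,
) -> int:
    """Number of identical GPU nodes needed to run the remaining pods."""
    remaining = unschedulable_pods(pending_pods, free_gpus, gpus_per_pod)
    pods_per_node = gpus_per_node // gpus_per_pod
    return math.ceil(remaining / pods_per_node)


# One pending pod, no free GPUs, single-GPU nodes: one node is needed.
print(nodes_to_add(pending_pods=1, free_gpus=0, gpus_per_node=1))
# Ten pending pods, two free GPUs, 4-GPU nodes: eight pods remain, two nodes.
print(nodes_to_add(pending_pods=10, free_gpus=2, gpus_per_node=4))
# Three pending pods fit into four free GPUs: no new nodes.
print(nodes_to_add(pending_pods=3, free_gpus=4, gpus_per_node=8))
```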

A load-generating pod sends HTTP requests to the service with a pre-set frequency. We increase the number of requests by increasing the number of replicas in the load-generator deployment.

A full scaling cycle with utilization-based node consolidation is visualized in a Grafana dashboard. The following dashboard shows the number of nodes in the cluster by instance type (top), the number of requests per second (bottom left), and the number of pods (bottom right).

We start with just the two c5.xlarge CPU instances that the cluster was created with. Then we deploy one service instance, which requires a single GPU. Karpenter adds a g4dn.xlarge instance to accommodate this need. We then deploy the load generator, which causes KEDA to add more service pods and Karpenter adds more GPU instances. After optimization, the state settles on one p3.8xlarge instance with 8 GPUs and one g5.12xlarge instance with 4 GPUs.

When we scale the load-generating deployment to 40 replicas, KEDA creates additional service pods to maintain the required request load per pod. Karpenter adds g4dn.metal and g4dn.12xlarge nodes to the cluster to provide the needed GPUs for the additional pods. In the scaled state, the cluster contains 16 GPU nodes and serves about 300 requests per second. When we scale down the load generator to 1 replica, the reverse process takes place. After the cooldown period, KEDA reduces the number of service pods. Then as fewer pods run, Karpenter removes the underutilized nodes from the cluster and the service pods get consolidated to run on fewer nodes. When the load generator pod is removed, a single service pod on a single g4dn.xlarge instance with 1 GPU remains running. When we remove the service pod as well, the cluster is left in the initial state with only two CPU nodes.

We can observe this behavior when the NodePool has the setting consolidationPolicy: WhenUnderutilized.

With this setting, Karpenter dynamically configures the cluster with as few nodes as possible, while providing sufficient resources for all pods to run and also minimizing cost.

The scaling behavior shown in the following dashboard is observed when the NodePool consolidation policy is set to WhenEmpty, along with consolidateAfter: 30s.

In this scenario, nodes are stopped only when there are no pods running on them after the cool-off period. The scaling curve appears smooth, compared to the utilization-based consolidation policy; however, it can be seen that more nodes are used in the scaled state (22 vs. 16).
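
The difference between the two consolidation policies can be illustrated with a toy model. The following Python sketch is not Karpenter's algorithm; it assumes every pod uses exactly one GPU, represents each node as a (GPU capacity, GPUs in use) pair, and uses hypothetical node names and function names. The empty-only policy removes nodes with no pods, while the simplified utilization-based policy also drains the least-used node whenever its pods fit on the remaining nodes.

```python
Nodes = dict[str, tuple[int, int]]  # node name -> (GPU capacity, GPUs in use)


def remove_empty(nodes: Nodes) -> Nodes:
    """Toy WhenEmpty policy: keep only nodes that still run at least one pod."""
    return {name: (cap, used) for name, (cap, used) in nodes.items() if used > 0}


def consolidate(nodes: Nodes) -> Nodes:
    """Toy WhenUnderutilized policy: repeatedly drain the least-used node
    while its pods fit into the free GPUs of the other nodes."""
    nodes = remove_empty(nodes)
    while True:
        candidate = min(nodes, key=lambda name: nodes[name][1], default=None)
        if candidate is None:
            return nodes
        others = {name: v for name, v in nodes.items() if name != candidate}
        free = sum(cap - used for cap, used in others.values())
        moving = nodes[candidate][1]
        if moving > free:
            return nodes  # nothing more can be consolidated
        # Place the drained pods on the fullest remaining nodes first.
        for name in sorted(others, key=lambda n: others[n][0] - others[n][1]):
            cap, used = others[name]
            take = min(cap - used, moving)
            others[name] = (cap, used + take)
            moving -= take
        nodes = others


# Hypothetical cluster state after the load has dropped.
cluster = {"a": (8, 3), "b": (4, 1), "c": (4, 0), "d": (8, 2)}
print(len(remove_empty(cluster)))  # nodes kept by the empty-only policy
print(consolidate(cluster))        # nodes kept by the utilization-based policy
```

In this example the empty-only policy keeps three nodes, whereas the utilization-based policy packs all six pods onto a single node, which mirrors the qualitative difference in node counts described above.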

Overall, combining pod and cluster auto scaling makes sure that the cluster scales dynamically with the workload, allocating resources when needed and removing them when not in use, thereby maximizing utilization and minimizing cost.

Outcomes

Iambic used this architecture to enable efficient use of GPUs on AWS and migrate workloads from CPU to GPU. By using EC2 GPU powered instances, Amazon EKS, and Karpenter, we were able to enable faster inference for our physics-based models and fast experiment iteration times for applied scientists who rely on training as a service.

The following table summarizes some of the time metrics of this migration.

| Task | CPUs | GPUs |
| --- | --- | --- |
| Inference using diffusion models for physics-based ML models | 3,600 seconds | 100 seconds (due to inherent batching of GPUs) |
| ML model training as a service | 180 minutes | 4 minutes |

The following table summarizes some of our time and cost metrics.

| Task | CPUs (performance/cost) | GPUs (performance/cost) |
| --- | --- | --- |
| ML model training | 240 minutes average, $0.70 per training task | 20 minutes average, $0.38 per training task |
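
The figures in the two tables can be turned into speedup and cost-saving ratios with a few lines of arithmetic. The following Python sketch uses only the numbers reported above; the function and variable names are illustrative.

```python
def speedup(cpu_time: float, gpu_time: float) -> float:
    """How many times faster the GPU run is (both times in the same unit)."""
    return cpu_time / gpu_time


def cost_saving_pct(cpu_cost: float, gpu_cost: float) -> float:
    """Relative cost reduction of the GPU run, in percent."""
    return 100 * (cpu_cost - gpu_cost) / cpu_cost


# Time metrics: inference in seconds, training as a service in minutes.
inference_speedup = speedup(3600, 100)
training_service_speedup = speedup(180, 4)

# Time and cost metrics for ML model training.
training_speedup = speedup(240, 20)
training_saving = cost_saving_pct(0.70, 0.38)

print(f"Inference: {inference_speedup:.0f}x faster")
print(f"Training as a service: {training_service_speedup:.0f}x faster")
print(f"ML model training: {training_speedup:.0f}x faster, "
      f"{training_saving:.1f}% cheaper per task")
```

By this calculation, inference is 36 times faster and training as a service is 45 times faster on GPUs, while ML model training is 12 times faster at roughly 46% lower cost per task.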

Summary

In this post, we showcased how Iambic used Karpenter and KEDA to scale our Amazon EKS infrastructure to meet the latency requirements of our AI inference and training workloads. Karpenter and KEDA are powerful open source tools that help auto scale EKS clusters and workloads running on them. This helps optimize compute costs while meeting performance requirements. You can check out the code and deploy the same architecture in your own environment by following the complete walkthrough in this GitHub repo.

About the Authors

Matthew Welborn is the director of Machine Learning at Iambic Therapeutics. He and his team leverage AI to accelerate the identification and development of novel therapeutics, bringing life-saving medicines to patients faster.

Paul Whittemore is a Principal Engineer at Iambic Therapeutics. He supports delivery of the infrastructure for the Iambic AI-driven drug discovery platform.

Alex Iankoulski is a Principal Solutions Architect, ML/AI Frameworks, who focuses on helping customers orchestrate their AI workloads using containers and accelerated computing infrastructure on AWS.

Author: Matthew Welborn