Securing the RAG ingestion pipeline: Filtering mechanisms

For example, let’s assume you crawl a public website and ingest the data into your knowledge base… Because it’s public data, you risk also ingesting malicious content that was injected into that website by threat actors with the goal of exploiting the knowledge base component of the RAG appl…

Retrieval-Augmented Generative (RAG) applications enhance the responses retrieved from large language models (LLMs) by integrating external data such as downloaded files, web scrapings, and user-contributed data pools. This integration improves the models’ performance by adding relevant context to the prompt.

While RAG applications are a powerful way to dynamically add additional context to an LLM’s prompt and make model responses more relevant, incorporating data from external sources can pose security risks.

For example, let’s assume you crawl a public website and ingest the data into your knowledge base. Because it’s public data, you risk also ingesting malicious content that was injected into that website by threat actors with the goal of exploiting the knowledge base component of the RAG application. Through this mechanism, threat actors can intentionally change the model’s behavior.

Risks like these emphasize the need for security measures in the design and deployment of RAG systems in general. Security measures should be applied not only at inference time (that is, filtering model outputs), but also when ingesting external data into the knowledge base of the RAG application.

In this post, we explore some of the potential security risks of ingesting external data or documents into the knowledge base of your RAG application. We propose practical steps and architecture patterns that you can implement to help mitigate these risks.

Overview of security of the RAG ingestion workflow

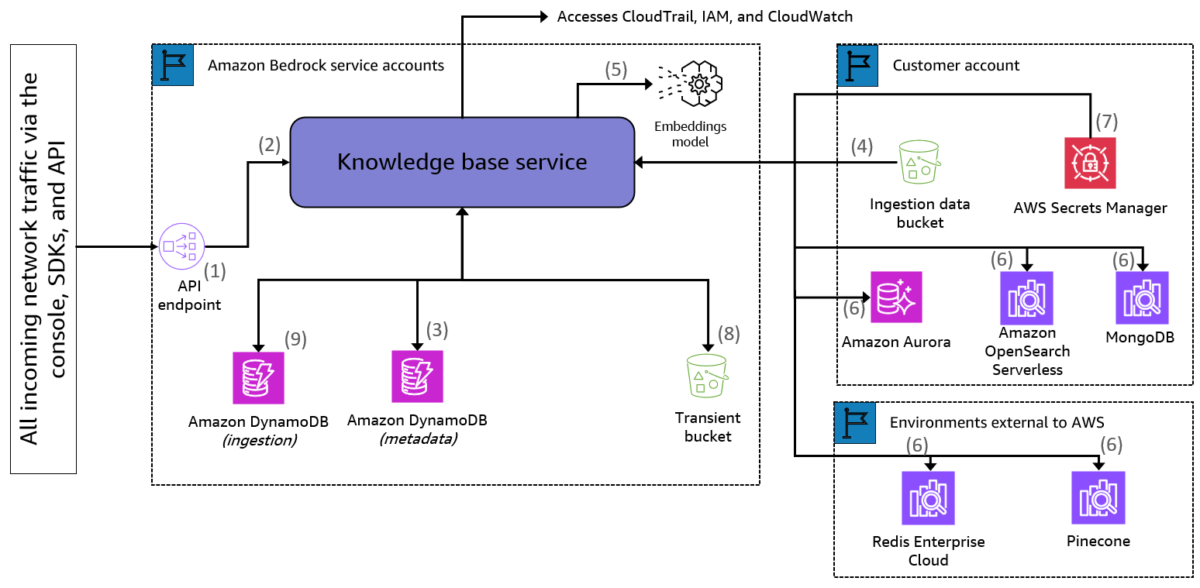

Before diving into specifics of mitigating risk in the ingestion pipeline, let’s have a look at a generic RAG workflow and which aspects you should keep in mind when it comes to securing a RAG application. For this post, let’s assume that you’re using Amazon Bedrock Knowledge Bases to build a RAG application. Amazon Bedrock Knowledge Bases offers built-in, robust security controls for data protection, access control, network security, logging and monitoring, and input/output validation that help mitigate many of the security risks.

In a RAG workflow with Amazon Bedrock Knowledge Bases, you have the following environments:

- An Amazon Bedrock service account, which is managed by the Amazon Bedrock service team.

- An AWS account where you can store your RAG data (if you’re using an AWS service as your vector store).

- A possible external environment, depending on the vector database you’ve chosen to store vector embeddings of your ingested content. If you choose Pinecone or Redis Enterprise Cloud for your vector database, you will use an environment external to AWS.

Figure 1: Visual representation of the knowledge base data ingestion flow

Looking at the workflow shown in Figure 1 for the ingestion of data into a knowledge base, an ingestion request is started by invoking the StartIngestionJob Bedrock API. From that point:

- If this request has the correct IAM permissions associated with it, it’s sent to the Bedrock API endpoint.

- This request is then passed to the knowledge base service component.

- The metadata collected related to the request is stored in the metadata Amazon DynamoDB database. This database is used solely to enumerate and characterize the data sources and their sync status. The API call includes metadata for the Amazon Simple Storage Service (Amazon S3) source location of the data to ingest, in addition to the vector store that will be used to store the embeddings.

- The process will begin to ingest customer-provided data from Amazon S3. If this data was encrypted using customer managed KMS keys, then these keys will be used to decrypt the data.

- As data is read from Amazon S3, chunks will be sent internally to invoke the chosen embedding model in Amazon Bedrock. A chunk refers to an excerpt from a data source that’s returned when the vector store that it’s stored in is queried. Using knowledge bases, you can chunk either with a fixed size (standard chunking), hierarchical chunking, semantic chunking, advanced parsing options for parsing non-textual information, or custom transformations. More information about chunking for knowledge bases can be found in How content chunking and parsing works for knowledge bases.

- The embeddings model in Amazon Bedrock will create the embeddings, which are then sent to your chosen vector store. Amazon Bedrock Knowledge Bases supports popular databases for vector storage, including the vector engine for Amazon OpenSearch Serverless, Pinecone, Redis Enterprise Cloud, Amazon Aurora, and MongoDB. If you don’t have an existing vector database, Amazon Bedrock creates an OpenSearch Serverless vector store for you. This option is only available through the console, not through the SDK or CLI.

- If credentials or secrets are required to access the vector store, they can be stored in AWS Secrets Manager where they will be automatically retrieved and used. Afterwards, the embeddings will be inserted into (or updated in) the configured vector store.

- Checkpoints for the in-progress ingestion jobs will be temporarily stored in a transient S3 bucket, encrypted with customer managed AWS Key Management Service (AWS KMS) keys. These checkpoints allow you to resume interrupted ingestion jobs from a previous successful checkpoint. Both the Aurora database and the Amazon OpenSearch Serverless database can be configured as public or private, and of course we recommend private databases. Changes in your ingestion data bucket (for example, uploading new files or new versions of files) will be reflected after the data source is synchronized; this synchronization is done incrementally. After the completion of an ingestion job, the data is automatically purged and deleted after a maximum of 8 days.

- The ingestion DynamoDB table stores information required for syncing the vector store. It stores metadata related to the chunks needed to keep track of data in the underlying vector database. The table is used so that the service can identify which chunks need to be inserted, updated, or deleted between one ingestion job and another.

When it comes to encryption at rest for the different environments:

- Customer AWS accounts – The resources in these can be encrypted using customer managed KMS keys

- External environments – Redis Enterprise Cloud and Pinecone have their own encryption features

- Amazon Bedrock service accounts – The S3 bucket (step 8) can be encrypted using customer managed KMS keys, but in the context of Amazon Bedrock, the DynamoDB tables of steps 3 and 9 can only be encrypted with AWS owned keys. However, the tables managed by Amazon Bedrock don’t contain personally identifiable information (PII) or customer-identifiable data.

Throughout the RAG ingestion workflow, data is encrypted in transit. Amazon Bedrock Knowledge Bases uses TLS encryption for communication with third-party vector stores where the provider permits and supports TLS encryption in transit. Customer data is not persistently stored in the Amazon Bedrock service accounts.

For identity and access management, it’s important to follow the principle of least privilege while creating the custom service role for Amazon Bedrock Knowledge Bases. As part of the role’s permissions, you create a trust relationship that allows Amazon Bedrock to assume this role and create and manage knowledge bases. For more information about the necessary permissions, see Providing secure access, usage, and implementation to generative AI RAG techniques.

Security risks of the RAG data ingestion pipeline and the need for ingest time filtering

RAG applications inherently rely on foundation models, introducing additional security considerations beyond the traditional application safeguards. Foundation models can analyze complex linguistic patterns and provide responses depending on the input context, and can be subject to malicious events such as jailbreaking, data poisoning, and inversion. Some of these LLM-specific risks are mapped out in documents such as the OWASP Top 10 for LLM Applications and MITRE ATLAS.

A risk that’s particularly relevant for the RAG ingestion pipeline, and one of the most common risks we see nowadays, is prompt injection. In prompt injection attacks, threat actors manipulate generative AI applications by feeding them malicious inputs disguised as legitimate user prompts. There are two forms of prompt injection: direct and indirect.

Direct prompt injections occur when a threat actor overwrites the underlying system prompt. This might allow them to probe backend systems by interacting with insecure functions and data stores accessible through the LLM. When it comes to securing generative AI applications against prompt injection, this type tends to be the one that customers focus on the most. To mitigate risks, you can use tools such as Amazon Bedrock Guardrails to set up inference-time filtering of the LLM’s completions.

Indirect prompt injections occur when an LLM accepts input from external sources that can be controlled by a threat actor, such as websites or files. This injection type is particularly important when you consider the ingestion pipeline of RAG applications, where a threat actor might embed a prompt injection in external content which is ingested into the database. This can enable the threat actor to manipulate additional systems that the LLM can access or return a different answer to the user. Additionally, indirect prompt injections might not be recognizable by humans. Security issues can result not only from the LLM’s responses based on its training data, but also from the data sources the RAG application has access to from its knowledge base. To mitigate these risks, you should focus on the intersection of the LLM, knowledge base, and external content ingested into the RAG application.

To give you a better idea of indirect prompt ingestion, let’s first discuss an example.

External data source ingestion risk: Examples of indirect prompt injection

Let’s say a threat actor crafts a document or injects content into a website. This content is designed to manipulate an LLM to generate incorrect responses. To a human, such a document could be indistinguishable from legitimate ones. However, the document could contain an invisible sequence, which, when used as a reference source for RAG, could manipulate the LLM into generating an undesirable response.

For example, let’s assume you have a file describing the process for downloading a company’s software. This file is ingested into a knowledge base for an LLM-powered chatbot. A user can ask the chatbot where to find the correct link to download software packages and then download the package by clicking on the link.

A threat actor could include a second link in the document using white text on a white background. This text is invisible to the reader and the company downloading the document to store in their knowledge base. However, it’s visible when parsed by the document parser and saved in the knowledge base. This could result in the LLM returning the hidden link, which could lead the user to download malware hosted by the threat actor on a site they manage, rather than legitimate software from the expected site.

If your application is connected to plugins or agents so that it can call APIs or execute code, the model could be manipulated to run code, open URLs chosen by the threat actor, and more.

If you look at Figure 2 that follows, you can see what the typical RAG workflow is and how an indirect prompt injection attack can happen (this example uses Amazon Bedrock Knowledge Bases).

Figure 2: Visual representation of the RAG workflow with both a generic file and a malicious file that looks identical to the generic one

As shown in Figure 2, for data ingestion (starting at the bottom right), File 1, the legitimate and unmodified file, is saved in the data source (typically an S3 bucket). During ingestion, the document is parsed by a document parser, split into chunks, converted into embeddings, and then saved in the vector store. When a user (top left) asks a question about the file, information from this file will be added as context to the user prompt. However, you might have a malicious File 2 instead, that looks exactly the same to a human reader but contains an invisible character sequence. After this sequence is inserted into the prompt sent to the LLM, it can influence the overall response of the environment.

Threat actors might analyze the following three aspects in the RAG workflow to create and place a malicious sequence:

- The document parser is software designed to read and interpret the contents of a document. It analyzes the text and extracts relevant information based on predefined rules or patterns. By analyzing the document parser, threat actors can determine how they might inject invisible content into different document formats.

- The text splitter (or chunker) splits text based on the subject matter of the content. Threat actors will analyze the text splitters to locate a proper injection position for their invisible sequence. Section-based splitters divide content according to tags that label different sections, which threat actors can use to place their invisible sequences within these delineated chunks. Length-based splitters split the content into fixed-length chunks with overlap (to help keep context between chunks).

- The prompt template is a predefined structure that is used to generate specific outputs or guide interactions with LLMs. Prompt templates determine how the content retrieved from the vector database is organized alongside the user’s original prompt to form the augmented prompt. The template is crucial, because it impacts the overall performance of RAG-based applications. If threat actors are aware of the prompt template used in your application, they can take that into account when constructing their threat sequence.

Potential mitigations

Threat actors can release documents containing well-constructed and well-placed invisible sequences onto the internet, thereby posing a threat to RAG applications that ingest this external content. Therefore, whenever possible, only ingest data from trusted sources. However, if your application requires you to use and ingest data from untrusted sources, it’s recommended to process them carefully to mitigate risks such as indirect prompt injection. To harden your RAG ingestion pipeline, you can use the following mitigation techniques to place additional security measures on your RAG ingestion pipeline. These can be implemented individually or together.

- Configure your application to display the source content underlying its responses, allowing users to cross-reference the content with the response. This is possible using Amazon Bedrock Knowledge Bases by using citations. However, this method isn’t a prevention technique. Also, it might be less effective with complex content because it can require that users invest a lot of time in verification to be effective.

- Establish trust boundaries between the LLM, external sources, and extensible functionality (for example, plugins, agents, or downstream functions). Treat the LLM as an untrusted actor and maintain final user control on decision-making processes. This comes back to the principle of least privilege. Make sure your LLM has access only to data sources that it needs to have access to and be especially careful when connecting it to external plugins or APIs.

- Continuous evaluation plays a vital role in maintaining the accuracy and reliability of your RAG system. When evaluating RAG applications, you can use labeled datasets containing prompts and target answers. However, frameworks such as RAGAS propose automated metrics that enable reference-free evaluation, alleviating the need for human-annotated ground truth answers. Implementing a mechanism for RAG evaluation can help you discover irregularities in your model responses and in the data retrieved from your knowledge base. If you want to explore how to evaluate your RAG application in greater depth, see Evaluate the reliability of Retrieval Augmented Generation applications using Amazon, which provides further insights and guidance on this topic.

- You can manually monitor content that you intend to ingest into your vector database—especially when the data includes external content such as websites and files. A human in the loop could potentially protect against less sophisticated, visible threat sequences.

For more advice on mitigating risks in generative AI applications, see the mitigations listed in the OWASP Top 10 for LLMs and MITRE ATLAS.

Architectural pattern 1: Using format breakers and Amazon Textract as document filters

Figure 3: Visual representation of a potential workflow to remove threat sequences from your files is using a format breaker and Amazon Textract

One potential workflow to remove potential threat sequences from your ingest files is to use a format breaker and Amazon Textract. This workflow specifically focuses on invisible threat vectors. The preceding Figure 3 shows a potential setup using AWS services that allows you to automate this.

- Let’s say you use an S3 bucket to ingest your files. Whichever file you want to upload into your knowledge base is initially uploaded in this bucket. The upload action in Amazon S3 automatically starts a workflow that will take care of the format break.

- A format break is a process used to sanitize and secure documents, by transforming them in a way that strips out potentially harmful elements such as macros, scripts, embedded objects, and other non-text content that could carry security risks. The format break in the ingest-time filter involves converting text content into PDF format and then to OCR format. To start, convert the text to PDF format. One of the options is to use an AWS Lambda function to convert text to PDF format. As an example, you can create such a function by putting the file renderers and PDF generator from LibreOffice into a Lambda function. This step is necessary to process the file using Amazon Textract because the service currently supports only PNG, JPEG, TIFF, and PDF formats.

- After the data is put into PDF format, you can save it into an S3 bucket. This upload to S3 can, in turn, trigger the next step in the format break: converting the PDF content to OCR format.

- You can process the PDF content using Amazon Textract, which will convert the text content to OCR format. Amazon Textract will render the PDF as an image. This involves extracting the text from the PDF, essentially creating a plain text version of the document. The OCR format makes sure that non-text elements, such as images or embedded files, aren’t carried over to the final document. Only the readable text is extracted, which significantly reduces the risk of hidden malicious content. This also removes white text on white backgrounds because that text is invisible when the PDF is rendered as an image before OCR conversion is performed. To use Amazon Textract to convert text to OCR format, create a Lambda function that will trigger Amazon Textract and input your PDF that was saved in Amazon S3.

- You can use Amazon Textract to process multipage documents in PDF format and detect printed and handwritten text from the Standard English alphabet and ASCII symbols. The service will extract printed text, forms, and tables in English, German, French, Spanish, Italian and Portuguese. This means that non-visible threat vectors won’t be detected or recognized by Amazon Textract and are automatically removed from the input. Amazon Textract operations return a Block object in the API response to the Lambda function.

- To ingest the information into a knowledge base, you need to transform the Amazon Textract output into a format that’s supported by your knowledge base. In this case, you would use code in your Lambda function to transform the Amazon Textract output into a plain text (.txt) file.

- The plain text file is then saved into an S3 bucket. This S3 bucket can then be used as a source for your knowledge base.

- You can automate the reflection of changes in your S3 bucket to your knowledge base by either having your Lambda function that created the Amazon S3 file run a

start_ingestion_job()API call or use an Amazon S3 event trigger on the destination bucket to configure a new Lambda function to run when a file is uploaded to this S3 bucket. Synchronization is incremental, so changes from the previous synchronization are incorporated. More info on managing your data sources can be found in Connect to your data repository for your knowledge base.

In addition to invisible sequences, threat actors can add sophisticated threat sequences that are difficult to classify or filter. Manually checking each document for unusual content isn’t feasible at scale, and creating a filter or model that accurately detects misleading information in such documents is challenging.

One powerful characteristic of LLMs is that they can analyze complex linguistic patterns. An optional pathway is to add a filtering LLM to your knowledge base ingest pipeline to detect malicious or misleading content, susceptible code, or unrelated context that might mislead your model.

Again, it’s important to note that threat actors might deliberately choose content that’s difficult to classify or filter and that resembles normal content. More capable, general-purpose LLMs provide a larger surface for threat actors, because they aren’t tuned to detect these specific attempts. The question is: can we train models to be robust against a wide variety of threats? Currently, there’s no definitive answer, and it remains a highly researched topic. However, some models address specific use cases. For example, LLamaGuard, a fine-tuned version of Meta’s Llama model, predicts safety labels in 14 categories such as elections, privacy, and defamation. It can classify content in both LLM inputs (prompt classification) and LLM responses (response classification).

For document classification, relevant for filtering ingest data, even a small model like BERT can be used. BERT is an encoder-only language model with a bi-directional attention mechanism, making it strong in tasks requiring deep contextual understanding, such as text classification, named entity recognition (NER), and question answering (QA). It’s open source and can be fine-tuned for various applications. This includes use cases in cybersecurity, such as phishing detection in email messages or detecting prompt injection attacks. If you have the resources in-house and work on critical applications that need advanced filtering for specific threats, consider fine-tuning a model like BERT to classify documents that might contain undesirable material.

In addition to natural-language text, threat actors might use data encoding techniques to obfuscate or conceal undesirable payloads within documents. These techniques include encoded scripts, malware, or other harmful content disguised using methods like base64 encoding, hexadecimal encoding, morse code, uucode, ASCII art, and more.

An effective way to detect such sequences is by using the Amazon Comprehend DetectDominantLanguage API. If a document is written entirely in a supported language, DetectDominantLanguage will return a high confidence score, indicating the absence of encoded data. Conversely, if a document contains encoded strings, such as base64, the API will struggle to categorize this text, resulting in a low confidence score. To automate the detection process, you can route documents to a human review stage if the confidence score falls below a certain threshold (for example, 85 percent). This reduces the need for manual checks for potentially malicious encoded data.

Additionally, the encoding and decoding capabilities of LLMs can assist in decoding encoded data. Various LLMs understand encoding schemes and can interpret encoded data within documents or files. For example, Anthropic’s Claude 3 Haiku can decode a base64 encoded string such as TGVhcm5pbmcgaG93IHRvIGNhbGwgU2FnZU1ha2VyIGVuZHBvaW50cyBmcm9tIExhbWJkYSBpcyB2ZXJ5IHVzZWZ1bC4 into its original plaintext form: “Learning how to call Amazon SageMaker endpoints from Lambda is very useful.” While this example is benign, it demonstrates the ability of LLMs to detect and decode encoded data, which can then be stripped before ingestion into your vector store.

Figure 4: Visual representation of a potential workflow to trigger a human in the loop review in case threat sequences are detected in your ingest files

In the preceding Figure 4, you can see a workflow that shows how you can integrate the above features into your document processing workflow to detect malicious content in ingest documents:

- As your ingestion point, you can use an S3 bucket. Files that you want to upload into your knowledge base are first uploaded into this bucket. In this diagram, the files are assumed to be .txt files.

- The upload action in Amazon S3 automatically starts an AWS Step Functions workflow.

- Amazon EventBridge is used to trigger the Step Functions workflow.

- The first Lambda function in the workflow calls the Amazon Comprehend

DetectDominantLanguageAPI, which flags documents if the confidence score of the language is below a certain threshold, indicating that the text might contain encoded data or data in other formats (such as a language Amazon Comprehend doesn’t recognize) that might be malicious. - If this is the case, the document is sent to a foundation model in Amazon Bedrock that can translate or decode the data.

- Next, another Lambda function is triggered. This function invokes a SageMaker endpoint, where you can deploy a model, such as a fine-tuned version of BERT, to classify documents as suspicious or not.

- If no suspicious content is detected, nothing is done and the content in the bucket remains the same (no need to override content, to prevent unnecessary costs) and the workflow ends. If undesirable content is detected, the document is stored in a second S3 bucket for human review.

- If not, the workflow ends.

Additional considerations for RAG data ingestion pipeline security

In previous sections, we focused on filtering patterns and current recommendations to secure the RAG ingestion pipeline. However, content filters that address indirect prompt injection aren’t the only mitigation to keep in mind when building a secure RAG application. To effectively secure generative AI-powered applications, responsible AI considerations and traditional security recommendations are still crucial.

To moderate content in your ingest pipeline, you might want to remove toxic language and PII data from your ingest documents. Amazon Comprehend offers built-in features for toxic content detection and PII detection in text documents. The Toxicity Detection API can identify content in categories such as hate speech, insults, and sexual content. This feature is particularly useful for making sure that harmful or inappropriate content isn’t ingested into your system. You can use the Toxicity Detection API to analyze up to 10 text segments at a time, each with a size limit of 1 KB. You might need to split larger documents into smaller segments before processing. For detailed guidance on using Amazon Comprehend toxicity detection, see Amazon Comprehend Toxicity Detection. For more information on PII detection and redaction with Amazon Comprehend, we recommend Detecting and redacting PII using Amazon Comprehend.

Keep the principle of least privilege in mind for your RAG application. Think about which permissions your application has, and give it only the permissions it needs to successfully function. Your application sends data in the context or orchestrates tools on behalf of the LLM, so it’s important that these permissions are limited. If you want to dive deep into achieving least privilege at scale, we recommend Strategies for achieving least privilege at scale. This is especially important when your RAG applications involves agents that might call APIs or databases. Make sure you carefully grant permissions to prevent potential security issues such as an SQL injection attack on your database.

Develop a threat model for your RAG application. It’s recommended that you document potential security risks in your application and have mitigation strategies for each risk. This session from Re:Invent 2023 gives an overview of how to approach threat modeling a generative AI workload. In addition, you can use the Threat Composer tool, which comes with a sample generative AI application, to help you in threat modeling your applications.

Lastly, when deciding what data to ingest into your RAG application, make sure to ask the right questions about the origin of the content, such as “who has access and edit rights to this content?” For example, anyone can edit a Wikipedia page. In addition, assess what the scope of your application is. Can the RAG application run code? Can it query a database? If so, this poses additional risks, so external data in your vector database should be carefully filtered.

Conclusion

In this blog post, you read about some of the security risks of RAG applications, with a specific focus on the RAG ingestion pipeline. Threat actors might engineer sophisticated methods to embed invisible content within websites or files. Without filtering or an evaluation mechanism, these might result in the LLM generating incorrect information, or worse, depending on the capabilities of the application (such as execute code, query a database, and so on). This makes it challenging to spot these threats when reviewing content.

You learned about some strategies and architectural patterns with filtering mechanisms to mitigate these risks. It’s important to note that the filtering mechanisms might not catch all undesirable content that should be removed from a file (for example, PII, base64 encoded data, and other undesirable sequences). Therefore, an evaluation mechanism and a human in the loop are crucial because there’s no model trained to detect such sequences for techniques like indirect prompt injection at this time (although there are models trained specifically to detect impolite language, but this doesn’t cover all possible cases).

Although there is currently no way to completely mitigate threats like injection attacks, these strategies and architectural patterns are a first step and form part of a layered approach to securing your application. In addition to these, make sure to evaluate your data regularly, consider having a human in the loop, and stay up to date on advancements in this space such as OWASP top 10 for LLM Applications or MITRE ATLAS

If you have feedback about this post, submit comments in the Comments section below.

Author: Laura Verghote