Transcribe, translate, and summarize live streams in your browser with AWS AI and generative AI services


Live streaming has been gaining immense popularity in recent years, attracting an ever-growing number of viewers and content creators across various platforms. From gaming and entertainment to education and corporate events, live streams have become a powerful medium for real-time engagement and content consumption. However, as the reach of live streams expands globally, language barriers and accessibility challenges have emerged, limiting the ability of viewers to fully comprehend and participate in these immersive experiences.

Recognizing this need, we have developed a Chrome extension that harnesses the power of AWS AI and generative AI services, including Amazon Bedrock, an AWS managed service to build and scale generative AI applications with foundation models (FMs). This extension aims to revolutionize the live streaming experience by providing real-time transcription, translation, and summarization capabilities directly within your browser.

With this extension, viewers can seamlessly transcribe live streams into text, enabling them to follow along with the content even in noisy environments or when listening to audio is not feasible. Moreover, the extension’s translation capabilities open up live streams to a global audience, breaking down language barriers and fostering more inclusive participation. By offering real-time translations into multiple languages, viewers from around the world can engage with live content as if it were delivered in their first language.

In addition, the extension’s capabilities extend beyond mere transcription and translation. Using the advanced natural language processing and summarization capabilities of FMs available through Amazon Bedrock, the extension can generate concise summaries of the content being transcribed in real time. This innovative feature empowers viewers to catch up with what is being presented, making it simpler to grasp key points and highlights, even if they have missed portions of the live stream or find it challenging to follow complex discussions.

In this post, we explore the approach behind building this powerful extension and provide step-by-step instructions to deploy and use it in your browser.

Solution overview

The solution is powered by two AWS AI services, Amazon Transcribe and Amazon Translate, along with Amazon Bedrock, a fully managed service that allows you to build generative AI applications. The solution also uses Amazon Cognito user pools and identity pools for managing authentication and authorization of users, Amazon API Gateway REST APIs, AWS Lambda functions, and an Amazon Simple Storage Service (Amazon S3) bucket.

After deploying the solution, you can access the following features:

- Live transcription and translation – The Chrome extension transcribes and translates audio streams for you in real time using Amazon Transcribe, an automatic speech recognition service. This feature also integrates with Amazon Transcribe automatic language identification for streaming transcriptions—with a minimum of 3 seconds of audio, the service can automatically detect the dominant language and generate a transcript without you having to specify the spoken language.

- Summarization – The Chrome extension uses FMs such as Anthropic’s Claude 3 models on Amazon Bedrock to summarize content being transcribed, so you can grasp key ideas of your live stream by reading the summary.

Live transcription is available in the more than 50 languages currently supported by Amazon Transcribe streaming (including Chinese, English, French, German, Hindi, Italian, Japanese, Korean, Brazilian Portuguese, Spanish, and Thai), while translation is available in the more than 75 languages currently supported by Amazon Translate.

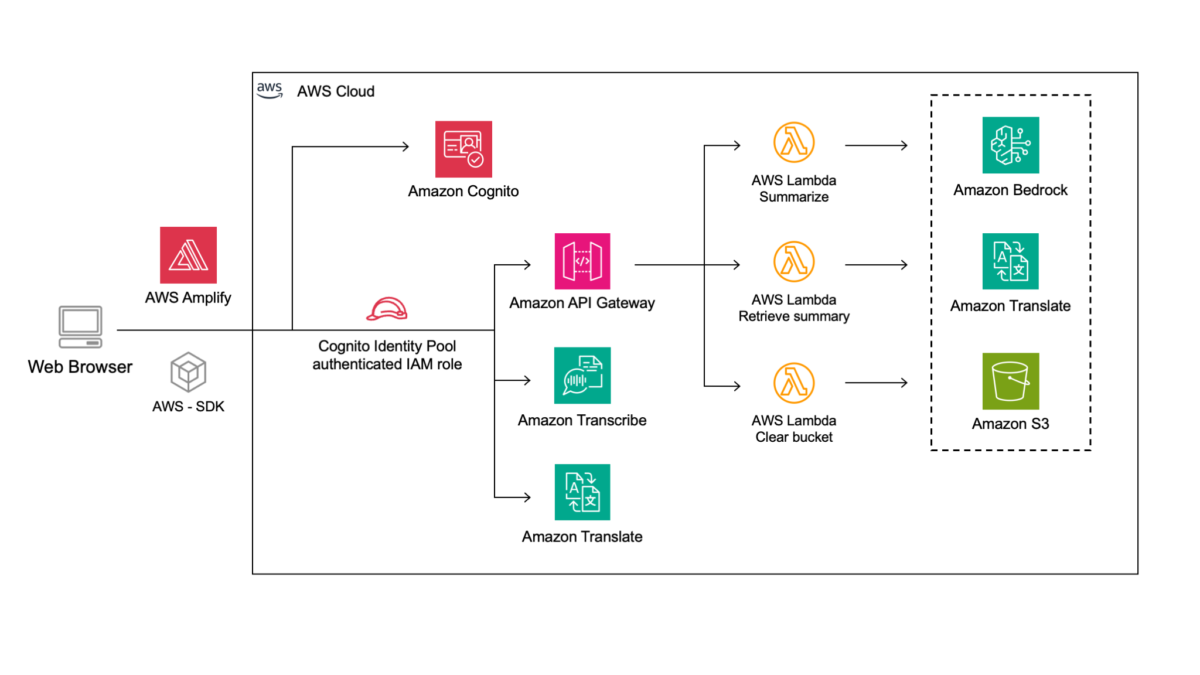

The following diagram illustrates the architecture of the application.

The solution workflow includes the following steps:

- A Chrome browser is used to access the desired live streamed content, and the extension is activated and displayed as a side panel. The extension delivers a web application implemented using the AWS SDK for JavaScript and the AWS Amplify JavaScript library.

- The user signs in by entering a user name and a password. Authentication is performed against the Amazon Cognito user pool. After a successful login, the Amazon Cognito identity pool is used to provide the user with the temporary AWS credentials required to access application features. For more details about the authentication and authorization flows, refer to Accessing AWS services using an identity pool after sign-in.

- The extension interacts with Amazon Transcribe (StartStreamTranscription operation), Amazon Translate (TranslateText operation), and Amazon Bedrock (InvokeModel operation). Interactions with Amazon Bedrock are handled by a Lambda function, which implements the application logic underlying an API made available using API Gateway.

- The user is provided with the transcription, translation, and summary of the content playing inside the browser tab. The summary is stored inside an S3 bucket, which can be emptied using the extension’s Clean Up feature.
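As an illustration of the summarization step, the following is a minimal sketch of how a request body for the Amazon Bedrock InvokeModel operation could be assembled for an Anthropic Claude 3 model, and how the summary text could be read back from the response. The function names, the prompt wording, and the `maxTokens` default are hypothetical and not taken from the project; only the overall shape of the Anthropic Messages API request and response is assumed.

```javascript
// Hypothetical helper (not from the project): builds the JSON body for an
// InvokeModel call to an Anthropic Claude 3 model (Messages API format).
function buildSummaryRequest(transcript, targetLanguage, maxTokens = 512) {
  if (typeof transcript !== "string" || transcript.trim() === "") {
    throw new Error("transcript must be a non-empty string");
  }
  // The prompt wording is an assumption made for illustration only.
  const prompt =
    `Summarize the following live stream transcript in ${targetLanguage}. ` +
    "Focus on the key points.\n\n" +
    `<transcript>\n${transcript.trim()}\n</transcript>`;
  return {
    anthropic_version: "bedrock-2023-05-31",
    max_tokens: maxTokens,
    messages: [{ role: "user", content: [{ type: "text", text: prompt }] }],
  };
}

// Hypothetical helper: concatenates the text blocks of a Messages API response.
function extractSummary(responseBody) {
  return (responseBody.content ?? [])
    .filter((block) => block.type === "text")
    .map((block) => block.text)
    .join("");
}
```

In a Lambda function, the object returned by `buildSummaryRequest` would be serialized with `JSON.stringify` and passed as the request body, together with the configured model ID.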

In the following sections, we walk through how to deploy the Chrome extension and the underlying backend resources and set up the extension, then we demonstrate using the extension in a sample use case.

Prerequisites

For this walkthrough, you should have the following prerequisites:

- A computer with Google Chrome installed on it

- An AWS account

- Access to one or more Amazon Bedrock models (for more information, see Access Amazon Bedrock foundation models)

- An AWS Identity and Access Management (IAM) user with the AdministratorAccess policy granted (for production, we recommend restricting access as needed)

- The AWS Command Line Interface (AWS CLI) installed and configured to use with your AWS account

- The AWS CDK CLI installed

- Node.js and npm installed

Deploy the backend

The first step consists of deploying an AWS Cloud Development Kit (AWS CDK) application that automatically provisions and configures the required AWS resources, including:

- An Amazon Cognito user pool and identity pool that allow user authentication

- An S3 bucket, where transcription summaries are stored

- Lambda functions that interact with Amazon Bedrock to perform content summarization

- IAM roles that are associated with the identity pool and have permissions required to access AWS services

Complete the following steps to deploy the AWS CDK application:

- Using a command line interface (Linux shell, macOS Terminal, Windows command prompt or PowerShell), clone the GitHub repository to a local directory, then open the directory:

- Open the cdk/bin/config.json file and populate the following configuration variables:

The template launches in the us-east-2 AWS Region by default. To launch the solution in a different Region, change the aws_region parameter accordingly. Make sure to select a Region in which all the AWS services in scope (Amazon Transcribe, Amazon Translate, Amazon Bedrock, Amazon Cognito, API Gateway, Lambda, Amazon S3) are available.

The Region used for bedrock_region can be different from aws_region because you might have access to Amazon Bedrock models in a Region different from the Region where you want to deploy the project.

By default, the project uses Anthropic’s Claude 3 Sonnet as a summarization model; however, you can use a different model by changing the bedrock_model_id in the configuration file. For the complete list of model IDs, see Amazon Bedrock model IDs. When selecting a model for your deployment, don’t forget to check that the desired model is available in your preferred Region; for more details about model availability, see Model support by AWS Region.
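Before deploying, it can help to sanity-check the configuration values. The following is a minimal sketch that only covers the three parameters named above (`aws_region`, `bedrock_region`, and `bedrock_model_id`); the actual file may contain other variables, and the `validateConfig` function and the Region pattern are assumptions made for illustration. The check verifies only the shape of the values, not whether a service or model is actually available in a Region.

```javascript
// Parameters named in this post; the real config.json may define more.
const REQUIRED_KEYS = ["aws_region", "bedrock_region", "bedrock_model_id"];

// Rough shape of a Region code such as "us-east-2" (an assumption, not an
// official validation rule).
const REGION_PATTERN = /^[a-z]{2}(-[a-z]+)+-\d$/;

// Hypothetical helper: returns a list of human-readable problems (empty if none).
function validateConfig(config) {
  const problems = [];
  for (const key of REQUIRED_KEYS) {
    const value = config[key];
    if (typeof value !== "string" || value.trim() === "") {
      problems.push(`${key} is missing or empty`);
    }
  }
  for (const key of ["aws_region", "bedrock_region"]) {
    const value = config[key];
    const present = typeof value === "string" && value.trim() !== "";
    if (present && !REGION_PATTERN.test(value)) {
      problems.push(`${key} does not look like a Region code: ${value}`);
    }
  }
  return problems;
}
```

For example, a configuration with `aws_region` set to `us-east-2` and `bedrock_region` set to `us-east-1` passes the check, which matches the case described above where the two Regions differ.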

- If you have never used the AWS CDK on this account and Region combination, you will need to run the following command to bootstrap the AWS CDK on the target account and Region (otherwise, you can skip this step):

- Navigate to the cdk sub-directory, install dependencies, and deploy the stack by running the following commands:

- Confirm the deployment of the listed resources by entering y.

Wait for AWS CloudFormation to finish the stack creation.

You need to use the CloudFormation stack outputs to connect the frontend to the backend. After the deployment is complete, you have two options.

The preferred option is to use the provided postdeploy.sh script to automatically copy the cdk configuration parameters to a configuration file by running the following command, still in the /cdk folder:

Alternatively, you can copy the configuration manually:

- Open the AWS CloudFormation console in the same Region where you deployed the resources.

- Find the stack named AwsStreamAnalysisStack.

- On the Outputs tab, take note of the output values to complete the next steps.

Set up the extension

Complete the following steps to get the extension ready for transcribing, translating, and summarizing live streams:

- Open the src/config.js file. Based on how you chose to collect the CloudFormation stack outputs, follow the appropriate step:

  - If you used the provided automation, check whether the values inside the src/config.js file have been automatically updated with the corresponding values.

  - If you copied the configuration manually, populate the src/config.js file with the values you noted. Use the following format:

Take note of the CognitoUserPoolId, which will be needed in a later step to create a new user.

- In the command line interface, move back to the aws-transcribe-translate-summarize-live-streams-in-browser directory with a command similar to the following:

- Install dependencies and build the package by running the following commands:

- Open your Chrome browser and navigate to chrome://extensions/.

Make sure that developer mode is enabled by toggling the icon on the top right corner of the page.

- Choose Load unpacked and upload the build directory, which can be found inside the local project folder aws-transcribe-translate-summarize-live-streams-in-browser.

- Grant permissions to your browser to record your screen and audio:

  - Identify the newly added Transcribe, translate and summarize live streams (powered by AWS) extension.

  - Choose Details and then Site Settings.

  - In the Microphone section, choose Allow.

- Create a new Amazon Cognito user:

  - On the Amazon Cognito console, choose User pools in the navigation pane.

  - Choose the user pool with the CognitoUserPoolId value noted from the CloudFormation stack outputs.

  - On the Users tab, choose Create user and configure this user’s verification and sign-in options.

See a walkthrough of Steps 4-6 in the animated image below. For additional details, refer to Creating a new user in the AWS Management Console.

Use the extension

Now that the extension is set up, you can interact with it by completing these steps:

- On the browser tab, choose the Extensions icon.

- Choose (right-click) the Transcribe, translate and summarize live streams (powered by AWS) extension and choose Open side panel.

- Log in using the credentials created in the Amazon Cognito user pool from the previous step.

- Close the side panel.

You’re now ready to experiment with the extension.

- Open a new tab in the browser, navigate to a website featuring an audio/video stream, and open the extension (choose the Extensions icon, then choose the option menu (three dots) next to AWS transcribe, translate, and summarize, and choose Open side panel).

- Use the Settings pane to update the settings of the application:

  - Mic in use – The Mic not in use setting records only the audio of the browser tab, which suits a live video stream. The Mic in use setting also records your microphone, which suits a real-time meeting.

  - Transcription language – This is the language of the live stream to be recorded (set to auto to allow automatic identification of the language).

  - Translation language – This is the language into which the live stream will be translated and in which the summary will be written. After you choose the translation language and start the recording, you can’t change your choice for the ongoing live stream. To change the translation language for the transcript and summary, you have to start a new recording.

- Choose Start recording to start recording, and start exploring the Transcription and Translation tabs.

Content on the Translation tab will appear with a few seconds of delay compared to what you see on the Transcription tab. When transcribing speech in real time, Amazon Transcribe incrementally returns a stream of partial results until it generates the final transcription for a speech segment. This Chrome extension has been implemented to translate text only after a final transcription result is returned.
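The behavior described above can be sketched as follows. This is a minimal illustration, not the extension's actual code: it assumes each streaming event carries a `Transcript.Results` list whose entries expose an `IsPartial` flag and an `Alternatives` list, as in Amazon Transcribe streaming transcript events. The `finalSegments` and `translateFinalSegments` names, and the `translate` callback that stands in for a call to Amazon Translate, are hypothetical.

```javascript
// Returns the text of the final (non-partial) results in one transcript event.
function finalSegments(transcriptEvent) {
  const results = transcriptEvent?.Transcript?.Results ?? [];
  return results
    .filter((result) => result.IsPartial === false)
    .map((result) => result.Alternatives?.[0]?.Transcript ?? "")
    .filter((text) => text.length > 0);
}

// Consumes a (sync or async) iterable of transcript events and translates only
// final segments. `translate` is a caller-supplied async function (hypothetical
// stand-in for an Amazon Translate call).
async function translateFinalSegments(events, translate) {
  const translated = [];
  for await (const event of events) {
    for (const segment of finalSegments(event)) {
      translated.push(await translate(segment));
    }
  }
  return translated;
}
```

Skipping partial results avoids translating text that Amazon Transcribe may still revise, at the cost of the short delay mentioned above.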

- Expand the Summary section and choose Get summary to generate a summary. The operation will take a few seconds.

- Choose Stop recording to stop recording.

- Choose Clear all conversations in the Clean Up section to delete the summary of the live stream from the S3 bucket.

See the extension in action in the video below.

Troubleshooting

If you receive the error “Extension has not been invoked for the current page (see activeTab permission). Chrome pages cannot be captured.”, check the following:

- Make sure you’re using the extension on the tab where you first opened the side panel. If you want to use it on a different tab, stop the extension, close the side panel, and choose the extension icon again to run it.

- Make sure you have given permissions for audio recording in the web browser.

If you can’t get the summary of the live stream, make sure you have stopped the recording and then request the summary. You can’t change the language of the transcript and summary after the recording has started, so remember to choose it appropriately before you start the recording.

Clean up

When you’re done with your tests, to avoid incurring future charges, delete the resources created during this walkthrough by deleting the CloudFormation stack:

- On the AWS CloudFormation console, choose Stacks in the navigation pane.

- Choose the stack AwsStreamAnalysisStack.

- Take note of the CognitoUserPoolId and CognitoIdentityPoolId values among the CloudFormation stack outputs, which will be needed in the following step.

- Choose Delete stack and confirm deletion when prompted.

Because the Amazon Cognito resources won’t be automatically deleted, delete them manually:

- On the Amazon Cognito console, locate the user pool and the identity pool that match the CognitoUserPoolId and CognitoIdentityPoolId values previously retrieved from the CloudFormation stack outputs.

- Select both resources and choose Delete.

Conclusion

In this post, we showed you how to deploy a code sample that uses AWS AI and generative AI services to access features such as live transcription, translation and summarization. You can follow the steps we provided to start experimenting with the browser extension.

To learn more about how to build and scale generative AI applications, refer to Transform your business with generative AI.

About the Authors

Luca Guida is a Senior Solutions Architect at AWS; he is based in Milan and he supports independent software vendors in their cloud journey. With an academic background in computer science and engineering, he started developing his AI/ML passion at university; as a member of the natural language processing and generative AI community within AWS, Luca helps customers be successful while adopting AI/ML services.


Chiara Relandini is an Associate Solutions Architect at AWS. She collaborates with customers from diverse sectors, including digital native businesses and independent software vendors. After focusing on ML during her studies, Chiara supports customers in using generative AI and ML technologies effectively, helping them extract maximum value from these powerful tools.


Arian Rezai Tabrizi is an Associate Solutions Architect based in Milan. She supports enterprises across various industries, including retail, fashion, and manufacturing, on their cloud journey. Drawing from her background in data science, Arian assists customers in effectively using generative AI and other AI technologies.


Author: Luca Guida