Whisper models for automatic speech recognition now available in Amazon SageMaker JumpStart

Today, we’re excited to announce that the OpenAI Whisper foundation model is available for customers using Amazon SageMaker JumpStart. Whisper is a pre-trained model for automatic speech recognition (ASR) and speech translation. Trained on 680 thousand hours of labelled data, Whisper models demonstrate a strong ability to generalize to many datasets and domains without the need for fine-tuning. SageMaker JumpStart is the machine learning (ML) hub of SageMaker that provides access to foundation models in addition to built-in algorithms and end-to-end solution templates to help you quickly get started with ML.

You can also do ASR using Amazon Transcribe, a fully managed and continuously trained automatic speech recognition service.

In this post, we show you how to deploy the OpenAI Whisper model and invoke the model to transcribe and translate audio.

The OpenAI Whisper model uses the huggingface-pytorch-inference container. As a SageMaker JumpStart model hub customer, you can use ASR without having to maintain the model script outside of the SageMaker SDK. SageMaker JumpStart models also improve security posture with endpoints that enable network isolation.

Foundation models in SageMaker

SageMaker JumpStart provides access to a range of models from popular model hubs including Hugging Face, PyTorch Hub, and TensorFlow Hub, which you can use within your ML development workflow in SageMaker. Recent advances in ML have given rise to a new class of models known as foundation models, which typically have billions of parameters and can be adapted to a wide category of use cases, such as text summarization, generating digital art, and language translation. Because these models are expensive to train, customers want to use existing pre-trained foundation models and fine-tune them as needed, rather than train these models themselves. SageMaker provides a curated list of models that you can choose from on the SageMaker console.

You can now find foundation models from different model providers within SageMaker JumpStart, enabling you to get started with foundation models quickly. SageMaker JumpStart offers foundation models based on different tasks or model providers, and you can easily review model characteristics and usage terms. You can also try these models using a test UI widget. When you want to use a foundation model at scale, you can do so without leaving SageMaker by using pre-built notebooks from model providers. Because the models are hosted and deployed on AWS, you can trust that your data, whether used for evaluating or using the model at scale, won’t be shared with third parties.

OpenAI Whisper foundation models

Whisper is a pre-trained model for ASR and speech translation. Whisper was proposed in the paper Robust Speech Recognition via Large-Scale Weak Supervision by Alec Radford, and others, from OpenAI. The original code can be found in this GitHub repository.

Whisper is a Transformer-based encoder-decoder model, also referred to as a sequence-to-sequence model. It was trained on 680 thousand hours of labelled speech data annotated using large-scale weak supervision. Whisper models demonstrate a strong ability to generalize to many datasets and domains without the need for fine-tuning.

The models were trained on either English-only data or multilingual data. The English-only models were trained on the task of speech recognition. The multilingual models were trained on speech recognition and speech translation. For speech recognition, the model predicts transcriptions in the same language as the audio. For speech translation, the model predicts transcriptions in a different language from the audio.

Whisper checkpoints come in five configurations of varying model sizes. The smallest four are trained on either English-only or multilingual data. The largest checkpoints are multilingual only. All ten of the pre-trained checkpoints are available on the Hugging Face hub. The checkpoints are summarized in the following table with links to the models on the hub:

| Model name | Number of parameters | Multilingual |
| --- | --- | --- |
| whisper-tiny | 39 M | Yes |
| whisper-base | 74 M | Yes |
| whisper-small | 244 M | Yes |
| whisper-medium | 769 M | Yes |
| whisper-large | 1550 M | Yes |
| whisper-large-v2 | 1550 M | Yes |

Let’s explore how you can use Whisper models in SageMaker JumpStart.

OpenAI Whisper foundation models WER and latency comparison

The word error rate (WER) for different OpenAI Whisper models on the LibriSpeech test-clean set is shown in the following table. WER is a common metric for the performance of a speech recognition or machine translation system. It measures the difference between the reference text (the ground truth or the correct transcription) and the output of an ASR system in terms of the number of errors (substitutions, insertions, and deletions) needed to transform the ASR output into the reference text. These numbers have been taken from the Hugging Face website.

| Model | WER (percent) |
| --- | --- |
| whisper-tiny | 7.54 |
| whisper-base | 5.08 |
| whisper-small | 3.43 |
| whisper-medium | 2.9 |
| whisper-large | 3 |
| whisper-large-v2 | 3 |
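As a rough illustration of how WER is computed, the following minimal sketch counts word-level substitutions, insertions, and deletions with a standard edit-distance table and divides by the number of reference words. The function name word_error_rate is hypothetical, and the sketch applies no text normalization (casing, punctuation), which published benchmarks usually do, so it won't reproduce the numbers in the table.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution, or match when cost is 0
            )
        prev = curr
    return prev[-1] / len(ref)


# One substituted word out of ten reference words.
reference = "we are living in very exciting times with machine learning"
hypothesis = "we are living in very exciting times with machine lighting"
print(word_error_rate(reference, hypothesis))  # 0.1
```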

For this post, we took a sample audio file and compared the speech recognition latency across the different Whisper models. Latency is the amount of time from the moment that a user sends a request until the time that your application indicates that the request has been completed. The numbers in the following table represent the average latency over a total of 100 requests using the same audio file, with the model hosted on an ml.g5.2xlarge instance.

| Model | Average latency (s) | Model output |
| --- | --- | --- |
| whisper-tiny | 0.43 | We are living in very exciting times with machine lighting. The speed of ML model development will really actually increase. But you won’t get to that end state that we won in the next coming years. Unless we actually make these models more accessible to everybody. |
| whisper-base | 0.49 | We are living in very exciting times with machine learning. The speed of ML model development will really actually increase. But you won’t get to that end state that we won in the next coming years. Unless we actually make these models more accessible to everybody. |
| whisper-small | 0.84 | We are living in very exciting times with machine learning. The speed of ML model development will really actually increase. But you won’t get to that end state that we want in the next coming years unless we actually make these models more accessible to everybody. |
| whisper-medium | 1.5 | We are living in very exciting times with machine learning. The speed of ML model development will really actually increase. But you won’t get to that end state that we want in the next coming years unless we actually make these models more accessible to everybody. |
| whisper-large | 1.96 | We are living in very exciting times with machine learning. The speed of ML model development will really actually increase. But you won’t get to that end state that we want in the next coming years unless we actually make these models more accessible to everybody. |
| whisper-large-v2 | 1.98 | We are living in very exciting times with machine learning. The speed of ML model development will really actually increase. But you won’t get to that end state that we want in the next coming years unless we actually make these models more accessible to everybody. |
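An average like the one in the table can be measured with a small timing loop. The following is a minimal sketch, not the benchmark code used for this post: average_latency is a hypothetical helper that times any zero-argument callable, so in practice you would pass something that sends one request to your endpoint.

```python
import time
from statistics import mean
from typing import Callable, List


def average_latency(send_request: Callable[[], object], num_requests: int = 100) -> float:
    """Call send_request num_requests times and return the mean wall-clock seconds."""
    if num_requests <= 0:
        raise ValueError("num_requests must be positive")
    latencies: List[float] = []
    for _ in range(num_requests):
        start = time.perf_counter()
        send_request()  # one round trip, measured from the client side
        latencies.append(time.perf_counter() - start)
    return mean(latencies)


# Hypothetical usage with a deployed predictor and audio bytes already in memory:
# print(average_latency(lambda: predictor.predict(audio_bytes)))
```

Because the timing is taken on the client, it includes network time between the notebook and the endpoint as well as model inference time.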

Solution walkthrough

You can deploy Whisper models using the Amazon SageMaker console or using an Amazon SageMaker Notebook. In this post, we demonstrate how to deploy the Whisper API using the SageMaker Studio console or a SageMaker Notebook and then use the deployed model for speech recognition and language translation. The code used in this post can be found in this GitHub notebook.

Let’s expand each step in detail.

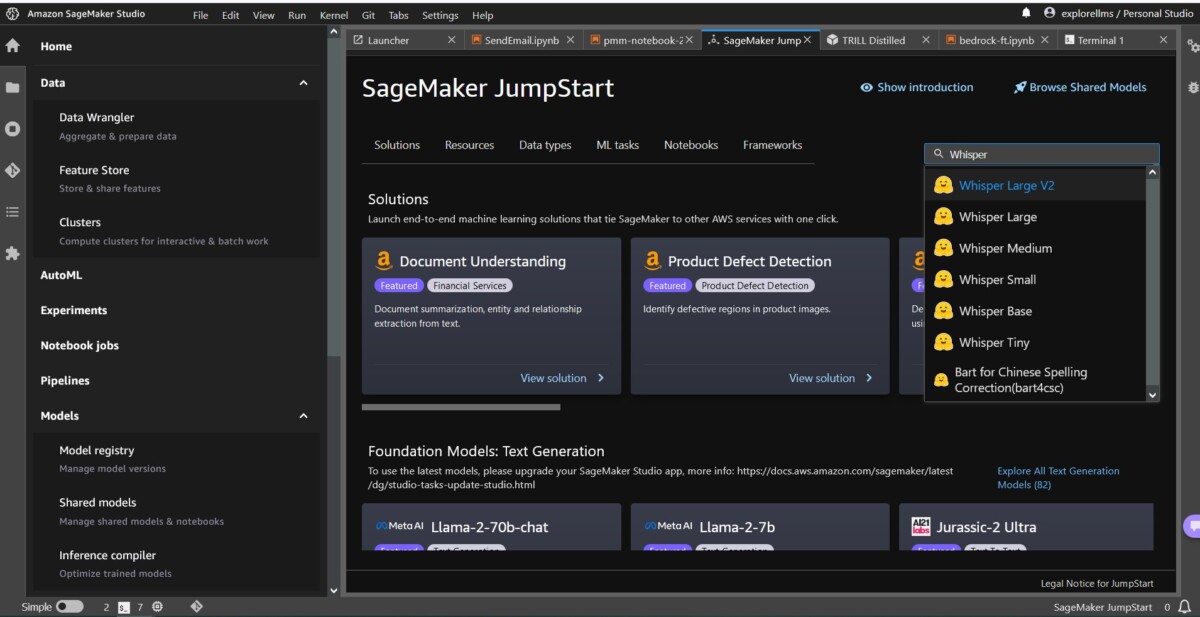

Deploy Whisper from the console

- To get started with SageMaker JumpStart, open the Amazon SageMaker Studio console, go to the launch page of SageMaker JumpStart, and select Get Started with JumpStart.

- To choose a Whisper model, you can either use the tabs at the top or use the search box at the top right as shown in the following screenshot. For this example, use the search box on the top right and enter Whisper, and then select the appropriate Whisper model from the dropdown menu.

- After you select the Whisper model, you can use the console to deploy the model. You can select an instance for deployment or use the default.

Deploy the foundation model from a SageMaker Notebook

The steps to first deploy and then use the deployed model to solve different tasks are:

- Set up

- Select a model

- Retrieve artifacts and deploy an endpoint

- Use deployed model for ASR

- Use deployed model for language translation

- Clean up the endpoint

Set up

This notebook was tested on an ml.t3.medium instance in SageMaker Studio with the Python 3 (data science) kernel and in an Amazon SageMaker Notebook instance with the conda_python3 kernel.

Select a pre-trained model

Set up a SageMaker Session using Boto3, and then select the model ID that you want to deploy.

Retrieve artifacts and deploy an endpoint

Using SageMaker, you can perform inference on the pre-trained model, even without fine-tuning it first on a new dataset. To host the pre-trained model, create an instance of JumpStartModel (a subclass of sagemaker.model.Model) and deploy it. The following code uses the default instance ml.g5.2xlarge for the inference endpoint of a whisper-large-v2 model. You can deploy the model on other instance types by passing instance_type in the JumpStartModel class. The deployment might take a few minutes.
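A minimal sketch of the model selection and deployment steps, assuming the SageMaker Python SDK is installed and the notebook has an execution role. The model ID string is an assumption based on the JumpStart naming pattern, so confirm it in the JumpStart model list. The helper names jumpstart_model_kwargs and deploy_whisper are hypothetical.

```python
from typing import Dict, Optional

# Assumed JumpStart model ID for whisper-large-v2; verify it before use.
MODEL_ID = "huggingface-asr-whisper-large-v2"


def jumpstart_model_kwargs(model_id: str, instance_type: Optional[str] = None) -> Dict[str, str]:
    """Build constructor arguments; omit instance_type to use the model's default."""
    kwargs = {"model_id": model_id}
    if instance_type is not None:
        kwargs["instance_type"] = instance_type
    return kwargs


def deploy_whisper(model_id: str = MODEL_ID, instance_type: Optional[str] = None):
    """Create a JumpStartModel and deploy it to a real-time endpoint."""
    # Imported lazily so the helper above can be used without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(**jumpstart_model_kwargs(model_id, instance_type))
    return model.deploy()  # returns a predictor; this can take a few minutes


# Hypothetical usage (creates billable resources):
# predictor = deploy_whisper()
# predictor = deploy_whisper(instance_type="ml.g5.2xlarge")
```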

Automatic speech recognition

Next, you read the sample audio file, sample1.wav, from a SageMaker JumpStart public Amazon Simple Storage Service (Amazon S3) location and pass it to the predictor for speech recognition. You can replace this sample file with any other sample audio file, but make sure the .wav file is sampled at 16 kHz because that is required by the automatic speech recognition models. The input audio file must be less than 30 seconds long.
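Before sending your own audio, it can help to check the two constraints above locally. The following minimal sketch uses Python's standard wave module and assumes an uncompressed PCM .wav file. The function name check_wav is hypothetical, and the download from Amazon S3 is not shown.

```python
import io
import wave

REQUIRED_SAMPLE_RATE_HZ = 16_000
MAX_DURATION_SECONDS = 30.0


def check_wav(wav_bytes: bytes) -> float:
    """Return the clip duration in seconds, or raise ValueError if a constraint fails."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav:
        sample_rate = wav.getframerate()
        duration = wav.getnframes() / sample_rate
    if sample_rate != REQUIRED_SAMPLE_RATE_HZ:
        raise ValueError(f"expected 16 kHz audio, got {sample_rate} Hz; resample first")
    if duration >= MAX_DURATION_SECONDS:
        raise ValueError(f"clip is {duration:.1f} s; it must be less than 30 s")
    return duration


# Hypothetical usage with a local copy of the sample file:
# with open("sample1.wav", "rb") as f:
#     audio_bytes = f.read()
# print(check_wav(audio_bytes))
```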

This model supports many parameters when performing inference. They include:

- max_length: The model generates text until the output reaches this length. If specified, it must be a positive integer.

- language and task: Specify the output language and task here. The model supports the task of transcription or translation.

- max_new_tokens: The maximum number of tokens to generate.

- num_return_sequences: The number of output sequences returned. If specified, it must be a positive integer.

- num_beams: The number of beams used in beam search. If specified, it must be an integer greater than or equal to num_return_sequences.

- no_repeat_ngram_size: The model ensures that a sequence of words of no_repeat_ngram_size isn’t repeated in the output sequence. If specified, it must be a positive integer greater than 1.

- temperature: This controls the randomness in the output. Higher temperature results in an output sequence with low-probability words and lower temperature results in an output sequence with high-probability words. If temperature approaches 0, it results in greedy decoding. If specified, it must be a positive float.

- early_stopping: If True, text generation is finished when all beam hypotheses reach the end of sentence token. If specified, it must be boolean.

- do_sample: If True, sample the next word according to its likelihood. If specified, it must be boolean.

- top_k: In each step of text generation, sample from only the top_k most likely words. If specified, it must be a positive integer.

- top_p: In each step of text generation, sample from the smallest possible set of words with cumulative probability top_p. If specified, it must be a float between 0 and 1.

You can specify any subset of the preceding parameters when invoking an endpoint. Next, we show you an example of how to invoke an endpoint with these arguments.
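The following minimal sketch builds a request payload and checks the parameter constraints listed above before anything is sent. The payload layout is an assumption: it places the audio under an audio_input key as a hex string, which you should confirm against the notebook for your model version. The helper names are hypothetical.

```python
from typing import Any, Dict


def _is_int(value: Any) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, int) and not isinstance(value, bool)


def _is_number(value: Any) -> bool:
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def validate_params(params: Dict[str, Any]) -> None:
    """Raise ValueError if a parameter violates the constraints described in the text."""
    for name in ("max_length", "num_return_sequences", "top_k"):
        if name in params and not (_is_int(params[name]) and params[name] > 0):
            raise ValueError(f"{name} must be a positive integer")
    if "num_beams" in params:
        min_beams = params.get("num_return_sequences", 1)
        if not (_is_int(params["num_beams"]) and params["num_beams"] >= min_beams):
            raise ValueError("num_beams must be an integer >= num_return_sequences")
    if "no_repeat_ngram_size" in params:
        size = params["no_repeat_ngram_size"]
        if not (_is_int(size) and size > 1):
            raise ValueError("no_repeat_ngram_size must be an integer greater than 1")
    if "temperature" in params:
        if not (_is_number(params["temperature"]) and params["temperature"] > 0):
            raise ValueError("temperature must be a positive float")
    for name in ("early_stopping", "do_sample"):
        if name in params and not isinstance(params[name], bool):
            raise ValueError(f"{name} must be boolean")
    if "top_p" in params:
        if not (_is_number(params["top_p"]) and 0 <= params["top_p"] <= 1):
            raise ValueError("top_p must be a float between 0 and 1")
    if "task" in params and params["task"] not in ("transcribe", "translate"):
        raise ValueError("task must be 'transcribe' or 'translate'")


def build_payload(audio_bytes: bytes, **params: Any) -> Dict[str, Any]:
    """Combine hex-encoded audio (assumed layout) with validated parameters."""
    validate_params(params)
    return {"audio_input": audio_bytes.hex(), **params}


# Hypothetical usage with a deployed predictor configured to send JSON:
# payload = build_payload(audio_bytes, language="english", task="transcribe", top_p=0.88)
# response = predictor.predict(payload)
```

Validating on the client keeps malformed requests from reaching the endpoint, where the resulting error is harder to interpret.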

Language translation

To showcase language translation using Whisper models, use the following audio file in French and translate it to English. The file must be sampled at 16 kHz (as required by the ASR models), so make sure to resample files if required and make sure your samples don’t exceed 30 seconds.

- Download sample_french1.wav from the SageMaker JumpStart public S3 location so it can be passed in the payload for translation by the Whisper model.

- Set the task parameter to translate and the language to French to force the Whisper model to perform speech translation.

- Use the predictor to predict the translation. If you receive a client error (error 413), check the payload size to the endpoint. Payloads for SageMaker invoke endpoint requests are limited to about 5 MB.

- The predictor returns the text translated to English from the French audio file.
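The translation steps can be sketched as a request body plus a size check that catches oversized payloads before they trigger error 413. This is a minimal sketch under stated assumptions: the audio_input key with hex-encoded audio and the lowercase language value are assumptions about the endpoint's input format, the 5 MB figure is the approximate limit quoted above, and translation_request_body is a hypothetical helper.

```python
import json

# Approximate request limit quoted in the text.
MAX_PAYLOAD_BYTES = 5 * 1024 * 1024


def translation_request_body(audio_bytes: bytes, source_language: str = "french") -> bytes:
    """Serialize a speech translation request and check it against the size limit."""
    payload = {
        "audio_input": audio_bytes.hex(),  # assumed layout; hex doubles the audio size
        "language": source_language,       # language spoken in the audio
        "task": "translate",               # translate instead of transcribe
    }
    body = json.dumps(payload).encode("utf-8")
    if len(body) > MAX_PAYLOAD_BYTES:
        raise ValueError(
            f"payload is {len(body)} bytes, over the ~5 MB limit; shorten or downsample the audio"
        )
    return body
```

As a rough size check under the same assumptions, 30 seconds of 16 kHz, 16-bit mono audio is 30 × 16,000 × 2 = 960,000 bytes, which becomes about 1.9 MB after hex encoding and stays under the limit.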

Clean up

After you’ve tested the endpoint, delete the SageMaker inference endpoint and delete the model to avoid incurring charges.
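A minimal cleanup sketch, assuming predictor is the object returned when the model was deployed with the SageMaker Python SDK. The wrapper name clean_up is hypothetical.

```python
def clean_up(predictor) -> None:
    """Delete the model and then the endpoint behind a SageMaker predictor."""
    predictor.delete_model()     # removes the SageMaker model resource
    predictor.delete_endpoint()  # stops the endpoint so it no longer incurs charges


# Hypothetical usage once testing is finished:
# clean_up(predictor)
```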

Conclusion

In this post, we showed you how to test and use OpenAI Whisper models to build interesting applications using Amazon SageMaker. Try out the foundation model in SageMaker today and let us know your feedback!

This guidance is for informational purposes only. You should still perform your own independent assessment and take measures to ensure that you comply with your own specific quality control practices and standards, and the local rules, laws, regulations, licenses and terms of use that apply to you, your content, and the third-party model referenced in this guidance. AWS has no control or authority over the third-party model referenced in this guidance and does not make any representations or warranties that the third-party model is secure, virus-free, operational, or compatible with your production environment and standards. AWS does not make any representations, warranties, or guarantees that any information in this guidance will result in a particular outcome or result.

About the authors

Hemant Singh is an Applied Scientist with experience in Amazon SageMaker JumpStart. He got his masters from Courant Institute of Mathematical Sciences and B.Tech from IIT Delhi. He has experience in working on a diverse range of machine learning problems within the domain of natural language processing, computer vision, and time series analysis.

Rachna Chadha is a Principal Solution Architect AI/ML in Strategic Accounts at AWS. Rachna is an optimist who believes that ethical and responsible use of AI can improve society in future and bring economical and social prosperity. In her spare time, Rachna likes spending time with her family, hiking and listening to music.

Dr. Ashish Khetan is a Senior Applied Scientist with Amazon SageMaker built-in algorithms and helps develop machine learning algorithms. He got his PhD from University of Illinois Urbana-Champaign. He is an active researcher in machine learning and statistical inference, and has published many papers in NeurIPS, ICML, ICLR, JMLR, ACL, and EMNLP conferences.

Author: Hemant Singh